Our Verdict

Pascal and the GTX 1080 deliver more performance and features, with greater efficiency.

For

- Fastest single GPU

- Impressive efficiency

- Useful new features

Against

- Founders Edition pricing

- Limited stock right now

There can be only one

Earlier this month, Nvidia officially revealed the name and a few core specs for their next generation GPU, the GTX 1080. They invited a bunch of press and gamers to the unveiling party, showed off the hardware, claimed performance would beat the Titan X by about 30 percent, and then told us the launch date of May 27 and the price of $599. Then they dropped the mic and walked off the stage. Or at least, that's how it could have gone down.

What really happened is that we had several hours over the next day to try out some demos and hardware, all running on the GTX 1080. We were also given a deep dive into the Pascal GP104 architecture at the heart of the graphics card, and we left for home with a shiny new GTX 1080 box tucked safely in our luggage. And then we waited a few days for Nvidia to ship us drivers, benchmarked a bunch of games, and prepared for today, the day where we can officially talk performance, architecture, and some other new features.

If you're not really concerned about what makes the GTX 1080 tick, feel free to skip down about 2000 words to the charts, where we'll show performance against the current crop of graphics cards. Here's a spoiler: the card is damn fast, and even at the Founders Edition price of $699, it's still extremely impressive. For those who want more information, we've previously discussed the initial details of the GTX 1080, some of the new features and software, and explained the Founders Edition. Here's the 'brief' summary.

Let's get technical, technical…

Transistors: 7.2 billion

Die size: 314 mm^2

Process: TSMC 16nm FinFET

CUDA cores: 2560

Texture units: 160

ROPs: 64

Memory capacity: 8GB

Memory bus: 256-bit

GDDR5X clock: 10000MHz

L2 cache: 2048KB

TDP: 180W

Texel fill-rate: 277.3 GT/s

Base clock: 1607MHz

Boost clock: 1733MHz

GFLOPS (boost): 8873

Bandwidth: 320GB/s
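
The derived figures in that list follow from the raw specs. Here's a quick sanity check, offered as a sketch rather than Nvidia's official math: it assumes the usual conventions of one texel per texture unit per clock and two FLOPs per CUDA core per clock (a fused multiply-add), and it treats the listed "10000MHz" GDDR5X clock as an effective transfer rate. The variable names are ours.

```python
# Spec values from the list above
cuda_cores = 2560
texture_units = 160
boost_clock_mhz = 1733
bus_width_bits = 256
memory_rate_mtps = 10000  # listed as "10000MHz"; assumed to be the effective rate in millions of transfers/s

# Assumption: one texel per texture unit per clock
texel_rate_gtps = texture_units * boost_clock_mhz / 1000

# Assumption: 2 FLOPs per core per clock (fused multiply-add)
gflops = cuda_cores * 2 * boost_clock_mhz / 1000

# Bytes moved per transfer across the bus, times transfers per second
bandwidth_gbps = bus_width_bits / 8 * memory_rate_mtps / 1000

print(f"Texel fill-rate: {texel_rate_gtps:.1f} GT/s")
print(f"FP32 throughput: {gflops:.0f} GFLOPS")
print(f"Bandwidth: {bandwidth_gbps:.0f} GB/s")
```

Under those assumptions the three results land on the 277.3 GT/s, 8873 GFLOPS, and 320GB/s figures quoted above.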

The GTX 1080 uses Nvidia's new Pascal GP104 architecture, which is not to be confused with the previously announced GP100 architecture—that's currently slated for HPC (high-performance computing) and artificial intelligence applications, and it's currently only available via pre-order of Nvidia's DGX-1 server, which comes with eight Tesla P100 graphics cards. The GP104 in contrast is a lot more like a die shrink and enhancement of Maxwell GM200 and GM204, the chips at the heart of the GTX Titan X and 980. And that's not a bad thing.

Where GP100 moves to 64 CUDA cores per SMM cluster (the smallest functional block in an Nvidia GPU that can be disabled), GP104 stays at Maxwell 2.0's 128 cores per SMM. GP104 also goes light on the FP64 functionality, running at 1/32 the FP32 rate, compared to GP100 running FP64 at half the rate of FP32. Finally, GP104 also dispenses with HBM2 memory, instead using the new Micron GDDR5X memory that ends up being a lot like a higher clocked version of GDDR5.

If you're wondering about these differences, the main goal appears to be reducing cost and improving efficiency. GP100 is a large chip, HBM2 is far more expensive than GDDR5/GDDR5X, and FP64 isn't something that's used or needed for gaming. The result is a chip that has 2560 FP32 CUDA cores, uses 7.2 billion transistors, and outperforms the previous king, the GTX Titan X, by a sizeable margin. Oh, and it also uses less power, at just 180W, while carrying an MSRP of $600. If that's still too much, the upcoming GTX 1070, slated to launch on June 10, should roughly match the Titan X performance with an MSRP of $380.

Let's quickly talk prices, since we're on the subject. Nvidia will work with their usual add-in board (AIB) partners, including Asus, EVGA, Gigabyte, MSI, PNY, and Zotac, to offer GTX 1080 and GTX 1070 graphics cards. However, Nvidia will also offer their traditional blower cooler design (with some updates), but instead of calling it the "reference card," these will now be sold as Founders Edition cards. Nvidia will continue to produce these cards as long as they're manufacturing the GP104, and they'll even sell the cards under their own brand. That would be a problem for the AIB partners, however, so Nvidia is "kindly" bumping up the price on the Founders Edition to $700 and $450. That keeps Nvidia out of direct competition with its partners, and it allows Nvidia to sell what is otherwise a high quality graphics card to users who prefer the blower design. Nvidia says their partners will produce a variety of cards, both at and above MSRP, with some even costing more than the Founders Edition. Hmmm….

The problem is, we're currently ten days before the official retail launch—the Order of 10 is everywhere, it seems—which means we have no clear view on actual availability and pricing. We might see some cards at MSRP come May 27, or we may see mostly/only Founders Edition cards at significantly higher prices. More likely than not, the vast majority of cards at launch are going to carry a hefty price premium, at least for a few weeks, because like we said earlier: the GTX 1080 is incredibly fast. Even at $700, it's a much better value than the previous generation GTX 980 Ti. It has more VRAM, it includes some new features, and it's simply a significant jump in performance. At $600, it's an incredible bargain. How was this accomplished?

It goes back to the process technology, which has finally moved from 28nm planar to TSMC's 16nm FinFET. Reducing the feature size by 33 percent (give or take) typically allows ASICs (Application Specific Integrated Circuits) to cram twice as many transistors into a given area, since the transistors are shrinking in X, Y, and Z dimensions. 16nm ends up being a larger jump than normal—though after five years of using 28nm, it's not really surprising—giving a 43 percent shrink. In theory, that means potentially three times as many transistors in the same area…or slightly more transistors in a much smaller area.
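
To put rough numbers on that, here's a small sketch. It assumes the idealized case where transistor density scales with the inverse square of the linear feature size, and it takes the "28nm" and "16nm" node names at face value, which real processes don't strictly honor. The function name is ours.

```python
def density_gain(linear_shrink):
    """Idealized density multiplier for a linear shrink (0.43 means features are 43 percent smaller)."""
    scale = 1.0 - linear_shrink
    return 1.0 / (scale * scale)

typical = density_gain(0.33)       # the "give or take" 33 percent step
node_shrink = 1.0 - 16 / 28        # 28nm -> 16nm, node names taken literally
pascal = density_gain(node_shrink)

print(f"33% shrink: {typical:.2f}x density")
print(f"28nm -> 16nm: {node_shrink:.0%} shrink, {pascal:.2f}x density")
```

A 33 percent step works out to a bit over 2x, and the 43 percent step to just over 3x, which is where the "twice" and "three times" figures come from. Actual chips rarely hit the idealized number.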

It's the latter path that Nvidia has chosen with GP104, using 7.2 billion transistors compared to GM204 at 5.2 billion, and GM200 at 8 billion. But where the GM204 required a 398 mm^2 die size and GM200 used 601 mm^2, GP104 fits into a relatively dainty 314 mm^2. That makes it less expensive to manufacture (not counting all the R&D costs), improves yields, and FinFET also helps to curb power requirements. It's a trifecta of improvements, and together it allows Nvidia to offer a large generational improvement in performance while reducing price and improving efficiency.
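
For a sense of how much denser GP104 really is, the transistor counts and die sizes quoted here can be turned into transistors per square millimeter. This is just arithmetic on the article's own numbers; the dictionary layout is ours.

```python
# (transistors in billions, die area in mm^2), as quoted in the text
chips = {
    "GM204": (5.2, 398),
    "GM200": (8.0, 601),
    "GP104": (7.2, 314),
}

# Millions of transistors per mm^2
density = {name: t * 1000 / area for name, (t, area) in chips.items()}

for name, d in density.items():
    print(f"{name}: {d:.1f} M transistors/mm^2")

ratio = density["GP104"] / density["GM204"]
print(f"GP104 vs GM204: {ratio:.2f}x")
```

Both Maxwell chips sit at roughly 13 million transistors per mm^2, while GP104 is near 23 million. That's a density gain of about 1.75x, well short of the theoretical 3x, which is typical since not everything on a die shrinks equally.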

Which also means you can basically mark your calendar for next spring, when Nvidia will release a larger consumer Pascal chip, probably with around 50 percent more transistors and performance. My guess? It won't be a straight up consumerized variant of GP100, but instead we'll see a GP102 with more FP32 cores, 1/32 FP64 performance, and a 384-bit GDDR5X interface. Or we'll see "Titan P" using GP100, but the cost of HBM2 and FP64 make that questionable. I'd also bet this fall will bring about the mobile launch of the GTX 1080M and 1070M, which will both be partially disabled GP104 cores, saving "GTX 1080 for notebook" for fall 2017. But I'm getting ahead of myself….

New hardware features

All of the above changes help make Pascal faster than Maxwell, but Nvidia has also updated many hardware features. We're not going to go into all the details right now, but here's the short rundown of changes.

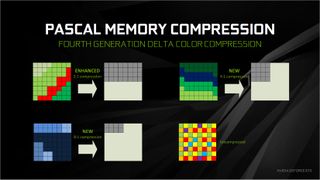

Better memory compression

Pascal has enhanced memory compression, which allows it to make better use of the available bandwidth. With Maxwell 2.0, Nvidia introduced an improved delta color compression engine, and Pascal takes that a step further. 2:1 compression is more effective, there's a new 4:1 delta color compression mode, and there's also a new 8:1 delta color compression. In practice, Nvidia says they're able to improve compression by about 20% over Maxwell, so with 320GB/s compared to the 980's 224GB/s, the effective increase is around 70% (instead of the 43% you'd see without the compression improvements).
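
The 43 and 70 percent figures can be reproduced with a couple of lines. This sketch models Nvidia's "about 20%" compression claim as a flat multiplier on bandwidth, which is a simplification: real compression gains depend heavily on what's being rendered.

```python
gtx1080_bw = 320.0        # GB/s
gtx980_bw = 224.0         # GB/s
compression_gain = 1.20   # Nvidia's "about 20%" claim, modeled as a simple multiplier

raw_increase = gtx1080_bw / gtx980_bw - 1
effective_increase = gtx1080_bw * compression_gain / gtx980_bw - 1

print(f"Raw bandwidth increase: {raw_increase:.0%}")
print(f"Effective increase with compression: {effective_increase:.0%}")
```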

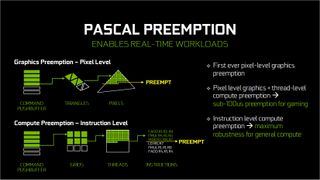

GPU preemption and dynamic load balancing

As GPUs have become more programmable and more powerful, the workloads they run have changed. Early programmable shaders had some serious limitations, but with DX11/DX12 and other APIs, whole new realms of potential are opened up. The problem is that GPUs have generally lacked certain abilities that still limit what can be done. One of those is workload preemption, and with Pascal Nvidia brings their hardware up to the level of CPUs.

Maxwell had preemption at the command level, and now Pascal implements preemption at the pixel level for graphics, thread level for DX12, and instruction level for CUDA programs. This is basically as far as you need to go with preemption, as you can stop the rendering process at a very granular level. When utilized properly, preemption requires less than 100us and allows effective scheduling of time sensitive operations—asynchronous time warp in VR being a prime example.

Pascal has also added dynamic load balancing (for when a GPU is running graphics plus compute), where Maxwell used static partitioning. The goal is to allow a GPU to run both graphics and compute, since Nvidia GPUs can only run graphics commands or compute commands in a specific block. With Maxwell, the developer had to guess the workload split; now with Pascal, when either workload finishes, the rest of the GPU can pick up the remaining work and complete it more quickly.
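
A toy model shows why that matters. To be clear, this is not how Nvidia's scheduler works internally: it's a sketch that assumes work divides perfectly across identical units, ignores any switching overhead, and uses made-up work amounts and function names.

```python
def static_time(graphics_work, compute_work, units, graphics_share):
    """Fixed split: each partition only runs its own workload, so the slower side sets the finish time."""
    g_units = units * graphics_share
    c_units = units - g_units
    return max(graphics_work / g_units, compute_work / c_units)

def dynamic_time(graphics_work, compute_work, units, graphics_share):
    """Same starting split, but units freed by the first workload to finish help with what remains."""
    g_units = units * graphics_share
    c_units = units - g_units
    t_first = min(graphics_work / g_units, compute_work / c_units)
    remaining = (graphics_work - t_first * g_units) + (compute_work - t_first * c_units)
    return t_first + remaining / units

# Hypothetical frame: 80 units of graphics work, 20 of compute, 20 execution units,
# and a developer who guessed a 50/50 split.
print(static_time(80, 20, 20, 0.5))   # 8.0
print(dynamic_time(80, 20, 20, 0.5))  # 5.0
```

With a bad guess, the static split leaves half the GPU idle once the compute work finishes. In this idealized model the dynamic version always matches the best possible split, so the developer's guess stops mattering.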

AMD has made a lot of noise about GCN's asynchronous compute capabilities. What Nvidia is doing with preemption and dynamic load balancing isn't the same as asynchronous shaders, but it can be used to accomplish the same thing. We'll have to see how this plays out in developer use, but the potential is here. If you're wondering, AMD's async compute relies on the GCN architecture's ability to mix compute and graphics commands without the need to partition workloads. Preemption is still needed for certain tasks, however, like changing from one application workload to another.

Simultaneous multi-projection

Simultaneous multi-projection (or SMP, as Nvidia cheekily calls it) is one of the potentially biggest hardware updates with Pascal. It allows much of the geometry setup work to be done a single time, but with multiple viewports (projections) all done in a single pass. This becomes particularly useful with stereoscopic rendering, as well as multiple displays—the former being particularly important for VR. SMP supports up to 16 pre-configured projections, sharing up to two projection centers, one for each eye in a stereoscopic setup.

For VR, Nvidia can use their SMP technology to enable developers to render both projections at the same time, which is something no previous architecture could do. Taking things a step further, SMP allows a different take on the multi-res shading Nvidia created for Maxwell 2.0—multi-res shading leveraged multi-viewport technology to render different viewports at lower resolutions. The net result is that in VR scenarios with titles that leverage SMP, GTX 1080 can be up to twice as fast as a Titan X (or 980 Ti), and it uses less power at the same time.

HDR output

High dynamic range (HDR) displays are the new hotness for high-end visuals, and Pascal includes hardware support for 12-bit color, BT.2020 wide color gamut, SMPTE 2084, and HDMI 2.0b/12b for 4K HDR. This is all technology previously available with Maxwell. Pascal also adds new features: 4K@60Hz 10/12b HEVC decode, 4K@60Hz 10b HEVC encode, and DisplayPort 1.4 ready HDR metadata transport. So if you happen to own one of the amazing HDR televisions (which is currently the only place you'll find HDR displays), Pascal is ready.

Changes to SLI

One final hardware aspect we want to quickly touch on concerns SLI. With Pascal, Nvidia is stepping away from 3-way and 4-way SLI configurations and explicitly recommending against them, instead stating that 2-way SLI is the best performance alternative with the fewest difficulties. There are many reasons for the change, but the net result is that the two SLI connectors on top of GTX 1080/1070 cards are now being used to increase the bandwidth for SLI mode, and you'll need new "high bandwidth" capable SLI bridges; the flimsy old ribbon cables will still work, but only in a "slower" mode.

The new SLI bridges are rigid, and they come in either two, three, or four slot spacing—which is a bit of a downer if you ever switch motherboards and end up needing different spacing, as you'd need to buy a new bridge (likely $20-$30). The bridges now run at 650MHz (compared to 400MHz on the old bridges), which explains the more stringent requirements, and they'll help with 4K, 5K, and surround gaming solutions.

But 3-way and 4-way SLI aren't totally dead—they're just not recommended. If you buy three GTX 1080 cards and want to run them in 3-way SLI, you can do so—or rather, you'll be able to do so. You need to unlock 3-way/4-way SLI first, however, by generating a special "key" for each GPU, using a tool and website Nvidia will provide at the retail launch on May 27. So for the 0.001% of gamers this affects, it will be a bit more difficult, and scaling in many games will still be questionable at best.

Get on with it!

We've spent a lot of time collecting performance results, using an extensive test suite of games, including some recent releases along with some oldies but goodies. Our test system is the same i7-5930K 4.2GHz platform we've been using for over a year now, and we've gathered results from all the high-end graphics cards currently available to bring you this:

CPU: Intel Core i7-5930K @ 4.2GHz

Mobo: Gigabyte GA-X99-UD4

RAM: G.Skill Ripjaws 16GB DDR4-2666

Storage: Samsung 850 EVO 2TB

PSU: EVGA SuperNOVA 1300 G2

CPU cooler: Cooler Master Nepton 280L

Case: Cooler Master CM Storm Trooper

OS: Windows 10 Pro 64-bit

Drivers: AMD Crimson 16.5.2 Hotfix, Nvidia 365.19 / 368.13

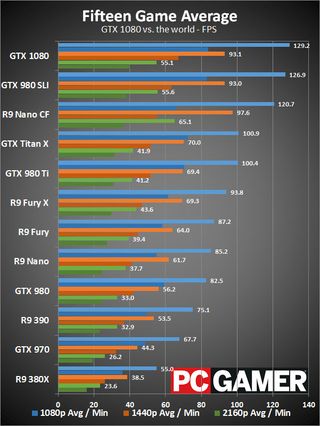

This is the high level overview, using the average performance across our entire test suite here. Individual games may favor one card or another, but what's it like in practice to use the GTX 1080? When Nvidia said it was faster than a Titan X—and by extension, every other single GPU configuration—they weren't blowing smoke. The GTX 1080 beats the reference GTX 980 Ti by over 35 percent…at our 1080p Ultra settings. Crank up the detail to 1440p Ultra or 4K High/Ultra, and the margin grows to 40 percent. The Titan X closes the gap, but only barely, losing by a considerable 30-35 percent.

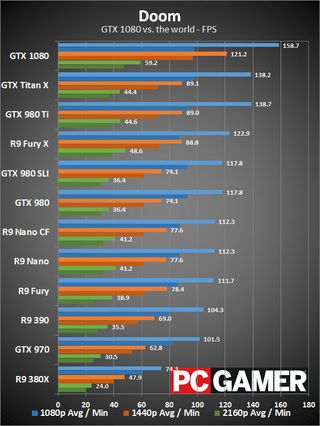

Ah, but what about that GTX 980 SLI comparison: is the single GTX 1080 faster than two GTX 980 cards? Remember, a pair of these cards cost $1000 as recently as late March, and it's only with the pending release of Pascal that prices have finally fallen below $500 per card (and they continue to drop). And the verdict is…well, it's close enough to call it a draw. Which is another way of saying there are individual games where 980 SLI beats a single 1080 (usually not by much), and others where it loses, sometimes by a large margin. Never mind those cases (like Doom right now, along with most DX12 titles) where the second GPU isn't currently doing anything.

A similar point of comparison between the 1080 and R9 Nano CF shows that overall, two high-end GPUs from last year can still beat Nvidia's latest and greatest…except when CrossFire doesn't work very well, in which case the second GPU is effectively useless. R9 Nano CrossFire also tends to do worse overall when it comes to minimum frame rates, even though average frame rates are typically higher than GTX 980 SLI.

If you're already running a high-end SLI or CrossFire setup, a single GTX 1080 may not be enough to cause you to jump ship, but for users looking to get dual-GPU levels of performance without the hassle, this is a seriously tempting offer. Or if you're the sort of person that always has to have the best graphics setup possible, well, now you can start thinking about buying a pair of 1080 cards.

If you're interested in additional graphs showing how performance stacks up between today's high-end GPUs and the GTX 1080, we've included more charts at the end, but the above chart showing average framerates across a collection of games should tell you everything you need to know. The GTX Titan X and 980 Ti have been the kings of the gaming world for the past year, but Pascal is better in every way that matters. Not that you need to rush out and upgrade if you're already running a high-end GPU, but we wouldn't blame you for lusting after the 1080.

Pedal to the metal

And then there's overclocking. Nvidia GPUs from the Maxwell generation have typically hit 20-25 percent overclocks with relative ease. Pascal is the new hotness, but that doesn't mean Nvidia has cranked the dial to eleven. Nvidia has left plenty of room for tweaking, and in fact they've opened up the full range of voltage controls to overclocking utilities.

Using a new version of EVGA's Precision X16 software, you can let it run for about 25-30 minutes (at the default settings) and it will attempt to tune clocks to your particular card and GPU. If you add better cooling, it should pick up on that as well. Unfortunately, it's not quite as robust as we'd like in this early preview; any crashes during the test basically end up with you restarting the whole process, but EVGA and Nvidia are working to fine-tune the software.

For now, we ended up getting the Precision X16 OC Scanner to work by limiting the maximum overclock to 200MHz. It applied a static +200MHz across all voltages, which sort of defeats the point of tuning each voltage step in the first place. Again, this is beta software, and the drivers are still early on GTX 1080 as well, so things will hopefully improve by the time the retail launch comes around in 10 days. Also, the scanning doesn't do any tweaking of GDDR5X clocks, so we had to search for a good clock speed on our own.

With manual tuning, we settled on a +200MHz core overclock, +725MHz on the GDDR5X (11.45GHz), with the overvoltage set to "100%" (whatever that means—it's not a doubling of the voltage, just to be clear). In practice, looking at actual GPU Boost 3.0 clock speeds running stock vs. overclocked, we saw 2.0-2.1GHz on the core (vs. 1700-1733MHz), so we got a healthy 15 percent overclock on the core and memory. Results may vary, but our sample card looks to be a bit less impressive than other cards, so 2.0GHz and more looks attainable.
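
For anyone who wants to check the percentages, here's the arithmetic on the clocks quoted above. The stock and overclocked core speeds are observed ranges rather than fixed values, so the sketch reports the most conservative and most generous pairings; the variable names are ours.

```python
stock_mem_mhz = 10000
oc_mem_mhz = 11450               # the 11.45GHz figure above
stock_core_mhz = (1700, 1733)    # observed stock boost range
oc_core_mhz = (2000, 2100)       # observed overclocked range

mem_gain = oc_mem_mhz / stock_mem_mhz - 1
core_gain_low = oc_core_mhz[0] / stock_core_mhz[1] - 1    # most conservative pairing
core_gain_high = oc_core_mhz[1] / stock_core_mhz[0] - 1   # most generous pairing

print(f"Memory: +{mem_gain:.1%}")
print(f"Core: +{core_gain_low:.1%} to +{core_gain_high:.1%}")
```

Memory comes out at about 14.5 percent, and the core somewhere between roughly 15 and 24 percent depending on which ends of the ranges you compare, so "15 percent" is the cautious reading.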

This is what performance looks like, running stock vs. overclocked:

Performance improves on average by 10-15 percent, except at 1080p where we start to hit CPU limits in a few titles. We'll likely see factory overclocked cards with aftermarket coolers at some point, and some of those will almost certainly exceed what we're able to achieve with the Founders Edition. Even better, many of the factory overclocked cards will likely cost less than the Founders Edition. But if the 30 percent jump in performance over Titan X wasn't enough to get you excited, perhaps tacking on another 15 percent with overclocking will do the trick.

Hail to the king, baby!

The rumblings from team green have indicated something big was coming for months now. Launching Pascal on professional devices in the form of GP100 effectively threw down the gauntlet: catch us if you can! GP104 is a different beast, but no less potent in its own right. It's about half the die size of the GM200 used in Titan X, uses 800 million fewer transistors, consumes 28% less power, and still delivers 30-40% better performance—and potentially twice the performance in VR, if the developer chooses to use Pascal's simultaneous multi-projection feature.
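
Those comparisons are easy to reproduce. One caveat: the Titan X's 250W TDP isn't stated in this article, so it's an assumption here (it's the figure implied by "28% less power"). TDP is also a rating, not measured power draw, so treat the performance-per-watt result as a ballpark.

```python
gp104 = {"die_mm2": 314, "transistors_b": 7.2, "tdp_w": 180}
gm200 = {"die_mm2": 601, "transistors_b": 8.0, "tdp_w": 250}  # 250W is assumed, not from the text

die_ratio = gp104["die_mm2"] / gm200["die_mm2"]
transistor_delta_b = gm200["transistors_b"] - gp104["transistors_b"]
power_saving = 1 - gp104["tdp_w"] / gm200["tdp_w"]

print(f"Die size: {die_ratio:.0%} of GM200")
print(f"Transistors: {transistor_delta_b:.1f} billion fewer")
print(f"Power: {power_saving:.0%} lower TDP")

# Performance per watt, using the 30-40 percent performance range from the text
for perf_gain in (1.30, 1.40):
    perf_per_watt = perf_gain / (gp104["tdp_w"] / gm200["tdp_w"])
    print(f"{perf_gain - 1:.0%} faster -> {perf_per_watt:.2f}x performance per watt")
```

On paper, that puts GP104 at somewhere around 1.8x to 1.9x the performance per watt of GM200.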

If you're a gamer looking for something that will handle 4K gaming at nearly maxed out quality, the GTX 1080 is the card to get. Or if you want a GPU that has at least a reasonable chance of making use of a 1440p 144Hz G-Sync display, or a curved ultrawide 3440x1440 100Hz display, again: this is the card to get. It delivers everything Nvidia promised, and there's likely room for further improvements via driver updates—this is version 1.0 of the Pascal drivers, after all.

There's only one small problem: you can't buy one, at least not yet. You'll have to wait for the official retail launch on May 27, which sort of stinks. And then more likely than not, you'll be looking at Founders Edition versions of the GTX 1080, which will set you back $700—and probably more, because even at those prices, they're likely to go out of stock for a few weeks. If you're in no rush, prices and availability will no doubt settle down by late June or early July, at which point the GTX 1080 is a killer card for "only" $600.

What about the GTX 1070? It's looking like a larger gap than the GTX 970/980, if only slightly, but you do get 8GB of full-speed VRAM this round. We're still waiting for actual cards and the full Monty on specifications, but judging by the GTX 1080, the 1070 should be a good "affordable" alternative to the GTX Titan X and 980 Ti. We'll know more in the next few weeks, but if the GTX 1080 is too rich for your blood, that's the one to watch.

So kick back and relax while we wait to see what 1070 has to offer—and whether AMD's Polaris 10 can steal any of Nvidia's thunder, as AMD has repeatedly stated those chips will launch in June. Nvidia has dropped the mic on the stage, but the show's not over quite yet. All indications are that Polaris 10 won't go up against the GTX 1080, but GTX 1070 may not be out of reach. For now, GTX 1080 is the new heavyweight champion of the world, and will likely go uncontested until AMD brings out Vega...or until Nvidia pulls out "Big Pascal" for a new Titan card or a "1080 Ti."

And make no mistake: as fast as GP104 is, Nvidia left plenty of room for future products to eclipse it. 30-40 percent gains are great to see, but those hoping for a doubling of performance will have to skip generations of hardware to get it.

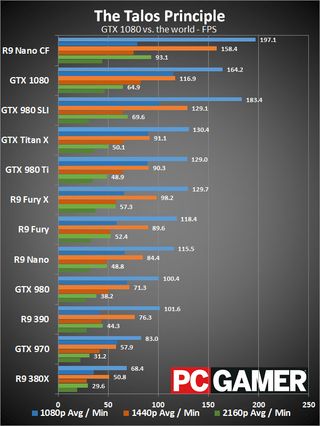

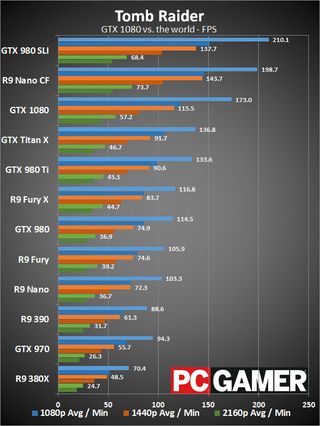

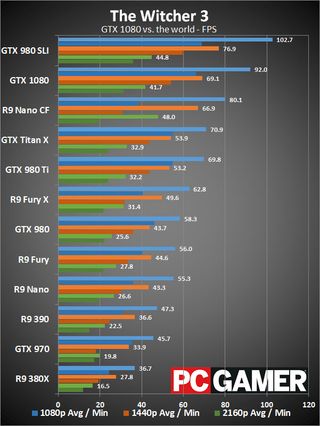

Additional gaming benchmarks

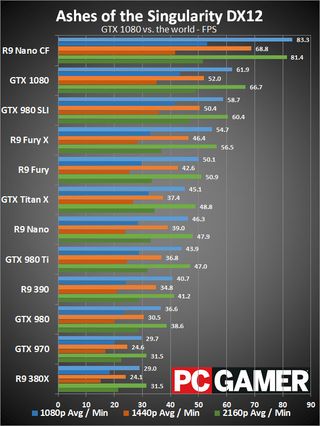

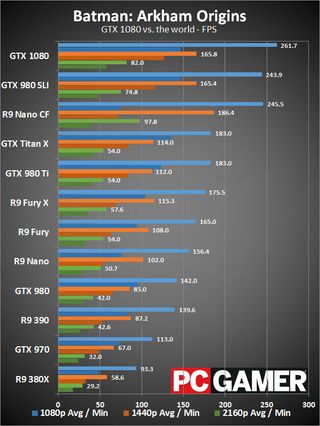

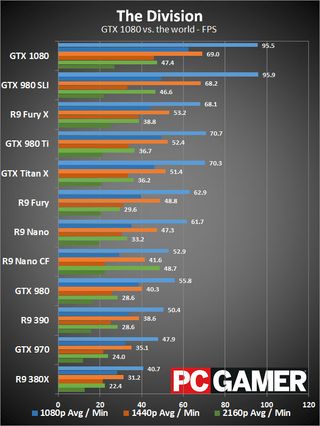

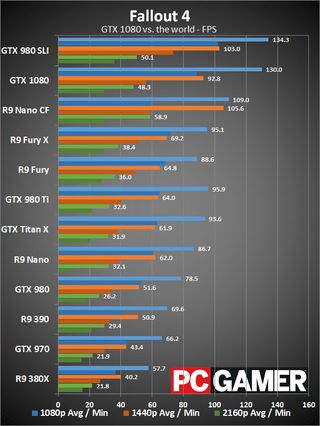

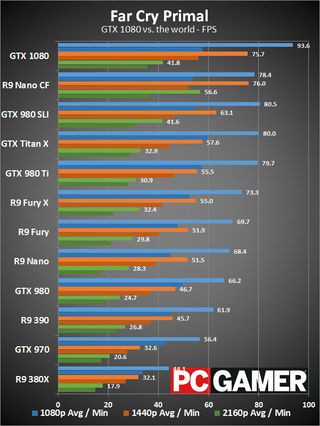

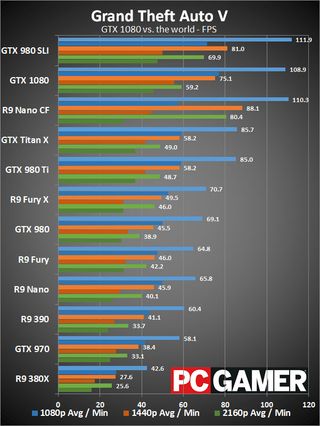

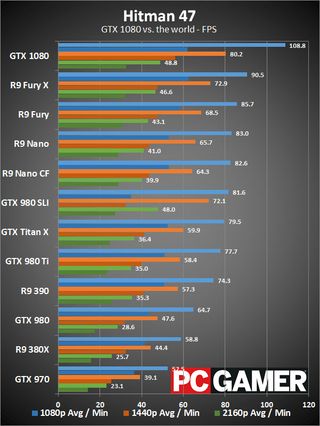

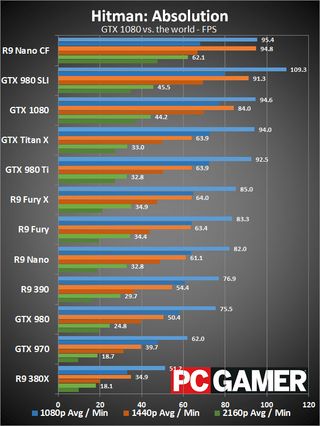

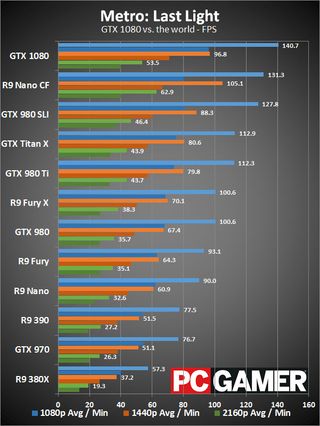

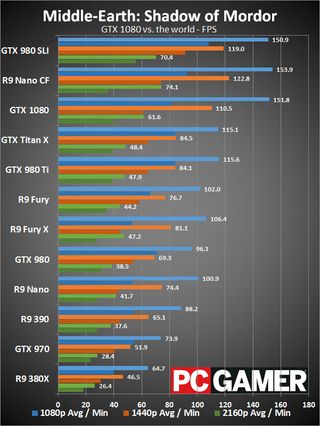

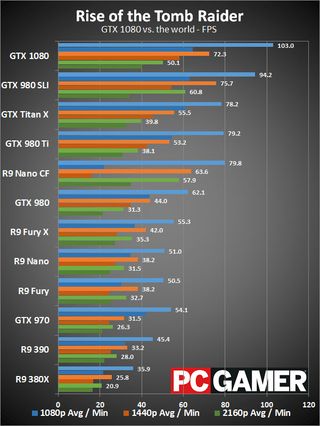

Below are the individual results for the fifteen games we tested. It's been a long week of benchmarking, so if you notice any scores that seem wonky, please let us know and we can retest to verify the results. All of the games were tested at Ultra/Very High settings at 1080p and 1440p, while many of the more demanding titles (specifically: Ashes of the Singularity, The Division, GTAV, Hitman 47, and Rise of the Tomb Raider) were run at High settings for 4K.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.