How we test PC gaming hardware

Inside the silicon sausage factory.

We put a whole heap of different technological goodies through their paces here at PC Gamer, and we work hard to make sure that all our testing is reliable, repeatable, trustworthy, and ultimately fair.

It's not just about how fast a given component might be, or what fancy RGB lighting has been arrayed around a product. We're PC gamers at heart, and we need to know how it's going to work in-game and how it's going to work for you, our readers. Value is always a key part of our testing methodology; whether it's the latest graphics card, some shiny new streaming mic, or a hyperfast gaming monitor, tech needs to make sense at the checkout as well as on your desktop.

That doesn't mean we're only interested in the cheap stuff, though. The best PC gaming tech can be expensive, but if it does something nothing else can, then it can still be great value. And you need to know that when you're spending the sort of money today's PC hardware costs, you're making the right calls.

With well over fifty years of combined hardware journalistic experience in the core writers alone, your PC Gamer hardware team is dedicated to making sure we cover everything that matters to you and to our hobby as a whole.

What does PC Gamer review?

With the sheer volume of hardware releases every month it's not possible for us to test everything out there, which means we have to be selective about the tech that we get into the office for testing.

We'll always test the latest graphics cards, with the key releases covered on day one, though we won't necessarily pick every single processor from AMD and Intel to review. The flagship products, and what we see as the CPUs most relevant to gamers, will get our attention. We'll also keep an eye on the post-launch buzz and affordability down the line. If a chip suddenly becomes more affordable and is therefore garnering more interest, we'll be on hand to see whether it's worth your money.

We'll also check out new gaming laptops with the latest mobile technology inside them, but again we cannot review every SKU on the shelves. We're looking for something new, something interesting to differentiate them, whether that's a new GPU, new mobile chip architecture, or screen configuration.

That same ethos goes for what SSDs, gaming monitors, and peripherals we test. Looking at yet another Cherry MX Red mechanical keyboard doesn't interest us unless it's offering something new. That could be something as simple as a new, lower price point, or a new feature or technology.

The breakneck pace of PC innovation means prices and the competitive landscape change rapidly, so we'll occasionally update tech reviews, and that may sometimes mean we change the score. Our aim is to be able to offer you the most relevant, up-to-date hardware buying advice no matter when you read a review.

We're not talking about updating a five-year-old monitor review every time Amazon lops three percent off the sticker price, but when a graphics card, SSD, or processor is still relevant, or maybe suddenly more so, that's where you might see a change.

These are the most important, but also the most price- and performance-sensitive, products in the PC ecosystem. They are also the products that we will potentially be recommending to you years after their initial launch.

How we score hardware

PC Gamer uses a 100-point scoring system, expressed as a percentage. The descriptions and examples here are meant to clarify what those scores most often mean to us. Scores are a convenient summation of the reviewer's opinion, but it's worth underlining that they're not the review itself.

00-09% Utterly broken or offensively bad; absolutely no value.

10-19% We might be able to find one nice thing to say about it, but it’s still not worth anyone’s time or money.

20-29% Completely falls short of its goals. Very few redeeming qualities.

30-39% An entirely clumsy or derivative effort.

40-49% Flawed and disappointing.

50-59% Mediocre. Other hardware probably does it better, or its unique qualities aren’t executed especially well.

60-69% There’s something here to like, but it can only be recommended with major caveats.

70-79% A well-made bit of tech that’s definitely worth a look.

80-89% A great piece of hardware that deserves a place in your PC setup.

90-94% An outstanding product worthy of any gamer's rig.

95-98% Absolutely brilliant. This is hardware that delivers unprecedented performance or features we've never seen before.

99-100% Advances the human species.

The Editor's Choice Award is given in addition to the score, at the discretion of the PC Gamer staff, and represents exceptional quality or innovation.
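For anyone who wants the score bands above in a form they can query, here is a minimal sketch of a lookup. The band boundaries come straight from the list; the descriptions are shortened, and the names `SCORE_BANDS` and `describe_score` are purely illustrative, not anything PC Gamer uses internally.

```python
# Score bands from the list above: (lowest score, highest score, short description).
# Descriptions are abbreviated versions of the published wording.
SCORE_BANDS = [
    (0, 9, "Utterly broken or offensively bad"),
    (10, 19, "Not worth anyone's time or money"),
    (20, 29, "Completely falls short of its goals"),
    (30, 39, "An entirely clumsy or derivative effort"),
    (40, 49, "Flawed and disappointing"),
    (50, 59, "Mediocre"),
    (60, 69, "Recommended only with major caveats"),
    (70, 79, "A well-made bit of tech that's definitely worth a look"),
    (80, 89, "A great piece of hardware"),
    (90, 94, "An outstanding product"),
    (95, 98, "Absolutely brilliant"),
    (99, 100, "Advances the human species"),
]


def describe_score(score: int) -> str:
    """Return the short description for a whole-number percentage score."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    for low, high, description in SCORE_BANDS:
        if low <= score <= high:
            return description
    # Unreachable for integers, because the bands cover 0-100 without gaps.
    raise ValueError("score does not fall into any band")


print(describe_score(84))  # prints "A great piece of hardware"
```

As the section says, the score is only a summary of the reviewer's opinion, so a lookup like this is a reading aid rather than a substitute for the review.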

How we test graphics cards

CPU: Intel Core i9 12900K

Motherboard: Asus ROG Z690 Maximus Hero

Cooler: Corsair H100i RGB

RAM: 32GB G.Skill Trident Z5 RGB DDR5-5600

Storage: 1TB WD Black SN850, 4TB Sabrent Rocket 4Q

PSU: Seasonic Prime TX 1600W

OS: Windows 11 22H2

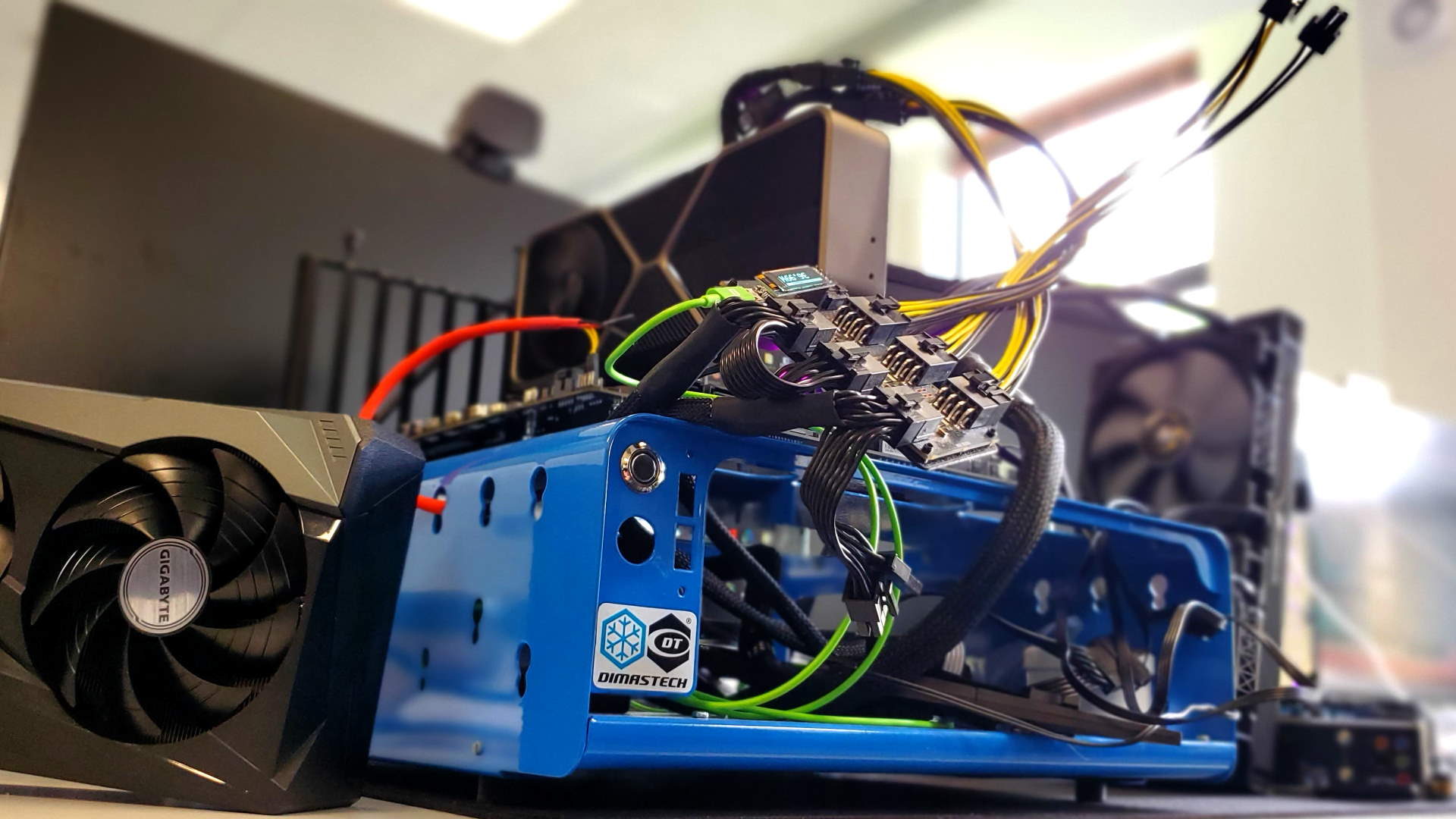

Chassis: DimasTech Mini V2

Monitor: Dough Spectrum ES07D03

We have a static PC Gamer test rig that only gets updated at the dawn of a whole new generation of gaming hardware. We use this to test all our graphics cards to maintain the relative benchmark numbers we use to measure comparative performance.

We use a mix of games and synthetic benchmarks, at a variety of resolutions, to see how the cards perform with the current titles available. This selection will generally change at the same time as we update the hardware inside the test rig.

Raw frame rates and index scores aren't the only measure of a GPU, however, so we use the Nvidia PCAT hardware and FrameView software to measure temperature, power draw, and performance per watt (at both 4K and 1080p) to get a holistic view of a graphics card's performance.
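Performance per watt is simply the average frame rate divided by the average power draw over the same run. The sketch below shows the arithmetic only; the numbers are made-up placeholders rather than real results, and the function name is our own invention. It doesn't read PCAT or FrameView output directly.

```python
def performance_per_watt(avg_fps: float, avg_power_watts: float) -> float:
    """Average frames per second delivered for each watt the card draws."""
    if avg_power_watts <= 0:
        raise ValueError("average power draw must be positive")
    return avg_fps / avg_power_watts


# Hypothetical (average fps, average watts) pairs for one card at two resolutions.
sample_runs = {
    "4K": (60.0, 300.0),
    "1080p": (150.0, 250.0),
}

for resolution, (fps, watts) in sample_runs.items():
    efficiency = performance_per_watt(fps, watts)
    print(f"{resolution}: {efficiency:.2f} fps per watt")
```

Comparing the figure at both resolutions matters because a card that isn't fully loaded at 1080p may draw less power, which can flatter or hurt its efficiency relative to 4K.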

We will also push the GPUs themselves to see if there is any mileage in overclocking the silicon on a day-to-day basis. We're not looking to sit at the top of HWBot with a GPU frequency that lasts a picosecond before the system crashes; we're looking to see whether there is an achievable overclock that will offer a genuine bump in performance.

How we test CPUs

We have static systems designated for testing AMD and Intel CPUs, in order to ensure that our test rig is able to deliver comparative numbers for measuring processor performance against the competition. That includes using the same GPU across both when we're comparing numbers.

- PC Gamer 12th Gen test rig: Asus ROG Maximus Z690 Hero, Corsair Dominator @ 5,200MHz (effective), Nvidia GeForce RTX 3080, 1TB WD Black SN850 PCIe 4.0, Asus ROG Ryujin II 360, NZXT 850W, DimasTech Mini V2, Windows 11

- PC Gamer 11th Gen test rig: MSI MPG Z490 Carbon WiFi, Corsair Vengeance Pro RGB @ 3,600MHz (effective), Nvidia GeForce RTX 3080, 1TB WD Black SN850 PCIe 4.0, Asus ROG Ryujin II 360, NZXT 850W, DimasTech Mini V2, Windows 11

- PC Gamer AMD (AM4) test rig: Gigabyte X570 Aorus Master, Thermaltake DDR4 @ 3,600MHz, Zadak Spark AIO, 2TB Sabrent Rocket PCIe 4.0, Corsair 850W, Windows 11

As we're PC gamers, we use games, and the 3DMark Time Spy synthetic benchmark, to measure relative gaming performance. Our game testing is carried out at 1080p to ensure that our relative frame rate results aren't coloured by the performance of the graphics card. At 1080p, games won't be GPU-bound on the high-end silicon we use for CPU testing, so the performance delta between CPUs will be mostly down to the processors and their supporting platform, rather than the graphics card.
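One common way to express that delta is to normalise each chip's average frame rate against a baseline CPU tested with the same GPU and settings. The following is a rough sketch of that idea, not a description of our actual tooling; the CPU names and frame rates are placeholders.

```python
def relative_performance(avg_fps: dict[str, float], baseline: str) -> dict[str, float]:
    """Express each CPU's average frame rate as a percentage of the baseline CPU's."""
    base = avg_fps[baseline]
    if base <= 0:
        raise ValueError("baseline frame rate must be positive")
    return {name: round(fps / base * 100, 1) for name, fps in avg_fps.items()}


# Hypothetical 1080p averages from the same game, GPU, and settings.
results = {"CPU A": 200.0, "CPU B": 230.0}

print(relative_performance(results, baseline="CPU A"))
# prints {'CPU A': 100.0, 'CPU B': 115.0}
```

Here CPU B comes out 15% ahead of CPU A. The comparison only holds because the graphics card isn't the limiting factor at this resolution.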

We also use synthetic CPU productivity tests to measure rendering and encoding performance, as well as the memory bandwidth on offer, while the PCMark 10 test provides an overall system usage index score.

Power consumption and temperatures are also important, so we use the HWInfo software to measure those numbers during peak and idle usage of the CPU.

As with GPUs, we will also indulge in some light overclocking to get a measure of how much further it might be possible to push the hardware in a home setup. We're not going to go deep with liquid nitrogen; we're just aiming to see if there's a level we'd be comfortable running the hardware at over an extended period of time, and whether that really makes a difference to performance.

How we test PCs and laptops

Full systems get a mix of our CPU and GPU testing methodologies, because they contain both. That means we'll test games at a level which highlights the benefits of their processors, as well as at a level where the graphics components can really stretch their legs.

But we will also test memory performance, storage speed (in terms of synthetic throughput numbers as well as gaming load times), power draw, and temperatures.

When it comes to laptops we will also use the Lagom LCD test screens, and our own experience, to measure the worth of the screens attached to them. Battery life is a key part of the mobile world; how long a notebook lasts while watching a film or tapping at the keyboard is one thing, but how long it will last playing an intensive PC game is what really matters to us. We use the PCMark 10 Gaming Battery Life Test benchmark to get a bead on how long a laptop will last playing a modern title.

Then there's the thoroughly experiential. We'll use systems as we would our own devices, live with them for a while to see how we get on with them on a day-by-day basis as well as how their individual components perform. Me, I always like to write my reviews on the laptop I'm talking about—I always find that's the best way to get a bead on standard usage.

How we test SSDs

It's important to use a mix of real world and synthetic benchmarks to get a bead on the measurable performance of storage drives, whether that's an internal or external SSD. We also use different software to measure different levels of performance. For example, we use both the ATTO and AS SSD benchmarks, for peak throughput as well as performance with incompressible data. We also use CrystalDiskMark 7.0.0 to ensure our numbers are accurate.

The PCMark 10 Storage benchmark also gives an index score, as well as bandwidth and access time figures.

Finally we measure the time it takes to copy an entire 30GB folder of mixed file types, representative of a small Steam game folder, and the game load times benchmark of Final Fantasy XIV.
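A folder copy test like this can be approximated at home with a few lines of Python. This is a minimal sketch using only the standard library, not the exact procedure we follow: the function name is hypothetical, and a real run would want a cold file cache and a destination on the drive under test.

```python
import shutil
import tempfile
import time
from pathlib import Path


def time_folder_copy(source: Path, destination: Path) -> float:
    """Copy a folder tree and return the elapsed time in seconds.

    The destination must not already exist (shutil.copytree requires this by default).
    """
    start = time.perf_counter()
    shutil.copytree(source, destination)
    return time.perf_counter() - start


if __name__ == "__main__":
    # Tiny stand-in for the 30GB mixed-file folder, so the demo runs anywhere.
    with tempfile.TemporaryDirectory() as tmp:
        source = Path(tmp) / "game_folder"
        source.mkdir()
        (source / "data.bin").write_bytes(b"\0" * 1024 * 1024)
        (source / "readme.txt").write_text("placeholder file")

        seconds = time_folder_copy(source, Path(tmp) / "copy")
        print(f"Copied in {seconds:.3f} seconds")
```

Results from a quick script like this are sensitive to operating system caching and background activity, so repeated runs and a freshly rebooted machine give more trustworthy numbers.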

How we test monitors

The quality of the panel, and the technology behind it (sometimes literally in terms of backlighting), is the key factor when it comes to testing gaming monitors. And while the Lagom LCD screen testers help us measure different things, such as black levels, white saturation, and contrast, leaning on our decades of testing experience is also vital when it comes to how a screen feels to use.

And that means playing games. It's a tough job, but someone's got to do it.

We'll always start testing screens with the default factory settings, and test different features within the settings to measure the likes of potential blurring and ghosting, whether inverse or not.

What's around the panel is also important, but is a more subjective thing. Build quality, style, and feature sets are still things that make one monitor more valued than another, even if they are both using the exact same panel.

How we test peripherals

With most peripherals it's always going to be about the subjective, personal experience of using a device. Within the team we have different preferences when it comes to keyboard switches. I love a heavy switch with a light tactile bump, Jacob heretically likes a certain membrane switch, Katie's a dedicated clickity clacker, and Alan… well, we don't talk about Alan.

But we have decades of experience using hundreds of different keyboards, mice, and headsets over the years, so we know when something has been well built, well designed, and properly priced. Though all Chris really cares about is whether they'll work in his BIOS.

Headsets are an interesting one, though. Each of us has a specific set of tracks and games we use to get the measure of a set of drivers. Some of us even like to measure a headset by how well it fits a dog... but Hope's more bespoke tests aside, we have similar test methodologies. We will always use the same key songs we know well so we can hear the different tonal quality of different headsets.

We also have a script for mic testing, which allows us to really hear how certain microphones pick up different sounds, and how well they represent our own voices. And you've not heard a good microphone until you've heard Jorge's out-of-context game quotes through it.

Dave has been gaming since the days of Zaxxon and Lady Bug on the ColecoVision, and code books for the Commodore VIC-20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.