Nvidia focuses Pascal on superhuman AI

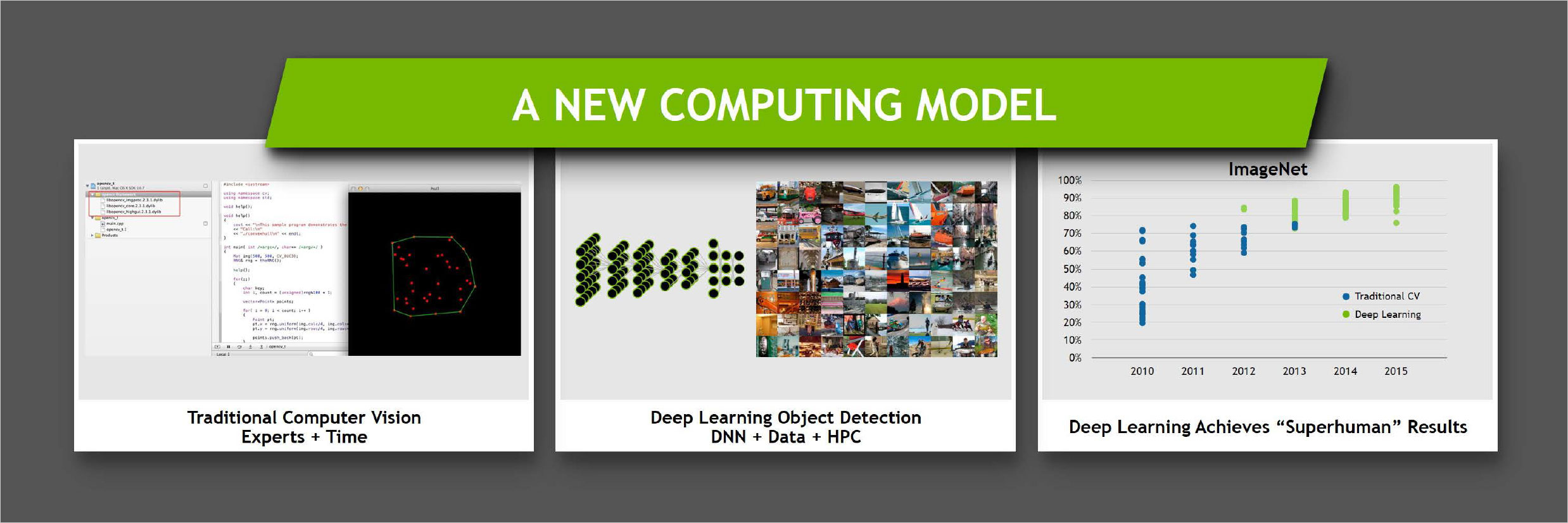

Besides Tesla P100 and Pascal, Nvidia had plenty more to discuss today at GTC16. A big topic was something Nvidia refers to as "deep learning," a relatively new way of doing artificial intelligence. AI for a long time consisted of expert systems, where an expert from a given field would essentially help to hand-code an algorithm to do a certain task. One of the problems with expert systems is that every time you need to handle a new task, you need a new expert, new software, etc. Nvidia claims deep learning gets around that by having the system essentially train itself.

Of course, that doesn't mean it's suddenly a piece of cake to make smarter AI; the work humans have to do has simply shifted. Deep learning involves processing huge amounts of data and learning to extract useful information over time, with the long-term goal of creating something that can infer what to do in a given situation. In other words, deep learning needs training, and for training to take place, someone needs to set up parameters on how to parse and represent the data.

A common example is vision systems, where the goal is to have a system recognize and categorize images in some fashion. Rules are defined, the basic groundwork is laid, and then you just feed the system mountains of data—images and video. (That sounds far easier than what is actually required, but you get the point.)

What's cool about deep learning systems is that there's a lot more overlap between problems; it's a general architecture and algorithm that can be used on all sorts of data sets. So a deep learning vision system can be adapted to build a deep learning speech recognition system for English, which could then be used on any other language—you just have to train it first.

Nvidia says that the Tesla P100 is built from the ground up to excel at deep learning, with applications in artificial intelligence (AI), self-driving vehicles, and more. One of the more impressive results is that Google's DeepMind was able to apply deep learning to the problem of playing the game Go with its AlphaGo software.

In 1997, IBM's Deep Blue was able to win a match against the reigning world chess champion, Garry Kasparov, but it was predicted it would be over a hundred years before a computer could take on the best humans in the game of Go. Then deep learning algorithms and GPUs entered the picture. DeepMind's AlphaGo learned from records of human expert games and then played itself millions of times, learning new strategies in a rapid fashion. Now, less than 20 years after Kasparov fell to Deep Blue, AlphaGo was able to defeat one of the best Go players, Lee Sedol, 4-to-1 in a five-game match. In effect, deep learning has created a "superhuman" Go champion.

Going back to the example of image recognition, deep learning on the ImageNet benchmark has reached the point where a trained system is able to recognize and classify details better than a human: again, a "superhuman" image analysis tool. Now it's no longer a question of getting a system to classify images, but rather a question of how fast the system can classify them. There are two elements to this task: training the system, and then using the trained system. The former is more difficult and can require tons of compute, while a trained network can run on relatively low-power hardware.

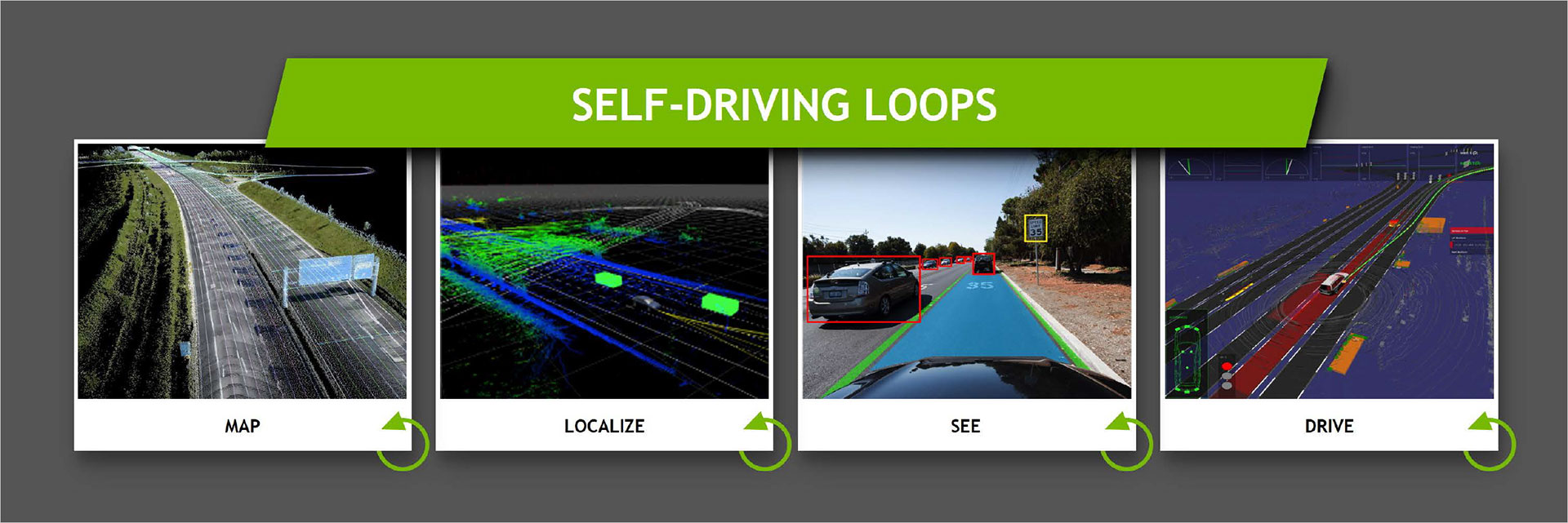

Nvidia's big push here is in the area of self-driving vehicles. The classic approach for self-driving vehicles (and robotics in general) has been: Sense -> Plan -> Act. Using deep learning, Nvidia is working toward the creation of superhuman driving skills, but it's not there yet, because as complex as Go might be, driving a car is potentially orders of magnitude more difficult to get right. Recognizing vehicles, lane markers, pedestrians, signs, and other objects is only the beginning; how do you handle the weather, bad roads, aggressive drivers, and interaction between multiple self-driving vehicles? What happens if something goes wrong, and who is liable for damages? And that's not even getting into the worrisome potential for hacking and intentionally malicious forces.
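For readers who haven't seen it spelled out, the classic Sense -> Plan -> Act loop can be sketched in a few lines of Python. This is a toy illustration only, not Nvidia's software: the `Observation` fields, the thresholds, and every function name here are hypothetical, and the hand-written rules in `plan` stand in for the kind of logic that traditionally had to be coded by experts.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """Hypothetical summary of what the sensors report at one instant."""
    obstacle_distance_m: float  # distance to the nearest obstacle ahead
    lane_offset_m: float        # positive = car has drifted right of lane center


def sense(raw: dict) -> Observation:
    # Sense: turn raw sensor readings into a structured observation.
    return Observation(raw["obstacle_distance_m"], raw["lane_offset_m"])


def plan(obs: Observation) -> str:
    # Plan: hand-written rules with made-up thresholds, checked in priority order.
    if obs.obstacle_distance_m < 10.0:
        return "brake"
    if obs.lane_offset_m > 0.5:
        return "steer_left"
    if obs.lane_offset_m < -0.5:
        return "steer_right"
    return "cruise"


def act(command: str) -> str:
    # Act: a real car would drive actuators here; this sketch only reports.
    return f"actuator <- {command}"


def control_step(raw: dict) -> str:
    # One pass through the loop: Sense -> Plan -> Act.
    return act(plan(sense(raw)))


print(control_step({"obstacle_distance_m": 50.0, "lane_offset_m": 0.8}))
```

Every new situation (rain, a dirt road, a missing lane marker) would mean another hand-written rule in a pipeline like this, which is the scaling problem described above.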

But outside of the security and liability aspects, progress is coming fast in the realm of autonomous vehicles. Deep learning has culminated in Nvidia's Drive PX solution, a set of hardware and software designed to help in creating more self-driving vehicles. Nvidia says that a trained Drive PX solution is now capable of recognizing 10 images per second per Watt—so a 20W solution could do 200 images per second. To further this point, Nvidia showed a video of a system processing multiple (up to 12) camera inputs running at 180 fps. But to get the system trained in the first place, you still need tons of computational power.
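The efficiency figure is easy to sanity-check. The short Python sketch below uses only the numbers quoted above; the function names are made up for illustration, and it assumes the 180 fps figure is the aggregate rate across all cameras, which the demo did not spell out.

```python
# Figure quoted by Nvidia for a trained Drive PX system.
IMAGES_PER_SECOND_PER_WATT = 10


def images_per_second(power_watts: float) -> float:
    """Throughput available within a given power budget."""
    return IMAGES_PER_SECOND_PER_WATT * power_watts


def watts_for_throughput(target_images_per_second: float) -> float:
    """Power budget needed to sustain a given throughput."""
    return target_images_per_second / IMAGES_PER_SECOND_PER_WATT


print(images_per_second(20))      # 200
print(watts_for_throughput(180))  # 18.0
print(180 / 12)                   # 15.0 fps per camera if 180 fps is the total
```

Under that assumption, the 12-camera demo fits within roughly the same 20W envelope as the quoted example.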

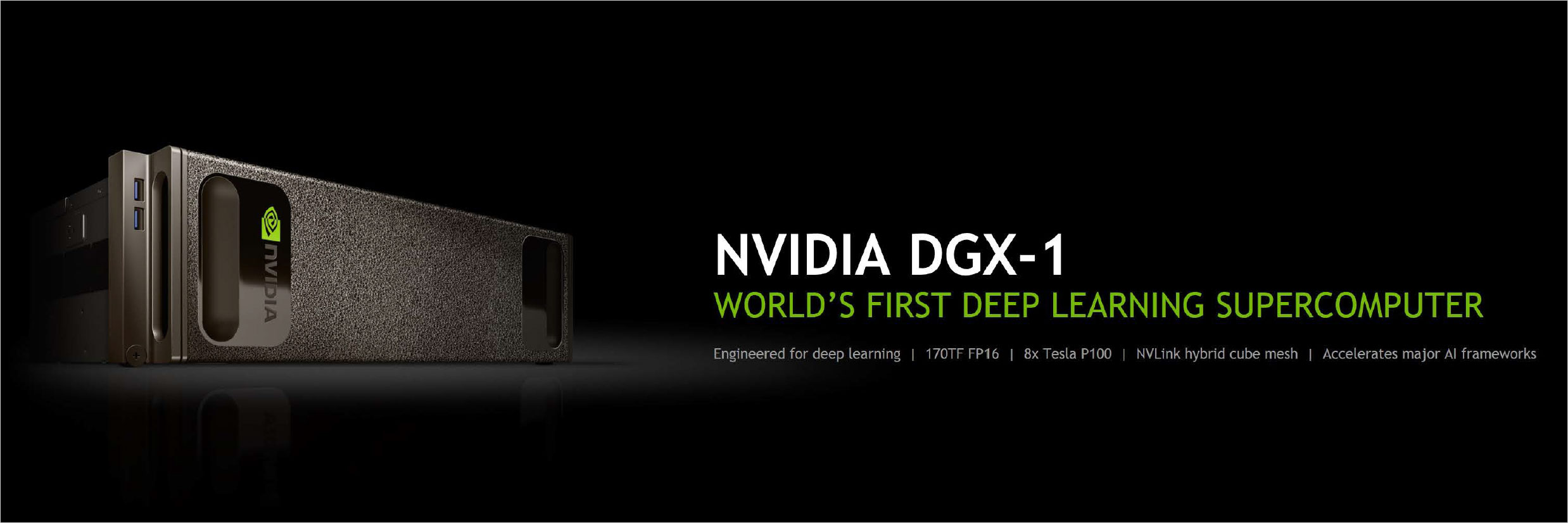

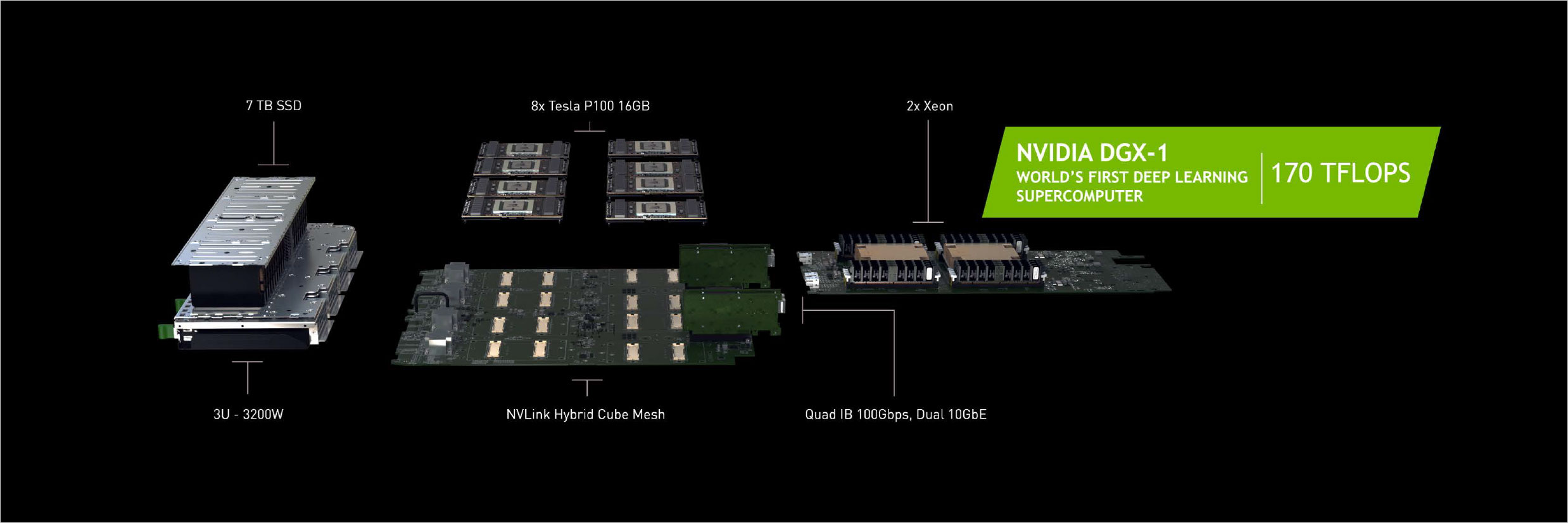

To that end, Nvidia has created a new server, the DGX-1, a 3U chassis that will include eight Tesla P100 modules capable of a whopping 170 TFLOPS in a single server. A rack of twelve of these servers, meanwhile, will offer a staggering 2 PFLOPS of compute performance, and Nvidia notes that the overall design represents a 12X increase in performance relative to a 2015 server with four Maxwell GPUs, or approximately 250 times the performance of a dual Xeon server. (There's a reason 97% of all new supercomputing nodes now include GPUs.) The cost of a single DGX-1 node will be $129,000, which might seem excessive until you consider the savings on infrastructure and other elements. Public availability of the DGX-1 is currently slated for Q1 2017, though it sounds like close partners can get their hands on working hardware much sooner than that.
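The DGX-1 numbers hang together if you run the arithmetic. Here's a quick Python sketch using only the figures quoted above; the constant and function names are illustrative, and the per-GPU and per-TFLOPS values are simple divisions of the quoted totals rather than official specs.

```python
# Figures quoted for the DGX-1.
SERVER_TFLOPS = 170
GPUS_PER_SERVER = 8
SERVERS_PER_RACK = 12
SERVER_PRICE_USD = 129_000


def rack_pflops() -> float:
    """Aggregate rack throughput in PFLOPS (1 PFLOPS = 1,000 TFLOPS)."""
    return SERVERS_PER_RACK * SERVER_TFLOPS / 1000


def tflops_per_gpu() -> float:
    """Implied share of the server total per Tesla P100 module."""
    return SERVER_TFLOPS / GPUS_PER_SERVER


def usd_per_tflops() -> float:
    """Implied price per TFLOPS for a single node."""
    return SERVER_PRICE_USD / SERVER_TFLOPS


print(rack_pflops())              # 2.04
print(tflops_per_gpu())           # 21.25
print(round(usd_per_tflops()))    # 759
```

So the "2 PFLOPS" rack figure is 12 x 170 TFLOPS rounded down slightly, and the sticker price works out to roughly $759 per TFLOPS.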

During the keynote, Nvidia CEO Jen-Hsun Huang showed a video detailing the progress of training their self-driving vehicle, "BB8," using their DaveNet deep learning software. Initial attempts had the car driving off roads, requiring human intervention, but as time progressed DaveNet became much more capable. Now with more than 3,000 hours of driving experience, DaveNet is capable of driving in the rain, taking curves at appropriate speed, and transitioning from asphalt to a dirt road with no lane markers.

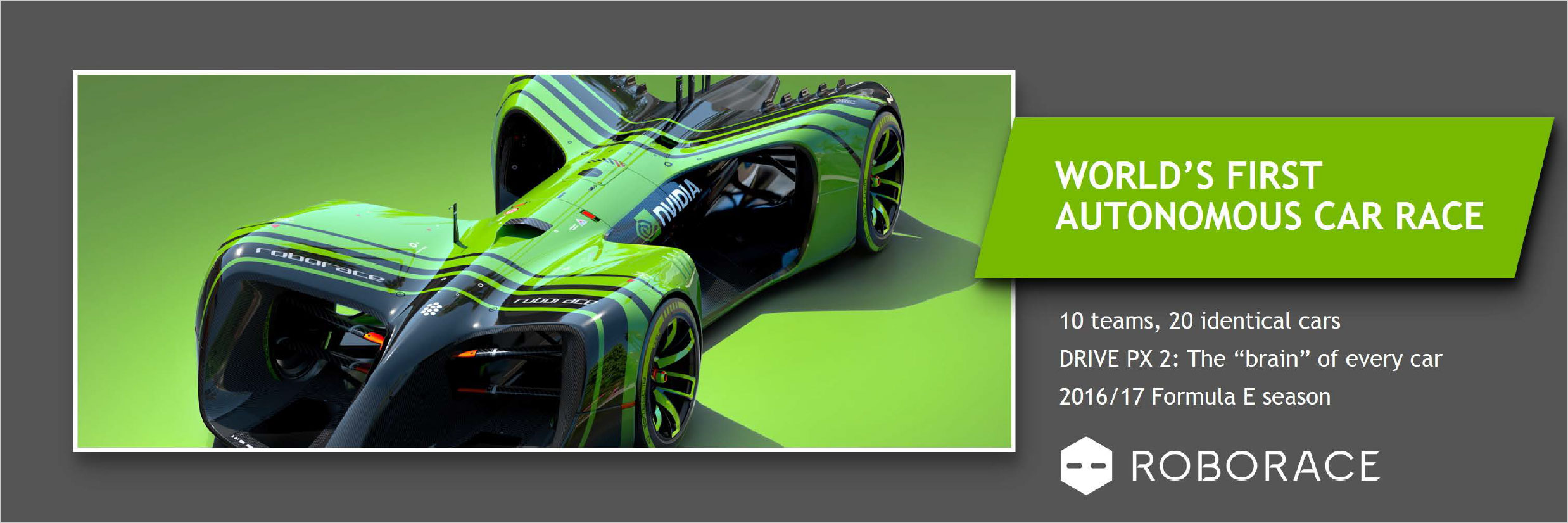

Not content to end there, Nvidia is taking things a step further with Drive PX 2, which is over four times as powerful as the original Drive PX. The Drive PX 2 will be put into electric racing cars to compete in the first Roborace Championship, featuring 20 identically equipped driverless cars pitted against each other. The cars won't actually be "identical," though, at least not in terms of software: each of the ten teams will field two cars and is free to customize the software as it sees fit. It should prove to be a very interesting competition, and it's all thanks to the power of GPUs and deep learning.

I for one welcome our new GPU overlords. Let's hope they're more benign than SkyNet.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.