Intel announces XeSS 3 with multi-frame generation, putting it ahead of AMD in the AI-powered graphics performance race

Though Intel would probably prefer its GPU sales to be ahead instead.

At this year's Technology Tour event in Arizona, Intel not only launched its Panther Lake CPU architecture but also announced an update to its AI-powered performance scaling package. The update brings XeSS on par with Nvidia's DLSS, as Arc GPU users will soon get the benefit of multi-frame generation.

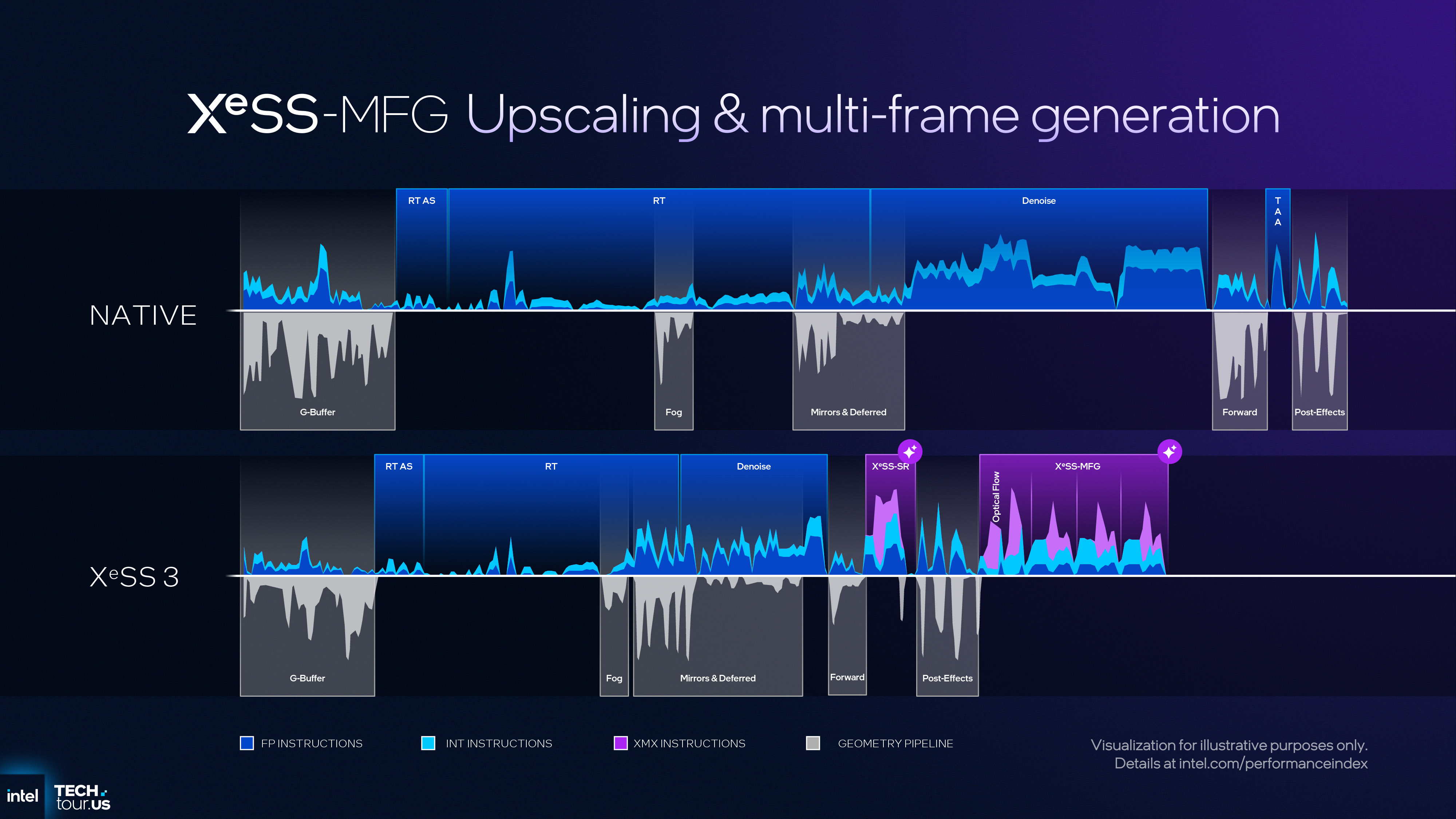

Exactly how soon is yet to be confirmed, and it's not clear whether XeSS 3 will have any other notable additions or improvements. However, XeSS-MFG is definitely coming, and in games that already support XeSS-FG, you'll be able to enable 3x or 4x frame generation. This can be either in the game itself or via Intel's Graphics Software application.

As it so happens, Intel has sneakily been hinting at multi-frame generation for several months, but it's only very recently that this was noticed. Hidden away in a config file was a single line titled 'Multi-Frame Generation (XeSS)', but I just assumed that it was either a fairly generic label or that Intel wouldn't have anything to show for a good while. How wrong I was on both counts.

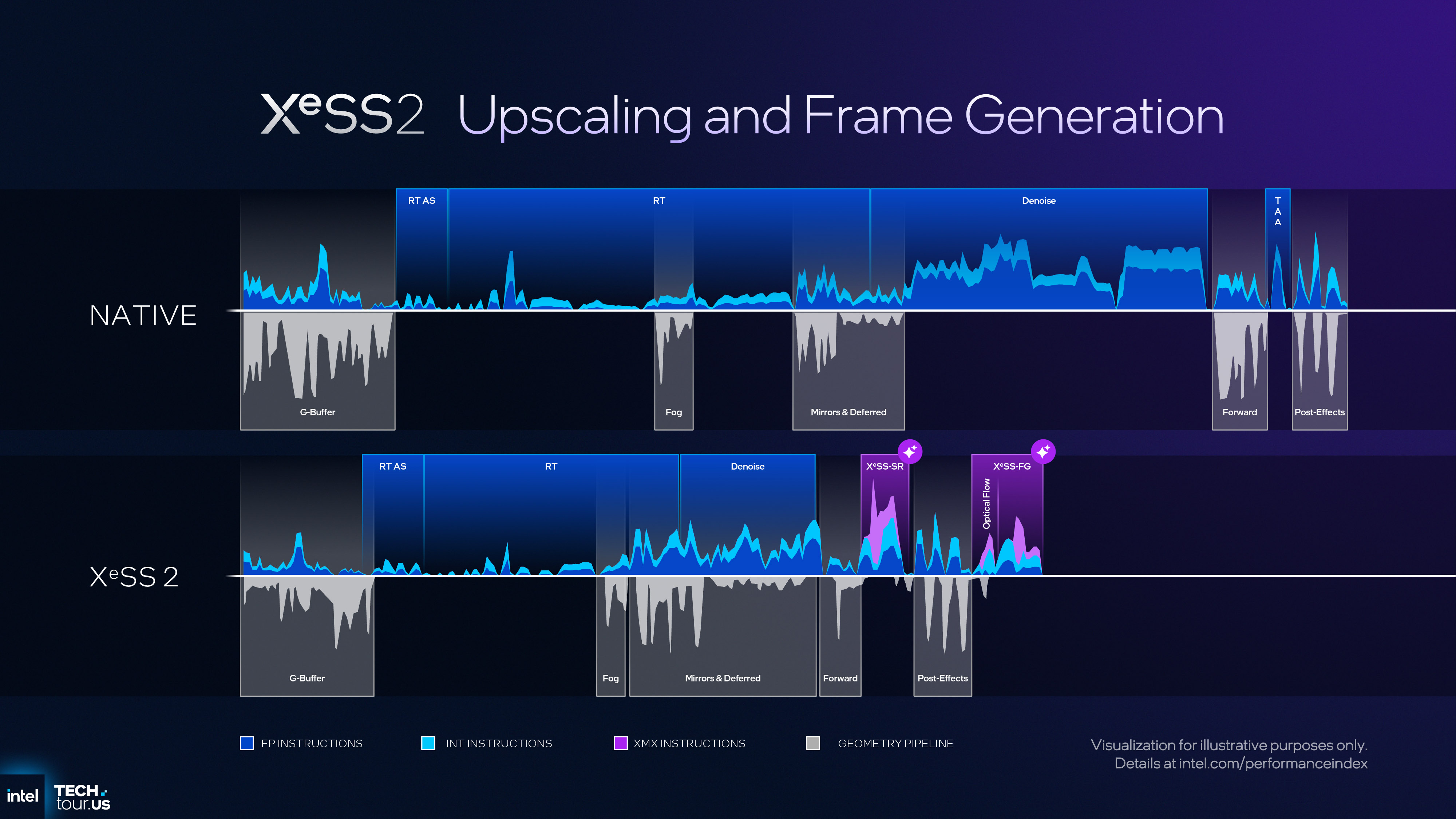

So just what exactly is XeSS-MFG? Well, Intel's frame generation system works very much like Nvidia's does. The GPU renders a frame as normal and then stores it in VRAM. Once the next successive frame has been finished, the two frames are then processed through an AI algorithm to generate an in-between frame.

Once that's done (and it's much quicker to do than rendering), the previous frame is then scheduled for presentation on the monitor, followed by the generated frame and finally, the one that's just been rendered. From the perspective of the user, the end result is a higher rate of frames appearing on screen, giving the whole shebang a smoother appearance.
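
As a rough illustration of that presentation order, here is a minimal Python sketch. It is not Intel's implementation: the `Frame` type, `blend` and `presentation_order` are hypothetical names, and a plain linear blend stands in for the AI model that actually generates the in-between frames.

```python
from typing import List

# Hypothetical stand-in: a frame is just a flat list of pixel values.
Frame = List[float]


def blend(prev: Frame, nxt: Frame, t: float) -> Frame:
    """Placeholder for the AI interpolation step (assumption: linear blend)."""
    return [(1.0 - t) * a + t * b for a, b in zip(prev, nxt)]


def presentation_order(rendered: List[Frame], multiplier: int) -> List[Frame]:
    """Return frames in the order they would be shown on screen.

    For each pair of consecutive rendered frames, the earlier frame is
    presented first, followed by (multiplier - 1) generated frames.
    The later rendered frame is only presented after those.
    """
    if multiplier < 1:
        raise ValueError("multiplier must be at least 1")
    if not rendered:
        return []
    shown: List[Frame] = []
    for prev, nxt in zip(rendered, rendered[1:]):
        shown.append(prev)
        for k in range(1, multiplier):
            shown.append(blend(prev, nxt, k / multiplier))
    shown.append(rendered[-1])
    return shown


if __name__ == "__main__":
    # Two rendered frames with 4x generation: three frames appear in between.
    for frame in presentation_order([[0.0], [4.0]], multiplier=4):
        print(frame)
```

With a multiplier of 2 this matches the single in-between frame described above; 3 and 4 correspond to the 3x and 4x modes.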

Frame generation doesn't always make things feel smoother, though, because the actual rendered frames are delayed from appearing on screen in order to generate the additional one. And in the case of Intel's new XeSS-MFG, where one, two, or even three extra frames can be created, the delay can be big enough to be noticeable in terms of input lag.

It all comes down to how quickly the 'normal' frames can be rendered. The faster that process is, the less noticeable the presentation delay will be, and the lower the input lag will be. That's why frame generation is generally recommended only alongside upscaling and when the base frame rate is above a certain level (eg, 60 fps).
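
To put rough numbers on that, the sketch below converts a base frame rate into a frame time and treats the hold-back delay as about one base frame interval. That last part is a simplifying assumption made for illustration, not a figure from Intel: it ignores the cost of generating frames and any frame pacing, and both function names are hypothetical.

```python
def frame_time_ms(fps: float) -> float:
    """Time taken to render one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def approx_hold_back_ms(base_fps: float) -> float:
    """Very rough extra delay from waiting for the next rendered frame.

    Assumption: a rendered frame is held for about one base frame
    interval until its successor is ready. Real figures will differ.
    """
    return frame_time_ms(base_fps)


if __name__ == "__main__":
    for fps in (30, 60, 120):
        print(
            f"{fps} fps base: frame time {frame_time_ms(fps):.1f} ms, "
            f"hold-back roughly {approx_hold_back_ms(fps):.1f} ms"
        )
```

Under this simple model, doubling the base frame rate halves the extra delay, which is why a higher starting frame rate makes frame generation feel less laggy.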

Just as with Nvidia's GeForce RTX 50-series, Intel doesn't use any kind of hardware optical flow system as part of the frame generation process. DLSS 4 MFG and XeSS-MFG both use an AI algorithm to handle all of that. Blackwell GPUs run that on Tensor cores, whereas Alchemist and Battlemage GPUs use XMX (Xe Matrix Extensions) units.

I got to see XeSS-MFG first-hand while over in Arizona, as Intel was running a demonstration of it, using an early version of the Painkiller reboot on a Panther Lake laptop. The 12 Xe core GPU in that PC ran the game at around 45 to 50 fps at 1080p native, and enabling 4x multi-frame generation pushed that to around 200 fps.
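
Those demo figures line up with simple multiplication. The sketch below assumes an idealised case where every rendered frame gains `multiplier - 1` generated frames and the base frame rate is unaffected by the generation work; in practice the overhead can lower the base rate. The function names are hypothetical.

```python
def ideal_output_fps(base_fps: float, multiplier: int) -> float:
    """Upper-bound displayed frame rate, assuming no generation overhead."""
    if multiplier < 1:
        raise ValueError("multiplier must be at least 1")
    return base_fps * multiplier


def generated_share(multiplier: int) -> float:
    """Fraction of displayed frames that are generated rather than rendered."""
    if multiplier < 1:
        raise ValueError("multiplier must be at least 1")
    return (multiplier - 1) / multiplier


if __name__ == "__main__":
    for base in (45, 50):
        print(f"{base} fps at 4x: up to {ideal_output_fps(base, 4):.0f} fps")
    print(f"Generated share at 4x: {generated_share(4):.0%}")
```

At 4x, three out of every four frames on screen are generated, so the responsiveness of the game is still tied to the 45 to 50 fps being rendered.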

And just like DLSS 4, it actually felt perfectly fine to use. I tried my best to look for visual artefacts, but nothing really stood out as being a problem. The same is true of input lag: I managed to get a quick check of native vs MFG, and I honestly couldn't feel any difference between the two. However, such short experiences under tightly controlled conditions hardly make for compelling evidence.

That said, my experiences of using XeSS normally have always been very positive, so I'm confident that the brief blast with Painkiller and a Panther Lake iGPU bodes well for XeSS-MFG on a full-blown Arc A750 or B580. Compared to AMD's FSR and Nvidia's DLSS, XeSS is nowhere near as widely supported by game developers, which is a real shame considering how good it is.

The reason why is simple: Intel's share of the discrete graphics card market is minuscule, so if developers only have enough time to properly implement one or two upscaling/frame generation packages, it makes sense to choose those that the majority of gamers are going to use.

As things currently stand, XeSS-MFG is only for Intel Arc GPUs, and when I asked Intel if it had plans for extending it to other GPUs (just as it has done with XeSS upscaling), I just got a repetition of 'XeSS-MFG is only for Intel Arc GPUs'. I dare say that if Intel did make it widely accessible, it wouldn't do anything for its graphics card sales, but it would at least ensure all the hard work behind the development of XeSS would find its way to a bigger audience.

Whatever happens in the future with XeSS-MFG, it now just leaves AMD as the only major GPU vendor without a multi-frame generation technology. It doesn't really matter whether one needs it or not; it's about showing the world that you're at the cutting edge of graphics tech, and it has to be said that AMD's FSR is currently in third place behind DLSS and XeSS.

Nick, gaming, and computers all first met in the early 1980s. After leaving university, he became a physics and IT teacher and started writing about tech in the late 1990s. That resulted in him working with MadOnion to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its PC gaming section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but missed writing. Cue four years at TechSpot.com covering everything and anything to do with tech and PCs. He freely admits to being far too obsessed with GPUs and open-world grindy RPGs, but who isn't these days?
