Intel’s future discrete GPUs will support VESA adaptive sync

The confirmed support for adaptive sync hints at Intel's gaming aspirations.


AMD and Nvidia both support adaptive sync technologies (FreeSync and G-Sync, respectively) that, at their core, match a display's refresh rate to the GPU's render rate. This eliminates screen tearing and results in smoother gameplay. As it turns out, Intel is also planning to support adaptive sync, as it has said in the past, and we've recently learned those plans include the company's upcoming discrete GPUs.

Let's back up a moment. Several years ago, it was reported that Intel was working on supporting VESA's adaptive sync technology in its GPUs. Adaptive sync forms the basis of AMD's FreeSync technology, and is an open standard that companies are free to use without paying royalties. In effect, adaptive sync support is synonymous with FreeSync support (but not FreeSync 2, which has additional feature requirements).

G-Sync, on the other hand, is a proprietary implementation that requires special hardware inside the display. This is why G-Sync monitors tend to cost more than FreeSync ones when all other features are the same.
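To make the refresh-matching idea a little more concrete, here is a minimal Python sketch under heavily simplified assumptions. The 60 Hz panel, the roughly 144 Hz upper refresh limit, the frame timings, and the function names are all hypothetical. The model also ignores the vertical blanking interval and the panel's minimum refresh rate. It only contrasts a fixed-rate display with V-Sync off, where a frame that finishes mid-refresh tears, against a display that waits for each frame.

```python
# Hypothetical numbers for illustration only; times are in microseconds.
FIXED_PERIOD_US = 16_667  # ~60 Hz fixed-rate panel
MIN_INTERVAL_US = 6_944   # ~144 Hz, assumed fastest refresh the panel allows


def fixed_refresh_tears(frame_ready_us):
    """Count frames that would tear on a fixed-rate display with V-Sync off.

    Simplified model: the panel refreshes every FIXED_PERIOD_US no matter
    what the GPU is doing. A buffer swap that lands anywhere other than the
    exact start of a refresh leaves two different frames on screen at once.
    """
    return sum(1 for t in frame_ready_us if t % FIXED_PERIOD_US != 0)


def adaptive_refresh_starts(frame_ready_us):
    """Return when each frame starts being drawn on an adaptive sync display.

    The display waits for the GPU: a refresh begins as soon as a frame is
    ready, but never sooner than MIN_INTERVAL_US after the previous refresh.
    Every frame starts at the top of its own refresh, so none of them tear.
    """
    starts = []
    last = None
    for t in frame_ready_us:
        start = t if last is None else max(t, last + MIN_INTERVAL_US)
        starts.append(start)
        last = start
    return starts


if __name__ == "__main__":
    # Uneven (hypothetical) frame completion times from the GPU.
    frames = [0, 5_000, 21_000, 38_500]
    print(fixed_refresh_tears(frames))      # 3
    print(adaptive_refresh_starts(frames))  # [0, 6944, 21000, 38500]
```

In this toy run, three of the four frames land mid-refresh on the fixed 60 Hz panel, while the adaptive display simply shifts each refresh to when the frame is ready (delaying the second frame slightly because it arrived faster than the assumed 144 Hz limit).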

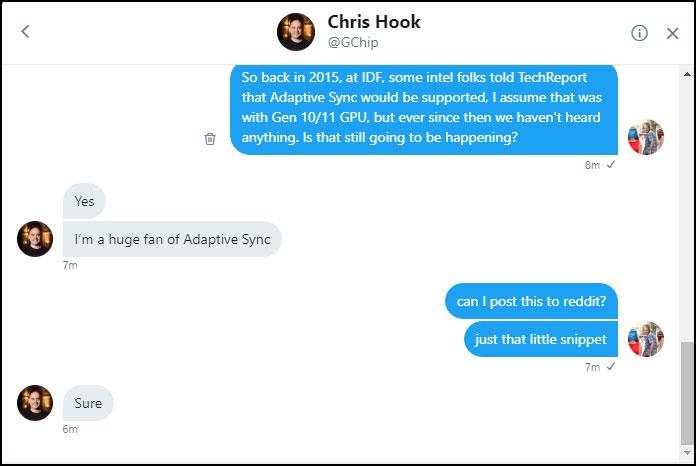

Up to this point, Intel hasn't made good on its promise to support adaptive sync, but that time is coming. A moderator at Reddit asked Intel's Chris Hook, the former marketing director at AMD who jumped ship to join Intel, if adaptive sync was still in the company's cards.

"Yes. I'm a huge fan of adaptive sync," Hook said. After seeing that, I reached out to Chris and asked specifically about Intel's discrete GPUs. Through a company spokesperson, I was told the "answer is yes."

Unfortunately, Intel isn't sharing any further details just yet, probably because it's keeping its discrete GPU plans relatively close to the vest at the moment. All we really know is that Intel is targeting "a broad range of computing segments," presumably including gaming, and that it's planning to launch its first discrete GPU in 2020.

Though there's much we don't know yet, Intel throwing its weight behind adaptive sync is potentially a big deal. If Intel proves it can compete with AMD and Nvidia in the discrete GPU space, there would then be two major GPU makers supporting the open standard. There's also a question of whether Intel will add adaptive sync support to its integrated graphics solutions.


Joel Hruska at ExtremeTech brings up some good points on the topic. The biggest one is that Nvidia could support the open standard instead of its own proprietary implementation, if it wanted to. Instead, gamers often choose a monitor based on which GPU they own (or plan to buy) because of an "entirely artificial barrier," he says.

To be fair, there's more to it than that, but it hardly matters when it comes to perception. Regardless, Intel jumping aboard the adaptive sync bandwagon is long overdue. The confirmation from Intel also suggests it's serious about gaming.

Paul has been playing PC games and raking his knuckles on computer hardware since the Commodore 64. He does not have any tattoos, but thinks it would be cool to get one that reads LOAD"*",8,1. In his off time, he rides motorcycles and wrestles alligators (only one of those is true).