Microsoft confirms that its new AI agent in Windows 11 hallucinates like every other chatbot and poses security risks to users

Hallucinating, hack-prone operating systems are the new normal.

Keep up to date with the most important stories and the best deals, as picked by the PC Gamer team.

You are now subscribed

Your newsletter sign-up was successful

Want to add more newsletters?

Like the rest of the tech world and its LLM-powered pooch, Microsoft has been on a big AI push of late. Its latest achievement in that regard is the rollout of agentic AI capabilities for Windows 11 courtesy of the 26220.7262 update (via Windows Latest). Oh, and with that comes the warning that the new AI features are prone to "hallucinate" and "introduce novel security risks."

As to the details, Microsoft says security flaws include "cross-prompt injection (XPIA), where malicious content embedded in UI elements or documents can override agent instructions, leading to unintended actions like data exfiltration or malware installation."

In other words, you could download, say, a PDF which contains hidden text instructing your Windows agent to execute nefarious tasks. And it might just carry out those instructions.

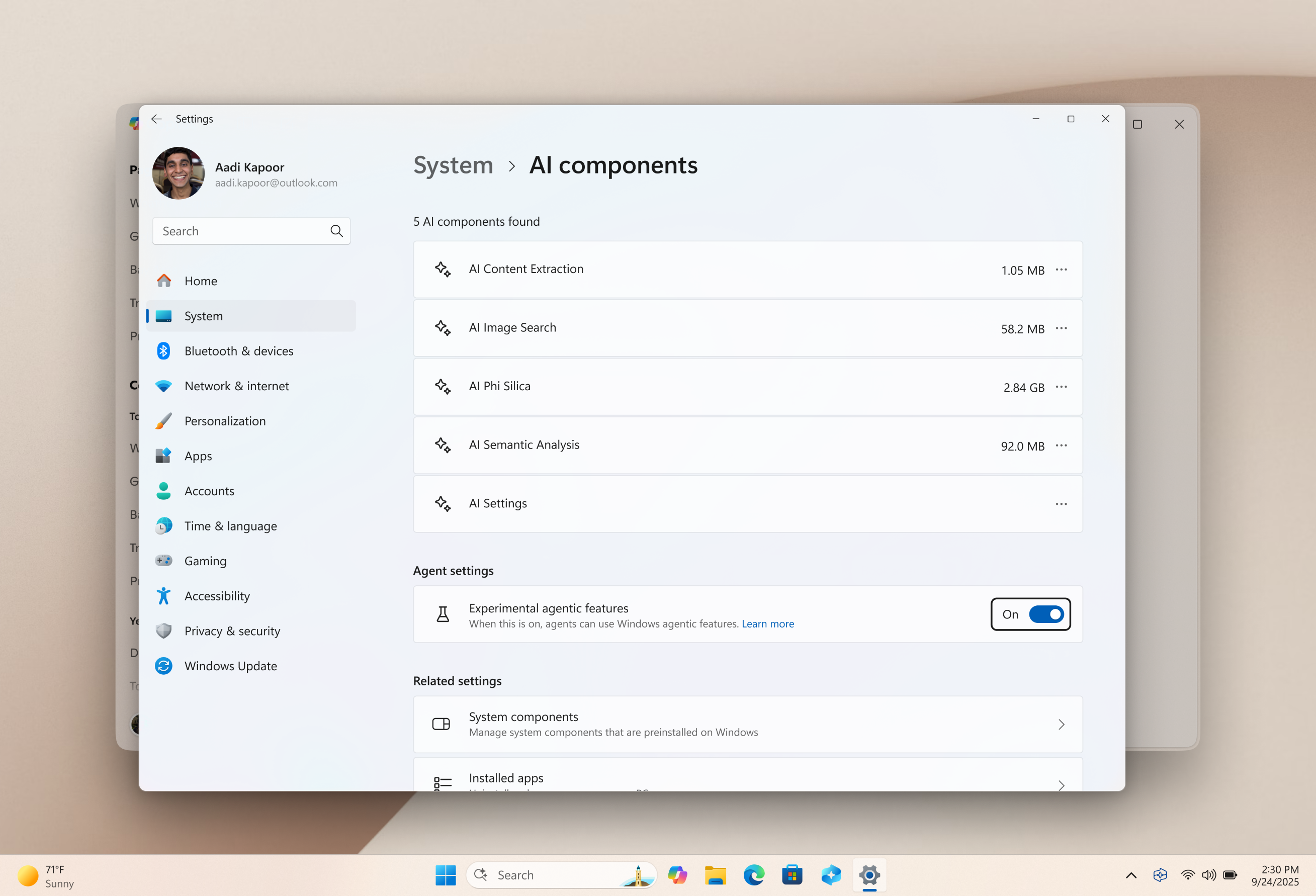

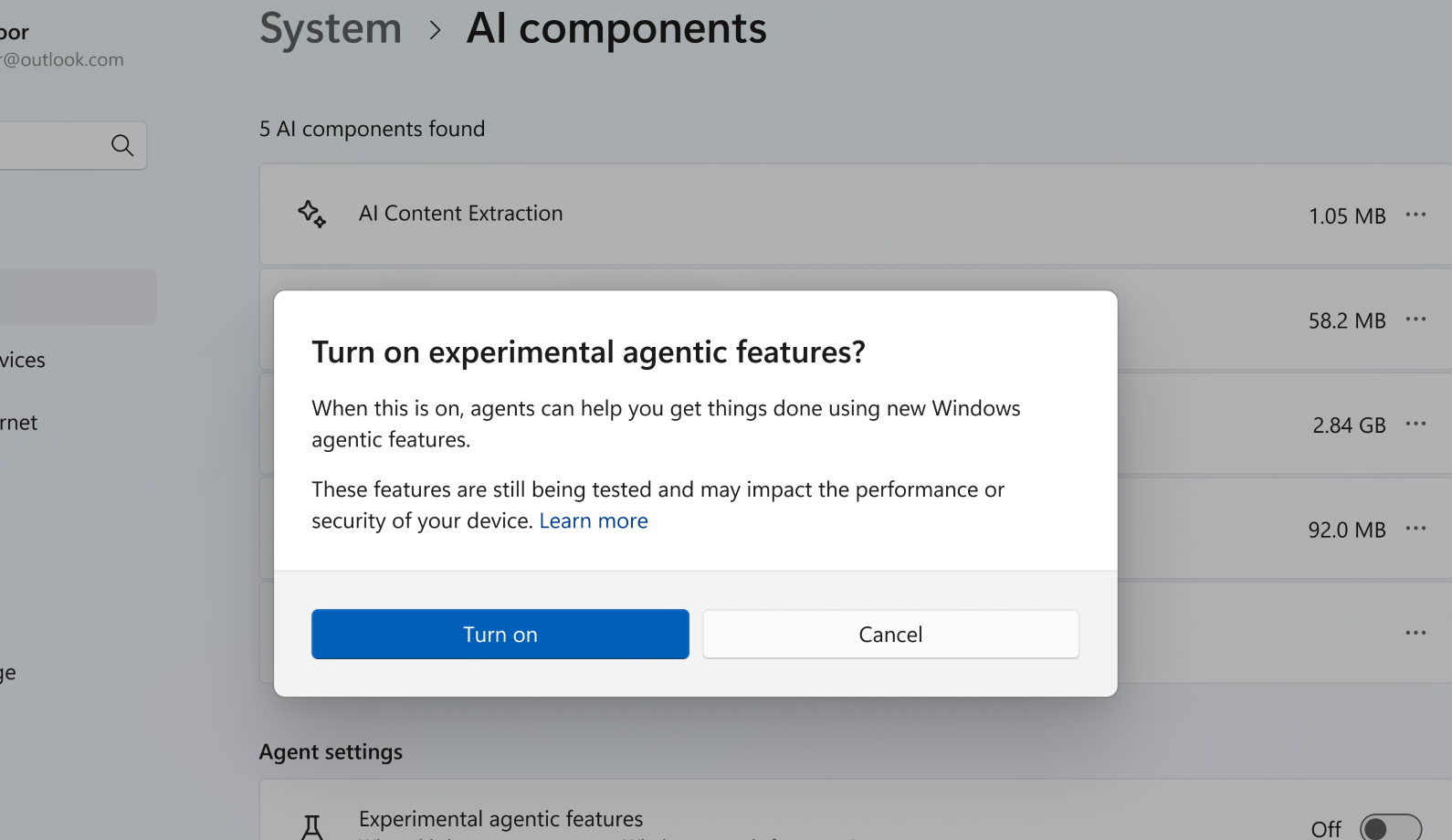

So, surely Microsoft has some mitigations in place? Up to a point. Firstly and mercifully, these new agentic features are not enabled by default. However, once switched on, they're enabled for all users, all the time. They are at least labelled as "experimental agentic features", and a warning is delivered during the setup process.

Microsoft also says the new agentic AI features operate under three core principles. First, "all actions of an agent are observable and distinguishable from those taken by a user." Second, "agents that collect, aggregate or otherwise utilize protected data of users meet or exceed the security and privacy standards of the data which they consume." And third, "Users approve all queries for user data as well as actions taken."

However, those principles do not appear to be guarantees, but rather aspirations, hence the security warnings. Microsoft also says, "We recommend you read through this information and understand the security implications of enabling an agent on your computer."

But it's hard to see how typical users are meant to understand the security implications. How is one to judge the risk? How likely is a successful security attack that relies on the agentic AI vulnerability to prompt injection? That's surely impossible for most users to "understand."

Keep up to date with the most important stories and the best deals, as picked by the PC Gamer team.

All of which means that Microsoft is, in effect, shunting the responsibility onto users, for now. It's up to them to decide whether to turn these features on and up to them to judge the risks.

Of course, AI models hallucinating and being vulnerable to prompt injection attacks is hardly news. Pretty much every major AI suffers from these problems. Heck, even poetry can be used to trick AI. But it is remarkable to observe Microsoft nonchalantly adding a feature with such self-confessed problems to its mainstream and utterly dominant PC operating system. Apparently, it's now completely fine to release a feature with major known flaws and security vulnerabilities.

The assumption here is that Microsoft feels the competitive impetus is absolutely overwhelming. If it does not add these features to Windows, it risks being totally overwhelmed by competitors who will. And maybe that's true. But it's still remarkable to see norms around reliability and safety to become comprehensively defenestrated. When it comes to AI, it seems buggy and insecure is the new normal. And that's really weird, isn't it?

1. Best CPU: AMD Ryzen 7 9800X3D

2. Best motherboard: MSI MAG X870 Tomahawk WiFi

3. Best RAM: G.Skill Trident Z5 RGB 32 GB DDR5-7200

4. Best SSD: WD_Black SN7100

5. Best graphics card: AMD Radeon RX 9070

Jeremy has been writing about technology and PCs since the 90nm Netburst era (Google it!) and enjoys nothing more than a serious dissertation on the finer points of monitor input lag and overshoot followed by a forensic examination of advanced lithography. Or maybe he just likes machines that go “ping!” He also has a thing for tennis and cars.

You must confirm your public display name before commenting

Please logout and then login again, you will then be prompted to enter your display name.