Nvidia sells half a million AI chips and bags $14.5 billion in just three months

We've created a monster, people.

It's all too easy to forget that Nvidia's AI-accelerating uber-GPUs started off as little more than an experimental offshoot from graphics chips for gaming PCs. So, if we do end up as slaves to artificial overlords, we really will only have ourselves to blame.

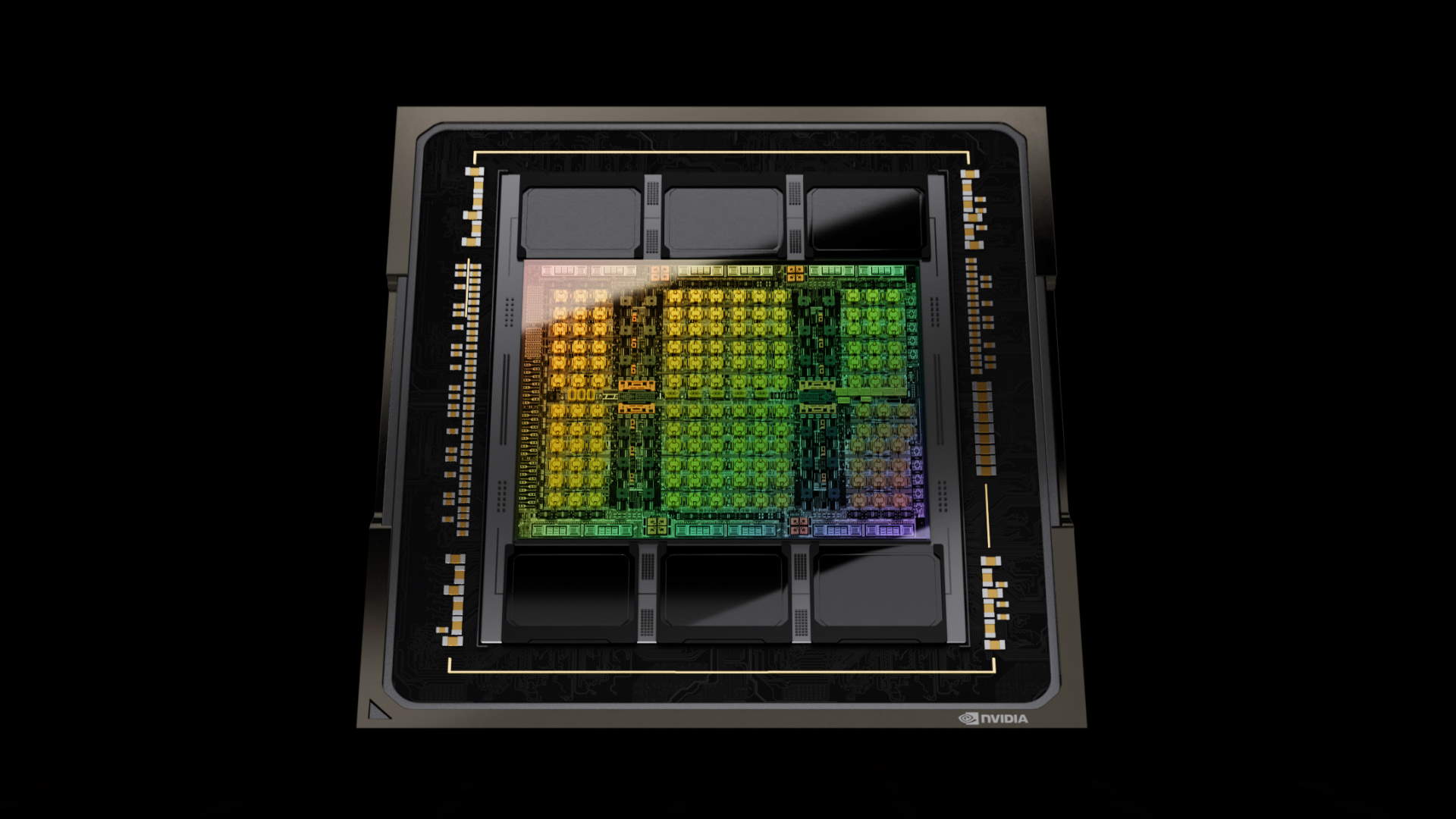

With that in mind, the latest news is that Nvidia sold 500,000 of its H100 AI chips in the most recent completed quarter of 2023, according to research outfit Omdia. Most estimates put the H100's unit price at anywhere from $20,000 to $40,000 a pop, which would put Nvidia's revenue from those sales at between $10 billion and $20 billion.

As it happens, Nvidia says its data center revenue for the period clocked in at $14.5 billion, near the middle of that range. So, it all adds up.

Among the more interesting snippets from the Omdia data (via Tom's Hardware) is the identity of Nvidia's biggest customers for its pricey AI chips. We all knew that Microsoft was big on AI, so 150,000 H100s heading its way isn't a huge surprise, even if the cost of well over $4 billion at the purported average unit price is still an absolute hill of money.

But what about Facebook's parent company Meta taking the same number? What are they doing with them all...? That's allegedly three times as many as either Google or Amazon, each of which is said to have bought 50,000 H100s in the most recent quarter.

Tesla is on the list with a mere 15,000 units. That's still about half a billion dollars' worth.
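For anyone who wants to check the sums, here's a rough back-of-envelope sketch in Python. It assumes, purely for illustration, that all $14.5 billion of data center revenue came from the 500,000 H100s Omdia counted, which works out to an implied average of $29,000 per chip. In reality that revenue figure covers other hardware too, so treat the per-customer numbers as ballpark estimates rather than reported spending. The variable and function names are made up for this example.

```python
# Back-of-envelope check of the figures quoted above.
# Assumption (illustrative only): all $14.5 billion of data center revenue is
# attributed to the 500,000 H100s Omdia counted. The real figure includes other
# products, so the implied average price is a rough estimate, not a list price.

UNITS_SOLD = 500_000
DATA_CENTER_REVENUE = 14.5e9  # US dollars, for the quarter
PRICE_LOW, PRICE_HIGH = 20_000, 40_000  # commonly quoted H100 price range

# Omdia's reported H100 unit counts for the quarter
customers = {
    "Microsoft": 150_000,
    "Meta": 150_000,
    "Google": 50_000,
    "Amazon": 50_000,
    "Tesla": 15_000,
}


def revenue_range(units, low, high):
    """Return (min, max) revenue for a unit count across a price range."""
    return units * low, units * high


# Implied average price if every data center dollar came from an H100
implied_avg_price = DATA_CENTER_REVENUE / UNITS_SOLD


def estimated_spend(units, avg_price=implied_avg_price):
    """Estimate a customer's outlay from its unit count and an average price."""
    return units * avg_price


if __name__ == "__main__":
    low, high = revenue_range(UNITS_SOLD, PRICE_LOW, PRICE_HIGH)
    print(f"Revenue range: ${low / 1e9:.0f}B to ${high / 1e9:.0f}B")
    print(f"Implied average price: ${implied_avg_price:,.0f}")
    for name, units in customers.items():
        print(f"{name}: ~${estimated_spend(units) / 1e9:.2f}B")
```

Swap in a different average price if you prefer, say the $30,000 midpoint of the quoted range, and the Microsoft and Meta estimates move to $4.5 billion apiece.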

As if these numbers weren't big enough, Omdia reckons Nvidia's revenue from these data center GPUs will be double today's figure by 2027. They won't all be used for large language models and similar AI applications, but a lot of them will.

It's interesting that Omdia is so bullish about Nvidia's prospects in this market, given that many of Nvidia's biggest customers are planning to build their own AI chips. Indeed, Google and Amazon already do, which is probably why they buy fewer Nvidia chips than Microsoft and Meta.

Strong competition is also expected from AMD's MI300 GPU and its successors, while newer startups such as Tenstorrent, led by Jim Keller, one of the most highly regarded chip architects on the planet, are trying to get in on the action.

One thing is for sure, however: all of these numbers make the gaming graphics market look rather puny. We've created a monster, folks, and there's no going back.

Jeremy has been writing about technology and PCs since the 90nm NetBurst era (Google it!) and enjoys nothing more than a serious dissertation on the finer points of monitor input lag and overshoot followed by a forensic examination of advanced lithography. Or maybe he just likes machines that go “ping!” He also has a thing for tennis and cars.