Intel's FakeCatcher tech can detect deepfakes instantly with 96% accuracy

It looks for the blood.

Deepfakes are one of the more worrying developments when it comes to AI. They are often harrowingly realistic videos of people saying and doing things they've never done. Generally speaking, they're created by overlaying a face, usually one of a celebrity, over another person's to make these misleading videos.

They're often intended to be funny, or at least not made with any serious intent. Some creators are looking to make movies with famous actors without ever having to leave the house. But as you can imagine, there's a tonne of porn out there using deepfakes, which ranges from fantasies made with video game characters to problematic images of real people.

Aside from the blatant disrespect this often shows to the people whose images are used, one of the most dangerous things about deepfakes is that they're really good. I've seen deepfakes that I'd never have guessed weren't real, and that's especially dangerous when we have an internet that can spread misinformation like wildfire.

“Deepfake videos are everywhere now. You have probably already seen them; videos of celebrities doing or saying things they never actually did,” explains Ilke Demir, senior staff research scientist in Intel Labs.

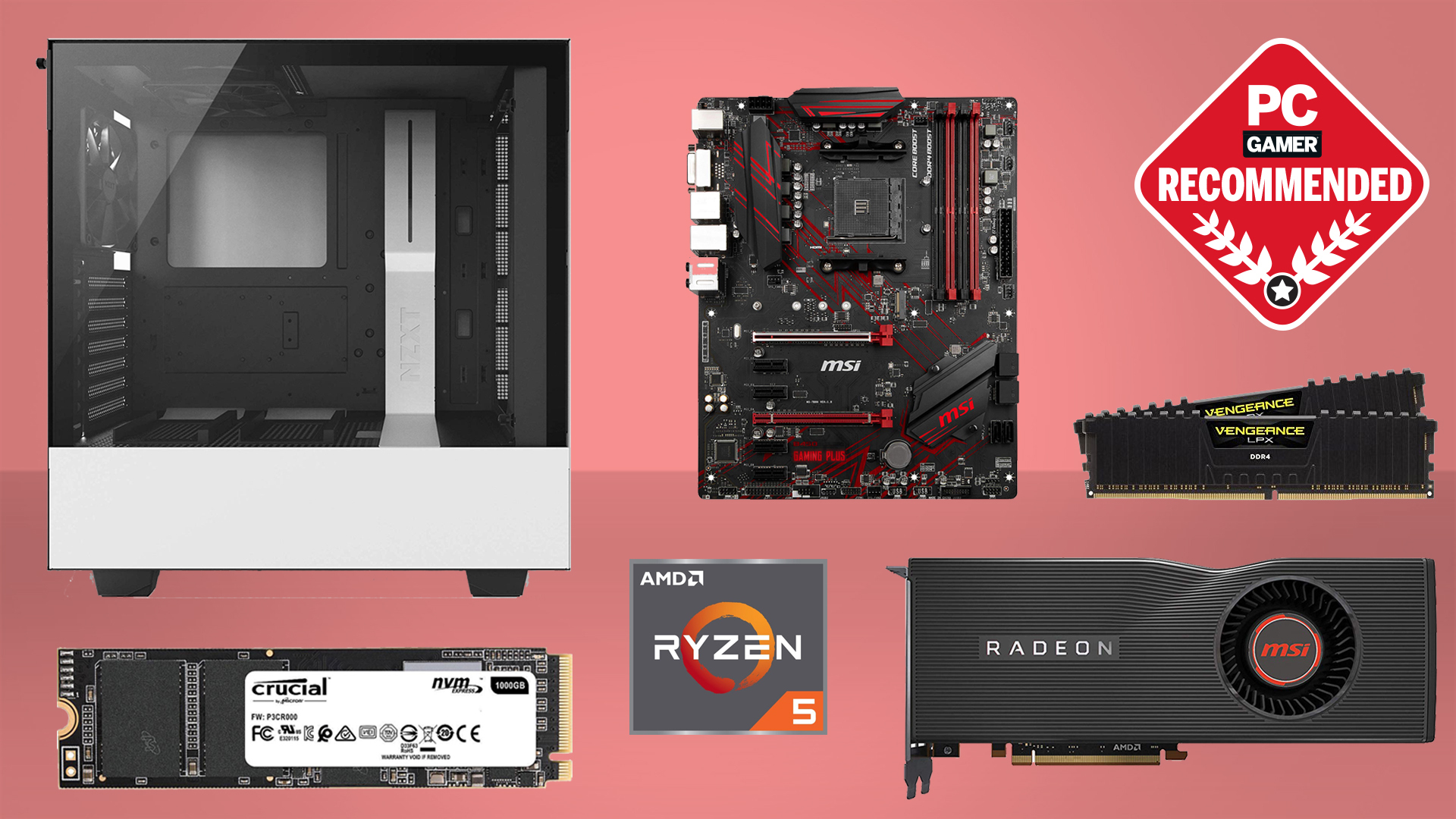

As it turns out, it takes an AI to catch an AI, and thankfully Intel has been working on just the thing. As part of its Responsible AI work, the company has developed what it has dubbed FakeCatcher. This is tech specifically designed to detect fake videos like deepfakes, and it can reportedly do so within milliseconds with a 96% accuracy rate.

Intel explains that many other tools attempting to detect deepfakes try to analyse the raw data in the files, while FakeCatcher has been trained with deep learning techniques to detect what a real human looks like in videos. This means it's been trained to look for all the little things that make people real: subtle things like blood flow showing up in the pixels of a video, which apparently deepfakes are yet to master. Though maybe this will be how they learn to master our human blood effects. That's a creepy sentence if I've ever written one.
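For the curious, here's a rough idea of what "looking for the blood" could mean in practice. To be clear, this is not Intel's code or its method, which the company hasn't detailed here. It's a minimal sketch that assumes you already have the average green value of a face region for each video frame, and it simply checks whether that signal pulses at a plausible heart rate. The function name `dominant_pulse_bpm`, the choice of the green channel, and the 40 to 180 bpm band are all assumptions made for illustration.

```python
import math


def dominant_pulse_bpm(green_means, fps, lo_bpm=40.0, hi_bpm=180.0):
    """Return (bpm, power) of the strongest periodic component in a
    per-frame mean-green signal, searching only a plausible heart-rate band.

    Hypothetical helper for illustration; not part of any Intel tool.
    Returns (None, 0.0) if the signal has no variation in that band.
    """
    n = len(green_means)
    if n < 2:
        raise ValueError("need at least two frames")

    # Subtract the average so constant skin tone doesn't dominate.
    mean = sum(green_means) / n
    centered = [g - mean for g in green_means]

    best_bpm, best_power = None, 0.0
    # Naive discrete Fourier transform, one frequency bin at a time.
    for k in range(1, n // 2 + 1):
        bpm = k * fps / n * 60.0
        if not (lo_bpm <= bpm <= hi_bpm):
            continue
        re = sum(c * math.cos(2 * math.pi * k * i / n)
                 for i, c in enumerate(centered))
        im = sum(c * math.sin(2 * math.pi * k * i / n)
                 for i, c in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm, best_power


# Synthetic example: 10 seconds at 30 fps with a faint 1.2 Hz flicker.
fps = 30
signal = [120.0 + 0.5 * math.sin(2 * math.pi * 1.2 * i / fps)
          for i in range(300)]
bpm, power = dominant_pulse_bpm(signal, fps)
```

On the synthetic signal above, the strongest component lands at the 1.2 Hz bin, which works out to about 72 beats per minute. A perfectly flat signal returns `None` instead. A real detector would need far more than this, including face tracking, noise handling, and a trained model to judge the result.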

FakeCatcher is set to run on a web-based platform that will hopefully allow anyone to access the tech. On the back end it uses Intel's 3rd Gen Xeon Scalable processors combined with a bunch of proprietary software to make the required computations.

If you want to learn more about Intel's FakeCatcher, check out the video at the top of the article. Demir will also be hosting a Twitter Spaces event at 11:30 am PST on Nov 16, where she will go further into the technology used. That's if Twitter's new owner Elon Musk doesn't lay off the people who make Twitter Spaces work before then.

Hope’s been writing about games for about a decade, starting out way back when on the Australian Nintendo fan site Vooks.net. Since then, she’s talked far too much about games and tech for publications such as Techlife, Byteside, IGN, and GameSpot. Of course there’s also here at PC Gamer, where she gets to indulge her inner hardware nerd with news and reviews. You can usually find Hope fawning over some art, tech, or likely a wonderful combination of them both and where relevant she’ll share them with you here. When she’s not writing about the amazing creations of others, she’s working on what she hopes will one day be her own. You can find her fictional chill out ambient far future sci-fi radio show/album/listening experience podcast right here.

No, she’s not kidding.