Love it or hate it, but frame generation is the one major graphics technology that really needs improving in 2026

Upscaling is almost perfect. Now it's frame gen's time.

When it comes to GPUs and graphics technology, I know what I'd like to see in 2026. Super-modern processors that are a substantial leap forward in terms of performance, capabilities, and affordability. We won't get that, of course, because we didn't get that this year. Or last year. But there is something GPU-related that can be a lot better, and that's frame generation.

Ever since GPU upscaling and frame generation first appeared (DLSS 1.0 in 2019, DLSS 3.0 in 2022), I've always held the opinion that if you couldn't tell they were working, other than just from the performance lift, everyone would be happily using them by default. With the first iteration of both technologies and their corresponding alternatives from AMD and Intel, you could very much tell they were being used, and that's only served to tarnish their reputations.

However, when it comes to upscaling these days, it's about as close to perfection as you can get. Assuming it's been properly implemented into a game, of course. When it's all working as intended, Quality or Balanced upscaling at most resolutions does an incredible job, and I always enable it in every game that supports the most recent versions.

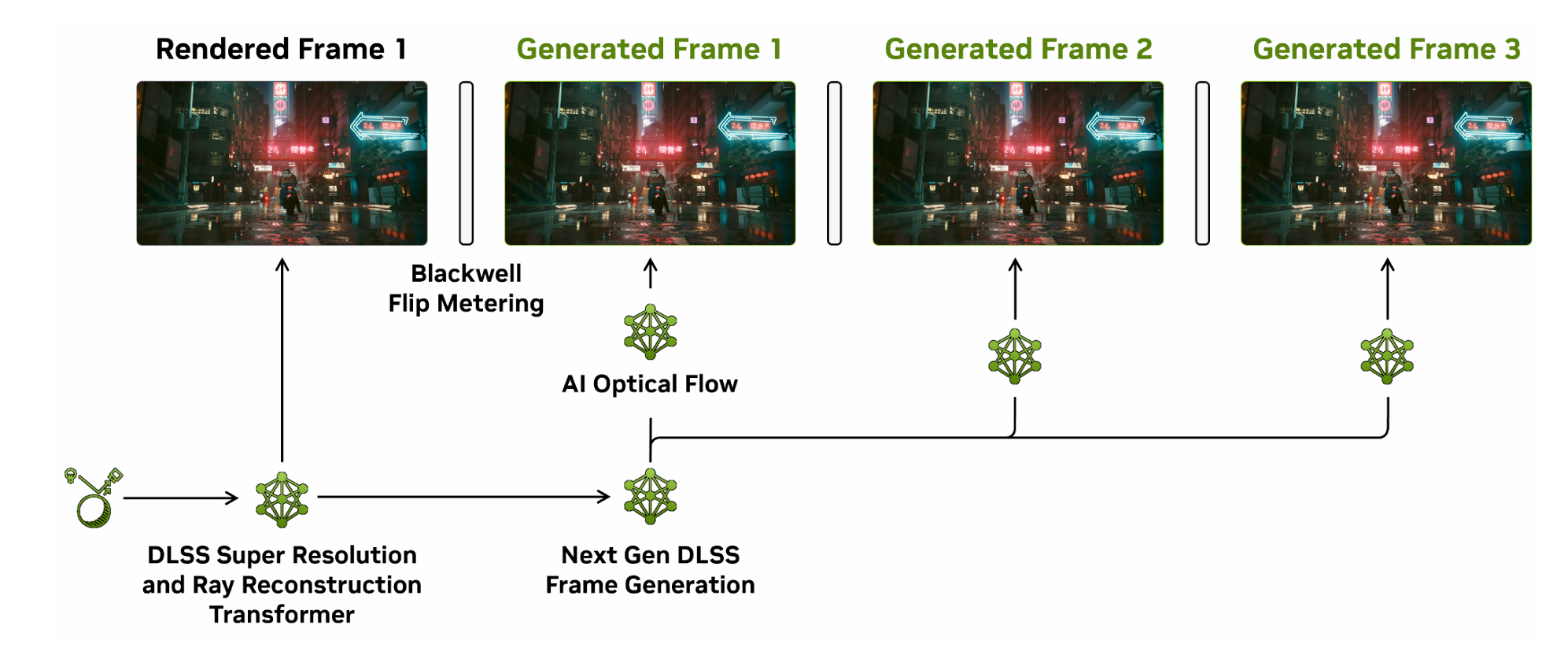

Alas, I can't say the same about frame generation. Don't get me wrong: Nvidia's Multi-Frame Generation in DLSS 4 is extremely good, but even in games that are poster children for DLSS (e.g. Doom: The Dark Ages, Cyberpunk 2077), enabling frame gen requires a conscious decision to ignore its flaws and limitations.

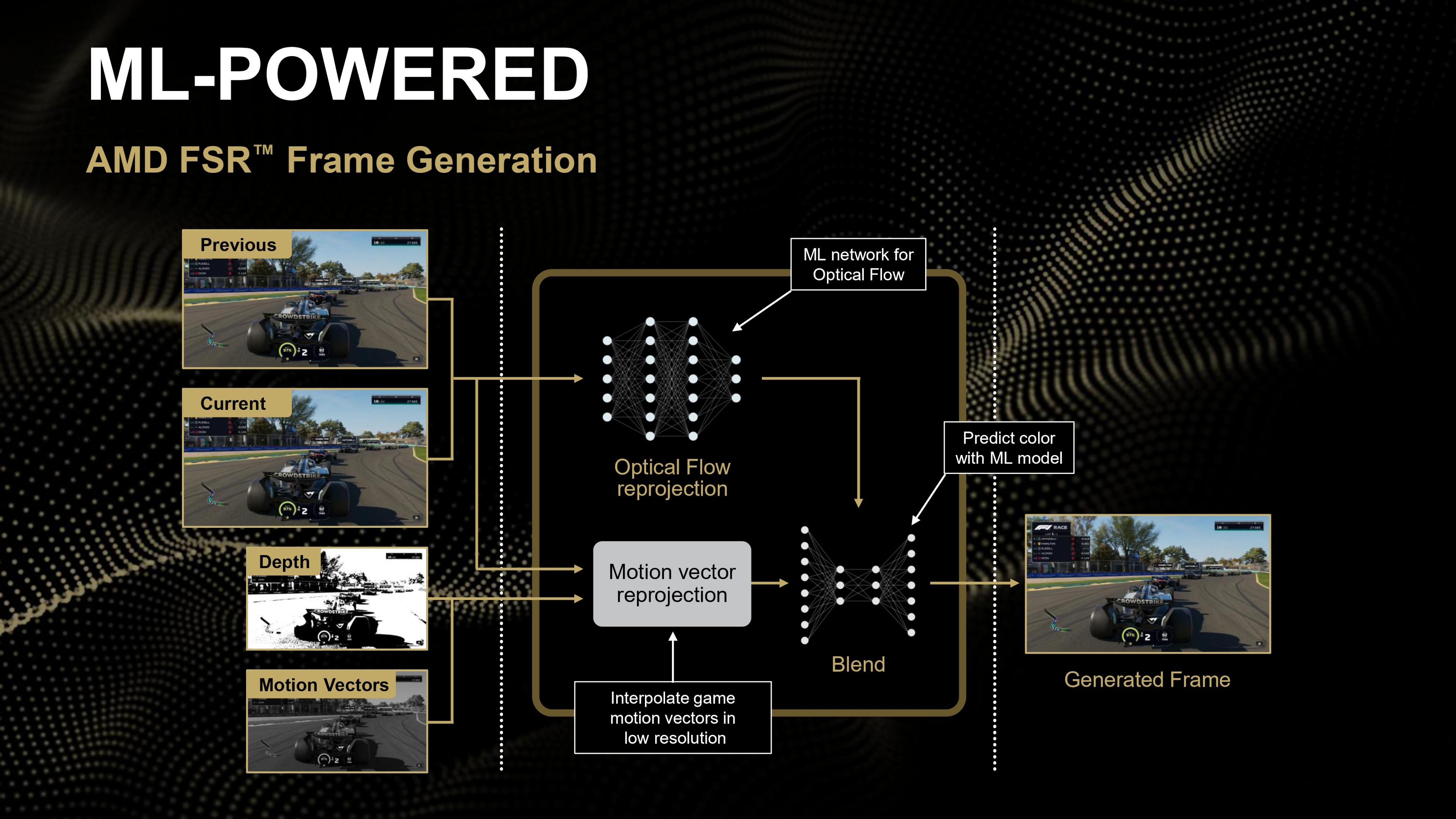

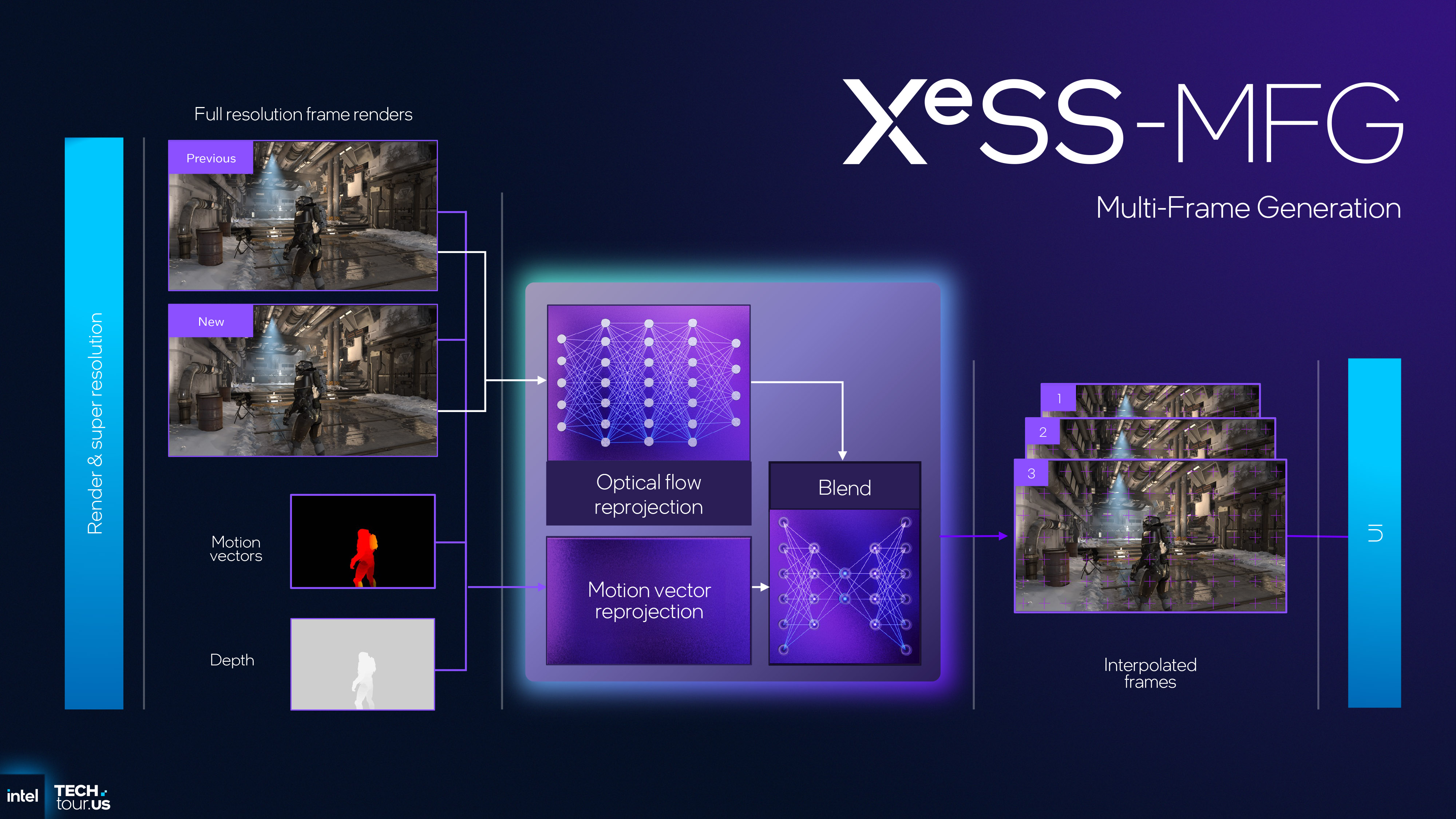

You have to accept that it demands a base frame rate of, ideally, 60 fps before you enable it, to ensure that the added input latency inherent to frame generation is at an agreeable level. And while you're unlikely to notice on-screen gobbledegook when you're gaming at over 120 fps, the neural networks used in DLSS 4 MFG, FSR 4 Frame Generation, and XeSS-MFG will get things wrong at times.

DLSS Quality upscaling + 3X frame generation

Ryzen 7 9800X3D, GeForce RTX 5090, 4K, Ultra preset

There's no way around the latency issue with frame interpolation, unless you start delving into the world of predicting input changes (which is a whole different tech), but I can live with it in certain games. What I can't live with is when frame gen turns my perfectly rendered and upscaled graphics into a wonky, blurry mess.
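To put rough numbers on why the base frame rate matters so much, here's a back-of-the-envelope sketch. It assumes a deliberately simplified model of interpolation that is mine, not any vendor's: the newest rendered frame has to wait while the generated in-between frames are shown first, so it is held back by (factor - 1) / factor of one base frame time. The function names are made up for illustration, and the model ignores the cost of generating the frames, driver queues, and anything Reflex-style tech does to claw latency back.

```python
def frame_time_ms(fps: float) -> float:
    """Time between rendered frames, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def added_latency_ms(base_fps: float, factor: int) -> float:
    """Estimated hold-back of a real frame under the simplified model.

    Assumption: with evenly paced output, the newest real frame is shown
    only after the (factor - 1) generated frames that precede it, so it
    waits (factor - 1) / factor of one base frame time.
    """
    if factor < 2:
        raise ValueError("factor must be 2 or more (2X, 3X, 4X...)")
    return frame_time_ms(base_fps) * (factor - 1) / factor


def output_fps(base_fps: float, factor: int) -> float:
    """Displayed frame rate once generated frames are added."""
    return base_fps * factor


if __name__ == "__main__":
    for base in (30, 60, 120):
        for factor in (2, 3, 4):
            print(
                f"{base:>3} fps base, {factor}X: "
                f"{output_fps(base, factor):>4.0f} fps shown, "
                f"~{added_latency_ms(base, factor):.1f} ms held back"
            )
```

Under those assumptions, 2X at a 60 fps base holds each real frame back by roughly 8.3 ms, whereas the same setting at a 30 fps base costs about 16.7 ms, on top of a game that already feels sluggish. Real-world figures will differ, but the shape of the trade-off is the same: the lower the base frame rate, the more the delay hurts.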

If Nvidia et al. can properly sort out the visual quality of frame generation in 2026, then I'll be happy to use it as intended: a simple switch to seriously boost performance in games that comfortably hit 60 fps. My worry is that they won't, because GPU companies can't usually sell more of their latest graphics cards this way. Instead, they tend to use tech like ray tracing or performance metrics such as FP32 TFLOPS to promote new GPUs.

FSR 4 Frame Generation being tied to RDNA 4 GPUs is the exception for AMD, not the norm, though Nvidia ties pretty much every new DLSS feature to a certain GeForce series. At least Intel's AI-powered interpolator works on every Arc card.

However, we're almost certainly not going to see any new GPU architecture in 2026. Even if Nvidia's Super updates miraculously appear or Intel actually launches its big Battlemage G31-based card, they'll have no actual physical changes inside the chips.

Nvidia's RTX 60-series is at least 14 months away, as RTX Blackwell only launched in January of this year. AMD's RDNA 4 architecture followed a few months later, and while Intel got the jump on both of them, releasing its Battlemage chips in December 2024, there's no sign of any plans for a successor to make an appearance next year.

This leaves the door open for everyone to stay in GPU news headlines by seriously updating their frame generation tech. Upscaling is about as good as it's ever going to get—refinements to the neural networks are all that's left to do—so frame interpolation is perfectly placed to get a nice overhaul.

FSR Performance upscaling + 2X frame generation

Ryzen 9 9900X, Radeon RX 9070 XT, 4K, RT Ultra preset

While I genuinely hope we do, I fear that we're only likely to be fed more AI stuff that has limited appeal or applications. For example, AMD has only just released its FSR 4 Frame Generation, so it's going to be concentrating on finalising FSR Radiance Cache for developers. Nvidia will probably push neural rendering a lot more in 2026, and Intel… well, who knows with Intel.

Right now, I'm not interested in any of that. Actually, I am, but that's just because I'm a graphics nerd, and have been for my entire computing life. But as a PC gamer, I just want fully-functional, works-as-intended performance boosters. I've already got solid upscalers like DLSS and FSR 4, and I've got perfectly good low-latency switches like Reflex. Now, I just want the same level of quality from frame generation.

It's not like I'm asking for cheap RAM or anything like that.

Nick, gaming, and computers all first met in the early 1980s. After leaving university, he became a physics and IT teacher and started writing about tech in the late 1990s. That resulted in him working with MadOnion to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its PC gaming section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but missed writing. Cue four years at TechSpot.com covering everything and anything to do with tech and PCs. He freely admits to being far too obsessed with GPUs and open-world grindy RPGs, but who isn't these days?