I've tested Nvidia's latest ray tracing magic in Cyberpunk 2077 and it's a no-brainer: at worst it's just better-looking, at best it's that and a whole lot more performance

Nvidia's DLSS 3.5 Ray Reconstruction feature fits perfectly with the added realism of Cyberpunk 2077's 2.0 update.

The new Cyberpunk 2077 2.0 update is out and, as part of CDPR and Nvidia's long-standing effort to make it the new Crysis, it features an entirely new take on ray traced realism. Called Ray Reconstruction, this new DLSS feature literally grounds everything in the game, and makes the previous Overdrive ray tracing mode look faintly ridiculous.

And the best thing? It's actually a little lighter on your graphics card and isn't just restricted to the RTX 40-series either. Better visuals and less demanding. Now, that's something we can all get behind, right? Well, unless you've got an AMD card, then I feel bad for you, son.

I covered Nvidia's new method of bringing its AI chops to bear on denoising a real-time ray-traced scene around its Gamescom announcement, but now I've had a chance to go hands-on and see it in action around Night City myself. And it's much more than just smoothing out the otherwise dotty images of a noisy scene; Ray Reconstruction really has a huge impact on the game's believability as you're racing through the rain-slicked streets.

The feature essentially replaces the denoisers in the graphics pipeline (with a corresponding, though sometimes small, boost in performance from dismissing that step) and it fits perfectly with the rest of Cyberpunk 2077's 2.0 update; it's adding another layer of realism to the game. Though I'd argue it's probably fixing something you didn't even realise was an issue in the first place.

This has long been the problem with the introduction of real time ray tracing. It's at once incredibly demanding of your GPU hardware, and sometimes not all that obvious. With traditional rasterised rendering techniques, developers got incredibly good at faking lighting, to the point where when a more realistic method was introduced—ray-traced lighting—it barely looked any different unless you looked just right.

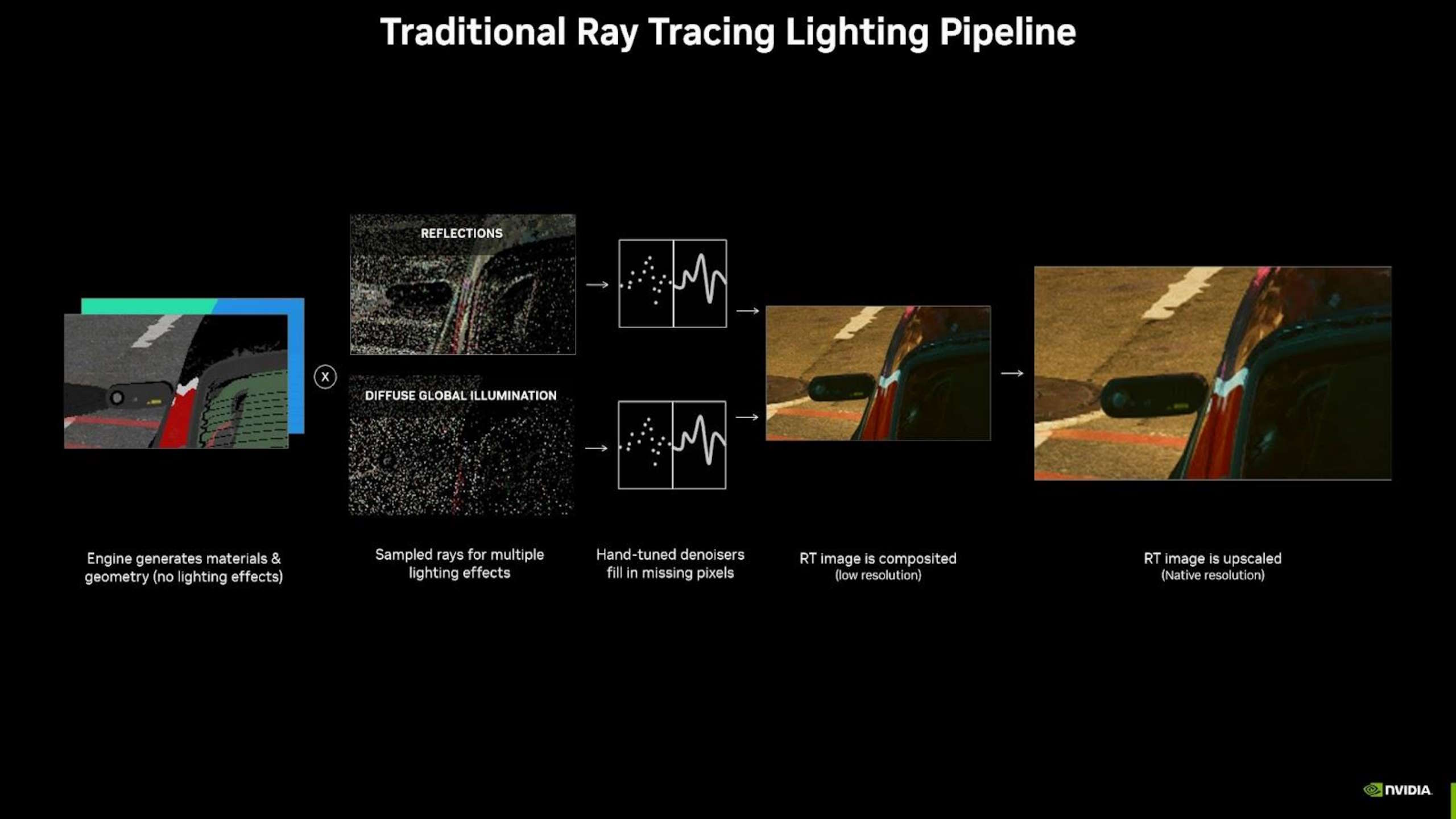

But when you're shown behind the curtain the fakery starts to become more obvious. And the same is true with ray tracing, too, because it's far too computationally expensive to trace every single photon or pixel that would make up a scene. So current ray tracing methods track a much smaller number of rays bouncing off the geometry in your game scene and use that as a representative sample.

And this is what you're left with:

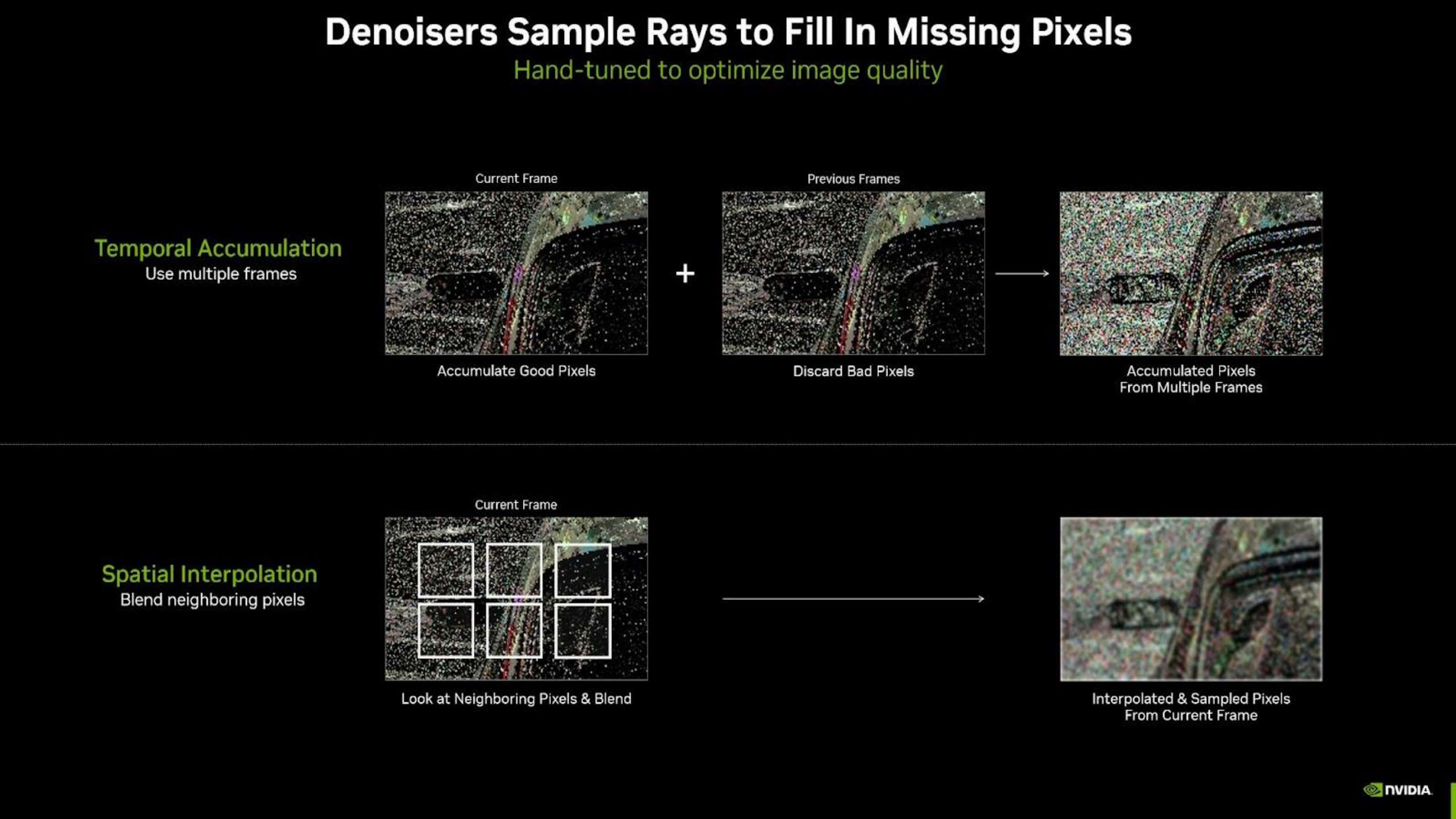

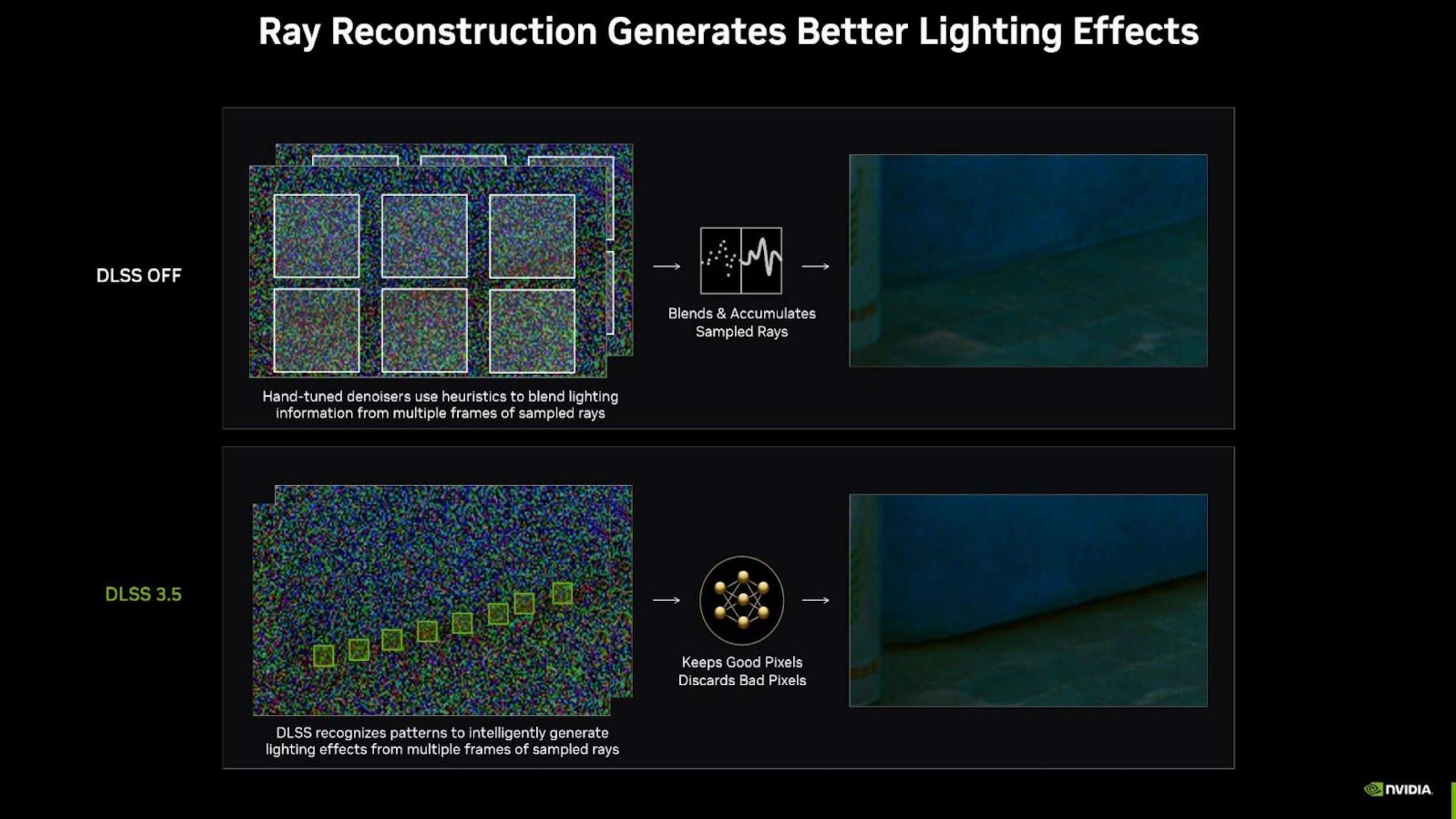

But this noisy image is far from what you want the final look of your game to be. So, from there it's the job of the denoisers to fill in the missing pixels that didn't result from the firing of those few specific light rays. These so-called hand-tuned denoisers use two different methods to fill in the blanks. First, they track and group pixels temporally across multiple frames, essentially allowing for more samples. Then they take neighbouring pixels from these representative samples and interpolate between them, essentially blending them together.

That's how you get the cleaner, not-so-speckly final image in something like Cyberpunk 2077.
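To make those two steps a bit more concrete, here's a toy sketch in Python. It is emphatically not how Cyberpunk 2077's denoisers are implemented; it just assumes a single row of pixels, a couple of random "rays" per pixel, a plain average across frames for the temporal step, and a simple box filter for the spatial blend. Every function name here is made up for illustration.

```python
import random

def noisy_frame(truth, rays_per_pixel, rng):
    """Estimate each pixel from a handful of random 'ray hits'.

    Each ray returns 1.0 with probability equal to the true brightness and
    0.0 otherwise, so a low ray count gives a speckled estimate.
    """
    return [
        sum(1.0 if rng.random() < value else 0.0 for _ in range(rays_per_pixel))
        / rays_per_pixel
        for value in truth
    ]

def temporal_accumulate(frames):
    """Average the same pixel across several frames (more samples over time)."""
    count = len(frames)
    return [sum(pixel_history) / count for pixel_history in zip(*frames)]

def spatial_blend(frame, radius=1):
    """Blend each pixel with its immediate neighbours (a simple box filter)."""
    blended = []
    for i in range(len(frame)):
        window = frame[max(0, i - radius): i + radius + 1]
        blended.append(sum(window) / len(window))
    return blended

def mean_abs_error(estimate, truth):
    """Average per-pixel distance from the 'true' image."""
    return sum(abs(e - t) for e, t in zip(estimate, truth)) / len(truth)

rng = random.Random(2077)
truth = [0.2] * 32 + [0.8] * 32  # a dark region sitting next to a bright one
frames = [noisy_frame(truth, rays_per_pixel=2, rng=rng) for _ in range(8)]

single = frames[-1]                        # what two rays per pixel gives you
accumulated = temporal_accumulate(frames)  # step one: borrow from past frames
denoised = spatial_blend(accumulated)      # step two: blend with neighbours

print(f"one noisy frame:      {mean_abs_error(single, truth):.3f}")
print(f"temporal accumulation: {mean_abs_error(accumulated, truth):.3f}")
print(f"plus spatial blend:    {mean_abs_error(denoised, truth):.3f}")
```

The error drops as frames are accumulated, which is the whole point. The catch is that the box filter also averages across the hard edge between the dark and bright regions, which is a small-scale version of the detail-smearing problem.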

But it's still just another layer of fakery, with Nvidia itself calling this accumulation of pixels in a temporal fashion across multiple frames "stealing rays from the past." That's fine for a static image, where that accumulation of pixels will be more or less the same from one moment to the next, but in motion is where it falls down. The temporal nature of denoising can add in weird graphical effects and ghosting, and then the final blending stage can smear away detail from a scene.
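You can see why motion is the problem with another toy sketch. This one assumes a deliberately naive accumulator that keeps 80 percent of its history each frame and makes no attempt to follow moving objects; real denoisers are far smarter than this, and the names and blend factor are invented for the example. A single bright pixel stands in for a light source.

```python
def render(position, width=12):
    """A single bright pixel on an otherwise dark row."""
    return [1.0 if i == position else 0.0 for i in range(width)]

def accumulate(history, current, blend=0.2):
    """Keep most of the history and mix in a little of the new frame."""
    return [(1.0 - blend) * h + blend * c for h, c in zip(history, current)]

def run(positions):
    """Feed a sequence of light positions through the accumulator."""
    history = render(positions[0])
    for position in positions[1:]:
        history = accumulate(history, render(position))
    return history

def lit_pixels(row, threshold=0.01):
    """Count pixels that are visibly lit in the accumulated result."""
    return sum(1 for value in row if value > threshold)

static = run([3] * 10)         # the light stays put for ten frames
moving = run(list(range(10)))  # the light moves one pixel per frame

print("static light, lit pixels:", lit_pixels(static))
print("moving light, lit pixels:", lit_pixels(moving))
```

When the light sits still, the history and the new frame agree and only one pixel is lit. Once it moves, every position it has passed through is still glowing faintly in the history, while the pixel where the light actually is has not yet built up to full brightness.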

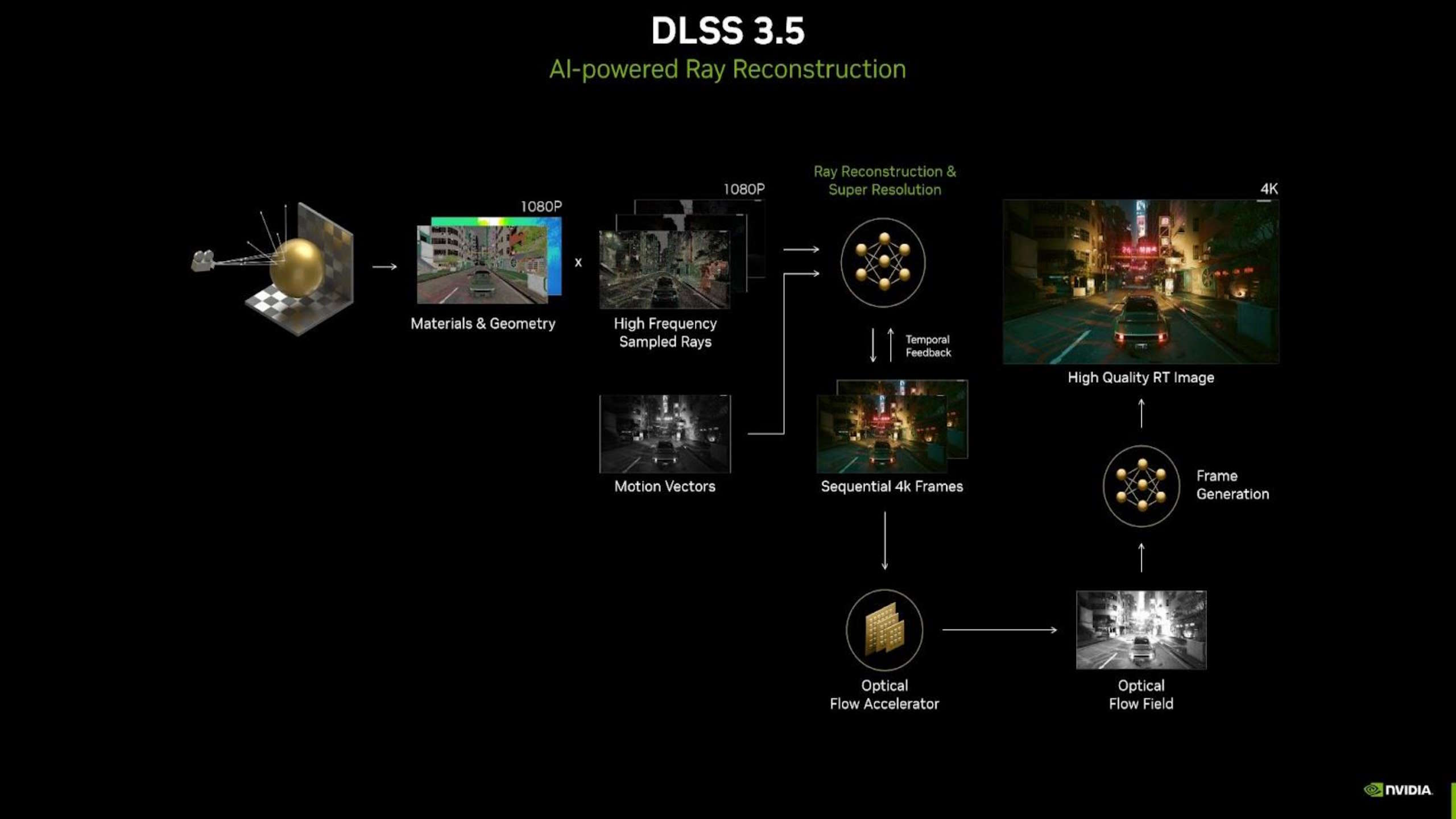

So, how do you fix that? Well, how do you fix anything in 2023? With AI. Yup, throw ChatGPT at it and you're laughing. Okay, not quite. What you're actually getting with Ray Reconstruction is a supercomputer-trained AI neural network beavering away to generate far more accurate pixels in between the sampled rays.

And it all works hand-in-glove with the magic of DLSS, coming in at the same stage of the graphics pipeline as Super Resolution upscaling. It still uses temporal feedback to help generate the higher quality image, but isn't reliant on the optical flow accelerator and instead builds on motion vectors to keep a stable image during periods of fast movement. That's important, because where Frame Generation needs the power of the new optical flow accelerator hardware inside the Ada GPUs, Ray Reconstruction doesn't, which makes it functional across every RTX card around.

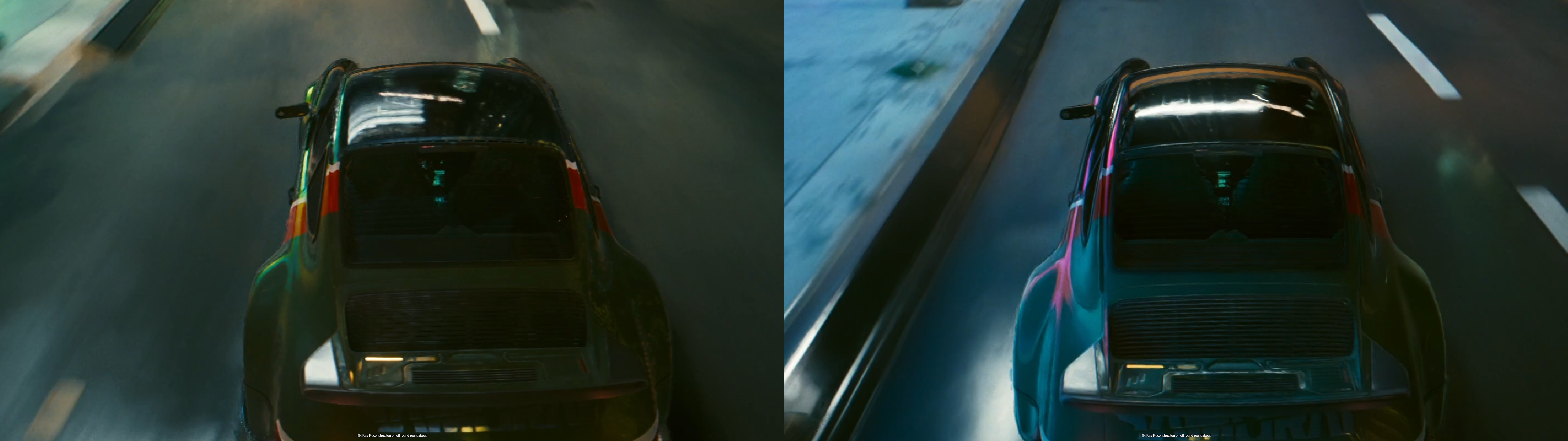

And it's really, really good-looking. When I talk about Ray Reconstruction really grounding everything in Cyberpunk 2077 I mean exactly that. Even in the path traced glory of the Overdrive ray tracing mode, people and vehicles seem to glide over the ground, never really seeming to actually make contact with Night City itself. With Ray Reconstruction enabled, however, they're far more physically connected.

The reflections and improved global illumination effects without the slightly sludgy denoisers give each person a definite point of contact with the gameworld. It's the same with vehicles, where, especially in motion, they're obviously there, not just floating across a painted floor without interacting with it.

And the temporal fakery of the denoisers is most obviously highlighted by the headlights of the myriad vehicles in Cyberpunk 2077. At rest, both images look fine, with a defined cone of light stretching away from the vehicle's headlamps. But put your foot on the floor and something weird happens; suddenly the headlight glow is all around the car, as each frame's pixel data confuses the hell out of the denoisers and tells them the light source is all over the place.

But with Ray Reconstruction that initial cone of light remains completely stable, beaming out to light up the night without bleeding back and forth in time.

Ray Reconstruction, while still part of DLSS, is not necessarily about increasing performance, but it certainly does that at the high end. The game's path tracing mode needs a lot of GPU resources, and it uses a lot of denoisers in the pipeline. With that step gone, I was seeing a serious boost in performance with the RTX 4090 I was using to test the feature.

On Overdrive, with the path tracing tech demo at 4K, and with DLSS set to Quality and Frame Generation switched on, I was getting 69 fps on average. But with Ray Reconstruction enabled that leapt up to 103 fps simply from ditching that denoising step. That makes Ray Reconstruction an absolute must for high-end GPUs running ray tracing.
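For a sense of scale, here's the quick arithmetic on those two averages. The only inputs are the 69 fps and 103 fps figures from my own testing; bear in mind that Frame Generation was on, so these are times per displayed frame rather than a direct measure of what the denoisers cost.

```python
def frame_time_ms(fps):
    """Average time per displayed frame, in milliseconds."""
    return 1000.0 / fps

before_fps = 69.0   # Overdrive, 4K, DLSS Quality, Frame Generation on
after_fps = 103.0   # same settings with Ray Reconstruction enabled

uplift_percent = (after_fps / before_fps - 1.0) * 100.0
saved_ms = frame_time_ms(before_fps) - frame_time_ms(after_fps)

print(f"uplift: {uplift_percent:.1f}%")               # uplift: 49.3%
print(f"saved per displayed frame: {saved_ms:.2f} ms")  # saved per displayed frame: 4.78 ms
```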

As with Frame Generation, Nvidia has unlinked Ray Reconstruction from DLSS, but if you're using ray tracing in any form, in any compatible game, based on my experience, flip that damned switch. For me, it's a no-brainer. The extra level of detail is tangible, if you know where to look, and it's effectively free in performance terms, sometimes better than free. Sure, the effect can still be subtle, but why wouldn't you?

At this point AMD must be cursing Nvidia for consistently coming up with new, effective graphics features that it has to then go ahead and figure out a way to copy. It's doing so with Frame Generation, and based on the efficacy of Ray Reconstruction so far, I'd say AMD would do well to find a way to ape this one, too.

Dave has been gaming since the days of Zaxxon and Lady Bug on the Colecovision, and code books for the Commodore Vic 20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.