OpenAI's internal documents predict $14 billion loss in 2026 according to report

But it's claimed OpenAI will be making Nvidia-style money by 2029.

Internal OpenAI documents predict that the AI specialist is set to bleed a full $14 billion in 2026, according to a new report. It's also claimed that OpenAI will keep racking up huge losses, totalling $44 billion from 2023 through 2028, until 2029. In that year it won't just turn a profit; it will also be generating Nvidia-style revenues.

A new report from The Information claims to have seen internal OpenAI documents setting out various financial performance projections. That $14 billion loss for 2026 is said to be roughly three times worse than early estimates for 2025.

The report claims that OpenAI expects to lose $44 billion from 2023 through the end of 2028, before turning a profit of $14 billion in 2029. Somewhat incongruously, The Information also says that OpenAI's cash burn is not as bad as previously thought, with the company tearing through a mere $340 million in the first half of the most recent financial year. The report doesn't explain how that squares with overall losses running into the billions.

The report further claims that OpenAI plans to spend an astonishing $200 billion through the end of the decade, 60% to 80% of which will be spent on training and running AI models.

So, how does OpenAI eventually make money? The report says internal forecasts predict the for-profit part of OpenAI will hit $100 billion in annual revenue in 2029, up from an estimated $4 billion in 2025.

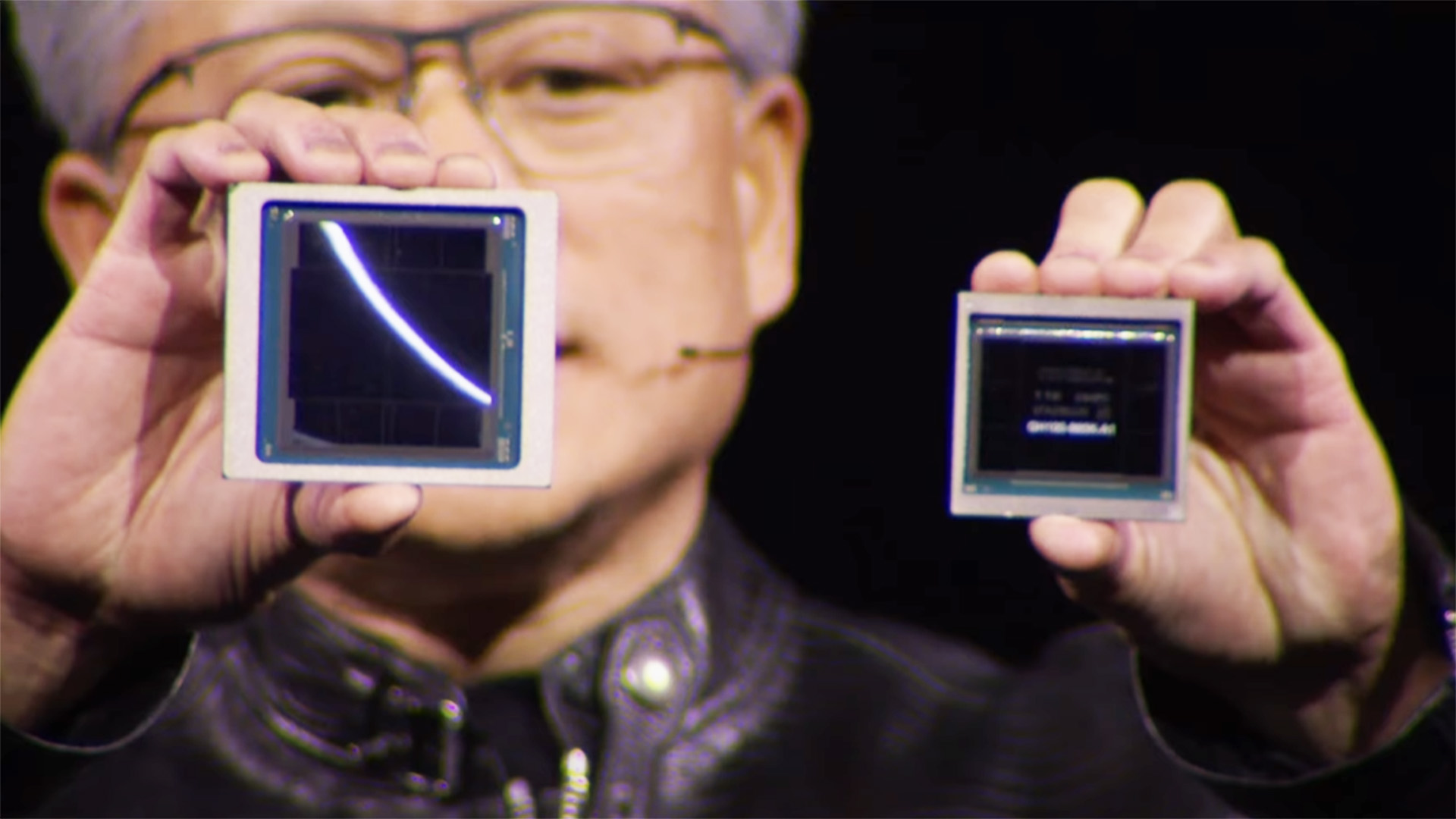

At this point, the numbers are getting silly. So, let's put that $100 billion in revenue into context. In 2025, Nvidia had revenues of around $130 billion as a consequence of holding a near-total monopoly over perhaps the largest tech hardware boom in human history. And OpenAI is expecting to more or less match that in about four years. Uh huh.

The revenue split for that $100 billion is said to be just over 50% from ChatGPT, roughly 20% from sales of AI models to developers through APIs and another 20% or so from "other products", which include video generation, search and mooted new services including AI research assistants.


It's also thought that the cost of inference, which is running AI models as opposed to training them, is coming down fast. Intriguingly, OpenAI expects to spend less on acquiring training data, too. That's forecast to cost $500 million this year, but taper down to $200 million annually towards the end of the decade.

Exactly what that says about how OpenAI trains its new models and what data it uses isn't clear. But it could suggest a move to more recursive training on AI-generated data.

Anywho, all one can say for sure is that a huge amount of money is involved. Whether OpenAI will come good financially—or for the human race, generally—well, that's a totally different matter.


Jeremy has been writing about technology and PCs since the 90nm Netburst era (Google it!) and enjoys nothing more than a serious dissertation on the finer points of monitor input lag and overshoot followed by a forensic examination of advanced lithography. Or maybe he just likes machines that go “ping!” He also has a thing for tennis and cars.
