Microsoft, Meta and OpenAI back AMD's monstrous new 153 billion-transistor alternative to Nvidia's AI chips

The big guns in the AI industry turn out for AMD's MI300X.

AMD's launch event for its new MI300X AI platform was backed by some of the biggest names in the industry, including Microsoft, Meta, OpenAI and many more. Those big three all said they planned to use the chip.

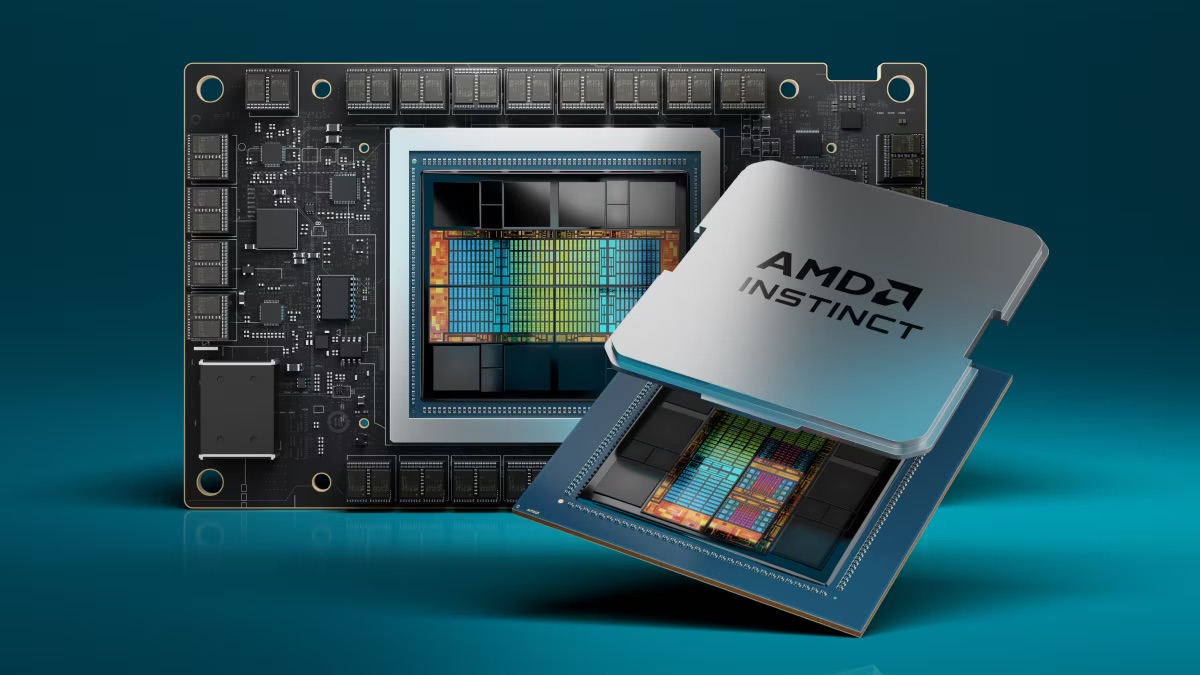

AMD's CEO Lisa Su bigged up MI300X, describing it as the most complex chip the company has ever produced. All told, it contains 153 billion transistors, significantly more than the 80 billion of Nvidia's all-conquering H100 GPU.

It's also built from no fewer than 12 chiplets on a mix of 5nm and 6nm nodes using what AMD says is the most advanced packaging in the world. The base layer of the chip consists of four big IO dies incorporating Infinity Cache, PCIe Gen 5, HBM interfaces and Infinity Fabric. On top of those sit eight CDNA 3 accelerator chiplets, or "XCDs", for a total of 1.3 petaflops of raw FP16 compute power.

Either side of those stacked dies are eight HBM3 memory modules for a total of 192GB of memory. So, yeah, this thing is a monster.

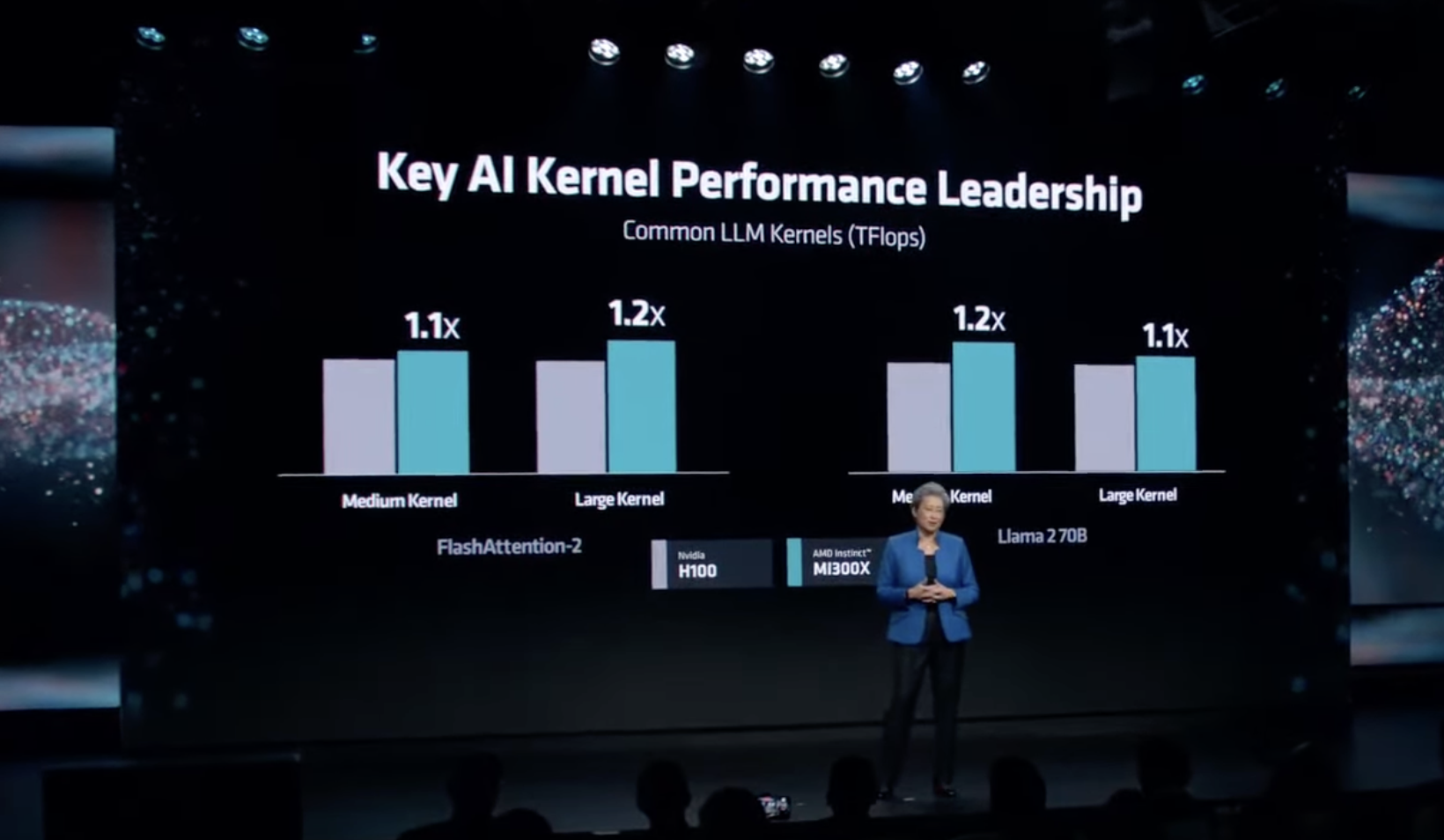

Overall, AMD claims MI300X has 2.4 times the memory capacity, 1.6 times the memory bandwidth and 1.3 times the raw compute power of Nvidia's H100. In actual AI training and inference benchmarks, AMD generally claims that MI300X is about 1.2 times faster than H100.

In reality, so long as MI300X is broadly competitive on the hardware side, the finer details of how it compares probably don't matter all that much, because software support will arguably be more important. Nvidia's CUDA platform is far better supported thus far than AMD's ROCm, so the latter has much to prove.

It's worth noting that Nvidia employs more software engineers than hardware engineers, which speaks volumes about where Nvidia places value and importance.


All that said, AMD did emphasise that its memory capacity advantage means you can do a lot more with MI300X than with Nvidia's H100. A fully built-up server node of eight MI300Xs delivers 1.5TB of memory, compared with 640GB for the equivalent Nvidia H100 HGX node.
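The node-level numbers follow directly from per-chip capacity, and they line up with AMD's 2.4x memory claim. Here's a minimal Python sketch of that arithmetic, assuming 192GB of HBM3 per MI300X and 80GB per H100 (the 640GB node figure divided by eight GPUs). The constant and function names are purely illustrative.

```python
# Assumed per-GPU memory: 192GB per MI300X (from the article) and 80GB per H100
# (the article's 640GB eight-GPU node figure divided by eight).
MI300X_MEMORY_GB = 192
H100_MEMORY_GB = 80
GPUS_PER_NODE = 8  # both vendors' server nodes use eight GPUs


def node_memory_gb(per_gpu_gb: int, gpus: int = GPUS_PER_NODE) -> int:
    """Total GPU memory in a node built from `gpus` identical accelerators."""
    return per_gpu_gb * gpus


amd_node = node_memory_gb(MI300X_MEMORY_GB)    # 1536GB, roughly 1.5TB
nvidia_node = node_memory_gb(H100_MEMORY_GB)   # 640GB
ratio = amd_node / nvidia_node                 # same 2.4x as the per-chip ratio

print(f"MI300X node: {amd_node}GB (~{amd_node / 1000:.1f}TB)")
print(f"H100 node:   {nvidia_node}GB")
print(f"Capacity advantage: {ratio:.1f}x")
```

Because both nodes hold the same number of GPUs, the node-level advantage is simply the per-chip ratio of 192GB to 80GB.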

How successful MI300X will be very much remains to be seen. While it's obviously promising to see the likes of Microsoft, Meta and OpenAI supporting AMD's big AI launch, we don't know how many chips they'll each actually be buying.

As we reported, it's estimated that Microsoft alone bought £5 billion worth of Nvidia H100 GPUs in the most recent completed quarter. What this event doesn't make clear is whether companies like Microsoft plan to spend billions with AMD, or whether their involvement at this point is as much about keeping Nvidia on its toes, by appearing willing to buy a competing product, as it is about actually buying significant quantities of AMD's new GPU.


Equally, AMD is being very conservative about its predictions for revenue from MI300X. But does that reflect its true ambitions, or is it attempting to set expectations low and then smash through them? As ever, watch this space.

What any of this means for PC gaming is equally foggy, if not more so. If AMD can make serious money on AI chips, then in theory that puts it in a better position to invest in gaming GPU technology. On the other hand, it might also divert AMD's attention and, indeed, its access to cutting-edge manufacturing capacity at its key foundry partner TSMC.

All of which means we'll just have to wait and see how it pans out. In general, we think AMD doing well in AI will be a good thing for PC gamers if it makes for a healthier, stronger AMD. But equally, there are so many variables at play that it's pretty hard to be sure. If you're interested in finding out more, you can watch the whole launch event here.

Jeremy has been writing about technology and PCs since the 90nm Netburst era (Google it!) and enjoys nothing more than a serious dissertation on the finer points of monitor input lag and overshoot followed by a forensic examination of advanced lithography. Or maybe he just likes machines that go “ping!” He also has a thing for tennis and cars.