Just when you thought PC HDR gaming couldn't possibly get more awkward, this happens

Turns out HDR monitor performance can vary depending on your choice of AMD or Nvidia GPU.

Are you ready for yet another battlefield in the war between AMD and Nvidia? Well, it turns out you can get very different results when driving certain HDR monitors depending on whether you are using AMD or Nvidia graphics hardware.

As Monitors Unboxed explains, the reasons for this are complex. It's not necessarily that AMD or Nvidia is better. But it certainly adds a layer of complexity to the whole "what graphics card do I buy?" conundrum, as if things weren't already complicated enough, what with ray tracing, FSR versus DLSS, and all the other stuff you have to weigh up when choosing a new graphics card.

The investigation here centres on the Alienware 34 AW3423DWF. That's the slightly cheaper version of Alienware's 34-inch OLED gaming monitor which ditches Nvidia's G-Sync tech for more generic adaptive refresh and AMD FreeSync.

The G-Sync equipped non-F Alienware 34 AW3423DW actually performs differently with HDR, which gives you an idea of how complicated this can all get. Anyway, the problem involves the AW3423DWF's performance when using the HDR 1000 mode.

That's the mode you need to use to achieve Alienware's claimed peak brightness of 1000 nits, as opposed to the True Black HDR mode, which tops out at just 400 nits. By default, the HDR 1000 mode simply ramps up the brightness of everything on screen.

That's not actually ideal. Instead, HDR 1000 should increase the brightness of the brightest objects but leave darker objects that are intended to hit brightness levels below 400 nits alone. That's the point of HDR—to increase the contrast between bright and dark objects, not just ramp up the overall brightness.
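To make that distinction concrete, here's a rough toy model in Python. It is not a measurement of the AW3423DWF or a description of its firmware: the function names are made up for illustration, and the flat 2.5x gain (1000 divided by 400) is purely an assumption standing in for "ramps up the brightness of everything". It only shows why a uniform boost differs from tracking the brightness the content actually asks for.

```python
# Toy model only: the numbers below are illustrative assumptions,
# not measured behaviour of any real monitor.
PEAK_NITS = 1000.0             # claimed peak in the HDR 1000 mode
TRUE_BLACK_PEAK_NITS = 400.0   # peak in the True Black HDR mode


def uniform_boost(target_nits, gain=PEAK_NITS / TRUE_BLACK_PEAK_NITS):
    """Hypothetical 'brighten everything' behaviour: scale every
    target level by the same gain, then clip at the panel peak."""
    return min(target_nits * gain, PEAK_NITS)


def accurate_tracking(target_nits):
    """Hypothetical accurate behaviour: show each level at the
    brightness the content asks for, clipping only at the panel peak."""
    return min(target_nits, PEAK_NITS)


if __name__ == "__main__":
    for target in (1.0, 50.0, 100.0, 400.0, 1000.0):
        print(
            f"target {target:7.1f} nits | "
            f"boosted {uniform_boost(target):7.1f} | "
            f"accurate {accurate_tracking(target):7.1f}"
        )
```

In this sketch a 100-nit object comes out at 250 nits under the uniform boost but stays at 100 nits with accurate tracking, while a 1000-nit highlight lands at 1000 nits either way. The gap between highlights and everything else is what shrinks.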

The difference between AMD and Nvidia GPUs comes when you attempt to adjust the Alienware to achieve more accurate brightness. In the settings menu, there's a "source tone mapping" option, which essentially tells the monitor to follow the brightness curve encoded in the source content.

Keep up to date with the most important stories and the best deals, as picked by the PC Gamer team.

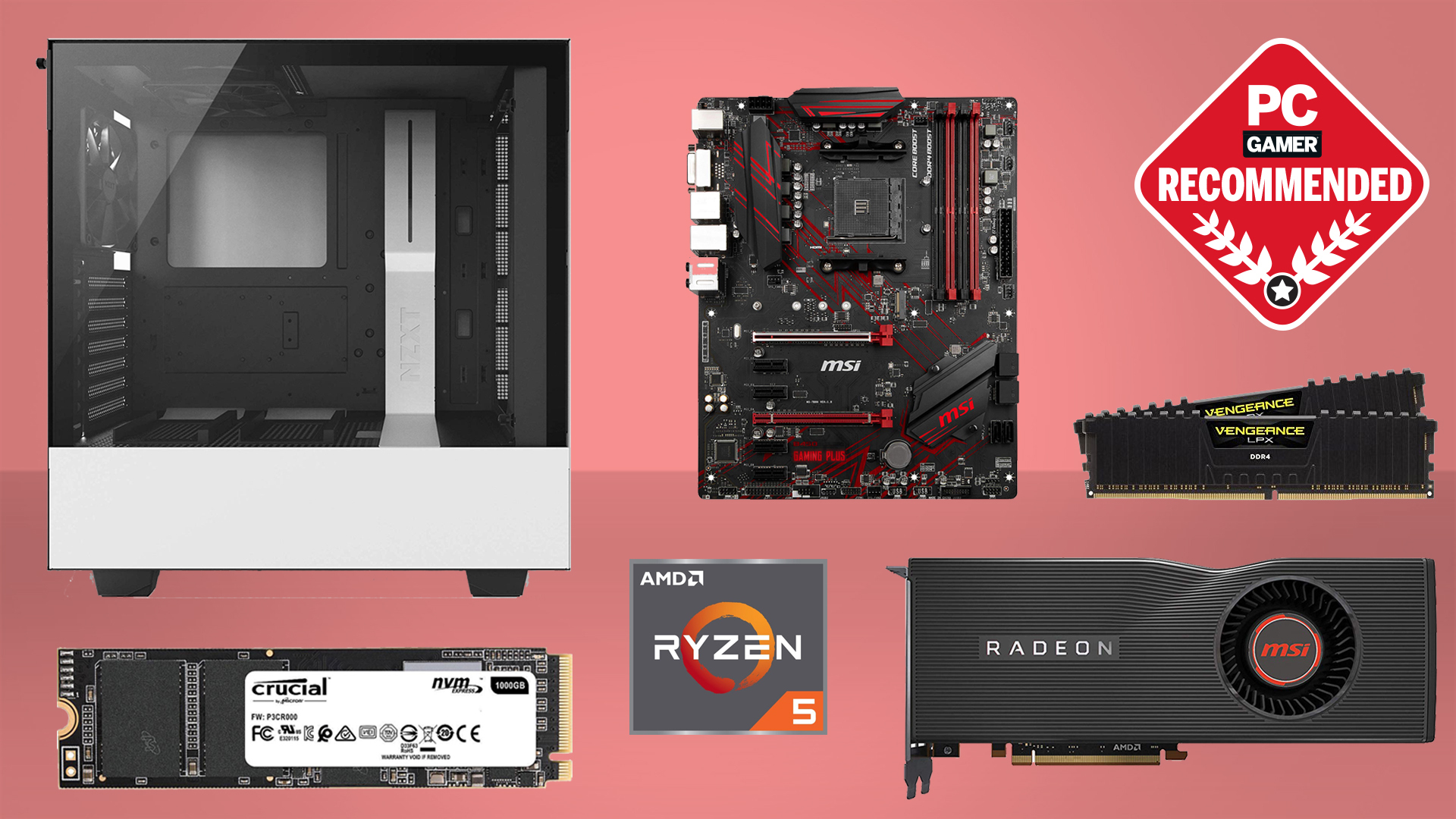

Best CPU for gaming: The top chips from Intel and AMD

Best gaming motherboard: The right boards

Best graphics card: Your perfect pixel-pusher awaits

Best SSD for gaming: Get into the game ahead of the rest

Problem is, that option is only available to Nvidia GPU users. The reason why? According to Monitors Unboxed, it's because AMD GPUs instead use AMD's own tone mapping, which is a part of the FreeSync feature set.

To be clear, while this problem isn't universal to all HDR monitors, it is indicative of the complexities of implementing HDR. How a given monitor implements tone mapping pipelines for HDR content can in turn mean you get very different results depending on the GPU being used.

Since its introduction on the PC, HDR has been a hit-and-miss affair. But this is the first time we've seen reports of dramatically different HDR performance depending on choice of GPU vendor. At the very least, we'll keep our scanners peeled for how the new generation of OLED and mini-LED HDR screens performs with both AMD and Nvidia GPUs when running HDR content.

You can check out the full video on Monitors Unboxed if you want to learn more.

Jeremy has been writing about technology and PCs since the 90nm Netburst era (Google it!) and enjoys nothing more than a serious dissertation on the finer points of monitor input lag and overshoot followed by a forensic examination of advanced lithography. Or maybe he just likes machines that go “ping!” He also has a thing for tennis and cars.