Nvidia's promising '4K 240 Hz path traced gaming' with DLSS 4.5 but do you want 6x Multi Frame Gen?

It's convinced the 2nd gen Transformer model is good enough that you will.

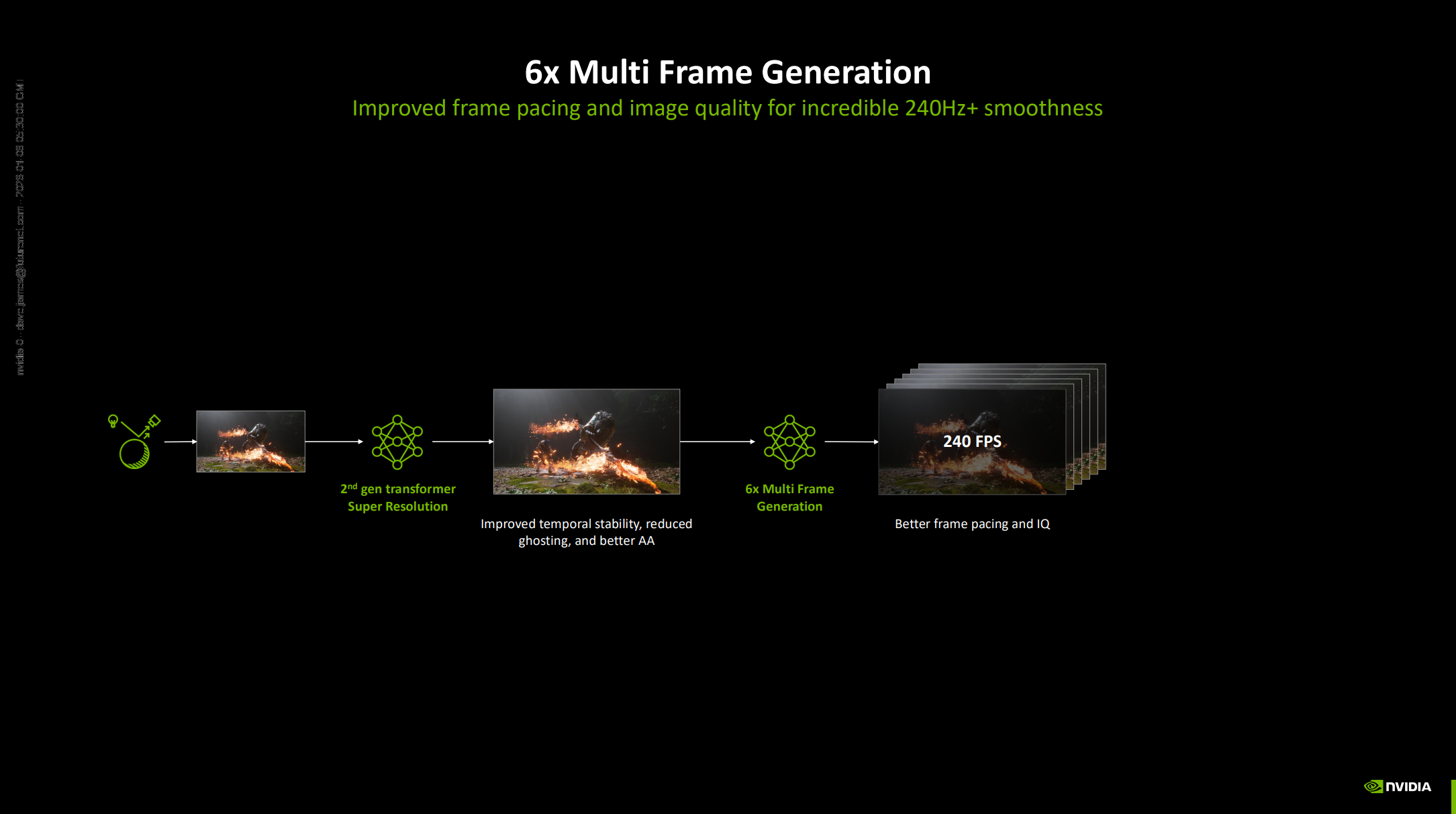

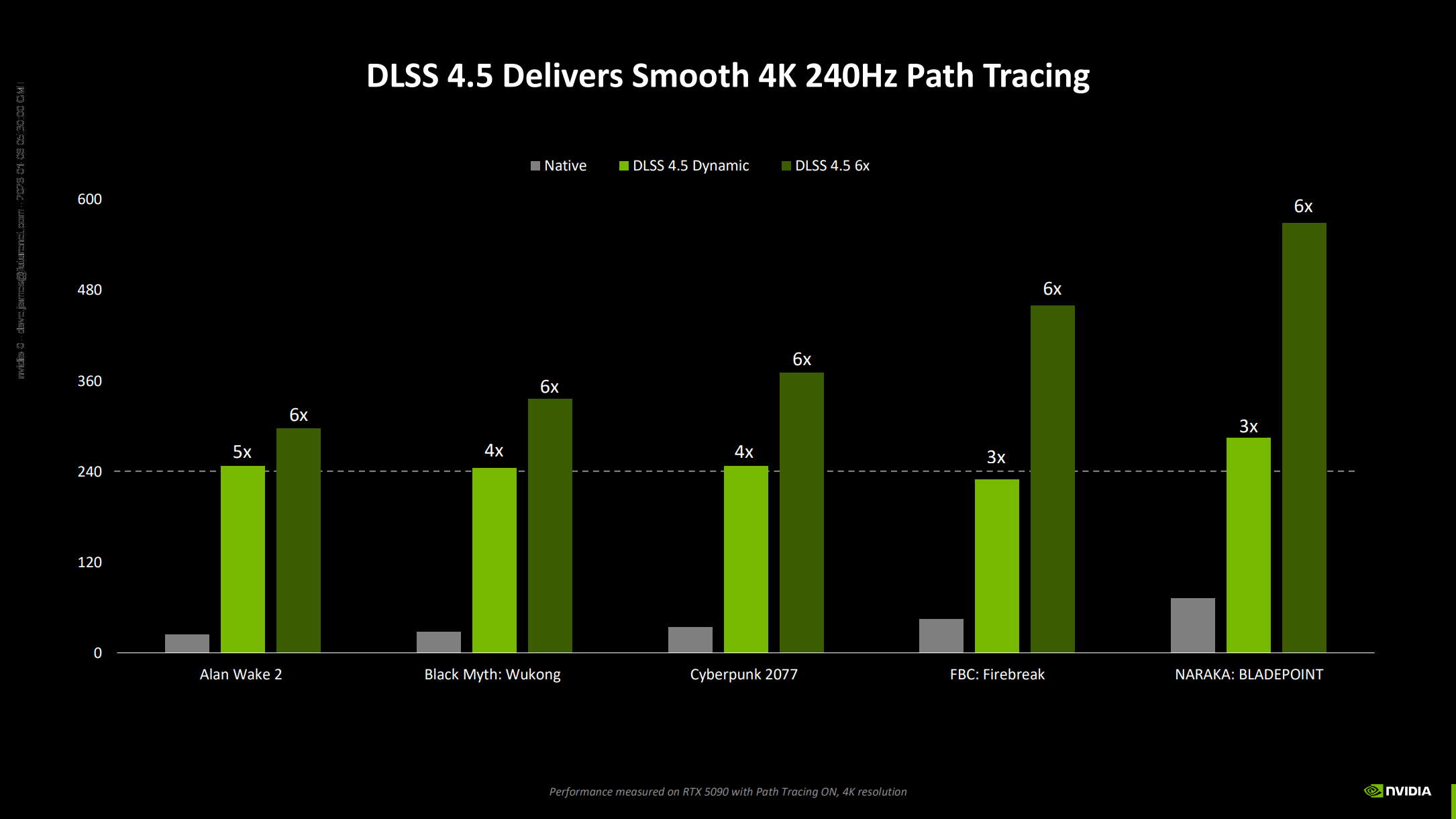

Nvidia's new DLSS 4.5 announcement comes with the promise of 4K 240 Hz path-traced gameplay, and you know there's only one way we're going to get there right now: AI. With a combination of a second generation transformer model and an expanded Multi Frame Generation feature offering up to 6x frame gen, the green team reckons it can deliver "incredible 240 Hz+ smoothness" in some of the most graphically intensive games around.

Though, I'm guessing your mileage may vary.

MFG (or Multi Frame Generation if you're not into the whole brevity thing) was introduced at CES last year as one of the fancy new features of the RTX Blackwell GPU generation. It follows the now-familiar pattern of the original DLSS Frame Generation, using a mixture of frame interpolation, optical flow calculations, and AI image generation to smooth out your gameplay.

Except with MFG on RTX Blackwell chips you're adding in up to three completely generated frames in between each actually rendered one. It also now uses a new optical flow AI model instead of dedicated accelerator hardware, alongside an enhanced display engine to help frame pacing.

The headline-grabbing update for the RTX Blackwell architecture at CES this year, however, is that Nvidia is convinced it can slip up to five extra frames between each rendered frame to give you 4K gaming at 240 Hz even with path-traced games.
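As a rough sanity check on that claim, the arithmetic can be sketched in a few lines of Python. This assumes the simplest possible model, where every rendered frame is followed by a fixed number of generated ones and the displayed rate is just the rendered rate times the MFG factor. The function names are made up for illustration and aren't part of any Nvidia API.

```python
def generated_frames(mfg_factor: int) -> int:
    """Generated frames slotted in after each rendered frame (6x -> 5)."""
    if mfg_factor < 1:
        raise ValueError("mfg_factor must be at least 1")
    return mfg_factor - 1


def displayed_fps(rendered_fps: float, mfg_factor: int) -> float:
    """Displayed frame rate, assuming a fixed multiplier with no overhead."""
    if mfg_factor < 1:
        raise ValueError("mfg_factor must be at least 1")
    return rendered_fps * mfg_factor


def rendered_fps_needed(target_fps: float, mfg_factor: int) -> float:
    """Rendered frame rate required to reach target_fps at a given factor."""
    if mfg_factor < 1:
        raise ValueError("mfg_factor must be at least 1")
    return target_fps / mfg_factor


if __name__ == "__main__":
    for factor in (2, 3, 4, 6):
        print(f"{factor}x MFG: {generated_frames(factor)} generated per rendered, "
              f"{rendered_fps_needed(240, factor):.0f} fps rendered for 240 Hz")
```

Under that assumption, 6x MFG needs about 40 rendered frames per second to fill a 240 Hz display, against 60 at the previous 4x ceiling. It says nothing about latency or image quality, which is where the real questions lie.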

Whether it can convince you will be the key, however, because that's a bold claim, and I can already feel many a gamer bristling at the thought of upping the ante of frame generation to such an extent. Even as someone who enjoys frame gen on a beefy enough GPU, I will admit my credulity is feeling as stretched as yours right now. But Nvidia's banking on the new transformer model powering the DLSS 4.5 Super Resolution upscaler to help fix the visual artifacts associated with frame generation.

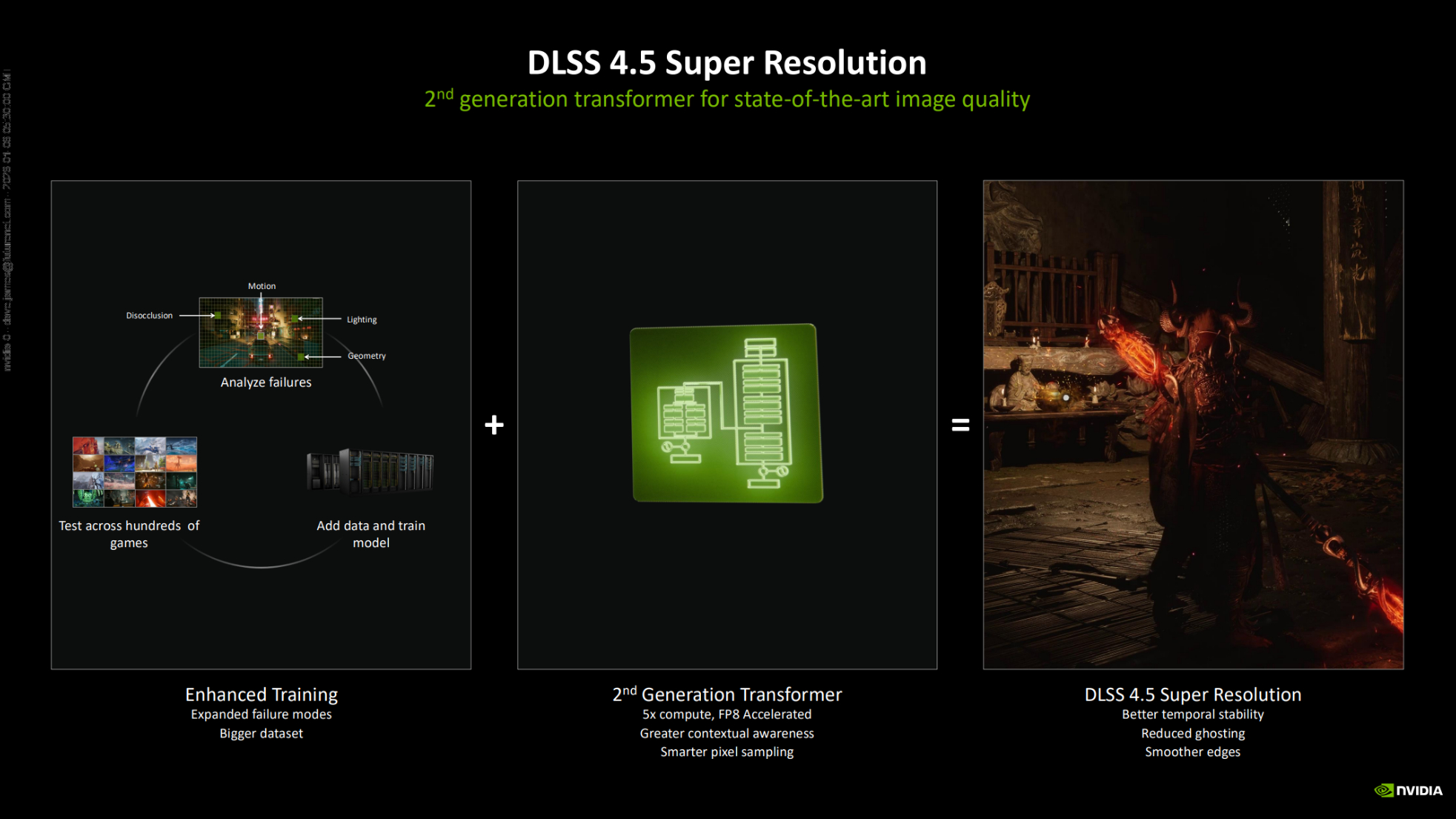

We're promised better temporal stability, reduced ghosting, and smoother edges with DLSS 4.5 thanks to a mix of that second-gen transformer model as well as improved training for the model itself. At last year's RTX Blackwell CES event, Nvidia's VP of applied deep learning research, Bryan Catanzaro, noted that it has a supercomputer "with many 1000s of our latest and greatest GPUs, that is running 24/7, 365 days a year improving DLSS." And Nvidia's had another 12 months to make that training dataset even bigger and get even better at analysing just where its upscaler is going wrong.

"When the DLSS model fails it looks like ghosting or flickering or blurriness," Catanzaro tells us. "And, you know, we find failures in many of the games we're looking at and we try to figure out what's going on, why does the model make the wrong choice about how to draw the image there?

"We then find ways to augment our training data set. Our training data sets are always growing. We're compiling examples of what good graphics looks like and what difficult problems DLSS needs to solve.

"We put those in our training set, and then we retrain the model, and then we test across hundreds of games in order to figure out how to make DLSS better. So, that's the process."

There's also more compute being used for DLSS now than even the previous generation of transformer model, which in itself used four times more compute than the old convolutional neural network (CNN) models which DLSS used to be built on. We're told the second-gen transformer now uses five times more compute (presumably than CNN), including greater contextual awareness and smarter pixel sampling.

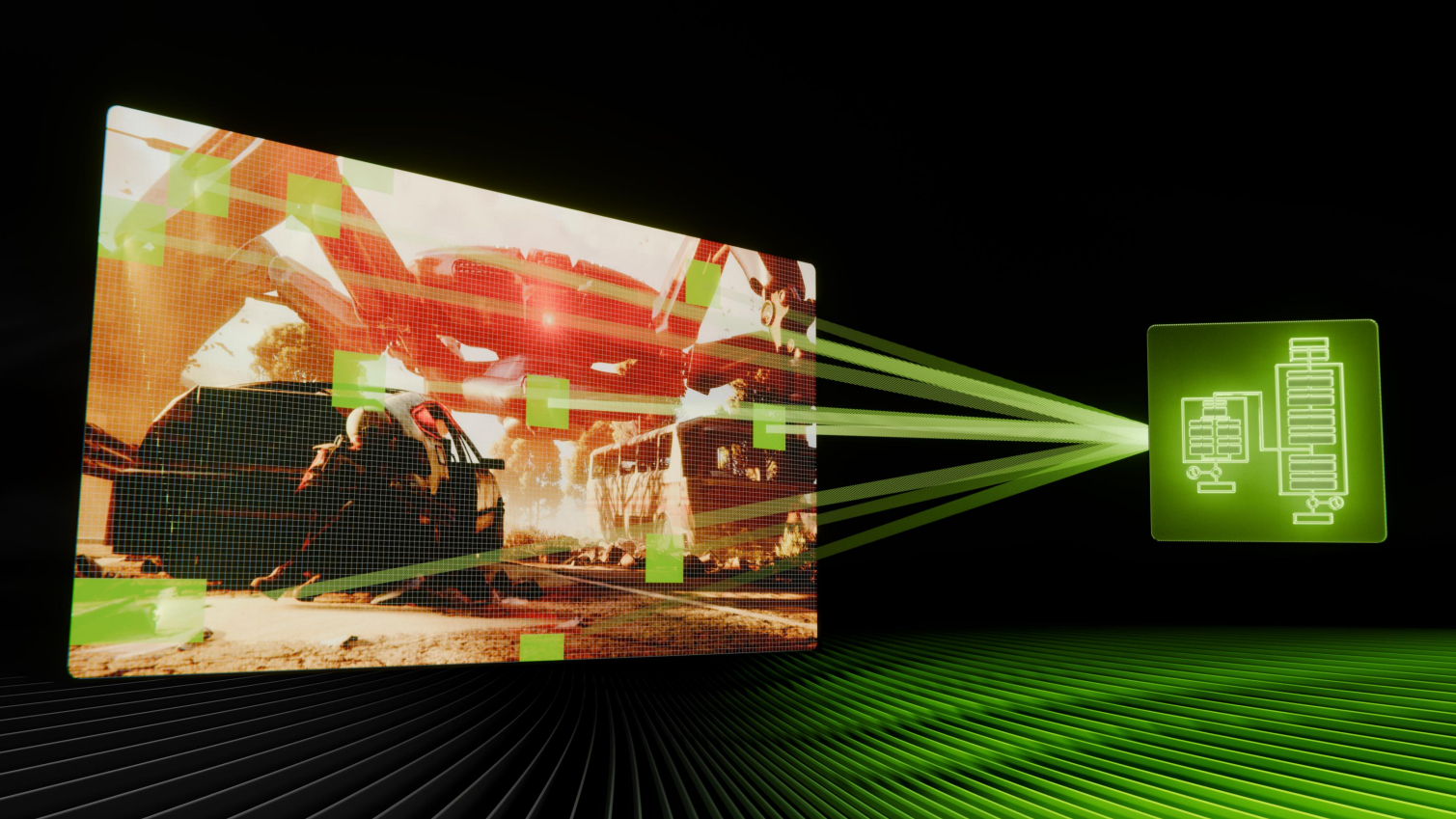

This is essentially where the transformer models are smarter than the old CNN one. CNN models were fine for analysing big images, but transformer models are better at looking at the finer pixel details and the data, which means compute resources can be spent more efficiently. Though in its first iteration it was certainly far from perfect, as you can see from my own testing of the 1st gen transformer model.

"The idea behind transformer models," Catanzaro explains, "is that attention—how you spend your compute and how you analyse data—should be driven by the data itself. And so the neural network should learn how to direct its attention in order to look at the parts of the data that are most interesting or most useful to make decisions.

"And, when you think about DLSS, you can imagine that there are a lot of opportunities to use attention to make a neural graphics model smarter, because some parts of the image are inherently more challenging."
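For anyone unfamiliar with the term, the textbook attention mechanism used in transformer models can be sketched in a few lines. To be clear, this is generic scaled dot-product attention, not Nvidia's DLSS model, whose architecture isn't described here. The toy vectors are invented purely for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Generic scaled dot-product attention for a single query vector.

    Each key is scored against the query, the scores become weights via
    softmax, and the output is the weighted average of the values.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    width = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(width)]
    return weights, output


if __name__ == "__main__":
    # Hypothetical toy data: the first key matches the query, the second doesn't.
    weights, output = attention(
        query=[1.0, 0.0],
        keys=[[1.0, 0.0], [0.0, 1.0]],
        values=[[10.0], [20.0]],
    )
    print(weights, output)
```

The point is that the weights come from the data itself: inputs that match what the query is looking for get more of the output, which is the "spend your compute where it matters" idea Catanzaro describes.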

Nvidia must believe it has made the graphics model smart enough now that it can get past those occasional visual issues and get away with turning the MFG dial up to 6x and not end up with a laggy artifact-ridden gaming experience.

You will still need an RTX 50-series GPU capable of delivering 60 fps in your chosen path-traced game to take advantage of the extra frame gen levels, however. Even if you're getting hugely inflated frame rate figures, that is all for naught if your PC latency gets into triple figures.

I've experienced that throughout all of my RTX Blackwell GPU testing from last year, where it became clear further down the stack just how limited MFG gets when the input frame rate is low and PC latency is high.

That's the same situation for any level of frame generation—whether on Nvidia or AMD—and it's certainly not the perfect panacea for poor gaming performance it might have at first appeared; you still need to have a whole heap of graphical grunt to make it a functional experience.

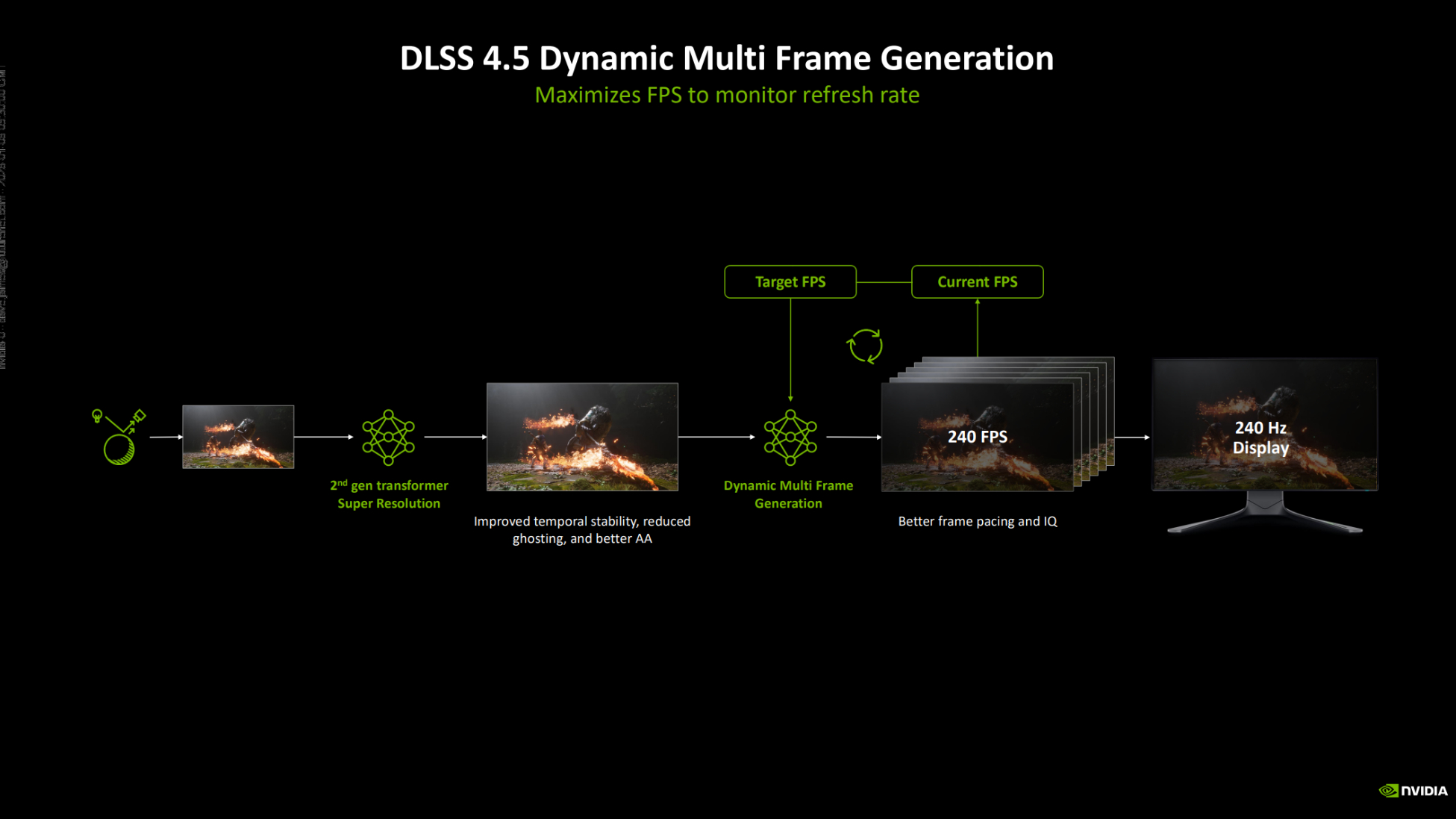

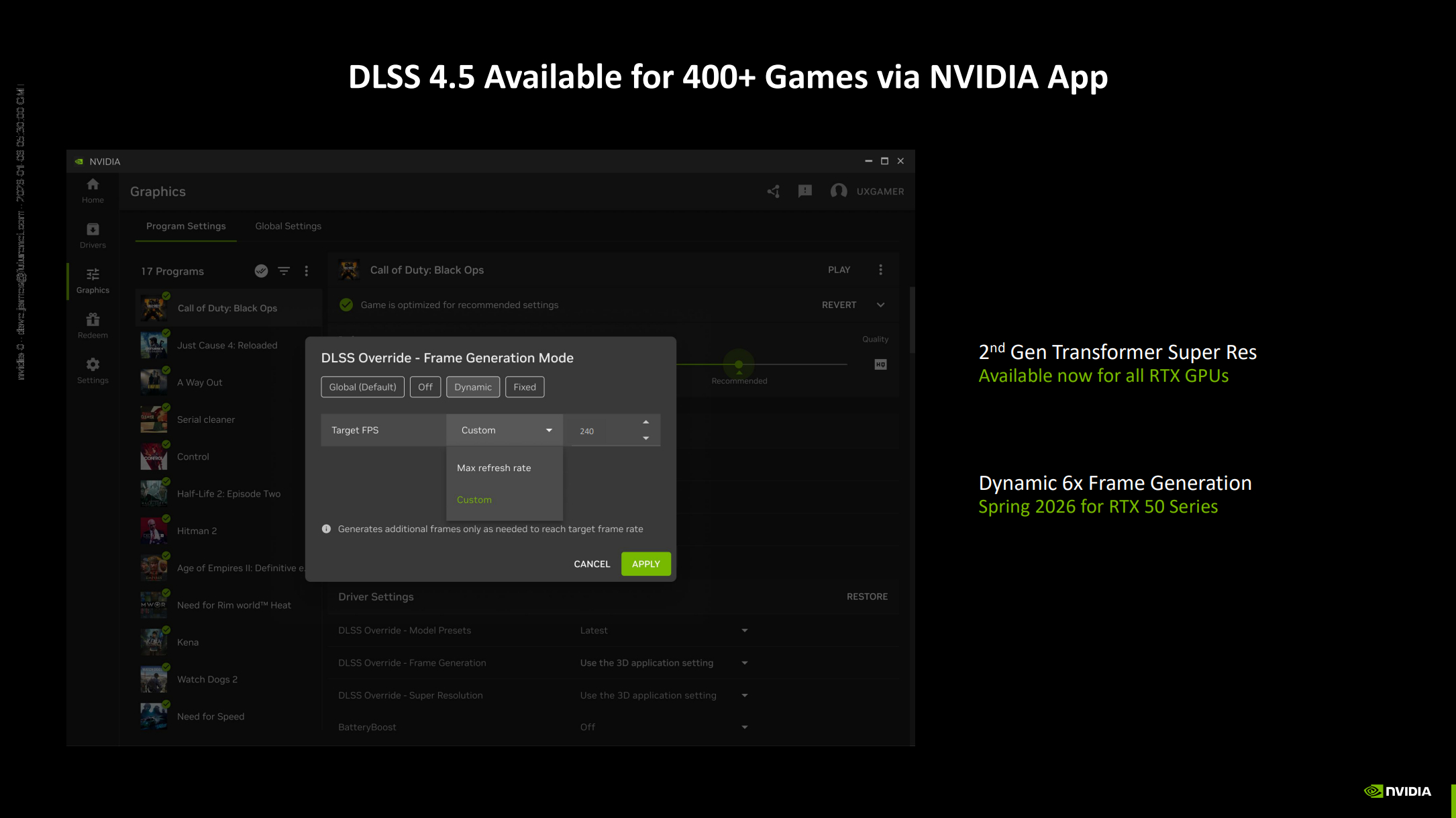

But if you do have that power already humming away in your rig, then another new feature being added into the Nvidia App sometime in the springtime will be of interest. Dynamic Multi Frame Generation allows you to use the DLSS Override feature of the app to either tie the Dynamic MFG feature to the maximum refresh rate of your screen or to a custom fixed level.

This then automatically adjusts the level of MFG required to hit that frame rate, with Nvidia demonstrating what that might mean if you're lucky enough to have an RTX 5090 running in a selection of games. The example of Black Myth Wukong running at 246 fps on an RTX 5090 at 4K with path tracing and DLSS 4.5 running at 6x MFG looks very impressive, all the more so because it's reportedly doing so with a PC latency of just 53 ms.
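Nvidia hasn't detailed how Dynamic MFG makes that choice, so treat the following as a guess at the simplest possible logic rather than the real thing. It assumes the feature picks the smallest multiplier that reaches the target frame rate, capped at 6x and allowed to fall back to 1x (no generated frames). The function name and parameters are hypothetical.

```python
import math


def pick_mfg_factor(rendered_fps: float, target_fps: float, max_factor: int = 6) -> int:
    """Smallest MFG multiplier that reaches target_fps, clamped to 1..max_factor.

    Assumption: displayed fps = rendered fps * factor. This is an
    illustrative model, not Nvidia's published algorithm.
    """
    if rendered_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    needed = math.ceil(target_fps / rendered_fps)
    return max(1, min(max_factor, needed))


if __name__ == "__main__":
    for fps in (41, 60, 120, 300):
        factor = pick_mfg_factor(fps, target_fps=240)
        print(f"{fps} fps rendered -> {factor}x MFG for a 240 Hz target")
```

By the same arithmetic, 246 fps at 6x would imply roughly 41 rendered frames per second underneath.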

That should be eminently playable, and I'm looking forward to testing that out in person when the feature is fully released to the public at large. Especially just to check out the impact of that improved transformer model and what effect it has on the frame gen issues that have put many a gamer off using the feature in the past.

But, outside of MFG, the exciting thing is that the new version of DLSS 4.5 Super Resolution isn't tied to the RTX 50-series graphics cards, meaning any RTX GPU will be able to take advantage of the new model. And because of the DLSS Override feature of the Nvidia App, there will be a ton of games—reportedly over 400 at launch—that will be able to use it right away.

That ought to mean every Nvidia RTX owner's AI-powered gaming will get that bit smarter, clearer, and sharper as 2026 goes on. But whether DLSS 4.5 is enough to convince a sceptical public that Dynamic Multi Frame Gen can be a feature you enable automatically, as upscaling has arguably become, remains to be seen.

Dave has been gaming since the days of Zaxxon and Lady Bug on the Colecovision, and code books for the Commodore Vic 20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.
