I did some quick and dirty testing of the Intel Arc B390 iGPU in Intel's new top-end Core Ultra chip and I'm pretty impressed

It's still an iGPU, though. Adjust your expectations accordingly.

It'll be a little while yet before we get the Intel Core Ultra Series 3 mobile chips on our test bench. However, as I'm on the ground at CES 2026, Intel gave me a chance earlier today to put the Arc B390 iGPU through its paces in a brief benchmarking session. I have to say, its performance was pretty impressive—for an integrated graphics solution, at the very least.

Due to my packed CES schedule (my fault entirely), I had less than an hour to quickly run some benchmarks and futz around in the odd game. Still, I'm enough of an old hand to know exactly what game I was benching first—Cyberpunk 2077, of course.

My test laptop was a nondescript Lenovo machine with an Intel Core Ultra X9 388H onboard, the top-end Panther Lake CPU from the new lineup. Still, the lack of discrete graphics hardware meant I didn't subject the poor lappy to path tracing, or even ultra settings, at least at first.

No, this is an iGPU, and expectations should be tempered—Intel says the Arc B390 is around 10% faster on average than an Nvidia RTX 4050, and that's not exactly a lightning-fast graphics card.

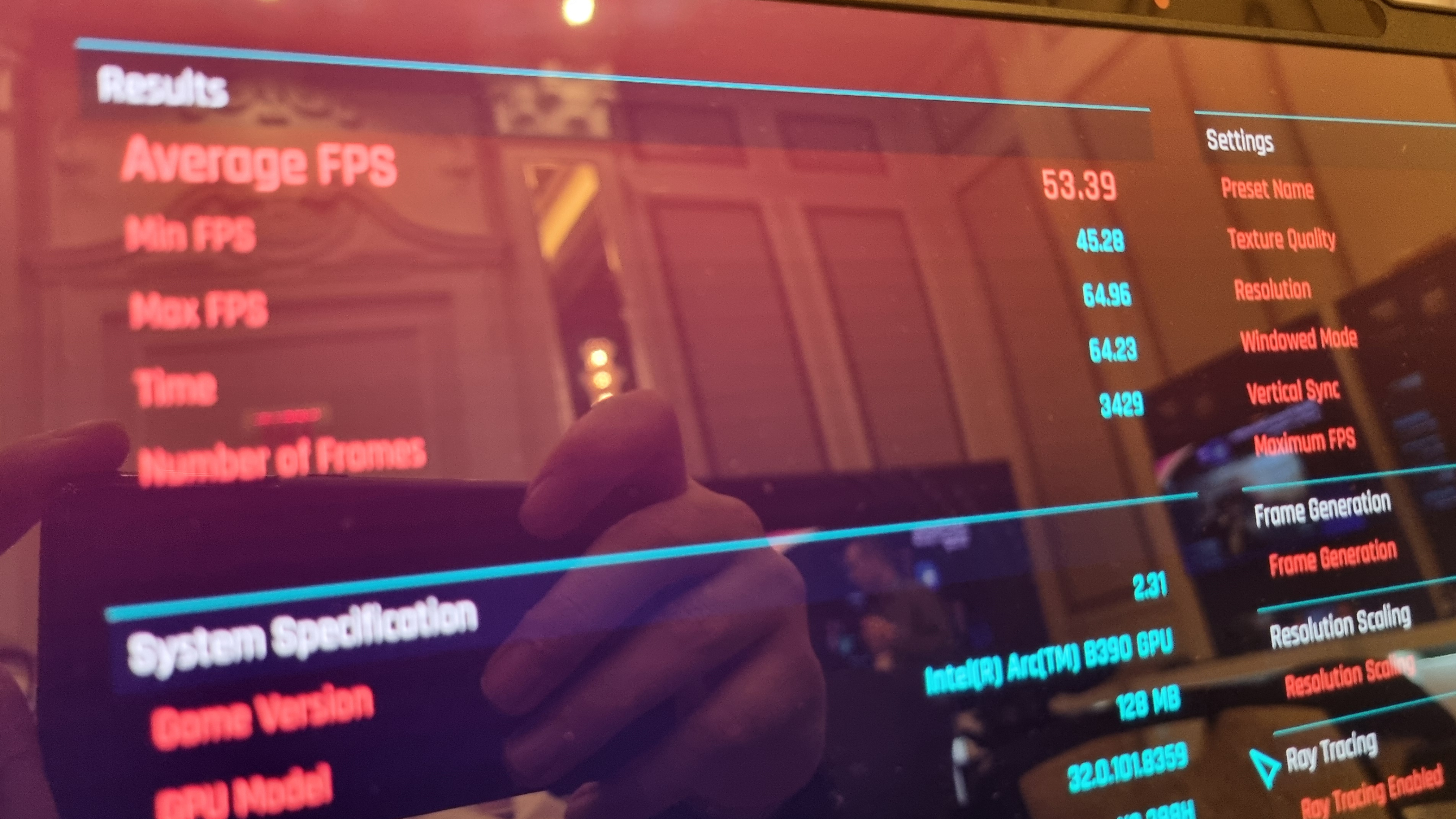

High settings then, 1200p. No upscaling, no ray tracing. The result?

A perfectly playable average of 53 fps. Now, I'm fully aware that this figure is unlikely to set anyone's world on fire. That being said, for an iGPU with no upscaling or frame generation help, it's still downright impressive. The machine I was testing it on felt like about as default a notebook as you could possibly buy, so it was slightly surreal to see it deliver such a result with very little fanfare.

In reality, anyone gaming on a GPU-less portable machine will want to make use of upscaling, so I ran the bench again with XeSS set to Quality, resulting in a 74 fps average result. I'd say that was downright smooth, and I'd happily play Cyberpunk 2077 once more at this sort of image quality and frame rate without complaint.
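For a sense of scale, the gain from XeSS Quality can be worked out from the two averages above. This is just arithmetic on the quoted figures (53 fps native, 74 fps upscaled); the function name is my own, and a single benchmark pass each is hardly a rigorous sample.

```python
def uplift_percent(before_fps: float, after_fps: float) -> float:
    """Percentage change in average frame rate between two runs."""
    return (after_fps - before_fps) / before_fps * 100.0


# Averages quoted in the article for Cyberpunk 2077 at 1200p High.
native_avg = 53.0        # no upscaling
xess_quality_avg = 74.0  # XeSS set to Quality

print(f"XeSS Quality uplift: {uplift_percent(native_avg, xess_quality_avg):.1f}%")
```

That works out to roughly a 40% improvement from upscaling alone.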

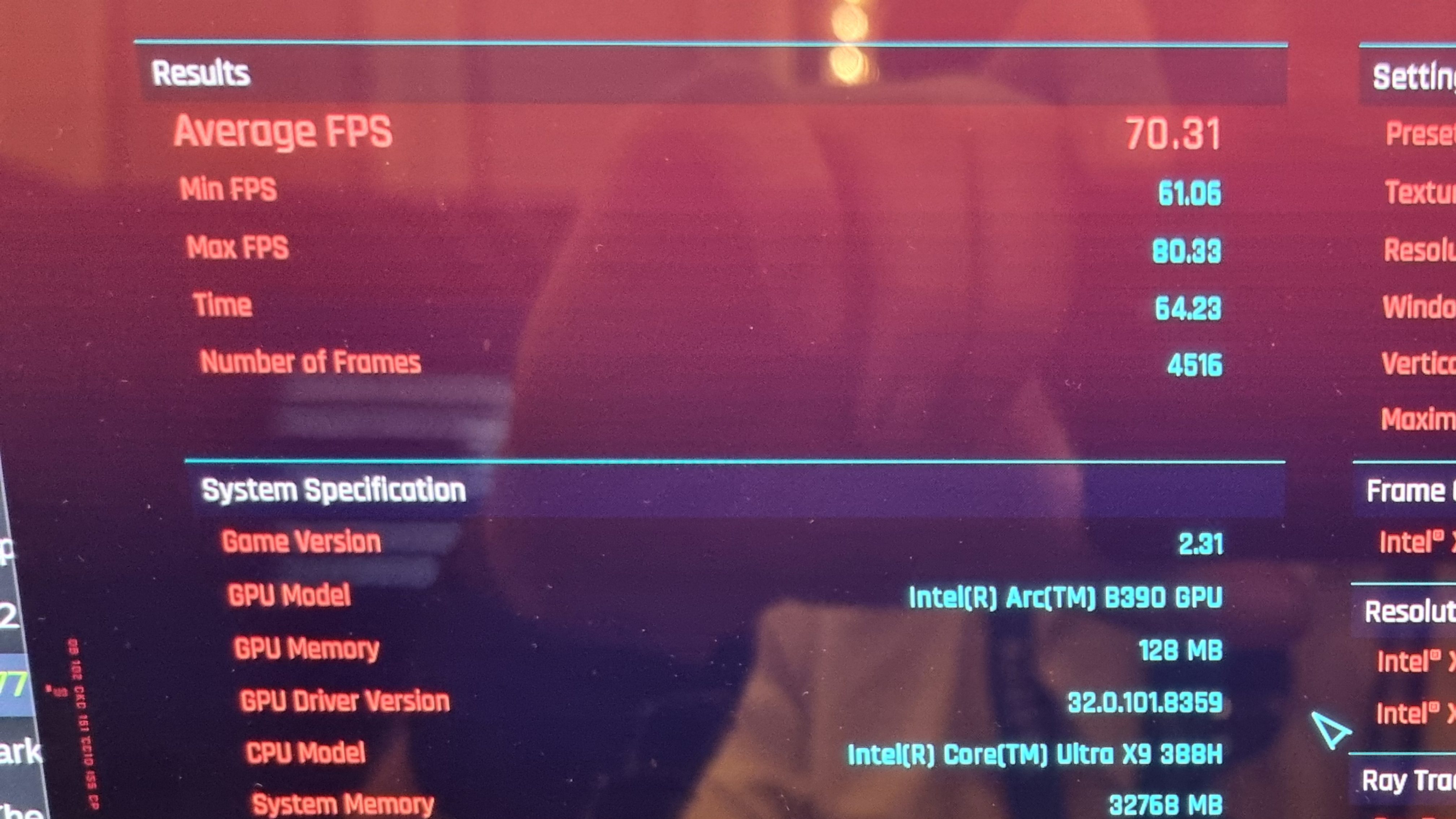

If I was really pushed, though, I'd quite like some ray tracing. With a gulp, I changed the preset to Ray Tracing: Ultra (hey, why not), enabled frame generation, set XeSS to Auto, consulted with the gods, and set the benchmark off once more.

70.31 fps. Now, with 2x frame generation involved and such a low base frame rate, latency would almost certainly rear its ugly head pretty quickly while gaming at these sorts of settings. As the game was a blank slate with no save file, I didn't have the time to jump in and run through the prologue to prove it. Still, the fact that an iGPU can spit out these sorts of frame rates at all at these settings (with heavy assistance, granted) is fairly impressive.
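To put a rough number on that base frame rate: a minimal sketch, assuming 2x frame generation means exactly half of the displayed frames are actually rendered. Real-world overhead will shift this a little, and the helper names are my own.

```python
def base_fps(displayed_fps: float, fg_multiplier: int = 2) -> float:
    """Estimate the rendered frame rate behind a frame-generated figure.

    Assumption: displayed frames = rendered frames * fg_multiplier,
    ignoring any frame generation overhead.
    """
    return displayed_fps / fg_multiplier


def frame_time_ms(fps: float) -> float:
    """Time between frames, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


displayed = 70.31  # benchmark average with RT Ultra, XeSS Auto, 2x FG
rendered = base_fps(displayed)

print(f"Estimated rendered frame rate: {rendered:.1f} fps")
print(f"Time per rendered frame: {frame_time_ms(rendered):.1f} ms")
```

Under that assumption, the game is rendering at roughly 35 fps, or about 28 ms per real frame, which is why input latency is the worry here rather than visual smoothness.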

Giddy with excitement, I noticed that Borderlands 4 was installed on my test machine. This game is, despite several performance patches, notoriously demanding. While there's no in-game benchmark to speak of, I thought I'd quickly run through the opening section to see what sort of frames I could get.

It should be no surprise that the Arc iGPU struggled. BL4 gives the RTX 5090 a hard time, after all, and at 1200p High settings with XeSS set to Quality, I bounced around the 40-45 fps mark. While frame generation is an option in the settings, I couldn't get it to reliably work on the Intel chip—although given FG worked well in my other testing, I'll blame the Rippers for that one.

To finish off, I thought I'd break out Shadow of the Tomb Raider, a game that's getting on in years but is still used by many outlets as a reliable test bench. It's still a very pretty-looking thing in motion, and the benchmark is fairly comprehensive, so I crossed my fingers and hoped for the best.

At 1200p High settings with no upscaling enabled, I saw highs in the 100s, 1% lows in the mid 30s, and an average of 75 fps. Again, this is an iGPU, and while SOTTR isn't exactly cutting edge anymore, it can still push some modern graphics cards with the settings turned up. I'd say that's a very good result for the Arc, and a great showcase of the sort of image quality (and smoothness) that can now be eked out of a discrete GPU-less machine.

So, what can we glean from my quick and dirty testing? Well, I don't want to draw conclusions until we get the Panther Lake chips under PC Gamer lab conditions. But I have to say, as iGPUs go, it's a very promising start. The 12 Xe-core chip seems to punch pretty darn hard for its size, and I'd be very, very curious to see what it could do in a gaming handheld.

I do wonder if it's something of a Rubicon-crossing moment for slim and light consumer laptops, too. The sort of people buying discrete GPU-less machines probably won't have gaming as a top priority—but with this sort of performance available, it might bring a few new converts to the cause.

We'll have to see. Still, it's encouraging stuff, and I can't wait to clamp one of these chips down and put it through its proper paces when I get home. What time's my flight again? I've got testing to do!

Catch up with CES 2026: We're on the ground in sunny Las Vegas covering all the latest announcements from some of the biggest names in tech, including Nvidia, AMD, Intel, Asus, Razer, MSI and more.

Andy built his first gaming PC at the tender age of 12, when IDE cables were a thing and high resolution wasn't—and he hasn't stopped since. Now working as a hardware writer for PC Gamer, Andy spends his time jumping around the world attending product launches and trade shows, all the while reviewing every bit of PC gaming hardware he can get his hands on. You name it, if it's interesting hardware he'll write words about it, with opinions and everything.
