Are high-end GPUs even worth buying anymore? I'm starting to have my doubts

Time for some GPU hot takes that might save you some money.

Alright folks, I'm maybe about to get a little controversial with you here: I honestly don't think high-end graphics cards are worth the hassle or the money anymore. Please, hold off with the pitchforks, PC enthusiasts, and I shall explain.

At a time when it feels like you need, at minimum, one of the absolute best graphics cards around to run some of the latest games at even modest frame rates, I'm here to say, "hear me out." Because does having that beastly RTX 5090 really save you from launch day troubles? No. No, it doesn't. I may or may not have tried to play Borderlands 4 the other day...

So, do you really need that beefy GPU? What if you could get by with something less obscene, and maybe hardly even notice the difference?

My goal today is to show you that, contrary to popular belief, high-end GPUs don't make a lot of sense for a lot of people. And yes, I'll be bringing receipts.

You're paying a lot of money for not a whole lot of GPU these days

Before you feed me to the wolves, let's define what 'high-end' even means these days. The gap between what we, the gamers, consider to be high-end and what the manufacturers label as such is ever-growing.

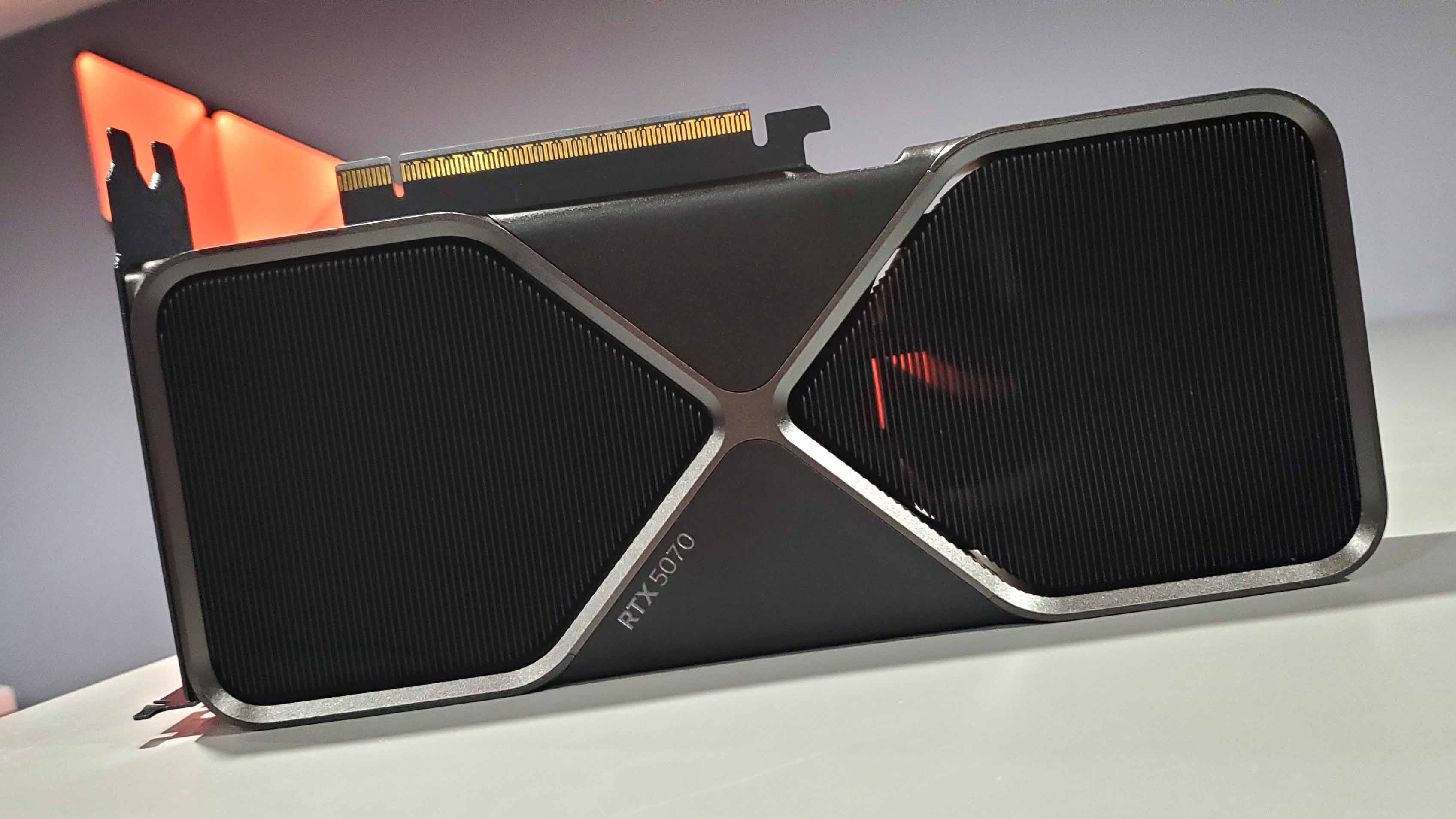

In the current generation of graphics cards, the term really varies depending on the vendor. Intel doesn't even try to pretend to have a high-end GPU (although the Arc B580 is still pretty great from a price/performance perspective). Nvidia would probably love it if we thought the RTX 5070 Ti was mid-range, but the $750 price tag really pushes it into that high-end wannabe segment. That's where AMD's RX 9070 XT resides, too, for lack of other options, although it's—theoretically—cheaper than the Nvidia equivalent.

So, that leaves us with the RX 9070 XT for AMD, and the RTX 5070 Ti, RTX 5080, and, of course, the outrageous RTX 5090 for Nvidia. Although the GPU market has been a hellscape for most of 2025, the prices are at least less terrible these days. Though, obviously, they are still terrible, which leaves us with the following:

- Nvidia RTX 5070 Ti: $749 at Amazon

- Nvidia RTX 5080: $999 at Amazon (finally at MSRP, thank you, retail gods)

- Nvidia RTX 5090: $2,399 at Amazon

- AMD RX 9070 XT: $699 at Amazon

If you just happen to be a millionaire, this might all sound affordable, but for most of us, they're all very, very pricey—and the difference in frame rates often doesn't make up for how expensive they are.

Don't get me wrong: there is a performance gap between each of these graphics cards, and each is better than the previous one. They also beat their last-gen counterparts, though often only by small margins when it comes to actual raw graphical grunt, because the real performance magic these days is not in the actual hardware, but in upscaling and frame generation.

Upscaling and frame gen tech really do come in clutch (with some caveats)

Frame generation and upscaling go a long way toward making a mid-range GPU sufficient for most things, and a high-end GPU lowkey obsolete.

Am I a fan of having to pay a premium for hardware, only to still have to use AI to beef up the frame rates? No, but that's the reality that we live in now, and just like I've grown used to being told that my job will be replaced with ChatGPT any day now, I have learned to embrace DLSS and similar tech.

Our benchmarks don't lie. Let's take a look at our test of the RTX 5090. When faced with Cyberpunk 2077 at 4K RT Ultra, even the mighty 5090 doesn't hit 60 fps. Sad, but true.

Turn on DLSS 4 Quality, with 4x frame generation, and switch to RT Overdrive. We're up to 215 fps, which means that even on one of the best gaming monitors, you'll hit more frames than your eyes can truly perceive.
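To put that 215 fps in perspective, here's a quick back-of-the-envelope sketch. It assumes the idealized case where 4x frame generation displays one rendered frame for every three generated ones; the function names are mine, purely for illustration, and real-world latency depends on more than frame time.

```python
# Rough sketch: how many of the displayed frames are actually rendered?
# Assumption: with an Nx frame generation multiplier, 1 in every N
# displayed frames is rendered and the rest are generated.

def rendered_fps(displayed_fps: float, multiplier: int) -> float:
    """Estimate the rendered frame rate behind a frame-gen figure."""
    return displayed_fps / multiplier


def frame_time_ms(fps: float) -> float:
    """Average time per frame in milliseconds."""
    return 1000.0 / fps


displayed = 215   # RTX 5090 figure quoted above
multiplier = 4    # 4x frame generation

base = rendered_fps(displayed, multiplier)
print(f"Rendered: {base:.2f} fps of {displayed} displayed")
print(f"Displayed frame time: {frame_time_ms(displayed):.2f} ms")
print(f"Rendered frame time:  {frame_time_ms(base):.2f} ms")
```

Under that assumption, roughly 54 of those 215 frames per second come from the renderer (itself helped along by upscaling), and the rest are generated.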

Let's drop down to the RTX 5070 Ti.

Same deal: In Cyberpunk 2077 at 4K RT Ultra, the RTX 5070 Ti hits a measly 30 fps, which is unplayable. Some will argue that it's more of a 1440p GPU, and at 1440p, we're looking at 61 fps on average.

With DLSS 4 Quality, at 4K, the RTX 5070 Ti averages 110 fps.

We weren't the biggest fans of the RTX 5070 upon launch, although it's now the most popular current-gen GPU. After all, Nvidia's CEO Jen-Hsun Huang said that the RTX 5070 would deliver the same performance as the RTX 4090.

I don't need to tell you that that didn't happen. It's around 60% slower than the last-gen flagship without upscaling. But with DLSS 4 Quality, this $549 GPU breezes through Cyberpunk 2077 at 4K RT Ultra, hitting 122 fps.

Of course, DLSS (just like similar tech) comes with some caveats. Visual artefacts, increased latency, and all that jazz. But honestly? Having used it extensively, most of the time, I hardly even notice these issues. The gains are there, and the trade-off is negligible enough to be worth it.

My point is this: If most of your frames are coming from DLSS and not the actual raw silicon, why would you bother spending more money than you need to?
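If you want the receipts in number form, here's a minimal sketch that divides each card's price by the Cyberpunk 2077 frame rates quoted above. Big caveat: the figures weren't all captured at identical settings (the RTX 5090 number uses RT Overdrive with 4x frame generation), so treat this as a rough illustration rather than a like-for-like benchmark. The variable and function names are just for this example.

```python
# Rough cost-per-frame comparison using only figures quoted in this article.
# Assumption: the fps values are comparable enough for a ballpark estimate,
# even though the test settings differ between cards.

cards = {
    # name: (price in USD, quoted 4K fps with DLSS 4 Quality)
    "RTX 5090": (2399, 215),
    "RTX 5070 Ti": (749, 110),
    "RTX 5070": (549, 122),
}


def dollars_per_frame(price: float, fps: float) -> float:
    """Price divided by average frames per second."""
    return price / fps


results = {
    name: dollars_per_frame(price, fps)
    for name, (price, fps) in cards.items()
}

# Print the cheapest cost per frame first.
for name, cost in sorted(results.items(), key=lambda item: item[1]):
    print(f"{name:<12} ${cost:.2f} per fps")
```

On these numbers, the RTX 5070 lands at $4.50 per frame per second, the RTX 5070 Ti at just under $7, and the RTX 5090 at a little over $11.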

Do you even need to play at max settings?

Let's put all those fake, contentious frames aside for a second and consider this. Do you need to be able to play everything at max settings?

No, seriously, please, the pitchforks! I can't work in these conditions. Put them away for another second.

I'm not going to pull the wool over your eyes and claim a game played on medium settings looks the same as one that's played at max settings. But, speaking as someone who's been building PCs for 20 years and regularly helps people build or upgrade their computers, the difference really isn't as noticeable as you might think.

A lot of it comes down to perception. Many games will pick the optimal settings for you, and software such as the Nvidia App will optimize them without your interference. With that in mind, would you even notice that you were playing on lower settings without digging into it and finding ray tracing turned off?

Just take a look at these screenshots of Cyberpunk 2077 I took at medium, high, and RT Overdrive settings. Sure, there's a difference, but will you really see it if you're fighting enemies?

Ray tracing—now there's another thing that warrants a hot take. I love me some realistic-looking lighting in games, but I've heard too many people say that they can hardly see a difference to straight up recommend it to everyone.

Honestly, I personally can't always see much of a difference either, especially not when I'm actually playing. Developers have spent a long time getting faked lighting really, really good, after all. If you primarily use your GPU to game and not just take pretty screenshots, you probably won't care all that much, either.

Sure, it does look better and more realistic, especially in games where path tracing is a thing (Cyberpunk 2077 comes to mind here once again). But is it worth spending an extra $1,000 or more just to enable ray tracing in games where frame generation isn't supported? Which, by the way, is very much a shrinking list: many modern games support DLSS and/or FSR.

If your goal is playing at 165 fps (or more) at maximum settings, the reality is that you can achieve that with a cheaper GPU in many games. You're already in a better place than console gamers in that regard, who often have to settle for lower graphics settings and lower fps. We PC gamers need to suffer through poor optimization, but we get better performance in return. Eventually.

And if you lower your settings just a little bit, you might hardly notice, but your GPU will certainly thank you. And so will your wallet.

Aim for the GPU sweet spot, not for the top of the line

As an avid PC builder, I often contribute to communities like r/buildapc, forums, and groups where people ask for help with their builds. And while those communities are helpful, they're also notorious for convincing people to go over budget.

"If you spend $200 more, you'll get a better GPU." I've said those exact words myself on more than one occasion. But lately, I'm starting to wonder—is it really worth it?

For some gamers, yes. If not having the best gaming PC in the world makes you itch, then you'll hate me for talking you into settling for less.

However, if you're trying to make the most of what you have, don't let the internet at large convince you that you can't play all your faves without a beastly, ultra-expensive GPU. It's just not true; not now, and especially not with frame generation in the picture.

If you're like me and you like to go for what makes sense, there are a few GPUs that save you money while still being solid, especially in games that support frame gen.

- Nvidia RTX 5060 Ti 16GB | $430

- Nvidia RTX 5070 | $550

- AMD RX 9070 | $600

- AMD RX 9070 XT | $700

- Nvidia RTX 5070 Ti | $750

For starters, the RTX 5070. Sure, in reality it's nowhere near an RTX 4090, but with upscaling and frame generation, you can push it well beyond what its raw performance suggests. And if you compromise on a few key settings, you'll get yourself a genuinely decent GPU for $550.

AMD's RX 9070 XT and RX 9070 are both fantastic. I only struggle to recommend them right now because they don't sell at MSRP, but if you can spot either at a reasonable price, they're great for most gamers. The downside? Nvidia's DLSS 4 still has the upper hand versus FSR 4, especially when it comes to widespread adoption.

The RTX 5060 Ti 16GB is the cheapest GPU I feel comfortable recommending if you truly want that "high-end experience" without spending a fortune. DLSS 4 will do all the heavy lifting for you here, though.

The highest I can recommend going right now is the RTX 5070 Ti. While I'm not a fan of the $749 MSRP, it's a GPU that can be pushed to 4K, and your next best bet is the $999 RTX 5080, which truly puts us firmly in the expensive territory.

No matter which GPU you pick, don't let FOMO get the best of you.

You can always upgrade in a couple of years, but chances are that you won't max out your super duper GPU worth a bazillion dollars right now, so why spend more? Food for thought.

Monica started her gaming journey playing Super Mario Bros on the SNES, but she quickly switched over to a PC and never looked back. These days, her gaming habits are all over the place, ranging from Pokémon and Spelunky 2 to World of Warcraft and Elden Ring. She built her first rig nearly two decades ago, and now, when she's not elbow-deep inside a PC case, she's probably getting paid to rant about the mess that is the GPU market. Outside of the endless battle between AMD and Nvidia, she writes about CPUs, gaming laptops, software, and peripherals. Her work has appeared in Digital Trends, TechRadar, Laptop Mag, SlashGear, Tom's Hardware, WePC, and more.
