AMD LiquidVR vs. Nvidia VRWorks, the SDK Wars

Nvidia's VRWorks SDK

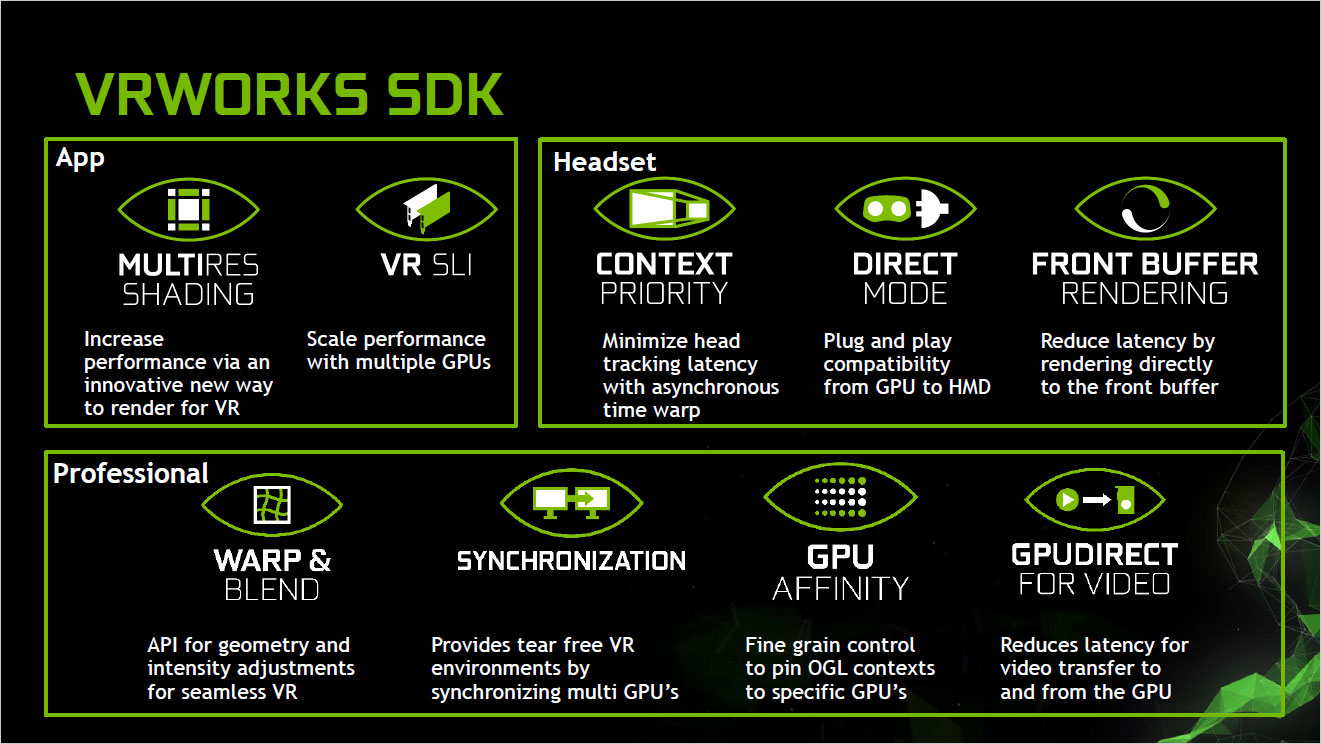

Since we started with AMD, a lot of what we have to say about Nvidia's VRWorks is going to be a rehash. Rather than starting with the big differentiating factor, we're going to quickly cover the similarities first. There's not a 1-to-1 mapping between LiquidVR and VRWorks, but at a high level there are several items that largely correlate. Nvidia lists five core features in VRWorks, plus four additional "professional" variants of these that apply specifically to Quadro cards, cluster solutions, and other high-end scenarios; we won't cover those professional variants here.

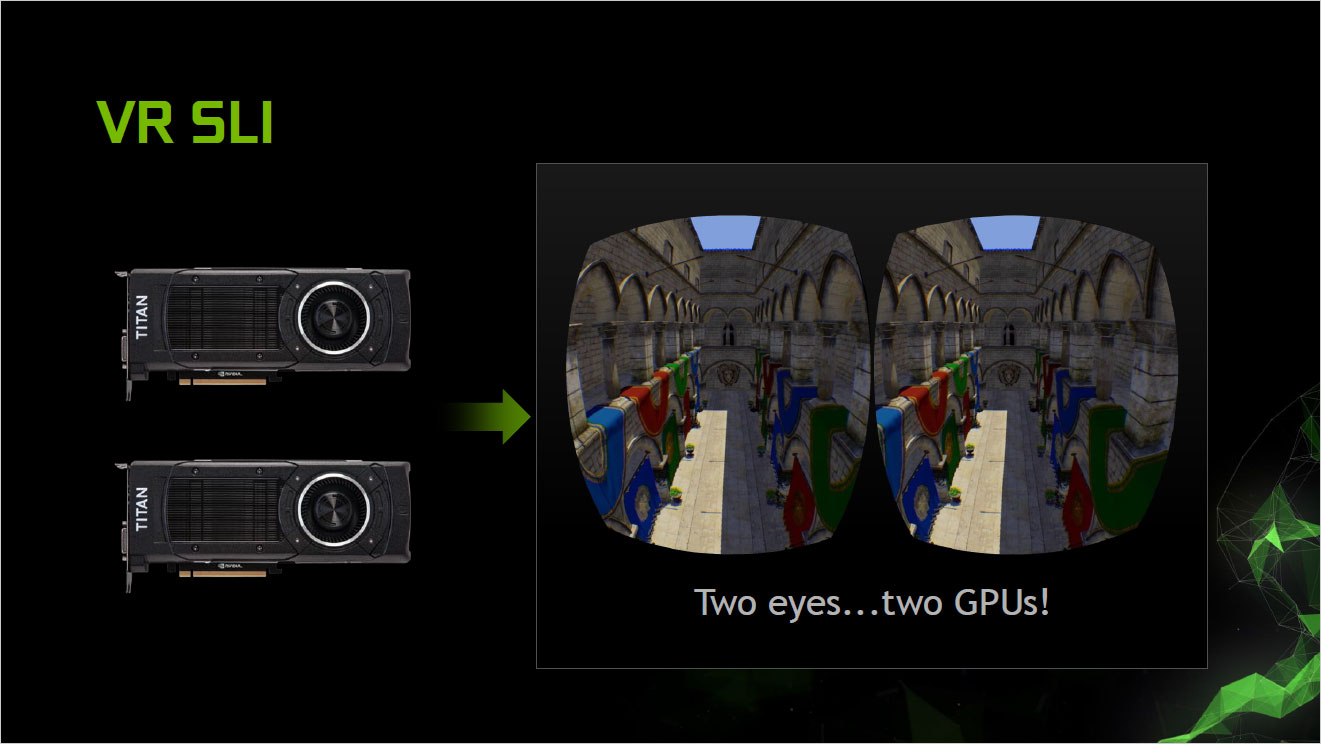

First is VR SLI, and all the stuff we said about AMD's Affinity Multi-GPU a moment ago applies here. You've got two eyes and two views to render, so using one GPU per eye is a quick way to nearly double performance, without the latency associated with AFR. The system generates a single command stream for all GPUs, and then affinity masking tells the GPUs which states apply to them, and which ones to ignore. For VR SLI to work, it needs to be integrated into the game engine, and it's up to the developer to decide how to utilize the GPU resources. There are certain types of work that require duplication (Nvidia mentions shadow maps, GPU particles, and physics), which can reduce the benefits of VR SLI from doubling performance to maybe a 50-70 percent improvement, but it's still a significant gain.
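To put rough numbers on that, here's a back-of-the-envelope sketch of how duplicated work eats into the ideal 2x scaling. The model is our own simplification (an Amdahl-style split, not anything Nvidia has published): some fraction of the single-GPU frame time has to be repeated on every GPU, and the rest divides evenly. The function name `vr_sli_speedup` and the sample fractions are hypothetical.

```python
def vr_sli_speedup(duplicated_fraction: float, gpus: int = 2) -> float:
    """Estimate multi-GPU speedup under a simple (assumed) model.

    duplicated_fraction: share of single-GPU frame time that every GPU must
    repeat (for example shadow maps, GPU particles, physics). The remaining
    work is assumed to split evenly across the GPUs.
    """
    if not 0.0 <= duplicated_fraction <= 1.0:
        raise ValueError("duplicated_fraction must be between 0 and 1")
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    # Each GPU does all of the duplicated work plus its share of the rest.
    per_gpu_time = duplicated_fraction + (1.0 - duplicated_fraction) / gpus
    return 1.0 / per_gpu_time


if __name__ == "__main__":
    # Hypothetical duplicated-work fractions, chosen only for illustration.
    for fraction in (0.0, 0.18, 0.33):
        print(f"{fraction:.0%} duplicated -> {vr_sli_speedup(fraction):.2f}x")
```

Under this model, a 50-70 percent improvement corresponds to somewhere between roughly a sixth and a third of the frame being duplicated on both GPUs.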

Nvidia's next two bullet points are Direct Mode and Front Buffer Rendering, which combined map to AMD's Direct-to-Display feature. Direct Mode does things like hiding the display from the OS, preventing the desktop from extending onto the VR headset. VR apps, however, can still see the display and render to it, with the net result being a better user experience. Front Buffer Rendering is frankly something that I'm still trying to wrap my head around. The front buffer is not normally accessible in D3D11, but VRWorks enables access to it. Given the Rift and Vive are both using 90Hz low-persistence displays that black out between frames, the idea here is apparently to render to the front buffer during vblank. Nvidia also mentions beam racing, which refers back to the earlier days of video games where there was no buffer used for rendering, and programmers basically had to "race the [electron] beam" as it scanned across the display. Nvidia doesn't really provide any additional details, but it will be interesting to see if some developers are able to achieve any cool effects via beam racing.

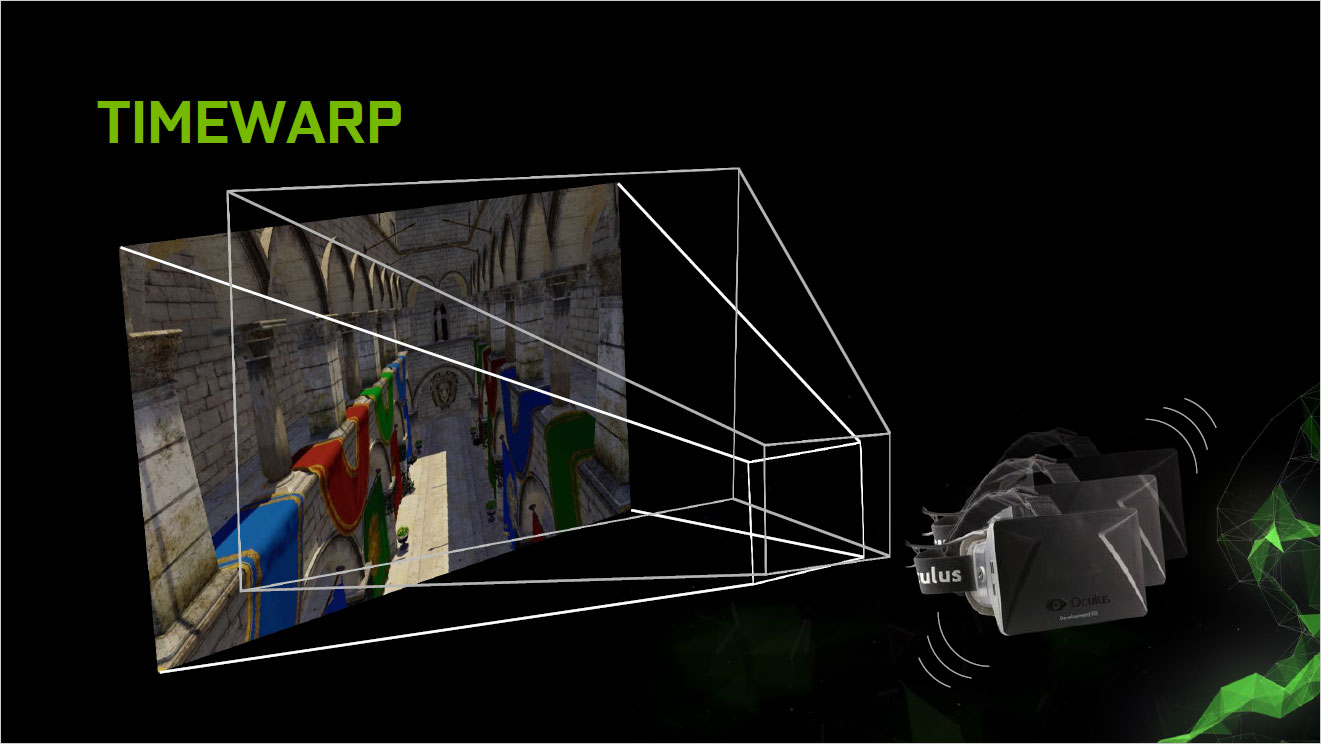

The fourth element of VRWorks is Context Priority, which is sort of the flipped approach to handling asynchronous time warp. AMD's async shaders allow the ATW stuff to be scheduled along with graphics, but on Nvidia hardware, a context switch takes place. It's not clear how long that takes, but reading between the lines, Nvidia does mention the need to avoid long draw calls (long being defined as >1ms). Anyway, Context Priority allows for two priority levels for work, the normal priority that handles all the usual rendering, and a high-priority context that can be used for ATW. It preempts the other work and presumably completes pretty quickly (less than 1ms seems likely), and then work resumes. Nvidia notes here that ATW is basically a "safety net" and that the goal should be to render at >90 fps, as that will provide a better experience, but again ATW can be used even in cases where a new frame is ready.
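Why would long draw calls matter? Here's a toy model, not Nvidia's actual scheduler: we assume (and this is purely our assumption) that the high-priority context can only take over at a draw-call boundary, so an ATW request that lands in the middle of a draw call has to wait for that call to finish. The function name `atw_start_delay` and all the timings are hypothetical.

```python
from typing import Sequence


def atw_start_delay(draw_calls_ms: Sequence[float], atw_request_ms: float) -> float:
    """Return how long (in ms) a high-priority ATW job waits before starting.

    Assumption: preemption only happens between draw calls, never inside one.
    draw_calls_ms lists the durations of back-to-back normal-priority draw
    calls starting at t = 0; atw_request_ms is when the ATW job is submitted.
    """
    start = 0.0
    for duration in draw_calls_ms:
        end = start + duration
        if atw_request_ms <= start:
            # Request is already pending at this boundary: switch right away.
            return 0.0
        if atw_request_ms < end:
            # Request arrived mid-draw-call: wait for the call to finish.
            return end - atw_request_ms
        start = end
    return 0.0  # All normal-priority work finished before the request.


if __name__ == "__main__":
    request_at = 1.5  # hypothetical submission time in ms
    one_long_call = [0.5, 0.5, 3.0, 0.5]
    split_calls = [0.5, 0.5, 1.0, 1.0, 1.0, 0.5]  # same work, shorter calls
    print("long call :", atw_start_delay(one_long_call, request_at), "ms wait")
    print("split call:", atw_start_delay(split_calls, request_at), "ms wait")
```

In this sketch the same 3ms of work delays ATW by far less once it's broken into 1ms pieces, which would be consistent with Nvidia's advice to keep draw calls short.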

That covers the areas that are similar to AMD's LiquidVR, but Nvidia has one feather in their cap that AMD doesn't currently support, and that's Multi-Resolution Shading. This one is something I got to preview last year, and it's another clever use of technology. Nvidia's Maxwell 2.0 architecture (sorry, GM107/GM108 and Kepler users, you're out of luck!) has a feature called viewport multicasting, or multi-projection acceleration. They first talked about this at GDC2015, but there weren't a lot of details provided; now, however, we have a concrete example of what Nvidia can do with this feature.

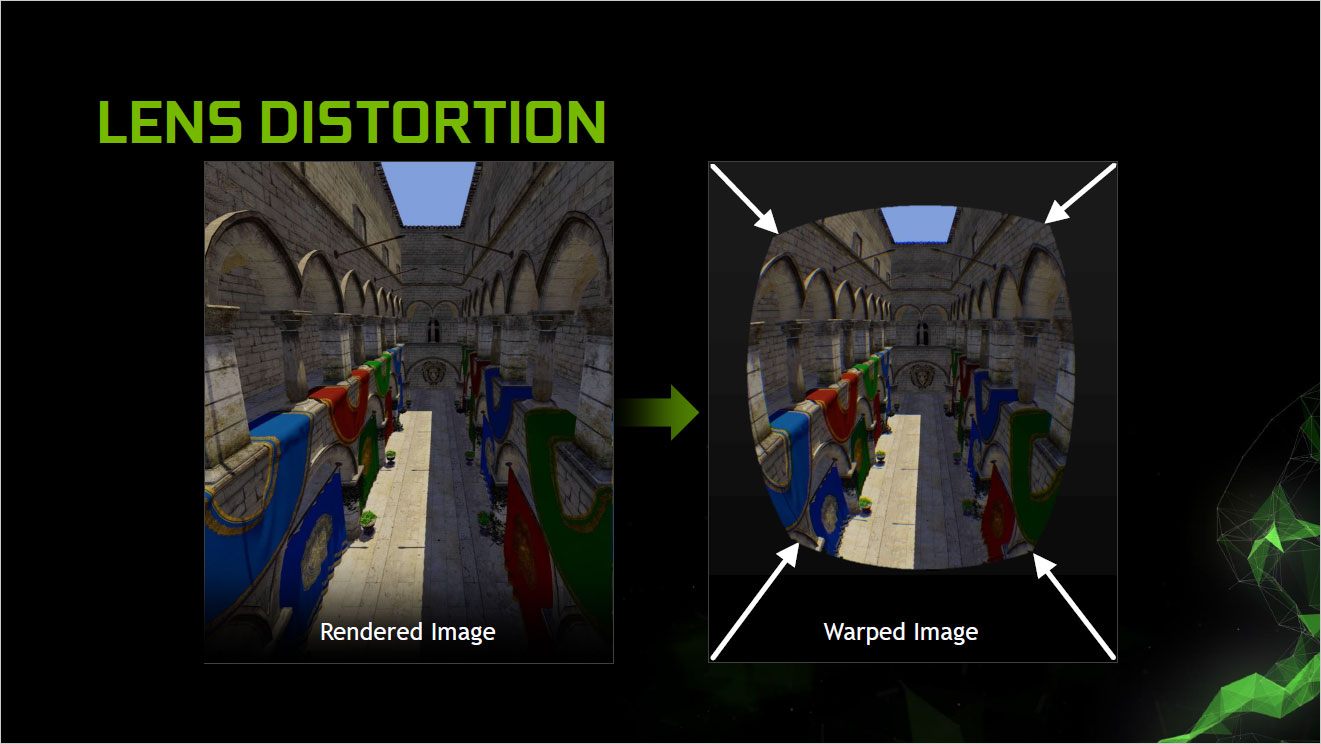

Normally, graphics rendering takes place from a single viewport; if you want to render from a different perspective, you have to recalculate a bunch of stuff and set things up for a new viewport, and that takes a lot of time. Viewport multicasting allows Nvidia's GM20x architecture to do multiple viewports in a single pass, and what that means for VR is that Nvidia can render nine separate viewports at different scales. Why would they want to do that? Because a lot of the data normally rendered for VR gets discarded/lost during the preparation for the VR optics.

Above you can see the normal rendered image that the GPU generates, and then the final "warped" content that gets sent to the VR display. When you view the warped image through the lenses of a VR kit, it gets distorted back to a normal looking rectangle. In the process, a lot of content that was created prior to the distortion gets lost—it's like compressing a 2560x1440 image down to 1920x1080. Multi-res shading creates nine viewports, eight of which are scaled, so that most of the detail that gets lost isn't generated in the first place. The result is 30 percent to 50 percent fewer pixels actually rendered, leaving the GPU free to do other tasks if needed (or allowing a lower class of GPU to render a scene at 90+ fps).
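The savings are easy to estimate with a little arithmetic. The sketch below assumes a 3x3 grid where the center viewport stays at full resolution and the surrounding strips are shrunk along the axis pointing away from the center (so corners shrink in both directions). The function name, the per-eye resolution, and the center/scale settings are all hypothetical values picked for illustration, not Nvidia's actual presets.

```python
def multires_pixel_savings(width: int, height: int,
                           center_fraction: float, outer_scale: float) -> float:
    """Fraction of pixels saved by a 3x3 multi-resolution viewport layout.

    center_fraction: share of each axis covered by the full-res center viewport.
    outer_scale: resolution scale applied to the outer strips along the axis
    that points away from the center (1.0 means no reduction).
    """
    if not 0.0 < center_fraction <= 1.0 or not 0.0 < outer_scale <= 1.0:
        raise ValueError("center_fraction and outer_scale must be in (0, 1]")
    center_w = width * center_fraction
    center_h = height * center_fraction
    # Left + right columns combined, and top + bottom rows combined, after scaling.
    border_w = (width - center_w) * outer_scale
    border_h = (height - center_h) * outer_scale
    rendered_pixels = (center_w + border_w) * (center_h + border_h)
    return 1.0 - rendered_pixels / (width * height)


if __name__ == "__main__":
    # Hypothetical per-eye render target and settings.
    for center, scale in ((0.6, 0.5), (0.5, 0.5)):
        saved = multires_pixel_savings(1512, 1680, center, scale)
        print(f"center={center:.0%}, outer scale={scale}: {saved:.0%} fewer pixels")
```

With these made-up settings the savings land in the same 30 to 50 percent range Nvidia quotes, which suggests the claim is at least plausible for a center region covering roughly half to two-thirds of each axis.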


So who is using what?

All of this may sound great—or it might just sound like a ton of technobabble and marketing speak—but ultimately, the real test of an SDK's usefulness is seeing how it's actually put into practice. Wrapping things up, then, here's a limited look at what we currently know in regards to developers actually using LiquidVR and VRWorks.

The main users are the VR headset creators. Both Oculus and Valve (SteamVR / Vive) are working closely with Nvidia and AMD, though they're not using all of the available features. Specifically, we know that Oculus is using Nvidia's Direct Mode and Context Priority features, and they're also using AMD's Direct-to-Display and Async Shaders. It appears Oculus has implemented their own take on asynchronous time warp, using Async Shaders or Context Priority as appropriate. One thing Oculus hasn't talked about supporting yet is any form of multi-GPU rendering, which maybe isn't too surprising since Oculus is also putting a lot of work into GearVR. If something can run on the Exynos SoC present in a GearVR, getting it working on any PC with a GTX 970/R9 290 or above should be a walk in the park.

Valve is somewhat similar to Oculus, in that they're supporting the direct display technologies—which any VR headset for PC basically has to support if it's going to be successful. There's no support of async time warp in the SteamVR SDK at present, but SteamVR includes features for dynamically scalable image fidelity in order to maintain 90+ fps, so it may not be as critical. On the other hand, SteamVR—including the SteamVR Performance Test—does have support for multi-GPU rendering from both AMD and Nvidia. We were able to test a beta version of this already, and it worked mostly as you'd expect, providing higher image fidelity and improved frame rates when using two fast GPUs. (Sorry—we didn't have three identical GPUs available for 3-way testing.)

Besides the two primary headset developers, the only other information we have on direct use of either SDK comes from Nvidia. It's not that AMD's LiquidVR isn't being used elsewhere, but they're not at liberty to tell us exactly who is using what—and being open source means there are potentially other companies using LiquidVR who haven't publicly said anything. So, understanding that AMD is likely to be represented in games and engines as well, here's what we know of Nvidia's VRWorks use.

The two biggest wins come in the form of engine support, with two of the heavy hitters. Unreal Engine 4 already has VRWorks support, including Multi-Res Shading and VR SLI, and this has been shown in the Bullet Train demo as well as Everest VR. Any UE4 licensees basically have access to these features now, if they want them. Unity engine is also working to include Multi-Res Shading and VR SLI, though it's apparently still a work in progress. A third engine, MaxPlay (of Max Payne fame), is also working to include VR SLI and Multi-Res Shading. Notably absent (for now) is CryEngine, not to mention plenty of other in-house game engines. The final publicly announced users of VRWorks are two games: ILMxLAB's Trials on Tatooine, which we were able to try at GDC2016 and which makes use of VR SLI; and InnerVision Games' Thunderbird: The Legend Begins, which will support Multi-Res Shading.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.