Xbox One S and One X are getting AMD FreeSync 2 support

Welcome to the variable refresh rate club.

Jump to 1:37:57 in the above video for the FreeSync 2 discussion.

Over the weekend, Microsoft announced during its Xbox Season Premiere event that it will be adding FreeSync 2 support to the Xbox One S and Xbox One X. This is big news, as even more than PCs, consoles often struggle to hit the native refresh rates on HDTVs. Let's talk quickly about what FreeSync 2 means, and how this will help console games.

Back in late 2013, Nvidia kicked off the variable refresh rate trend in displays with its G-Sync technology. It (still) requires an Nvidia graphics card along with a display that uses the G-Sync module, which inherently limits its application, but the result is quite impressive. AMD followed in 2015 with its own variation on the technology, called FreeSync, and worked with other companies to make FreeSync an open standard (though so far only AMD GPUs support the standard). More recently, AMD created an enhanced version called FreeSync 2, which is backwards compatible with the original FreeSync spec, so the Xbox One S/X will support both FreeSync 2 and FreeSync displays. But what makes variable refresh rates desirable in the first place?

Prior to G-Sync, signals were sent from your GPU to your display at fixed intervals. Because most displays refresh 60 times per second, that led to a choice between two compromises. The first option is to run with V-sync enabled, which means your display gets updated with a full frame of new information at its refresh rate. If a new frame isn't available, the previous frame is shown again, which in some situations can create stuttering and other undesired effects. The other option is to turn V-sync off and just send every new frame to the display as soon as it's ready, even if the display is in the middle of refreshing. This leads to tearing, seen below, but it can give improved latency because you're not waiting for the display to finish updating.

The problem with fixed refresh rates is that if you can't render frames faster than the refresh rate (say you come up just short at 55fps instead of 60fps on a 60Hz display), you're often limited to half the refresh rate for your framerate. Frame 1 is sent to the display, 1/60 of a second later the display checks for a new frame, it's not ready, and frame 1 gets repeated. Just after frame 1 is repeated, frame 2 is ready, but now the display has to wait until the next refresh, about 16ms later, before it can show that frame. Triple buffering can help improve the framerate by allowing the GPU to start rendering frames 3, 4, etc. while waiting for frame 2 to get displayed, but input lag (the latency between when an action occurs on the user end of the equation and when that action is reflected on-screen) is still a problem.
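To put numbers on that, here is a minimal Python sketch of a double-buffered, V-synced pipeline. It is a simplified model rather than how any particular driver or console works: it assumes every frame takes the same time to render, and that the GPU only starts the next frame once the previous one has been shown. The function names are made up for this illustration.

```python
import math

def vsync_display_times(render_ms, refresh_hz=60.0, frames=60):
    """Times (in ms) at which each frame appears on a fixed-refresh display.

    Assumption: double buffering, so the GPU idles until the finished frame
    is shown at a refresh boundary, then starts rendering the next one.
    """
    interval = 1000.0 / refresh_hz
    start = 0.0
    shown = []
    for _ in range(frames):
        done = start + render_ms
        # First refresh boundary at or after the moment the frame is done.
        # The tiny epsilon guards against float rounding on exact boundaries.
        tick = math.ceil(done / interval - 1e-9)
        start = tick * interval
        shown.append(start)
    return shown

def effective_fps(shown):
    """Average on-screen framerate over the simulated run."""
    return 1000.0 * (len(shown) - 1) / (shown[-1] - shown[0])

if __name__ == "__main__":
    for gpu_fps in (65, 55, 35):
        shown = vsync_display_times(1000.0 / gpu_fps)
        print(f"GPU capable of {gpu_fps}fps -> {effective_fps(shown):.1f}fps on screen")
```

Under these assumptions, a GPU that could manage 65fps is held to 60fps, while one that could manage 55fps or 35fps lands on 30fps, because every frame misses one refresh and has to wait for the next.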

G-Sync and FreeSync are similar in that they move to a dynamic refresh rate, within a range of values. Now, instead of waiting for the display refresh to restart, the GPU can send a signal to the display as soon as a new frame is ready. This improves input latency while avoiding tearing, and for games running between 30fps and 60fps the difference is very noticeable. And that's important, because maintaining a steady 60fps in many games is difficult, particularly on lower spec hardware (which is what modern consoles are, more or less).
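As a rough illustration, the Python sketch below models a variable refresh display in the simplest possible way: each frame is shown as soon as it is rendered, limited only by the display's maximum refresh rate. It assumes constant render times and ignores what happens when the framerate drops below the bottom of the display's supported range, which varies by monitor. The function names are hypothetical.

```python
def vrr_display_times(render_ms, max_hz=60.0, frames=60):
    """Times (in ms) at which each frame appears on a variable refresh display.

    Assumption: the display refreshes whenever a frame arrives, but never
    faster than max_hz. The lower end of the refresh range is not modeled.
    """
    min_interval = 1000.0 / max_hz
    # The gap between frames is the render time, or the display's fastest
    # refresh interval if the GPU is quicker than the panel.
    step = max(render_ms, min_interval)
    shown = []
    t = 0.0
    for _ in range(frames):
        t += step
        shown.append(t)
    return shown

def average_fps(shown):
    """Average on-screen framerate over the simulated run."""
    return 1000.0 * (len(shown) - 1) / (shown[-1] - shown[0])

if __name__ == "__main__":
    for gpu_fps in (65, 55, 35):
        shown = vrr_display_times(1000.0 / gpu_fps)
        print(f"GPU capable of {gpu_fps}fps -> {average_fps(shown):.1f}fps on screen")
```

In this model, a GPU capable of 55fps actually gets 55fps on screen, and one capable of 35fps gets 35fps, rather than being held to a fraction of a fixed 60Hz refresh rate.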

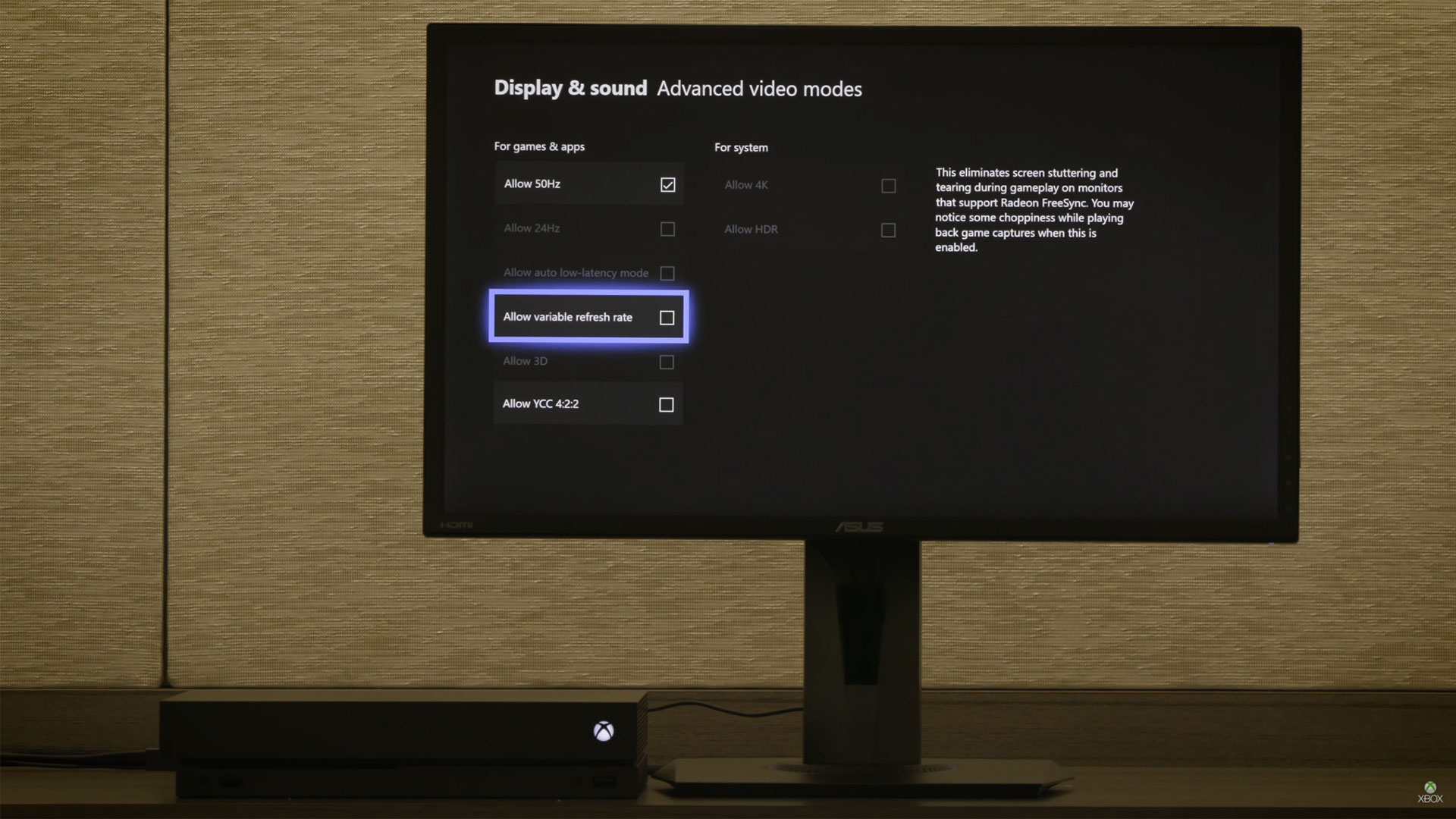

The initial FreeSync support will roll out next week to Xbox Insiders in the Alpha Ring (basically the more frequent 'beta' updates to test new features). The Xbox can work with FreeSync 2 displays, which include HDR support among other things, as well as existing FreeSync displays. HDMI FreeSync also works, with the appropriate display, which is an important thing to consider as the Xbox One S/X don't include native DisplayPort outputs (it's available via adapter). Also note that there are currently no HDTVs with FreeSync support, which is something to look for in the future, so initially this is intended for use with one of the 200+ FreeSync monitors.


Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.