After a terrible 2021 for hardware, was 2022 really any better?

In some ways, yes. In a lot of ways, oh hell no.

It's only natural that at the end of a long year you end up looking backwards. We've written a lot of tech news this year—weird, wonderful, and Gabe-y—and a monstrous number of hardware reviews, too. But it's a piece I published on the very first day of 2022 that I want to revisit, a piece where I called 2021 the worst year for PC gaming.

With 2022 now receding into the rearview mirror, was it really any better? Honestly, I'm not convinced. There are things you could point to and call positives, but on the whole 2022 still rather sucked for PC gamers.

My main issue with 2021 was that the combination of cryptocurrency mining and chip supply chain shortages made it an almost impossible economic decision for manufacturers to put thought, energy, and, more importantly, money into mainstream PC gaming. With every single GPU that hit the market getting sold, it made more sense to keep pumping out $1,000+ cards when they were set to sell the same number as they would of a range of sub-$300 options.

So, the budget PC market was dead, meaning that if you wanted to recommend some sort of vaguely affordable entry point into gaming, it had to be one of Sony's or Microsoft's consoles. And it sucks to have to say that as a dyed-in-the-wool PC gamer.

But in 2022 both AMD and Nvidia released their budget graphics cards: the Radeon RX 6500 XT and the GeForce RTX 3050. And that actually only made me feel worse about the mainstream market. The RX 6500 XT delivers much the same performance as the RX 480, an AMD card that cost the same when it launched back in 2016. As for the RTX 3050, in my review I said that "where I wanted at least RTX 2060 performance, I've got GTX 1660 Ti frame rates with a little RTX frosting on top."

That I do not call progress.

But then Valve dropped the Steam Deck. It's a device that is uniquely PC in its makeup, and our equivalent of the Switch: a console-like device with a price tag to match. It's an entry point into the world of PC gaming, with a utility that no other rig can really match, not even a good gaming laptop.

One of the things that stands out to me about Valve's handheld is that the only differentiator between its three price points is the type and amount of storage—something that is upgradeable down the line. Though, to be fair, Gabe's gang does kinda discourage that.

I've already gone on record saying that, in the blandest year for PC gaming hardware, Valve alone took any positive risks. So I won't keep banging on about the Steam Deck, though it was certainly a bright point of the year.

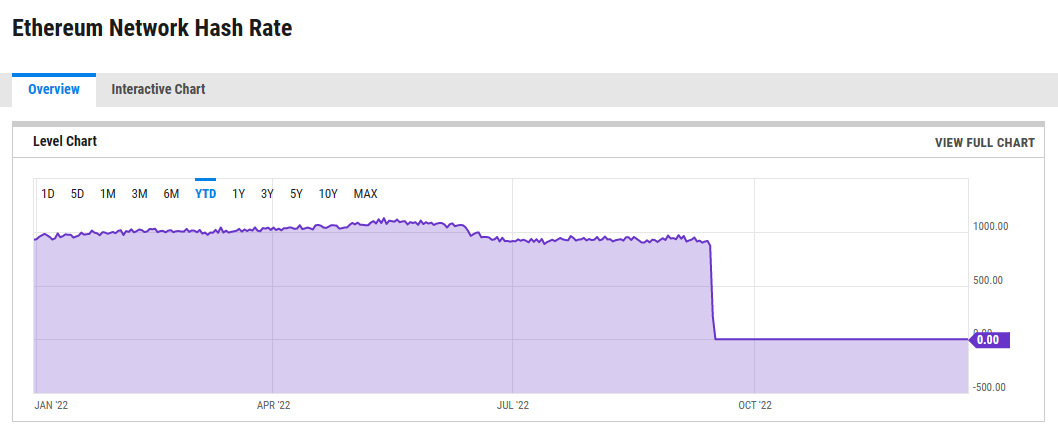

Another blazing white bright spot, and arguably far more important for the long-term health of PC gaming as a hobby, was the death of cryptocurrency GPU mining. The long-delayed switch of ethereum to a proof-of-stake consensus mechanism meant that the number-crunching power of our graphics cards was no longer needed to secure the ethereum blockchain. That, along with bitcoin's collapse helping to crash crypto prices across the board, made GPU mining an effectively worthless endeavour.

Cue lots of second-hand GPUs hitting the market as miners sought to cash in their chips. At the same time, increased production from graphics card manufacturers meant there was a glut of new cards on retail shelves, too.

In turn, that meant GPU prices finally started to drop, though not particularly quickly. We're still not talking about fire-sale pricing here, either, just the chance that you might actually find the graphics card you want for only about 25% above its MSRP. If you were lucky.

That's still kinda positive, right? And as we hit sales season with Prime Day in the summer, we actually saw deals on GPUs. Actual, genuine discounts. Suddenly AMD's lacklustre mainstream RDNA 2 cards started to take on much greater significance. Suddenly they were at a price that made them actually kinda worthwhile and, dare I say, even desirable.

And then the next gen happened. New graphics card generations are generally a point of great excitement in the PC gaming sphere, but Nvidia raised the barrier to entry to such a scale that only its rich fans were invited to the party. Then AMD hosted its own end-of-year GPU soirée and seemed to copy the party invitations directly off the green team.

After both GPU manufacturers had revealed their plans, we were left with a new generation that featured five separate graphics cards costing between $899 and $1,599. On the Nvidia side, that translated to a third-tier GPU that was due to cost $899. And let's be clear on that: we're talking about a distinct slice of silicon, not a cut-down version of the expensive top GPU, but a very small chip (around RTX 3050 levels in die size) manufactured specifically for a lower class of graphics card.

And yet it was still priced at $899. And called an RTX 4080. Just like the other RTX 4080, which used a completely different GPU and cost $1,200+. Thankfully, after refusing to accept that this was a confusing state of affairs when it was pointed out at various press briefings, Nvidia finally conceded that it wasn't the best plan and "unlaunched" the 12GB RTX 4080. We're now likely to see it rebadged as an RTX 4070 Ti sometime in the new year.

Still, that left us with the 16GB RTX 4080 at $1,200 and the RTX 4090 at $1,600. And, inconceivably, the RTX 4090 was the only one that represented any sense of value for money. It's tenuous, sure, but that monster GPU is the only card from the current gen to really deliver something that feels like a genuine step up.

On the AMD side, the company has simply followed Nvidia's ultra-enthusiast pricing lead and priced its RDNA 3-powered RX 7900 XTX and RX 7900 XT at $999 and $899 respectively. It's like the red team doesn't know how to do enthusiast GPUs, because the 'XT' card makes zero sense. Down at ~$500 that pricing delta would make sense, but pricing it just $100 below the card with the full-fat Navi 31 chip doesn't work when you're talking about spending around $1,000 on a new GPU. If you're already prepared to drop that amount of cash, you're just going to spend the extra on the better card.

So, we're ending 2022 in much the same way as 2021. Graphics cards are priced at a ridiculous level and we're left hoping the new year will bring some sort of affordable GPU respite.

The current pricing structure is surely untenable, but thankfully we're starting to see some sort of market correction, as neither the RTX 4080 nor the RX 7900 XT seems to be selling particularly well. Though, if I'm being completely fair, that is all going by circumstantial and anecdotal evidence. You don't have to go to eBay to find either card overpriced by a filthy reseller; traditional retailers still have stock and are willing to overprice those cards themselves. And it's not down to particularly plentiful supply either, as Nvidia has admitted it's undersupplied the RTX 40-series so far.

But let me leave this maudlin screed on a high: DLSS 3 with Frame Generation is frickin' gaming black magic and will make any mainstream GPU it touches fly. It inserts entirely AI-generated frames into compatible games, drastically boosting frame rates to a quite spectacular level. It's been incredibly impressive to see on Nvidia's high-end cards, but could be even more of a game-changer in the lower echelons. AMD's got its own plans on that front, too, which will be music to budget PC gamers' ears.

Yeah, maybe 2023 will be better. Maybe.

Dave has been gaming since the days of Zaxxon and Lady Bug on the ColecoVision, and code books for the Commodore VIC-20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.