Our Verdict

The RTX 4090 may not be subtle but the finesse of DLSS 3 and Frame Generation, and the raw graphical grunt of a 2.7GHz GPU combine to make one hell of a gaming card.

For

- Excellent gen-on-gen performance

- DLSS Frame Generation is magic

- Super-high clock speeds

Against

- Massive

- Ultra-enthusiast pricing

- Non-4K performance is constrained

- High power demands

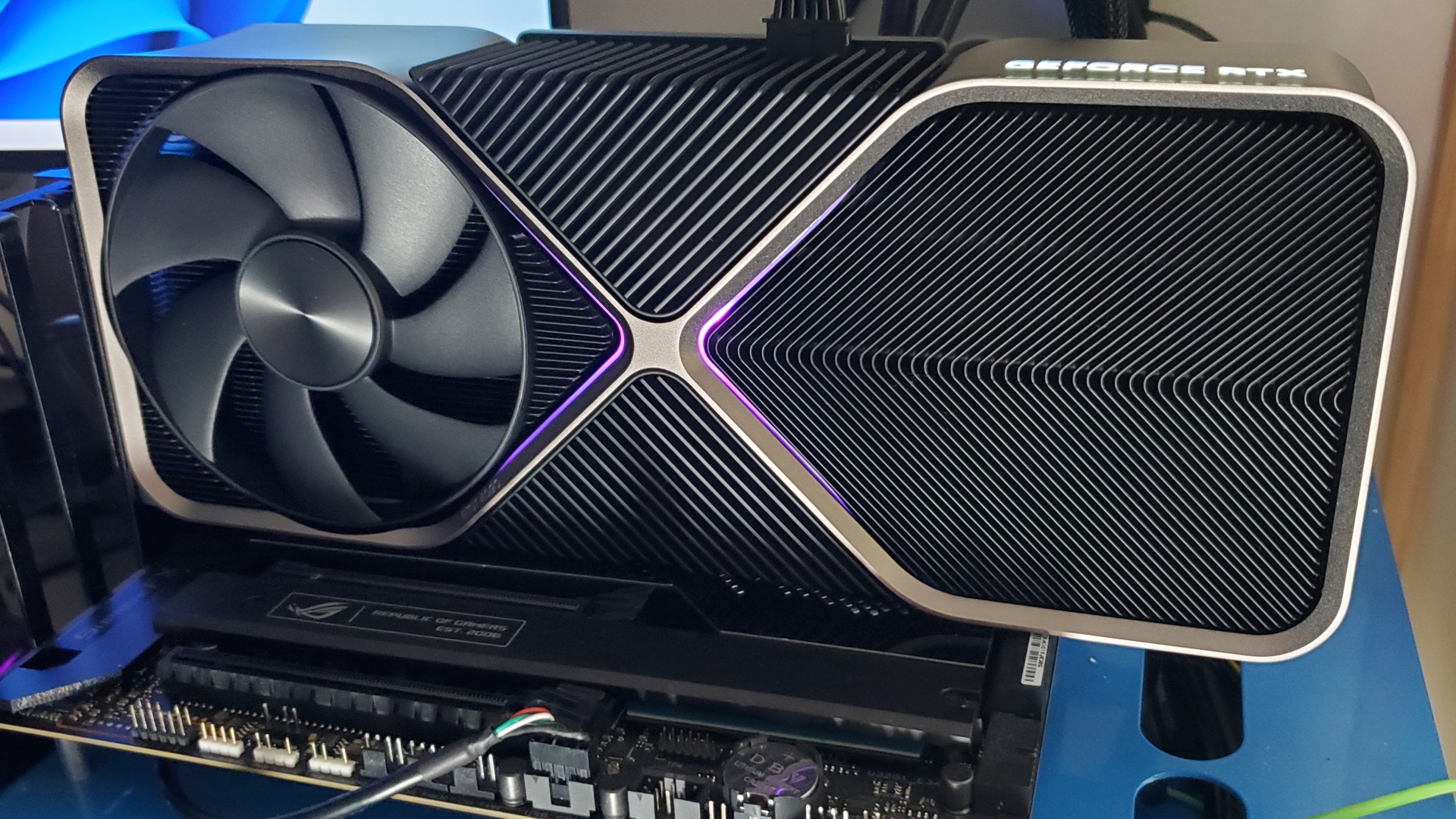

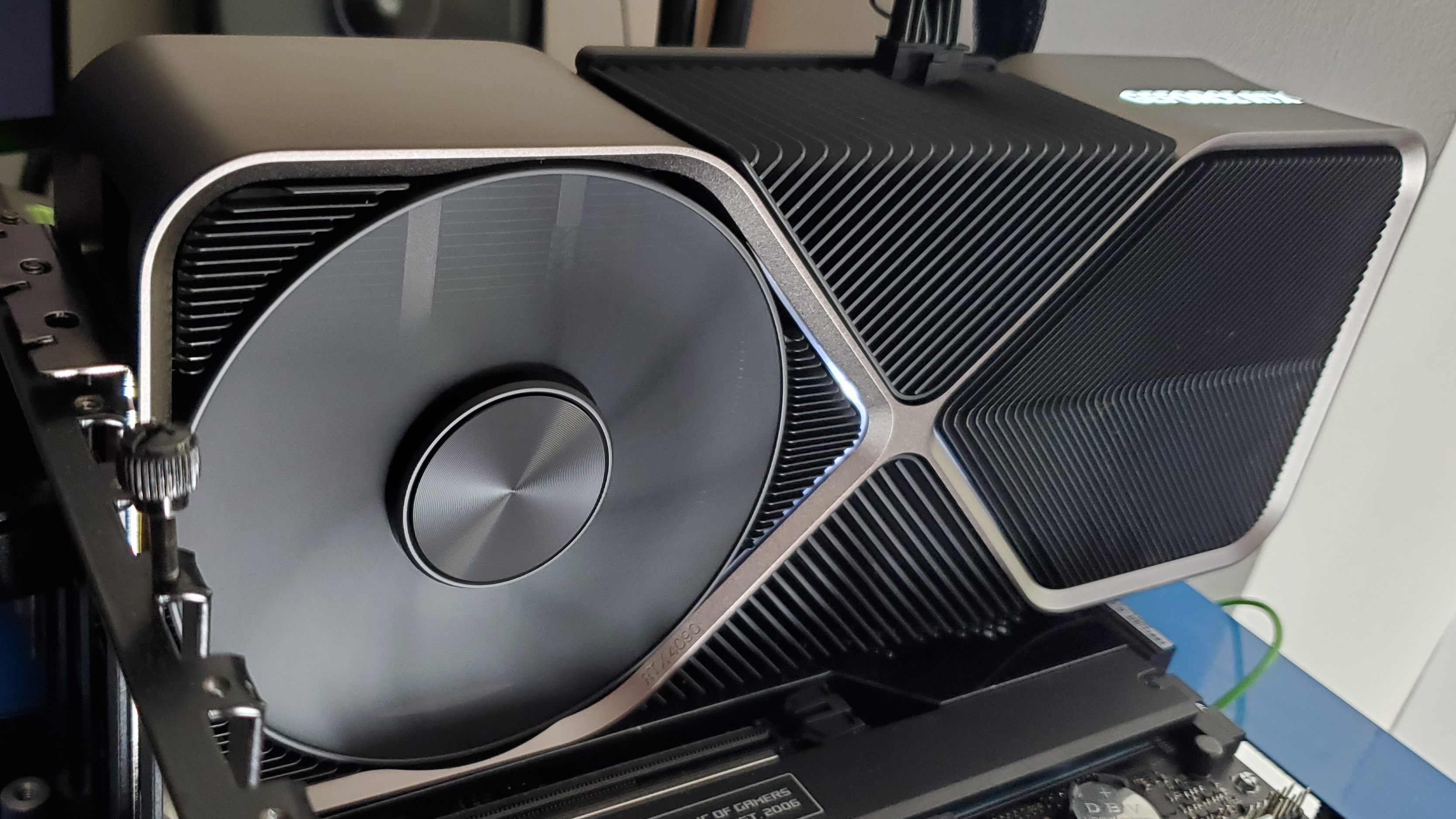

There's nothing subtle about Nvidia's GeForce RTX 4090 graphics card. It's a hulking great lump of a pixel pusher, and while there are some extra curves added to what could otherwise look like a respin of the RTX 3090 shroud, it still has that novelty graphics card aesthetic.

It looks like some semi-satirical plastic model made up to skewer GPU makers for the ever-increasing size of their cards. But it's no model, and it's no moon, this is the vanguard for the entire RTX 40-series GPU generation and our first taste of the new Ada Lovelace architecture.

On the one hand, it's a hell of an introduction to the sort of extreme performance Ada can deliver when given a long leash, and on the other, a slightly tone-deaf release in light of a global economic crisis that makes launching a graphics card for a tight minority of gamers feel a bit off.

This is a vast GPU that packs in 170% more transistors than even the impossibly chonk GA102 chip that powered the RTX 3090 Ti. And, for the most part, it makes the previous flagship card of the Ampere generation look well off the pace. That's even before you get into the equal mix of majesty and black magic that lies behind the new DLSS 3.0 revision designed purely for Ada.

But with the next suite of RTX 40-series cards not following up until sometime in November, and even then being ultra-enthusiast priced GPUs themselves, the marker being laid for this generation points to extreme performance, but at a higher cost. You could argue the RTX 4090, at $1,599 (£1,699), is only a little more than the RTX 3090—in dollar terms at least—and some $400 cheaper than the RTX 3090 Ti.

Though it must be said, the RTX 3090 Ti was released at a different time, and its pandemic pricing matched the then-scarcity of PC silicon and reflected a world where GPU mining was still a thing. Mercifully, as 2022 draws to a close, Ethereum has finally moved to proof-of-stake and the days of algorithmically pounding graphics cards to power its blockchain are over.

Now, we're back to gamers and content creators picking up GPUs for their rigs, so what is the RTX 4090 going to offer them?

Nvidia RTX 4090 architecture and specs

What's inside the RTX 4090?

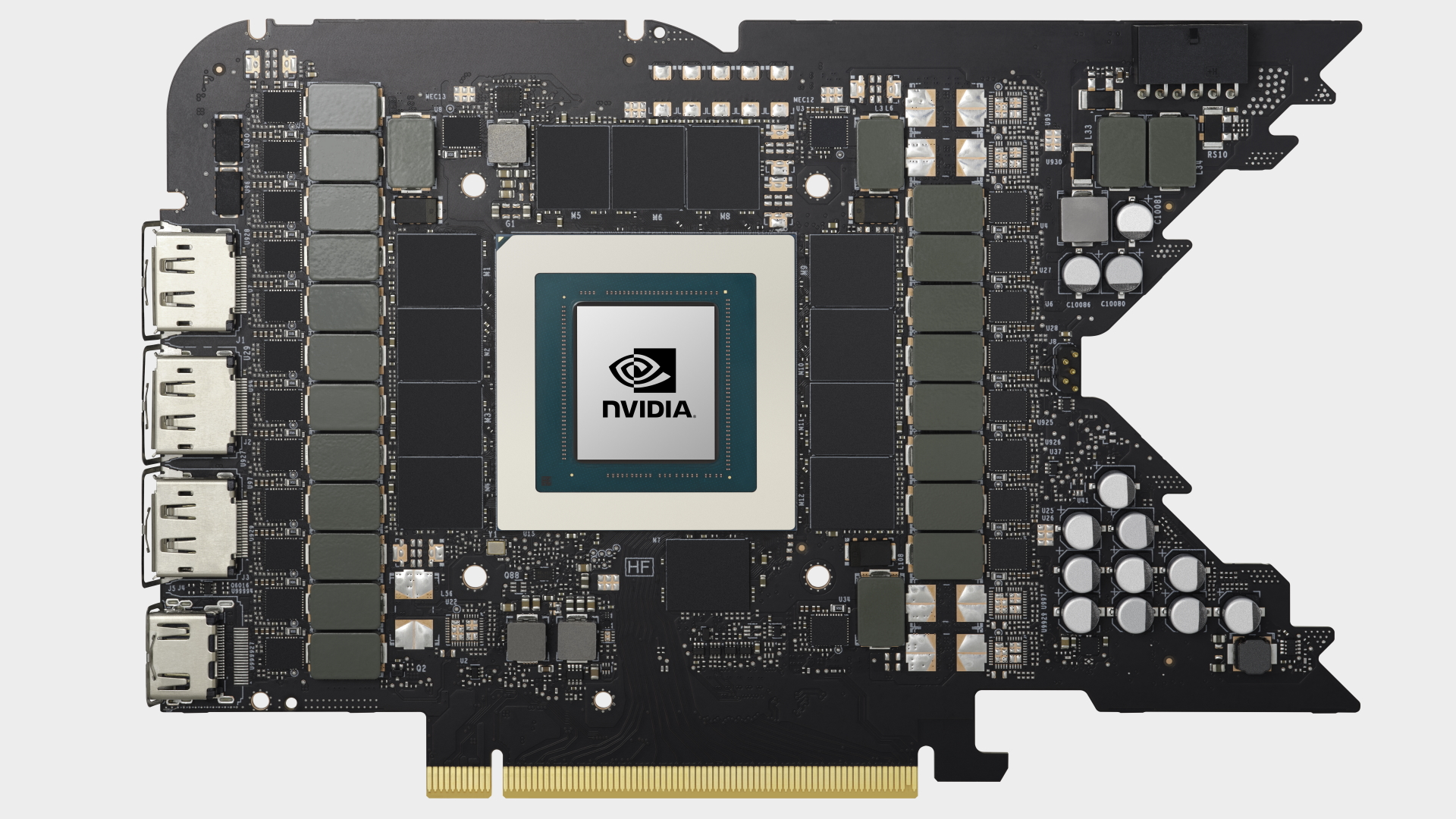

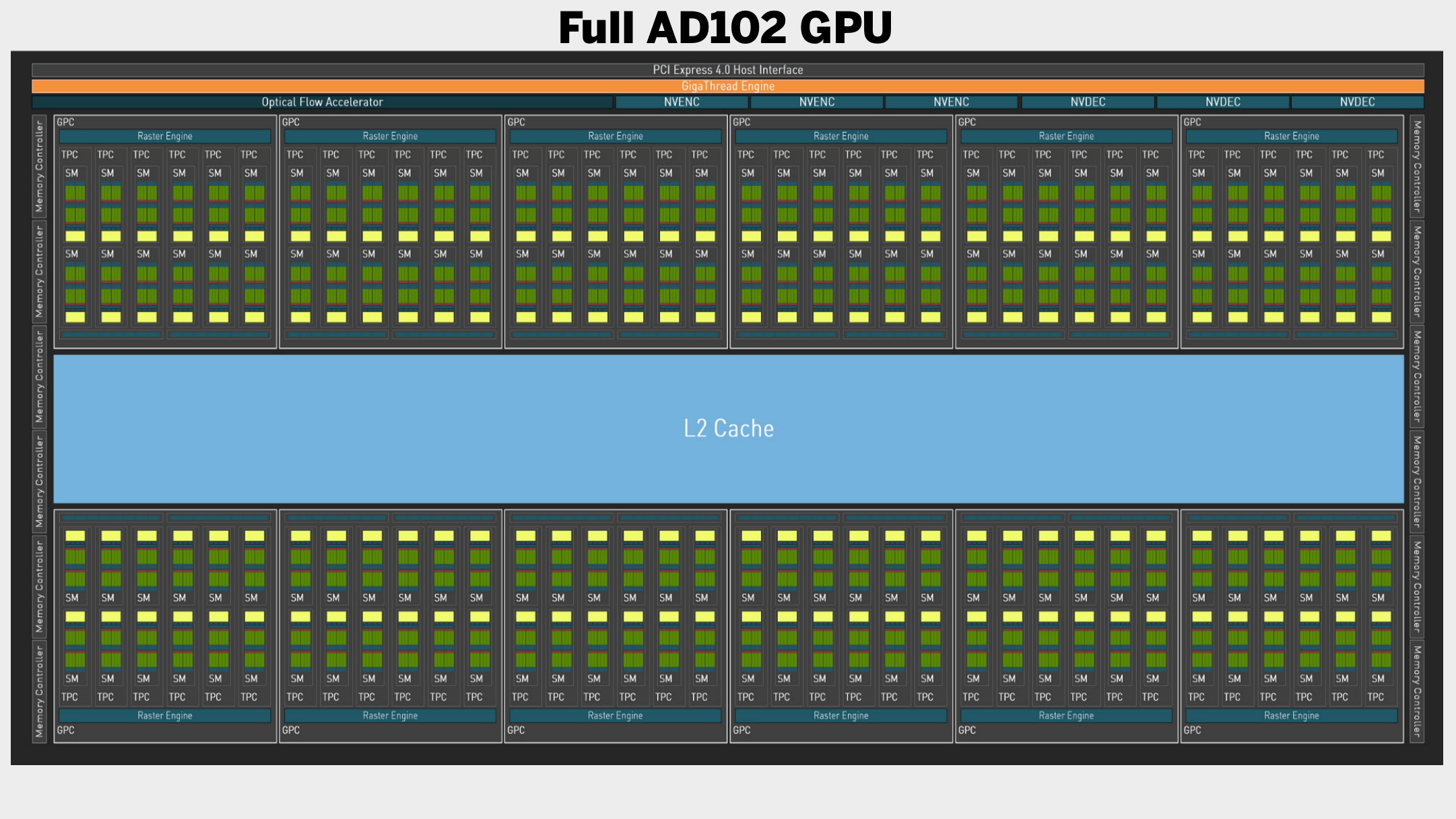

The RTX 4090 comes with the first Ada Lovelace GPU of this generation: the AD102. But it's worth noting the chip used in this flagship card is not the full core, despite its already monstrous spec sheet.

Still, at its heart are 16,384 CUDA cores arrayed across 128 streaming multiprocessors (SMs). That represents a 52% increase over the RTX 3090 Ti's GA102 GPU, which was itself the full Ampere core.

The full AD102 chip comprises 18,432 CUDA Cores and 144 SMs. That also means you're looking at 144 third-gen RT Cores and 576 fourth-gen Tensor Cores. Which I guess means there's plenty of room for an RTX 4090 Ti or even a Titan should Nvidia wish.
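All of those headline counts fall out of the SM count. Here's a minimal sketch of that arithmetic, assuming the per-SM ratios implied by the figures above (128 CUDA Cores, one RT Core and four Tensor Cores per SM); the function and constant names are just illustrative.

```python
# Per-SM unit counts implied by the figures quoted in the review.
CUDA_CORES_PER_SM = 128    # 64 dedicated FP32 lanes + 64 shared FP32/INT lanes
RT_CORES_PER_SM = 1
TENSOR_CORES_PER_SM = 4


def sm_totals(sm_count: int) -> dict:
    """Return the headline unit counts for a GPU with `sm_count` active SMs."""
    return {
        "cuda_cores": sm_count * CUDA_CORES_PER_SM,
        "rt_cores": sm_count * RT_CORES_PER_SM,
        "tensor_cores": sm_count * TENSOR_CORES_PER_SM,
    }


rtx_4090 = sm_totals(128)     # the cut-down AD102 used in the RTX 4090
full_ad102 = sm_totals(144)   # the full AD102 die
rtx_3090_ti = sm_totals(84)   # the full GA102, same per-SM ratios on Ampere

# Fractional increase in CUDA Cores, RTX 4090 over RTX 3090 Ti.
cuda_uplift = rtx_4090["cuda_cores"] / rtx_3090_ti["cuda_cores"] - 1

print(rtx_4090)
print(full_ad102)
print(f"CUDA Core increase over the RTX 3090 Ti: {cuda_uplift:.0%}")
```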

Memory hasn't changed much, again with 24GB of GDDR6X running at 21Gbps, which delivers 1,008GB/sec of memory bandwidth.
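That bandwidth figure is just the per-pin data rate multiplied by the width of the memory bus. A quick sketch, assuming the 384-bit bus from Nvidia's published spec (the bus width isn't quoted in this review, so treat it as an assumption here):

```python
def memory_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s.

    data_rate_gbps: per-pin data rate in gigabits per second.
    bus_width_bits: total memory bus width in bits (assumed, not from the review).
    """
    # Each pin moves `data_rate_gbps` gigabits per second; divide by 8 for bytes.
    return data_rate_gbps * bus_width_bits / 8


ASSUMED_BUS_WIDTH_BITS = 384  # assumption: taken from Nvidia's spec, not this review
bandwidth = memory_bandwidth_gbs(21, ASSUMED_BUS_WIDTH_BITS)
print(f"{bandwidth:,.0f} GB/s")
```

The RTX 3090 Ti runs the same memory at the same speed, which is why both cards land on the same number in the spec table.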

| | GeForce RTX 4090 | GeForce RTX 3090 Ti |
|---|---|---|
| Lithography | TSMC 4N | Samsung 8N |
| CUDA cores | 16,384 | 10,752 |
| SMs | 128 | 84 |
| RT Cores | 128 | 84 |
| Tensor Cores | 512 | 336 |
| ROPs | 176 | 112 |
| Boost clock | 2,520MHz | 1,860MHz |
| Memory | 24GB GDDR6X | 24GB GDDR6X |
| Memory speed | 21Gbps | 21Gbps |
| Memory bandwidth | 1,008GB/s | 1,008GB/s |
| L1 / L2 cache | 16,384KB / 73,728KB | 10,752KB / 6,144KB |
| Transistors | 76.3 billion | 28.3 billion |
| Die Size | 608.5mm² | 628.4mm² |
| TGP | 450W | 450W |
| Price | $1,599 / £1,699 | $1,999 / £1,999 |

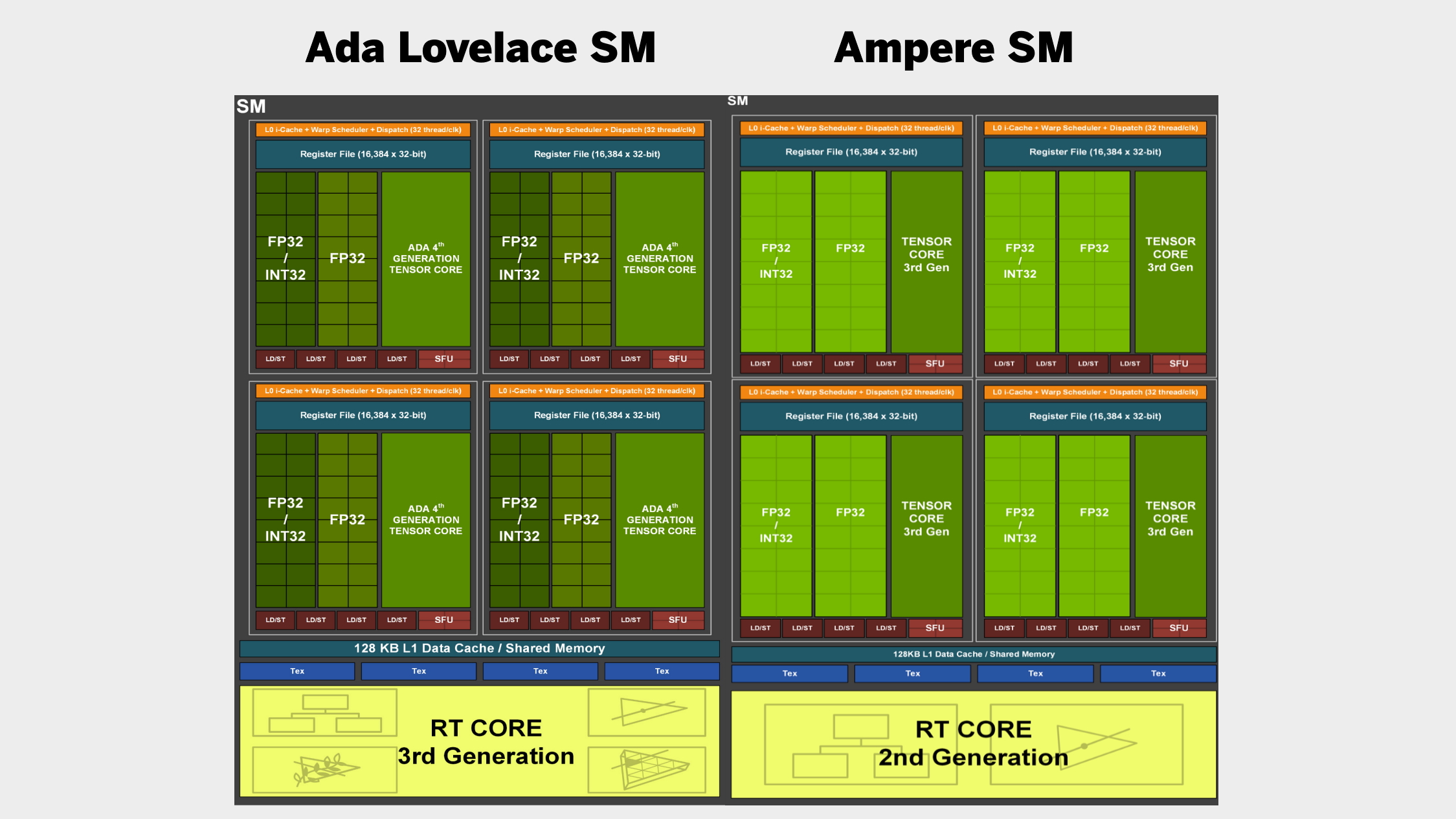

On the raw shader side of the equation, things haven't really moved that far along from the Ampere architecture either. Each SM is still using the same 64 dedicated FP32 units, but with a secondary stream of 64 units that can be split between floating point and integer calculations as necessary, the same as was introduced with Ampere.

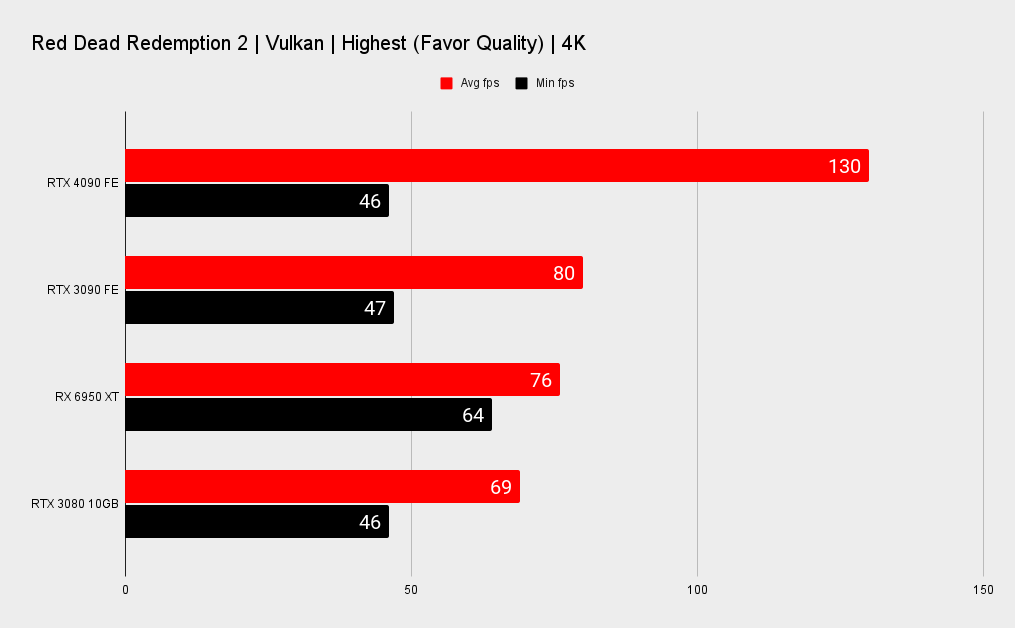

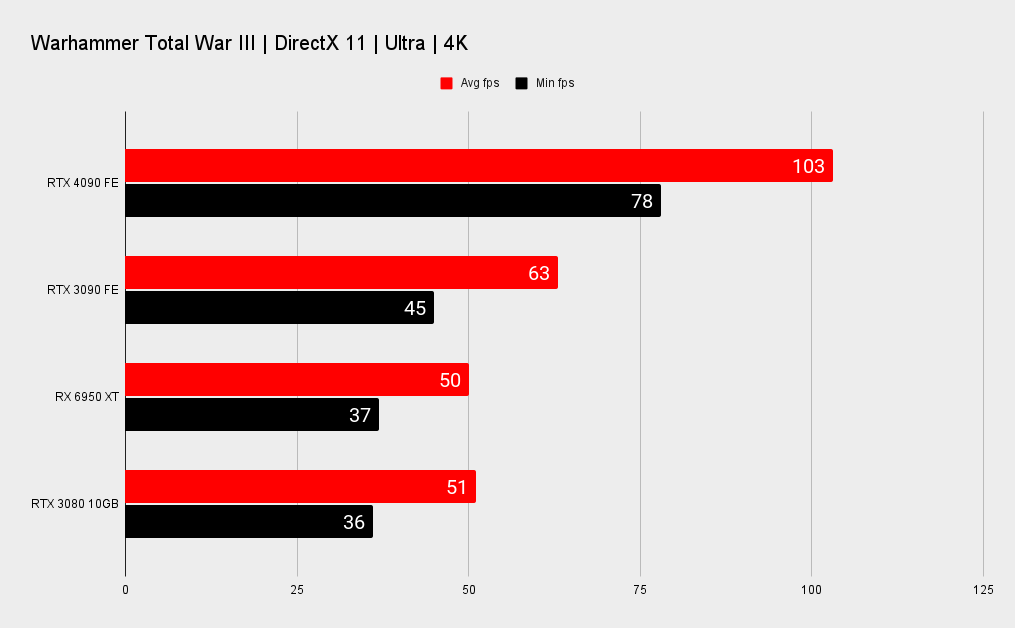

You can see how similar the two architectures are from a rasterisation perspective when looking at the relative performance difference between an RTX 3090 and RTX 4090.

If you ignore ray tracing and upscaling, the performance boost is only a little higher than you might expect from the extra CUDA Cores dropped into the AD102 GPU. That it is a 'little higher' than a commensurate increase, though, does show there are some differences at that level.

Part of that is down to the new 4N production process Nvidia is using for its Ada Lovelace GPUs. Compared with the 8N Samsung process of Ampere, the TSMC-built 4N process is said to offer either twice the performance at the same power, or half the power with the same performance.

This has meant Nvidia can be super-aggressive in terms of clock speeds, with the RTX 4090 listed with a boost clock of 2,520MHz. We've actually seen our Founders Edition card averaging 2,716MHz in our testing, which puts it almost a full 1GHz faster than the RTX 3090 of the previous generation.
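To put those clocks into shader-throughput terms, here's a back-of-the-envelope sketch of peak FP32 performance. It assumes the usual convention of counting a fused multiply-add as two floating point operations per CUDA Core per clock, which isn't spelled out in this review, and it's a theoretical ceiling rather than anything you'd measure in a game.

```python
FLOPS_PER_CORE_PER_CLOCK = 2  # assumption: one fused multiply-add counts as 2 FLOPs


def peak_fp32_tflops(cuda_cores: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS."""
    flops = cuda_cores * FLOPS_PER_CORE_PER_CLOCK * clock_mhz * 1e6
    return flops / 1e12


rated = peak_fp32_tflops(16_384, 2_520)      # RTX 4090 at its listed boost clock
observed = peak_fp32_tflops(16_384, 2_716)   # at the average clock seen in testing
ampere = peak_fp32_tflops(10_752, 1_860)     # RTX 3090 Ti at its listed boost clock

print(f"RTX 4090 (rated boost):    {rated:.1f} TFLOPS")
print(f"RTX 4090 (observed clock): {observed:.1f} TFLOPS")
print(f"RTX 3090 Ti (rated boost): {ampere:.1f} TFLOPS")
```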

And, because of that process shrink, Nvidia's engineers working with TSMC have crammed an astonishing 76.3 billion transistors into the AD102 core. Considering the 608.5mm² Ada GPU contains so many more than the 28.3 billion transistors of the GA102 silicon, it's maybe surprising that it's actually smaller than the 628.4mm² Ampere chip.

The fact Nvidia can keep on jamming this ever-increasing number of transistors into a monolithic chip, and still keep shrinking its actual die size, is testament to the power of advanced process nodes in this sphere. For reference, the RTX 2080 Ti's TU102 chip was 754mm² and held just 18.6 billion 12nm transistors.
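The transistor counts and die sizes quoted above make the density jump easy to work out. A quick sketch using only those figures (the dictionary and function names are illustrative):

```python
# (transistors in billions, die area in mm^2), as quoted in the review
CHIPS = {
    "AD102": (76.3, 608.5),
    "GA102": (28.3, 628.4),
    "TU102": (18.6, 754.0),
}


def density_mtr_per_mm2(transistors_billion: float, area_mm2: float) -> float:
    """Transistor density in millions of transistors per square millimetre."""
    return transistors_billion * 1000 / area_mm2


densities = {name: density_mtr_per_mm2(*spec) for name, spec in CHIPS.items()}
for name, value in densities.items():
    print(f"{name}: {value:.1f} million transistors per mm^2")

# Percentage increase in transistor count, AD102 over GA102.
transistor_increase_pct = (CHIPS["AD102"][0] / CHIPS["GA102"][0] - 1) * 100
print(f"AD102 has {transistor_increase_pct:.0f}% more transistors than GA102")
```

On these numbers AD102 packs in close to three times the transistors per square millimetre of GA102, and around five times that of TU102.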

That doesn't mean the monolithic GPU can continue forever, unchecked. GPU rival, AMD, is promising to shift to graphics compute chiplets for its new RDNA 3 chips launching in November. Given the AD102 GPU's complexity is second only to the 80 billion transistors of the advanced 814mm² Nvidia Hopper silicon, it's sure to be an expensive chip to produce. The smaller compute chiplets, however, ought to bring costs down, and drive yields up.

For now, though, the brute force monolithic approach is still paying off for Nvidia.

What else do you do when you want more speed and you've already packed in as many advanced transistors as you can? You stick some more cache memory into the package. This is something AMD has done to great effect with its Infinity Cache and, while Nvidia isn't necessarily going with some fancy new branded approach, it is dropping a huge chunk more L2 cache into the Ada core.

The previous generation, GA102, contained 6,144KB of shared L2 cache, which sat in the middle of its SMs, and Ada is increasing that by 16 times to create a pool of 98,304KB of L2 for the AD102 SMs to play with. For the RTX 4090 version of the chip that drops to 73,728KB, but that's still a lot of cache. The amount of L1 hasn't changed per SM, but because there are now so many more SMs inside the chip in total, that also means there is a greater amount of L1 cache compared with Ampere, too.
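The cache numbers can be sanity-checked the same way. This sketch assumes 128KB of L1 per SM on both architectures, which is what the totals in the spec table work out to when divided by the SM counts; the names are illustrative.

```python
L1_KB_PER_SM = 128  # implied by the table: 16,384KB / 128 SMs and 10,752KB / 84 SMs


def total_l1_kb(sm_count: int) -> int:
    """Total L1 cache across all active SMs, in KB."""
    return sm_count * L1_KB_PER_SM


GA102_L2_KB = 6_144
FULL_AD102_L2_KB = GA102_L2_KB * 16   # the "16 times" increase for the full chip
RTX_4090_L2_KB = 73_728               # the cut-down figure for the RTX 4090

print(f"RTX 4090 L1:    {total_l1_kb(128):,}KB")
print(f"RTX 3090 Ti L1: {total_l1_kb(84):,}KB")
print(f"Full AD102 L2:  {FULL_AD102_L2_KB:,}KB")
print(f"RTX 4090 L2 is {RTX_4090_L2_KB / FULL_AD102_L2_KB:.0%} of the full chip's, "
      f"or {RTX_4090_L2_KB // GA102_L2_KB}x GA102's")
```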

But rasterisation isn't everything for a GPU these days. However you might have felt about it when Turing first introduced real time ray tracing in games, it has now become almost a standard part of PC gaming. The same can be said of upscaling, too, so how an architecture approaches these two further pillars of PC gaming is vital to understanding the design as a whole.

And now that all three graphics card manufacturers (how weird it feels to be talking about a triumvirate now…) are focusing on ray tracing performance as well as the intricacies of upscaling technologies, it's become a whole new theatre of the war between them.

This is actually where the real changes have occurred in the Ada streaming multiprocessor. The rasterised components may be very similar, but the third-generation RT Core has seen a big change. The previous two generations of RT Core contained a pair of dedicated units—the Box Intersection Engine and Triangle Intersection Engine—which pulled a lot of the RT workload from the rest of the SM when calculating the bounding volume hierarchy (BVH) algorithm at the heart of ray tracing.
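For a feel of the kind of work a box intersection unit takes off the SM, here's a software sketch of a ray versus axis-aligned bounding box test using the classic 'slab' method. This is a textbook technique written out for illustration, not a description of how Nvidia's hardware implements it, and the function name is hypothetical.

```python
import math


def ray_hits_box(origin, direction, box_min, box_max) -> bool:
    """Slab test: does a ray starting at `origin` hit the axis-aligned box?

    All arguments are 3-element sequences of floats (x, y, z).
    """
    t_near, t_far = 0.0, math.inf  # the ray only travels forwards (t >= 0)
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray runs parallel to this pair of planes: it can only hit
            # if its origin already sits between them.
            if o < lo or o > hi:
                return False
            continue
        # Distances along the ray to the two planes bounding this axis.
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        # Shrink the interval in which the ray is inside every slab so far.
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return False
    return True


unit_box = ((-1.0, -1.0, -1.0), (1.0, 1.0, 1.0))
print(ray_hits_box((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), *unit_box))   # aimed at the box
print(ray_hits_box((5.0, 0.0, -5.0), (0.0, 0.0, 1.0), *unit_box))   # passes alongside it
```

Walking a BVH means running a test like this at every node a ray visits, and only dropping down to per-triangle tests inside the boxes it actually hits, which is why dedicated hardware for it pays off.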

Ada introduces another two discrete units to offload even more work from the SM: the Opacity Micromap Engine and Displaced Micro-Mesh Engine. The first drastically accelerates calculations when dealing with transparencies in a scene, and the second is designed to break geometrically complex objects down to reduce the time it takes to go through the whole BVH calculation.

Added to this is something Nvidia is calling "as big an innovation for GPUs as out-of-order execution was for CPUs back in the 1990s." Shader Execution Reordering (SER) has been created to switch up shading workloads, allowing the Ada chips to greatly improve the efficiency of the graphics pipeline when it comes to ray tracing by rescheduling tasks on the fly.

Intel has been working on a similar feature for its Alchemist GPUs, the Thread Sorting Unit, to help with diverging rays in ray traced scenes. And its setup reportedly doesn't require developer input. For now, Nvidia requires a specific API to integrate SER into a developer's game code, but Nvidia says it's working with Microsoft, and others, to introduce the feature into standard graphics APIs such as DirectX 12 and Vulkan.
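The idea behind reordering is easier to see with a toy example. The sketch below is purely conceptual and is not Nvidia's SER API or its hardware algorithm: it assumes ray hits arrive in random material order, that threads are grouped into batches of 32 that run most efficiently when they all execute the same shader, and it simply sorts the hits by a hypothetical material ID to show how much less divergent each batch becomes.

```python
import random

WARP_SIZE = 32  # threads that execute together in lockstep on Nvidia GPUs


def shaders_per_warp(material_ids):
    """Count how many distinct shaders each group of WARP_SIZE hits needs."""
    warps = [material_ids[i:i + WARP_SIZE]
             for i in range(0, len(material_ids), WARP_SIZE)]
    return [len(set(warp)) for warp in warps]


random.seed(0)
# Hypothetical workload: 256 ray hits scattered across 8 different materials.
hits = [random.randrange(8) for _ in range(256)]

before = shaders_per_warp(hits)           # hits shaded in arrival order
after = shaders_per_warp(sorted(hits))    # hits regrouped by material first

print("distinct shaders per warp, arrival order:", before)
print("distinct shaders per warp, reordered:    ", after)
```

With the hits regrouped, most batches only need one or two shaders rather than nearly all of them, which is the sort of coherence gain a reordering stage is after.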

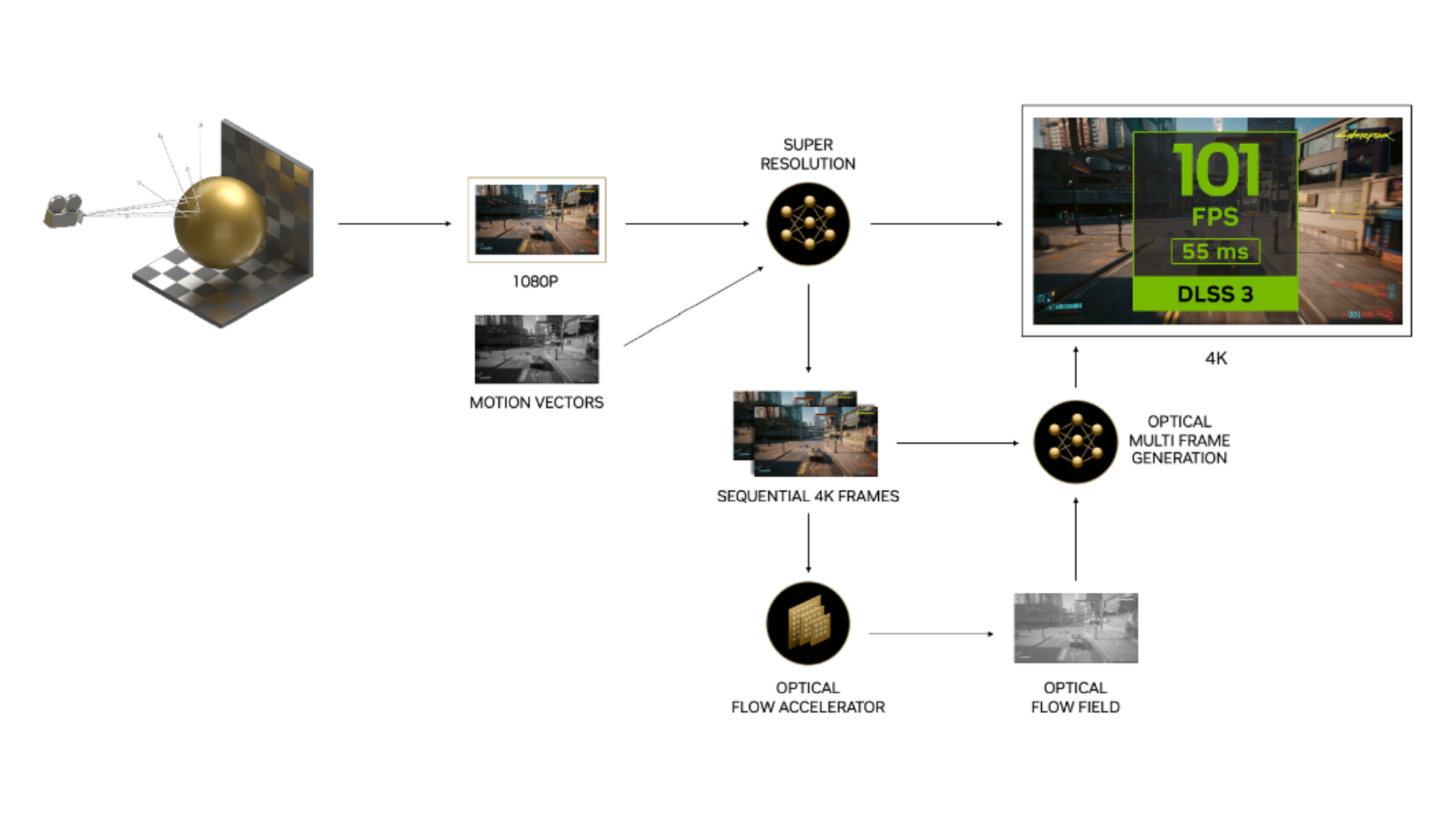

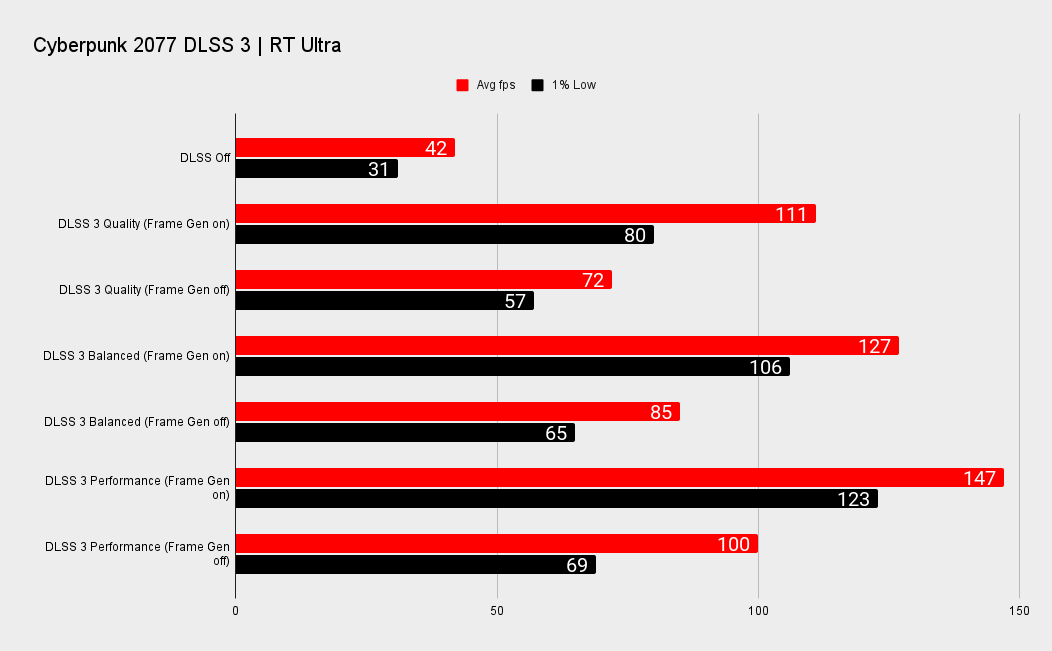

Lastly, we come to DLSS 3, with its ace in the hole: Frame Generation. Yes, DLSS 3 is now not just going to be upscaling, it's going to be creating entire game frames all by itself. Not necessarily from scratch, but by using the power of AI and deep learning to take a best guess at what the next frame would look like if you were actually going to render it. It then slots this AI-generated frame in before the next genuinely rendered frame.

It's voodoo, it's black magic, it's the dark arts, and it's rather magnificent. It uses enhanced hardware units inside the fourth-gen Tensor Cores, called Optical Flow Units, to make all those in-flight calculations. It then takes advantage of a neural network to pull all the data from the previous frames, the motion vectors in a scene, and the Optical Flow Unit, together to help create a whole new frame, one that is also able to include ray tracing and post processing effects.

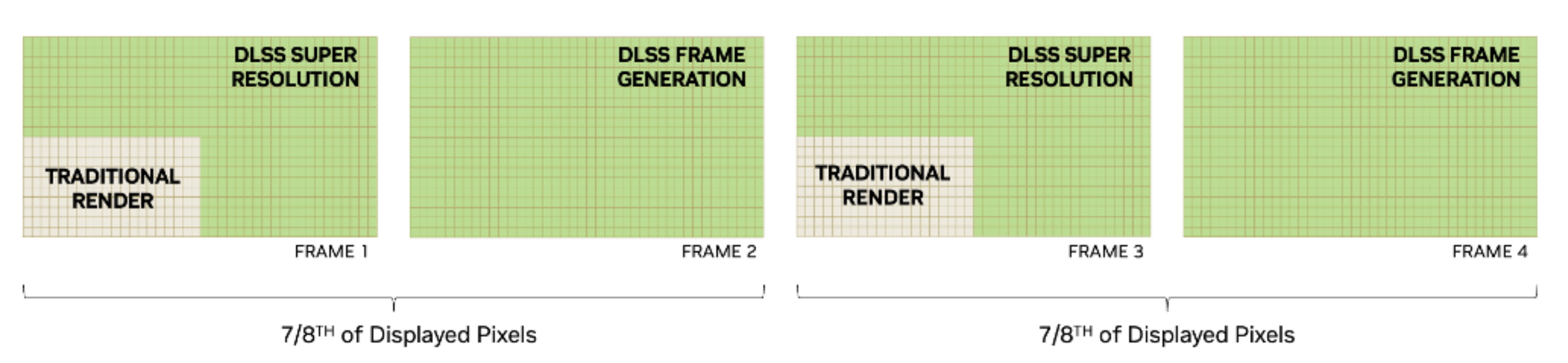

Working in conjunction with DLSS upscaling (now just called DLSS Super Resolution), Nvidia states that in certain circumstances AI will be generating three-fourths of an initial frame through upscaling, and then the entirety of a second frame using Frame Generation. In total then it estimates the AI is creating seven-eighths of all the displayed pixels.
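The seven-eighths figure is simple to check. This sketch assumes the 'three-fourths' case corresponds to rendering at half the output resolution on each axis, so a quarter of the pixels, which is an assumption on my part rather than something Nvidia's statement above spells out; the function name is illustrative.

```python
from fractions import Fraction


def ai_pixel_share(upscale_factor_per_axis: int = 2) -> Fraction:
    """Share of displayed pixels produced by AI across one rendered/generated pair."""
    # Frame 1: rendered at reduced resolution, then upscaled.
    rendered_share = Fraction(1, upscale_factor_per_axis ** 2)  # 1/4 when factor is 2
    upscaled_frame_ai = 1 - rendered_share                      # 3/4 comes from upscaling
    # Frame 2: produced entirely by Frame Generation.
    generated_frame_ai = Fraction(1)
    # Average over the two frames that reach the screen.
    return (upscaled_frame_ai + generated_frame_ai) / 2


print(ai_pixel_share())
```

Put another way, only one in every eight pixels you see has gone through the traditional rendering pipeline.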

And that just blows my little gamer mind.

Nvidia RTX 4090 performance

How does the RTX 4090 perform?

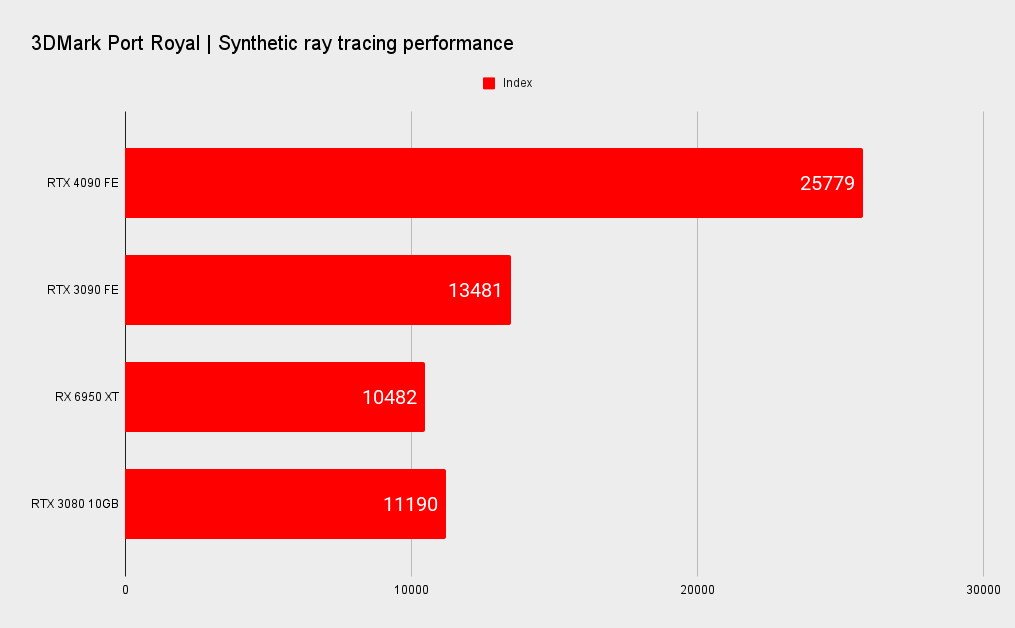

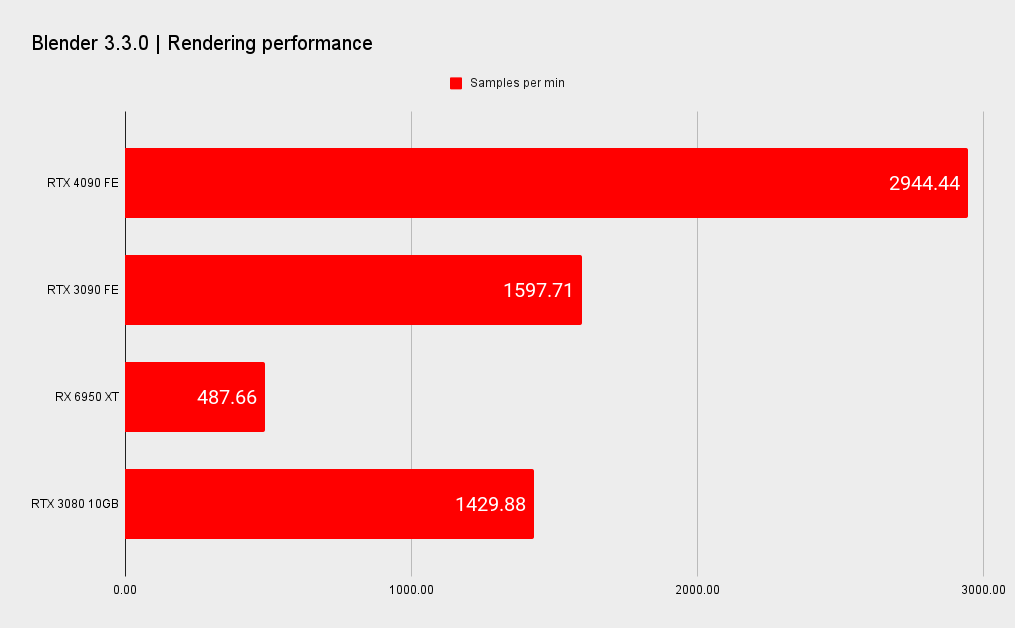

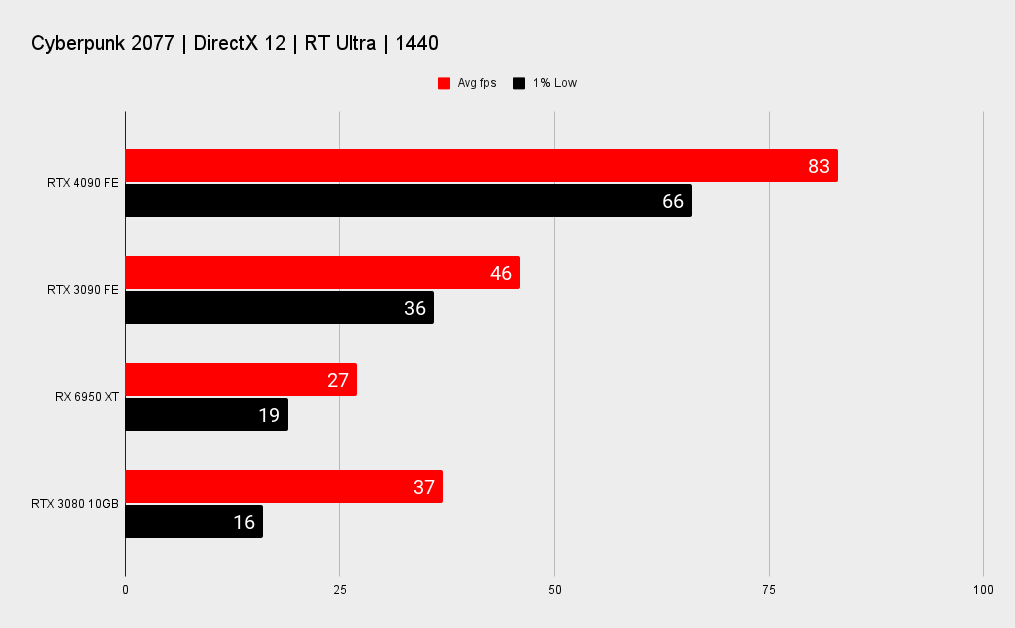

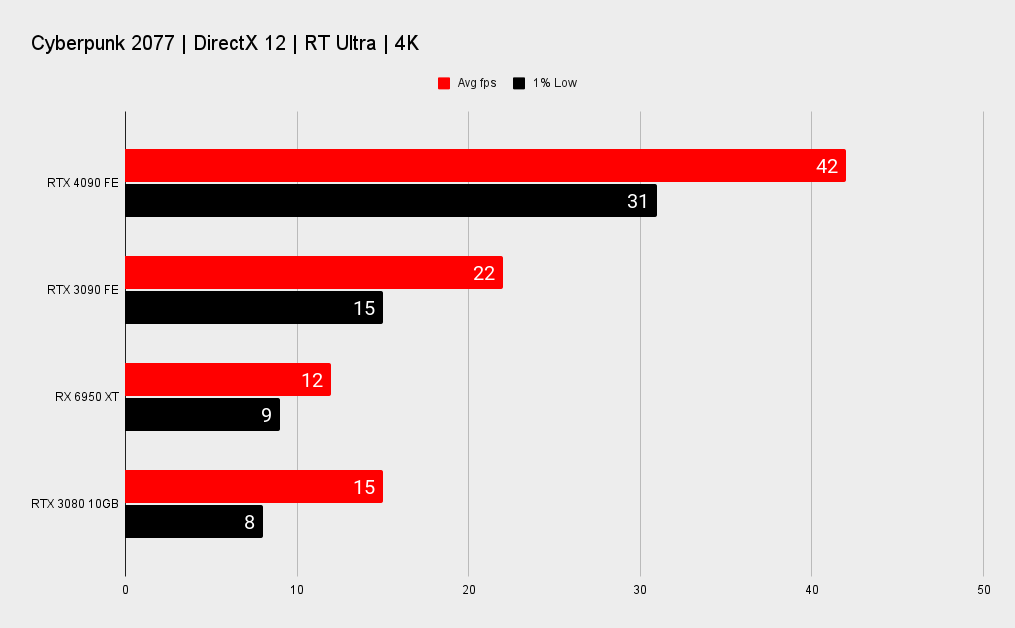

Look, it's quick, okay. With everything turned on, with DLSS 3 and Frame Generation working its magic, the RTX 4090 is monumentally faster than the RTX 3090 that came before it. The straight 3DMark Time Spy Extreme score is twice that of the big Ampere core, and before ray tracing or DLSS come into it, the raw silicon offers twice the 4K frame rate in Cyberpunk 2077, too.

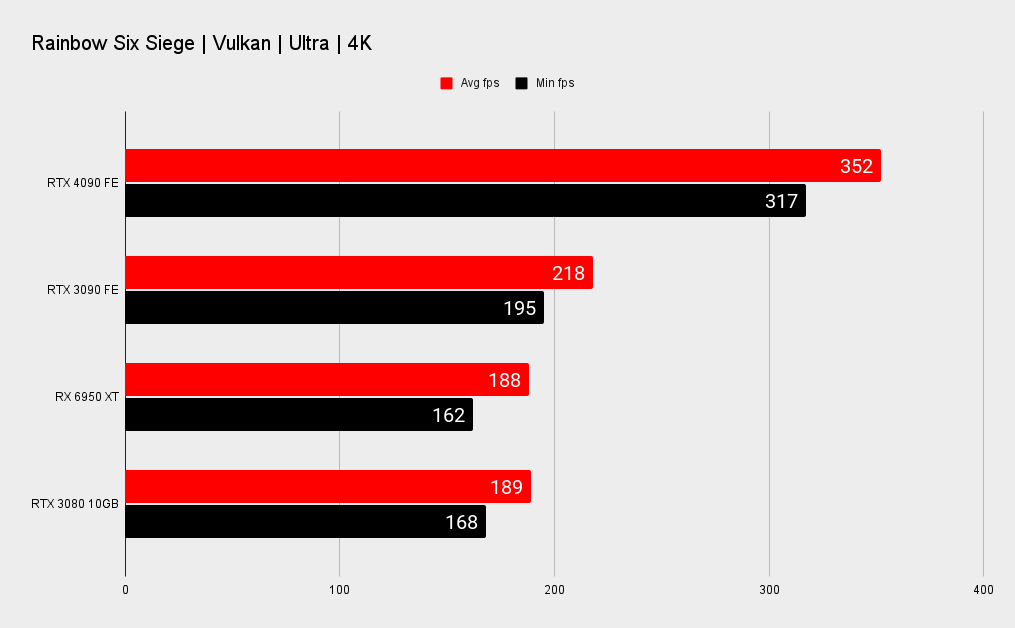

But if you're not rocking a 4K monitor then you really ought to think twice before dropping $1,600 on a new GPU without upgrading your screen at the same time. That's because the RTX 4090 is so speedy when it comes to pure rasterising that we're back to the old days of being CPU bound in a huge number of games.

Synthetic performance

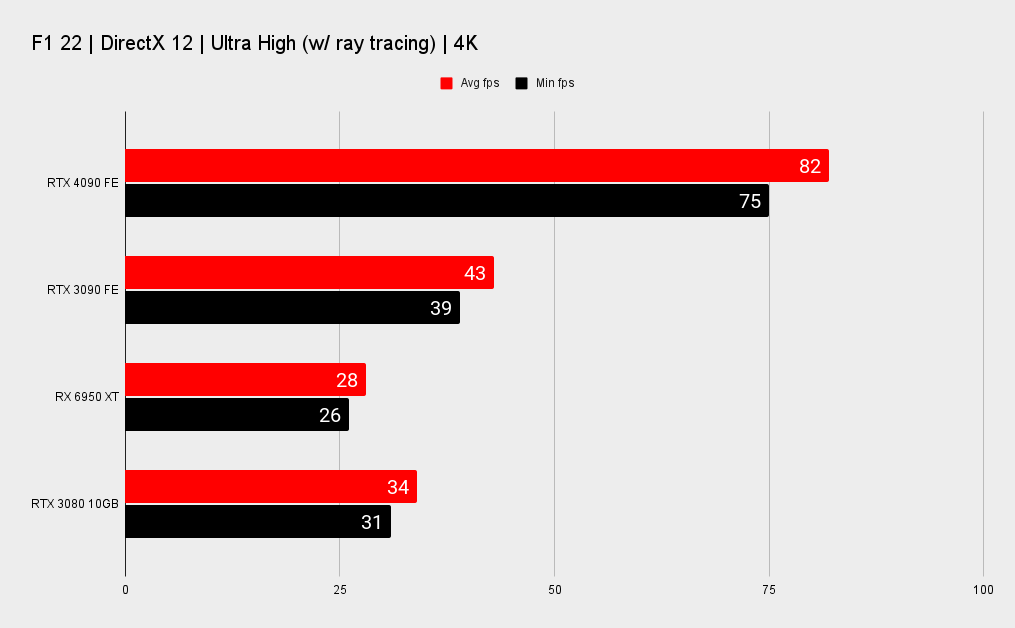

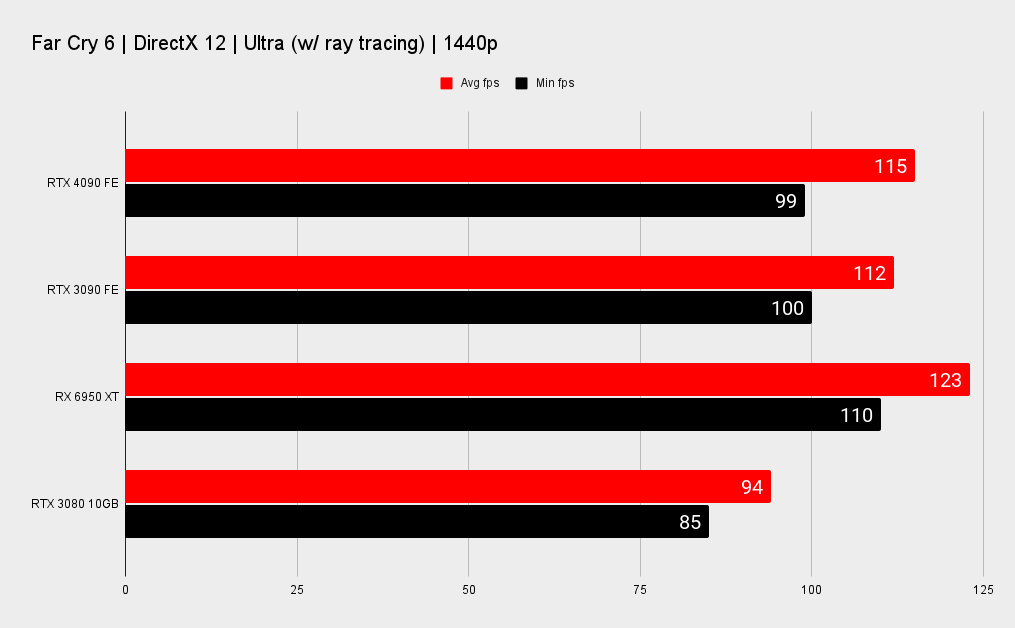

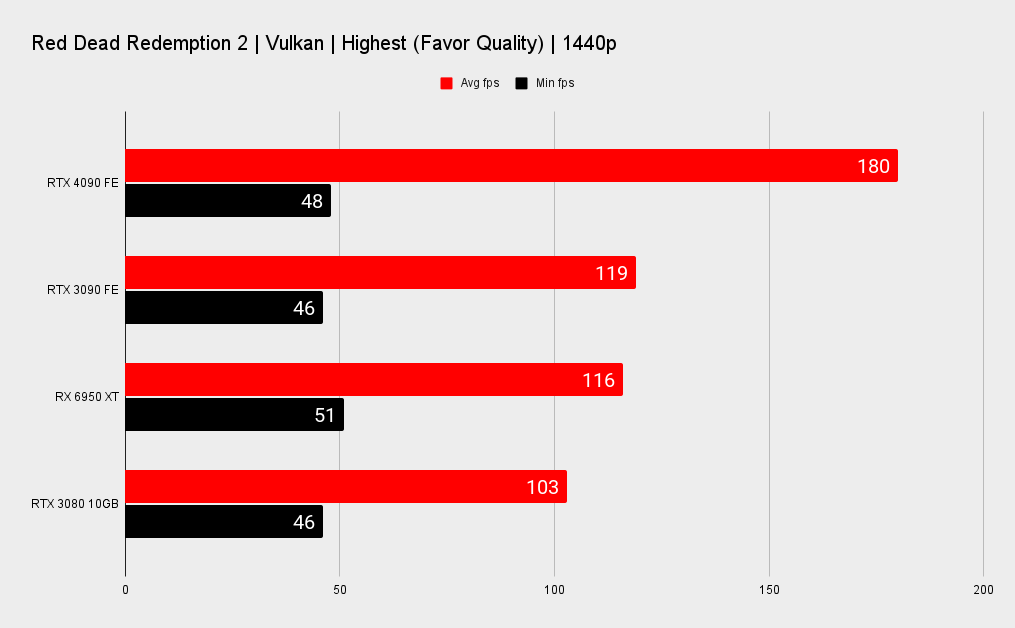

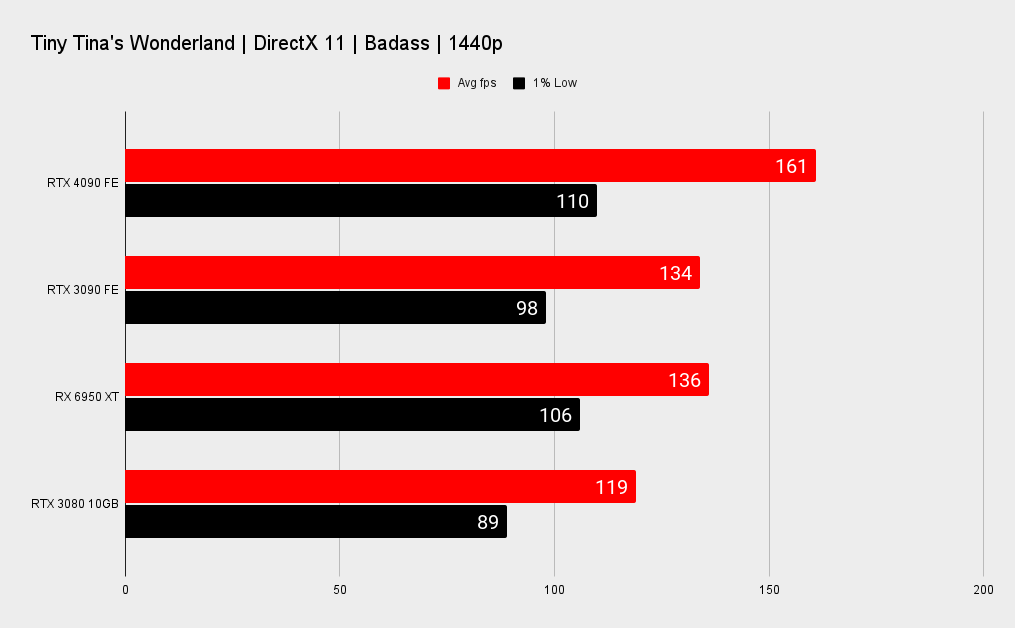

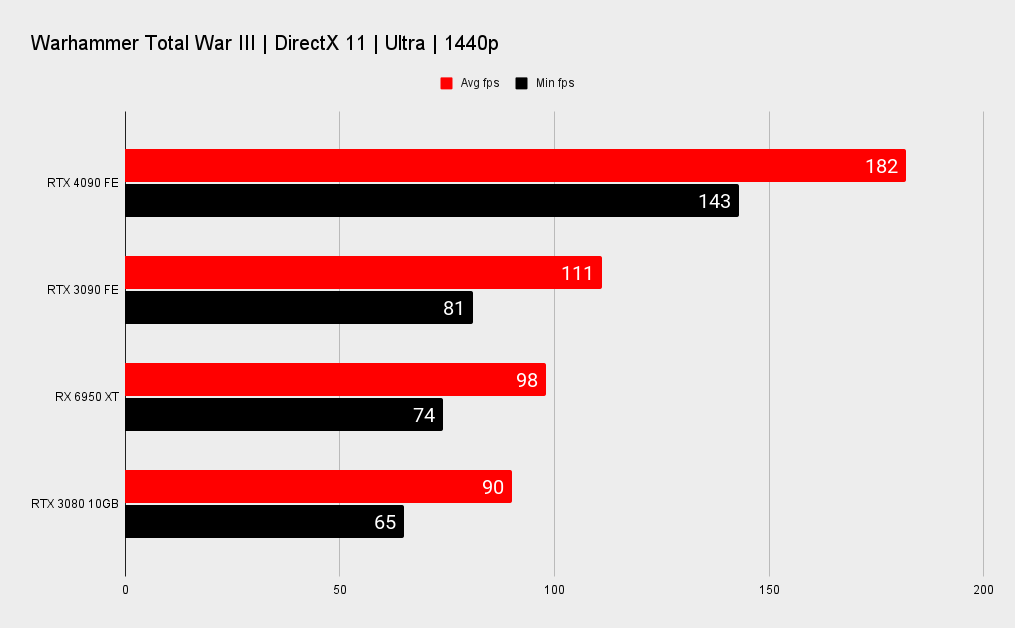

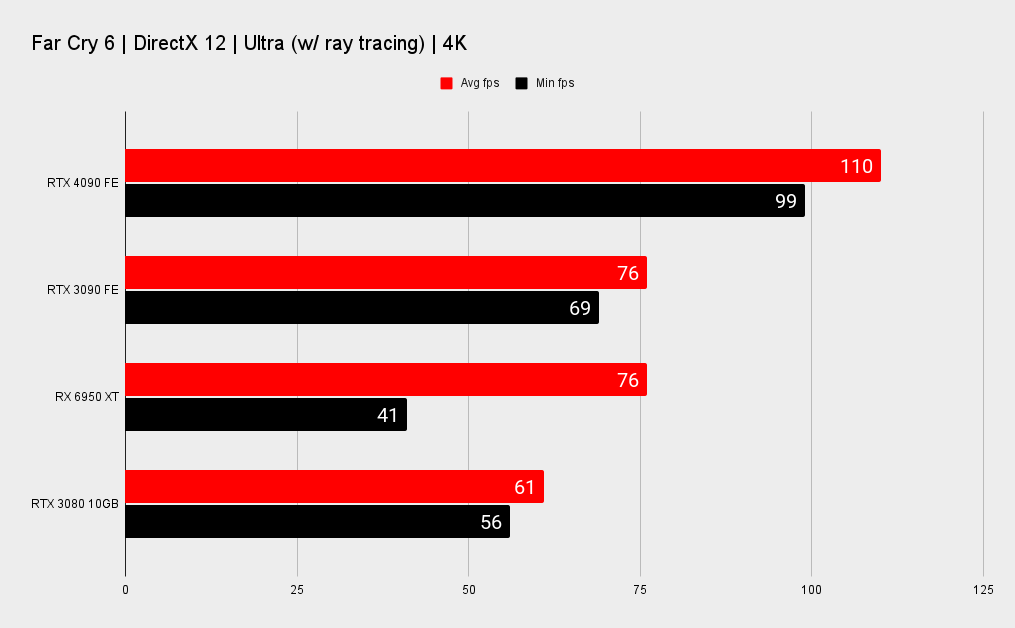

Therefore, the performance boost over the previous generation is often significantly lower when you look at the relative 1080p or even 1440p gaming performance. In Far Cry 6 at those lower resolutions the RTX 4090 is only 3% faster than the RTX 3090, and between 1080p and 4K there is only a seven frames per second delta.

In fact, at 1080p and 1440p the RX 6950 XT is actually the faster gaming card.

That's a bit of an outlier in terms of just how constrained Far Cry 6 is, but it is still representative of a wider trend in comparative gaming performance at the lower resolutions. Basically, if you had your heart set on nailing 360 fps in your favourite games on your 360Hz 1080p gaming monitor then you're barking up the wrong idiom.
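A simple way to picture being CPU bound is to treat each frame as taking as long as the slower of the CPU and GPU. The sketch below uses entirely hypothetical frame times, not measurements from our testing, to show why a GPU that's twice as fast can deliver nothing extra at 1080p and still double the frame rate at 4K.

```python
def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Frame rate when each frame waits on whichever of CPU or GPU is slower."""
    return 1000.0 / max(cpu_ms, gpu_ms)


CPU_MS = 6.0  # hypothetical CPU cost per frame, roughly independent of resolution

# Hypothetical GPU frame times for an older card at two resolutions.
old_gpu_ms = {"1080p": 5.0, "4K": 16.0}
# A hypothetical new card that is exactly twice as fast at everything.
new_gpu_ms = {res: ms / 2 for res, ms in old_gpu_ms.items()}

for res in old_gpu_ms:
    before = fps(CPU_MS, old_gpu_ms[res])
    after = fps(CPU_MS, new_gpu_ms[res])
    uplift = (after / before - 1) * 100
    print(f"{res}: {before:.1f} fps -> {after:.1f} fps ({uplift:.0f}% uplift)")
```

In this toy model the 1080p result never moves because the CPU's 6ms sets the ceiling both times, while at 4K the GPU is the slower component before and after, so the whole speed-up shows through.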

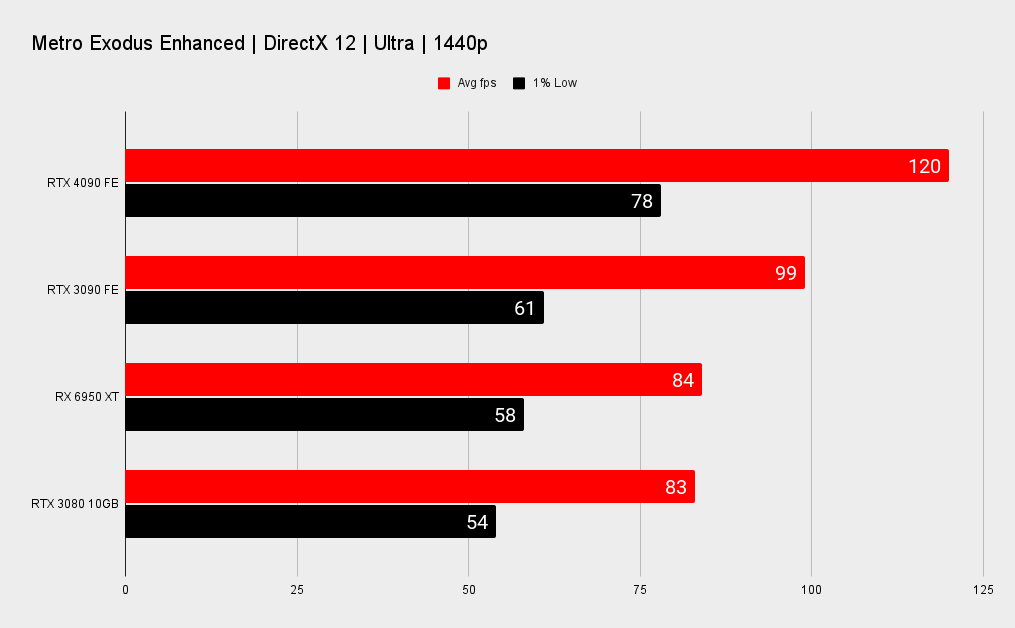

1440p gaming performance

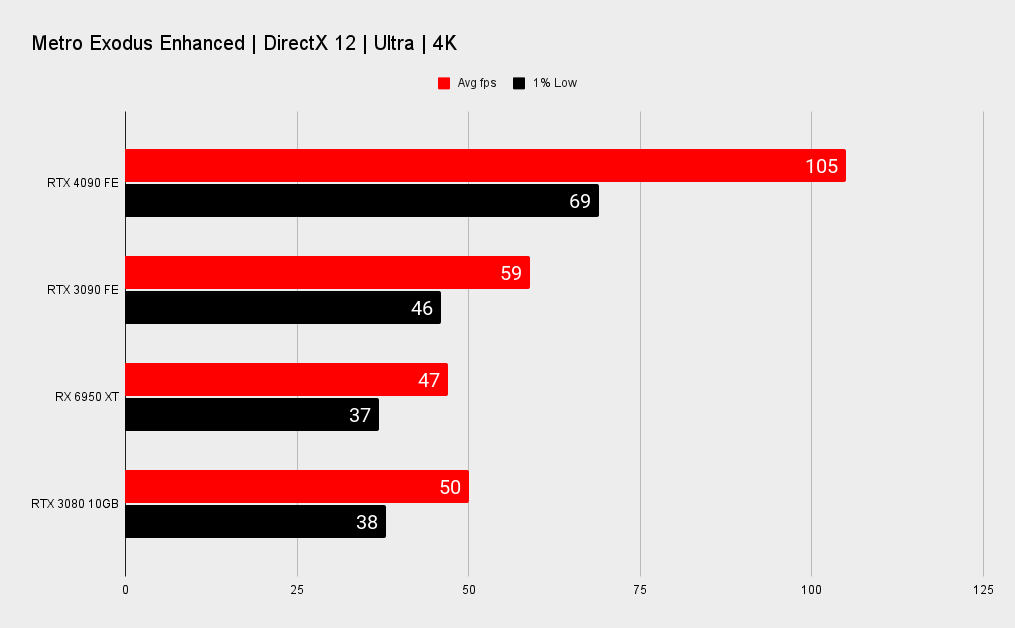

At 4K the performance uplift, generation-on-generation, is pretty spectacular. Ignoring Far Cry 6's limited gaming performance, you're looking at a minimum 61% higher performance over the RTX 3090. That chimes well with the increase in dedicated rasterising hardware, the increased clock speed, and more cache. Throw in some benchmarks with ray tracing enabled and you can be looking at 91% higher frame rates at 4K.

4K gaming performance

But rasterisation is only part of modern games; upscaling is now an absolutely integral part of a GPU's performance. We use our comparative testing to highlight raw architectural differences between graphics card silicon, and so run without upscaling enabled. It's almost impossible otherwise to grab apples vs. apples performance comparisons.

It's important to see just what upscaling can deliver, however, especially with something so potentially game-changing as DLSS 3 with Frame Generation. And with a graphically intensive game such as Cyberpunk 2077 able to be played at 4K RT Ultra settings at a frame rate of 147 fps, it's easy to see the potential it offers.

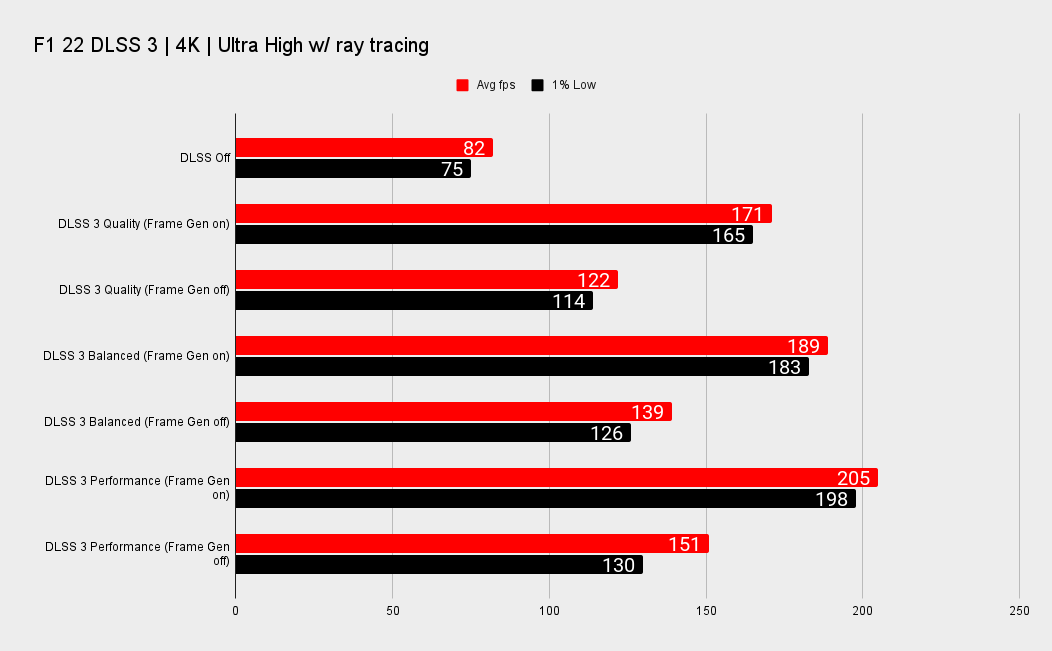

You're looking at a performance uplift over the RTX 3090 Ti, when that card's running in Cyberpunk 2077's DLSS 4K Performance mode itself, of around 145%. When just looking at the RTX 4090 on its own, compared with its sans-DLSS performance, we're seeing a 250% performance boost. That's all lower for F1 22, where there is definite CPU limiting—even with our Core i9 12900K—but you will still see a performance increase of up to 51% over the RTX 3090 Ti with DLSS enabled.
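Percentage uplifts this large can be hard to parse, so here's a small sketch converting them into plain multipliers. The implied baselines it prints are derived, not measured, and assume the percentages quoted above are relative to the 147 fps Cyberpunk 2077 figure; the function names are illustrative.

```python
def multiplier(percent_increase: float) -> float:
    """Convert 'X% higher' into a plain 'N times' multiplier."""
    return 1 + percent_increase / 100


def implied_baseline_fps(boosted_fps: float, percent_increase: float) -> float:
    """Frame rate the comparison point would need for the quoted uplift to hold."""
    return boosted_fps / multiplier(percent_increase)


DLSS3_FPS = 147  # Cyberpunk 2077, 4K RT Ultra with DLSS 3, as quoted in the review

print(f"250% boost = {multiplier(250):.2f}x, "
      f"implying roughly {implied_baseline_fps(DLSS3_FPS, 250):.0f} fps without DLSS")
print(f"145% uplift = {multiplier(145):.2f}x, "
      f"implying roughly {implied_baseline_fps(DLSS3_FPS, 145):.0f} fps on the RTX 3090 Ti")
```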

DLSS performance

Again, if you just take the RTX 4090 running without upscaling compared with it enabled at 4K you're then looking at a 150% increase in frame rates.

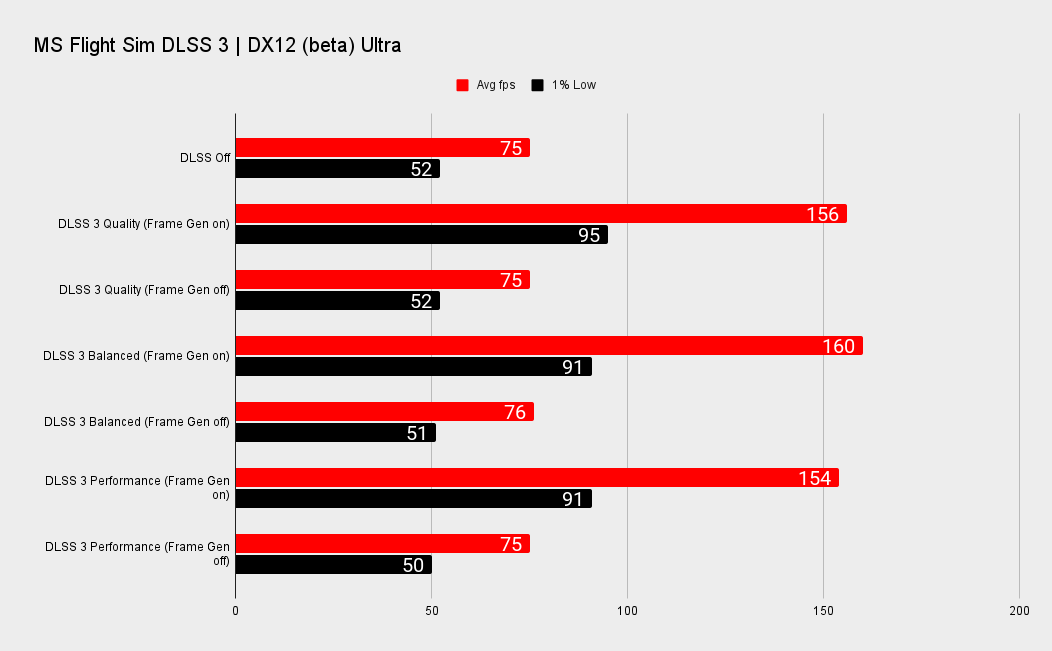

On MS Flight Sim, which we've also tested with an early access build supporting DLSS 3, that incredibly CPU bound game responds unbelievably well to Frame Generation. In fact, because it's so CPU limited there is no actual difference between running with or without DLSS enabled if you don't have Frame Generation running. But when you do run with those faux frames in place you will see an easy doubling of stock performance, to the tune of 113% higher in our testing.

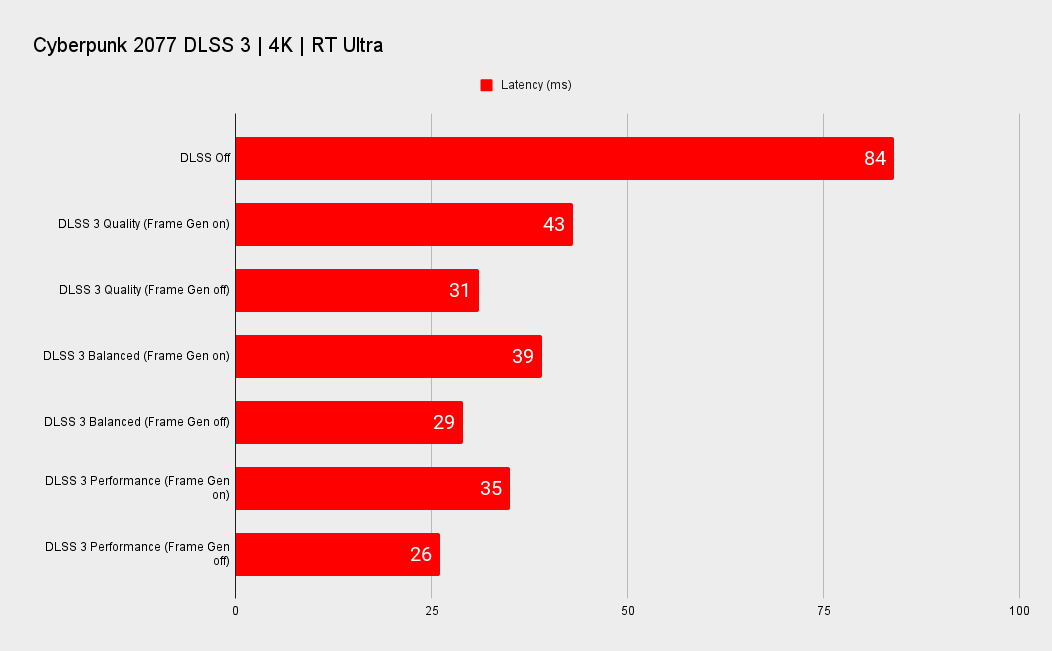

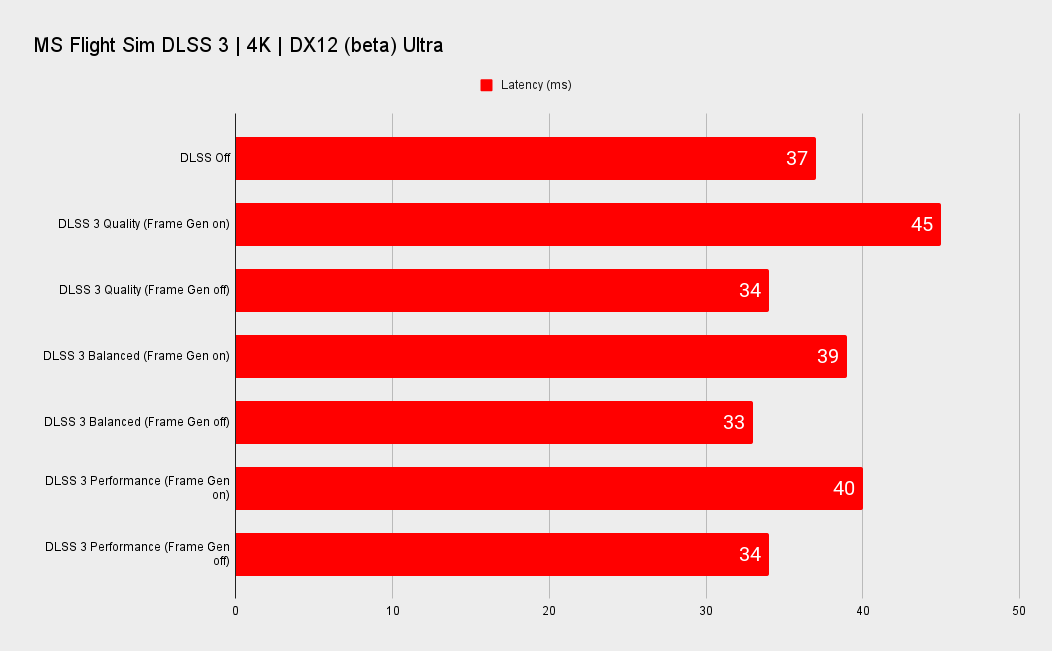

The other interesting thing to note is that Nvidia has decoupled Frame Generation from DLSS Super Resolution (essentially standard DLSS), and that's because interpolating frames does increase latency.

Just enabling DLSS on its own, however, will still radically decrease latency, and so with the minimal increase that enabling Frame Generation adds on you're still better off than when running at native resolution. That's also because Nvidia Reflex has been made an integral part of DLSS 3, but still, if you're looking for the lowest latency for competitive gaming, then not having Frame Generation on will be the ideal.

But for singleplayer gaming, Frame Generation is stunning. For me, it looks better than a more blurry, low frame rate scene, and cleans up the sometimes-aliased look of a straight DLSS Super Resolution image.

Personally, I'm going to be turning Frame Generation on whenever I can.

Which admittedly won't be that often to begin with. It will take time for developers to jump on board the new upscaling magic, however easy Nvidia says it is to implement. It is also restricted to Ada Lovelace GPUs, which means the $1,600 RTX 4090 at launch, and then the $1,200 RTX 4080 16GB and $900 RTX 4080 12GB following in November.

In other words, it's not going to be available to the vast majority of gamers until Nvidia decides it wants to launch some actually affordable Ada GPUs, the very cards that might arguably benefit more from such performance enhancements.

Power and thermals

CPU: Intel Core i9 12900K

Motherboard: Asus ROG Z690 Maximus Hero

Cooler: Corsair H100i RGB

RAM: 32GB G.Skill Trident Z5 RGB DDR5-5600

Storage: 1TB WD Black SN850, 4TB Sabrent Rocket 4Q

PSU: Seasonic Prime TX 1600W

OS: Windows 11 22H2

Chassis: DimasTech Mini V2

Monitor: Dough Spectrum ES07D03

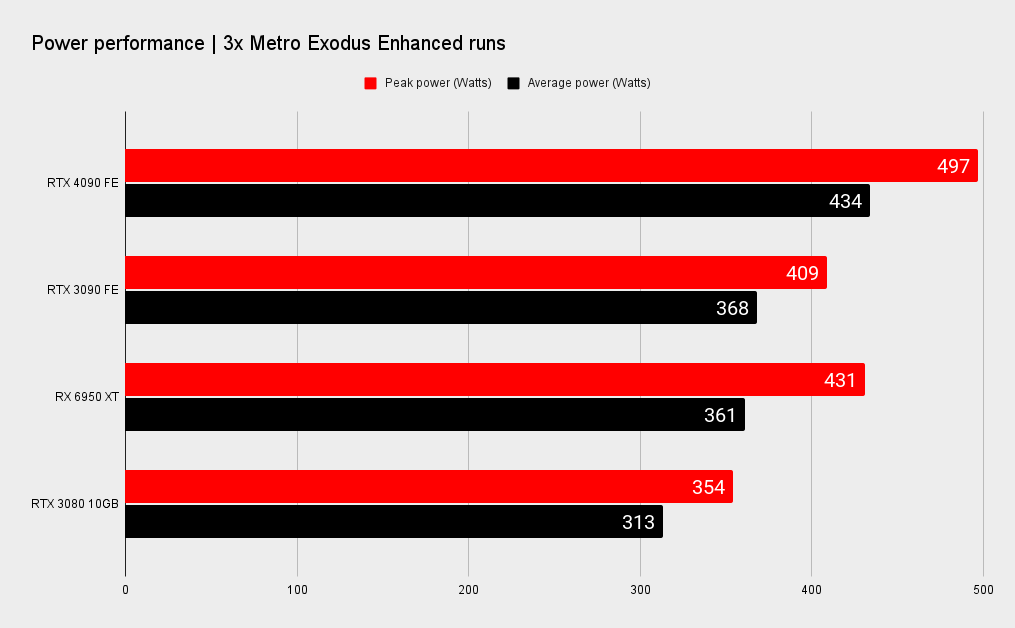

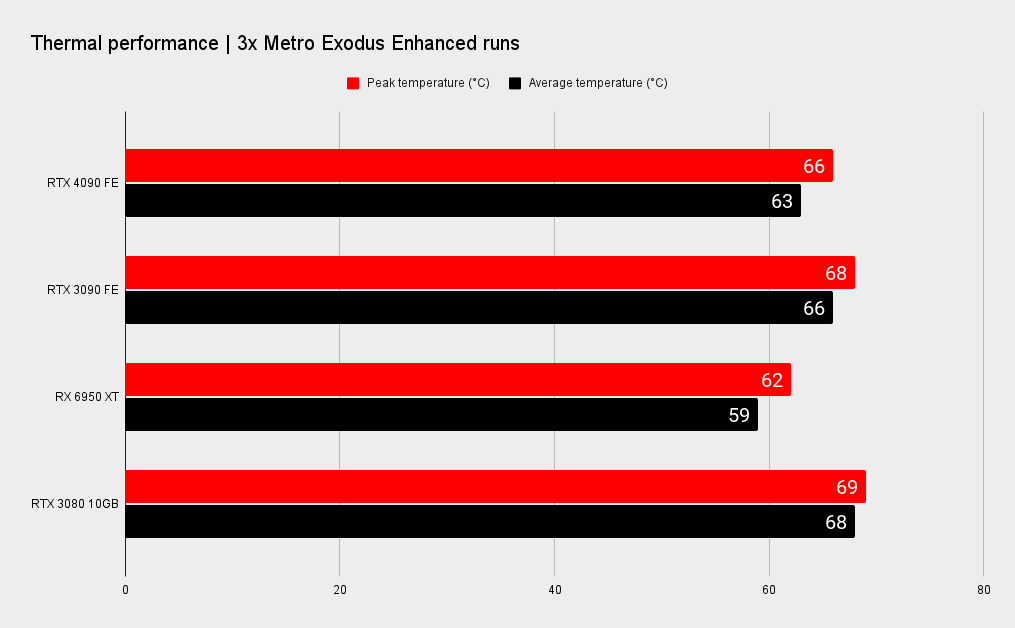

And what of power? Well, it almost hit 500W running at stock speeds. But then that's the way modern GPUs have been going—just look at how much power the otherwise efficient RDNA 2 architecture demands when used in the RX 6950 XT—and it's definitely worth noting that's around the same level as the previous RTX 3090 Ti, too. Considering the performance increase, the negligible increase in power draw speaks to the efficient 4N process.

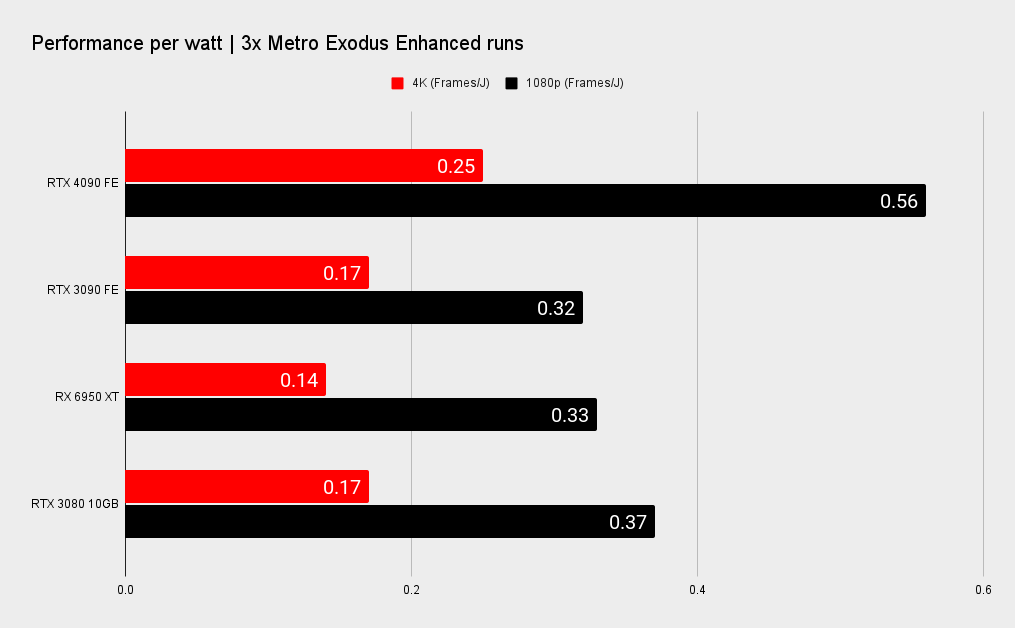

The increase in frame rates does also mean that in terms of performance per watt the RTX 4090 is the most efficient modern GPU on the market. Which seems a weird thing to say about a card that was originally rumoured to be more like a 600W TGP option.

Nvidia RTX 4090 analysis

How does the RTX 4090 stack up?

There really is no denying that the RTX 4090, the vanguard of the coming fleet of Ada Lovelace GPUs, is a fantastically powerful graphics card. It's ludicrously sized, for sure, which helps both the power delivery and thermal metrics. But it's also a prohibitively priced card for our first introduction to the next generation of GeForce GPUs.

Sure, it's only $100 more than the RTX 3090 was at launch, and $400 less than the RTX 3090 Ti, which could even make it the best value RTX 40-series GPU considering the amount of graphics silicon on offer. But previous generations have given the rest of us PC gamers an 'in' to the newest range of cards, even if they later introduced ultra-enthusiast GPUs for the special people, too.

But the entire announced RTX 40-series is made of ultra-enthusiast cards, with the cheapest being a third-tier GPU—a distinctly separate AD104 GPU, and not just a cut-down AD102 or AD103—coming in with a nominal $899 price tag. Though as the 12GB version of the RTX 4080 doesn't get a Founders Edition I'll be surprised if we don't see $1,000+ versions from AIBs at launch.

That all means no matter how impressed I am with the technical achievements baked into the Ada Lovelace architecture—from the magic of DLSS 3 and Frame Generation, to the incredible clock speed uptick the TSMC 4N process node delivers, and the sheer weight of all those extra transistors—it's entirely limited to those with unfeasibly large bank accounts.

And, at a time of global economic hardship, it's not a good look to only be making your new architecture available to the PC gaming 'elite'.

Nvidia will argue there is silicon enough in the current suite of RTX 30-series cards to cater to the lower classes, and that eventually, more affordable Ada GPUs will arrive to fill out the RTX 40-series stack in the new year. But that doesn't change the optics of this launch today, tomorrow, or in a couple of months' time.

Which makes me question exactly why it's happening. Why has Nvidia decided that now is a great time to change how it's traditionally launched a new GPU generation, and stuck PC gamers with nothing other than an out-of-reach card from its inception?

Do the Ada tricks not seem as potent further down the stack? Or is it purely because there are just so many RTX 3080-and-below cards still in circulation?

I have a so-far-unfounded fear that the RTX 4080, in either guise, won't have the same impact as the RTX 4090 does, which is why Nvidia chose not to lead with those cards. Looking at the Ada whitepaper (PDF warning), particularly the comparisons between the 16GB and 12GB RTX 4080 cards and their RTX 3080 Ti and RTX 3080 12GB forebears, it reads like the performance improvement in the vast majority of today's PC games could be rather unspectacular.

Both Ada GPUs have fewer CUDA cores, and far lower memory bandwidth numbers, compared with the previous gen cards. It looks like they're relying almost entirely on a huge clock speed bump to lift the TFLOPS count, and the magic of DLSS 3 to put some extra gloss on their non-RT benchmarks.

In a way it might seem churlish to talk about fears over the surrounding cards, their GPU makeup, and release order in a review of the RTX 4090. I ought to be talking about the silicon in front of me rather than where I'd want it to exist in an ideal world, because this is still a mighty impressive card from both a gen-on-gen point of view and from what the Ada architecture enables.

I said at the top of the review there's nothing subtle about the RTX 4090, but there is a level of finesse here to be applauded. The RT Core improvements take us ever further along the road to a diminished hit when enabling the shiny lighting tech, and the Tensor Cores give us the power to create whole game frames without rendering.

Seriously, DLSS with Frame Generation is stunning.

I'm sure there will be weird implementations as developers get to grips (or fail to get to grips) with enabling the mystical generative techniques in their games, where strange visual artefacts ruin the look of it and create memes of their own, but from what I've experienced so far it looks great. Better than 4K native, in fact.

And while the $1,600 price tag might well be high, it is worth noting that spending big on a new generation of graphics cards is probably best done early in its lifespan. I mean, spare a thought for the people who bought an RTX 3090 Ti in the past seven months. For $2,000. They're going to be feeling more than a little sick right now, looking at their overpriced, power-hungry GPU that is barely able to post half the gaming performance of this cheaper, newer card.

It feels somewhat akin to the suffering of Radeon VII owners once the RX 5700 XT came out. Only more costly.

Nvidia RTX 4090 verdict

Should you buy an RTX 4090?

The RTX 4090 is everything you would want from an ultra high-end graphics card. It makes the previous top card of the last generation look limp by comparison, brings unseen new technology to gamers, and almost justifies its cost by the sheer weight of silicon and brushed aluminium used in its construction.

It's the very epitome of that Titan class of GPU; all raw power and top-end pricing.

Which would be fine if it had launched on the back of a far more affordable introduction to the new Ada Lovelace architecture. But that's something we're not likely to see until the dawn of 2023 at the earliest. The 2022 Ada lineup starts at $899, and that too is prohibitively expensive for the majority of PC gamers.

There's no denying it is an ultra-niche ultra-enthusiast card, and that almost makes the RTX 4090 little more than a reference point for most of us PC gamers. We're then left counting the days until Ada descends to the pricing realm of us mere mortals.

In itself, however, the RTX 4090 is an excellent graphics card and will satisfy the performance cravings of every person who could ever countenance spending $1,600 on a new GPU. That's whether they're inconceivably well-heeled gamers, or content creators not willing to go all-in on a Quadro card. And it will deservedly sell, because there's no other GPU that can come near it right now.

The RTX 4090 may not be subtle but the finesse of DLSS 3 and Frame Generation, and the raw graphical grunt of a 2.7GHz GPU combine to make one hell of a gaming card.

Dave has been gaming since the days of Zaxxon and Lady Bug on the Colecovision, and code books for the Commodore Vic 20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.