Nvidia says its new AI tools are like a chip foundry for large language models

Jen-Hsun Huang found NeMo, and he's swapped his clown suit for a business one.


At Nvidia's GTC keynote today, CEO Jen-Hsun Huang announced that the company will soon be rolling out a collection of large language model (LLM) frameworks, known as Nvidia AI Foundations.

Jen-Hsun is so confident about the AI Foundations package that he's calling it a "TSMC for custom, large language models." Definitely not a comparison I was expecting to hear today, but I guess it fits alongside Huang's wistful comments about AI having had its "iPhone moment."

The Foundations package includes the Picasso and BioNeMo services that will serve the media and medical industries respectively, as well as NeMo: a framework aimed at businesses looking to integrate large language models into their workflows.

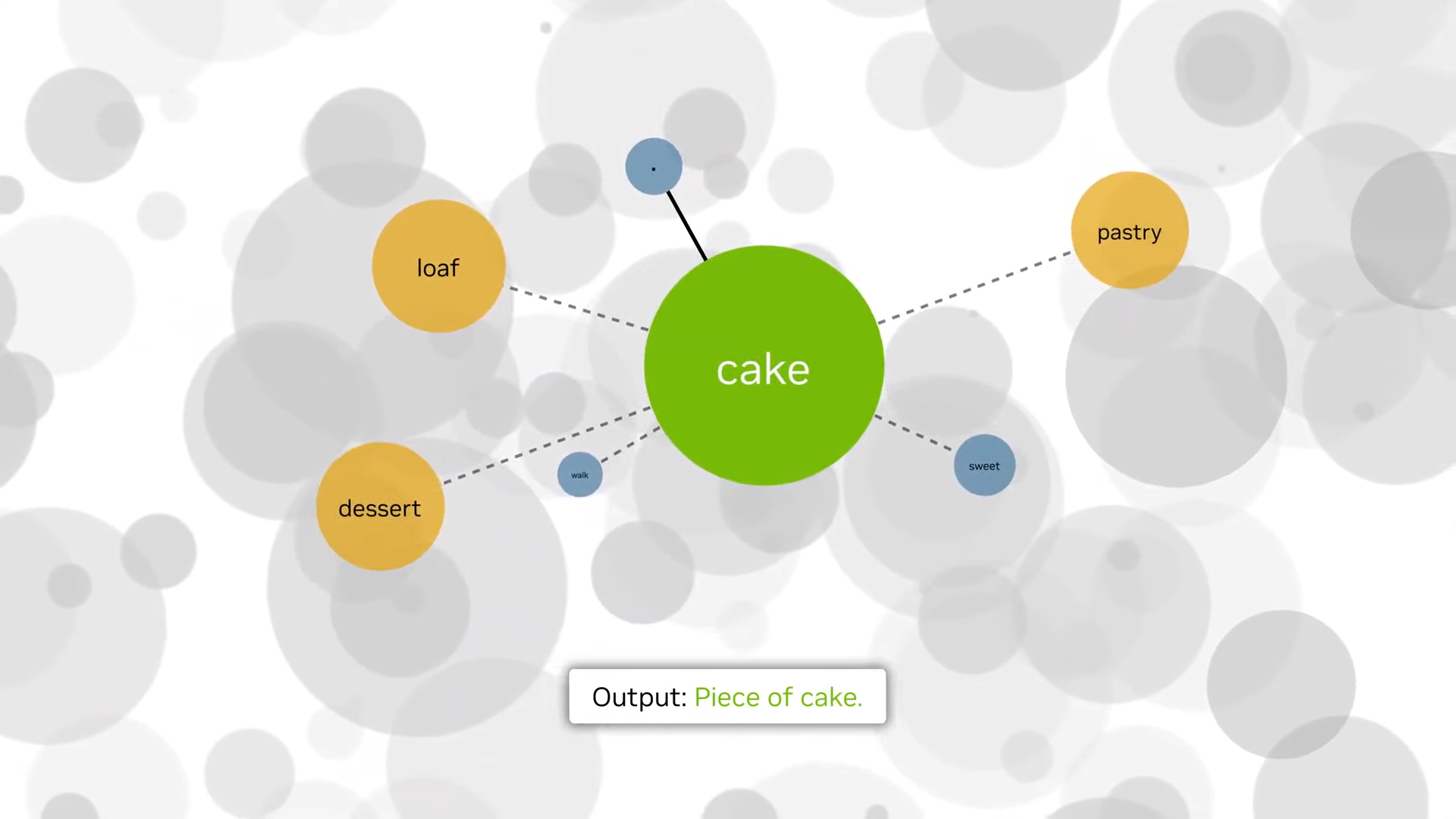

NeMo is "for building custom language, text-to-text generative models" that can inform what Nvidia calls "intelligent applications".

With a little something called P-Tuning, companies will be able to train their own custom language models to create more apt branded content, compose emails with personalised writing styles, and summarise financial documents so us humans don't have to waste away staring at numbers all day. That sounds like a nightmare to me.

Hopefully it'll take some weight off the everyman, and stop your boss shouting "BUNG IT IN THE CHATBOT THING," because that's supposedly faster.
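For the curious, the broad idea behind P-Tuning is that the big pretrained model stays frozen, and a small "prompt encoder" is trained to produce a handful of learned "virtual token" embeddings that sit alongside the real prompt. This is not NeMo's actual code, just a toy Python sketch under our own assumptions: the tiny sizes, the one-layer encoder, and every name in it (`PromptEncoder`, `build_model_input` and so on) are hypothetical, and real setups can slot virtual tokens into a template rather than only prepending them.

```python
import random

# Toy sizes chosen purely for illustration (assumptions, not NeMo's settings).
EMBED_DIM = 4
NUM_VIRTUAL_TOKENS = 3


def make_frozen_embeddings(vocab, dim, seed=0):
    """Stand-in for a pretrained model's embedding table; never updated during tuning."""
    rng = random.Random(seed)
    return {tok: [rng.uniform(-1.0, 1.0) for _ in range(dim)] for tok in vocab}


class PromptEncoder:
    """Hypothetical tiny prompt encoder: one linear layer followed by ReLU.

    In the variant sketched here, its seed vectors and weights are the only
    trainable parameters; the language model itself stays frozen.
    """

    def __init__(self, n_tokens, dim, seed=1):
        rng = random.Random(seed)
        self.seeds = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(n_tokens)]
        self.weights = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(dim)]

    def __call__(self):
        # Map each seed vector through the weight matrix, then apply ReLU,
        # giving one virtual-token embedding per seed.
        return [
            [max(0.0, sum(w * x for w, x in zip(row, s))) for row in self.weights]
            for s in self.seeds
        ]

    def trainable_parameter_count(self):
        return sum(len(s) for s in self.seeds) + sum(len(r) for r in self.weights)


def build_model_input(encoder, frozen_table, tokens):
    """Prepend the learned virtual tokens to the frozen embeddings of the real prompt."""
    virtual = encoder()
    real = [frozen_table[t] for t in tokens]
    return virtual + real


tokens = ["summarise", "this", "report"]
table = make_frozen_embeddings(tokens, EMBED_DIM)
encoder = PromptEncoder(NUM_VIRTUAL_TOKENS, EMBED_DIM)
sequence = build_model_input(encoder, table, tokens)

print(len(sequence))                        # 6: 3 virtual + 3 real tokens
print(encoder.trainable_parameter_count())  # 28: 3*4 seed values + 4*4 weights
```

The training loop is left out: in practice, gradients flow back through the frozen model but only update the prompt encoder, which is why this kind of tuning is so much cheaper than retraining a multi-billion-parameter model from scratch.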

NeMo's language models come in 8 billion, 43 billion, and 530 billion parameter versions, meaning there will be distinct tiers to pick from with vastly differing power levels.

For context, ChatGPT's original GPT-3 subsisted on 175 billion parameters, and although OpenAI isn't telling people how many parameters GPT-4 is working with at the moment, AX Semantics guesses around 1 trillion.

So no, it's not quite going to be a direct ChatGPT competitor, and it may not have the same depth of parameters, but as a framework for designing large language models it's set to change the face of every industry it touches. That's for certain.

Having been obsessed with game mechanics, computers and graphics for three decades, Katie took Game Art and Design up to Masters level at uni and has been writing about digital games, tabletop games and gaming technology for over five years since. She can be found facilitating board game design workshops and optimising everything in her path.