No amount of GPU refreshes is going to fix my disappointment in the current generation. And I say that as someone who actually bought an RTX 4070 Ti.

Only a complete pricing and model segmentation reset could do that.

This article was originally published on October 13th this year, and we are republishing it today as part of a series celebrating some of our favourite pieces of the past 12 months.

All of the main releases for AMD, Intel, and Nvidia's latest generation of GPUs are now out, except for maybe something at the very bottom of the performance ladder. That means the next round of new graphics cards we're likely to see will be refreshes of some kind. For Nvidia, that used to mean a Ti or a Super version, or in AMD's case, adding a 50 to the end of the name, like the RX 6950 XT.

It used to be something to look forward to. A nicely updated card, with more shaders and higher clocks, usually at the same price as the originating model (sometimes a bit more expensive, sometimes less). But with the current AMD RDNA 3, Intel Arc, and Nvidia Ada Lovelace cards, I really couldn't care less about what the trio of chip giants are planning to bring out.

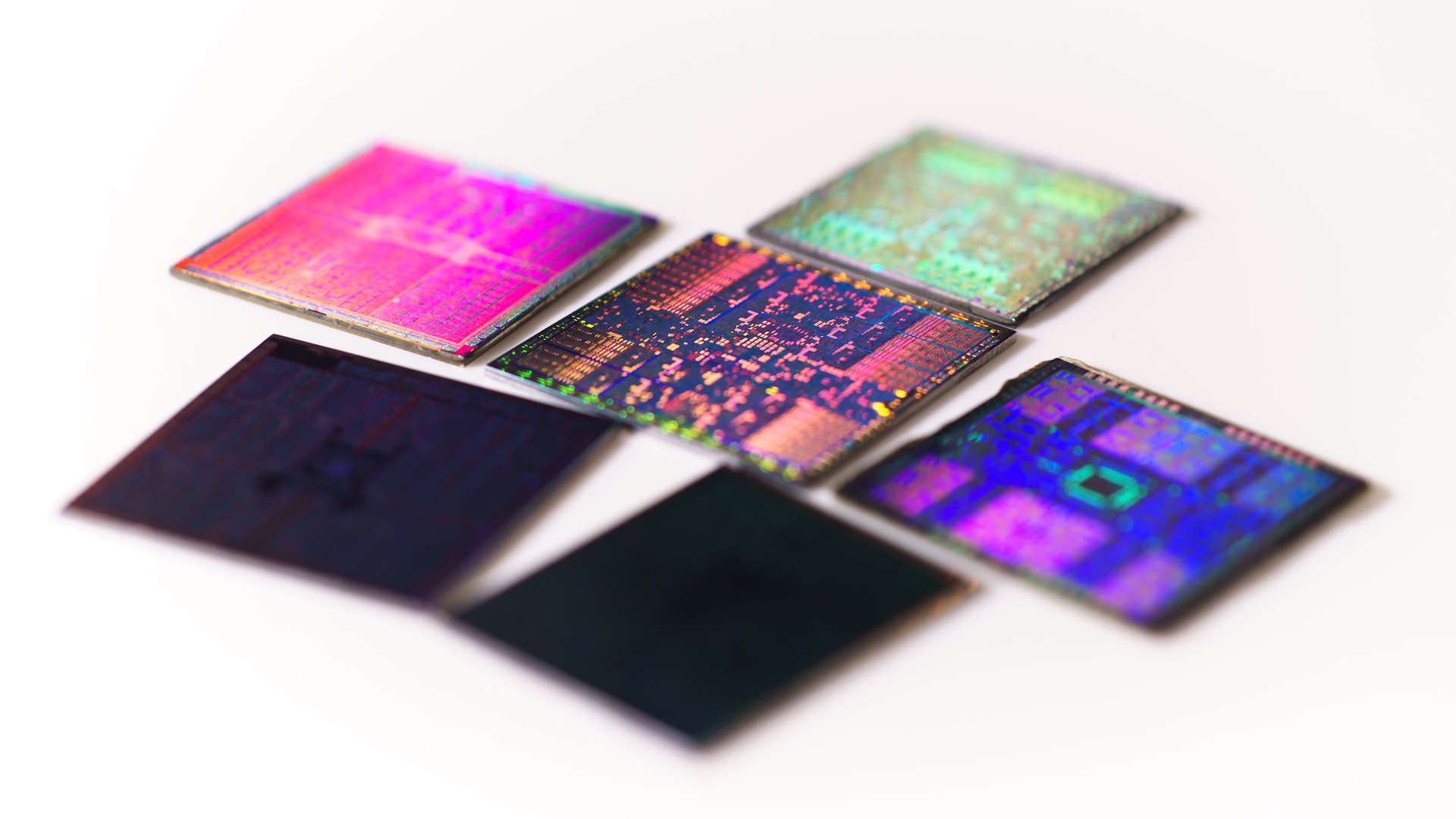

It's not about the technology that's in the chips, which is fantastic. Nvidia's RTX 40-series cards are rich in features, offer lots of performance, and are all very power efficient. The Radeon RX 7900 XTX and RX 7800 XT cards have shown that chiplets have a clear future in GPUs, as does stuffing huge amounts of cache in the GPU (lots of cache usually results in long latencies, but not in this case). Ray tracing, upscaling, and frame generation are all highly divisive topics at the moment, but in the right game with the right hardware, they're nothing short of magic.

We were chatting about this in the office earlier today and Jeremy summed it up perfectly: "If you could get an RTX 4070 for $399, wouldn't it be a fab GPU with a great architecture and feature set?" And this is the problem: you can't, and no fancy refresh is going to fix this.

No vendor is suddenly going to hack down prices, simply because senior managers are probably convinced their current market strategy is appropriate, given the healthy profit margins that the GPU industry enjoys. Or at the very least, they're dead set on following through on it because there isn't much in the way of competition. Sure, we have seen some prices fall over the last few months, to help shift more units and match what the competition was doing, but none of the price tags were particularly enticing in the first place.

For the RTX 40-series, Nvidia has essentially taken chips that would normally be used in a very specific segment and placed them into the next tier up. If you look at the Ampere cards, the GA102 was used in the RTX 3090 and RTX 3080, with the GA104 powering the RTX 3070 cards. The GA106 found homes in RTX 3060 models and the GA107 was right down at the bottom.

The RTX 4080 uses the AD103, the RTX 4070 has the AD104, and the RTX 4060 Ti uses the AD106. Now you can argue that this is just a nomenclature thing and that a 104 chip doesn't have to sit in any particular product class just because of its name. But when you look at the GA106, it isn't a tiny chip. It has 3,840 shader units whereas the AD106 houses 4,608 of them.


A better metric to use is the Streaming Multiprocessor (SM) count, as each SM contains the shader and texture units, as well as the Tensor cores and ray tracing units. The GA106 has 30 SMs, whereas the AD106 has just 6 more. That's not a big increase, and if it weren't for the fact that Ada Lovelace chips can be clocked so high, any card using the AD106 wouldn't automatically be massively faster than a previous-gen model.
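For anyone who wants to check the sums, here's a quick sketch in Python using only the shader and SM figures quoted above. The function and variable names are my own, purely for illustration, and the numbers are for the full chips rather than any specific card.

```python
def percent_increase(old: float, new: float) -> float:
    """Return how much larger `new` is than `old`, as a percentage."""
    return (new - old) * 100 / old


# Full-chip figures quoted in the article.
GA106_SHADERS = 3840
AD106_SHADERS = 4608
GA106_SMS = 30
AD106_SMS = GA106_SMS + 6  # "just 6 more"

# Shaders per SM works out the same for both chips.
ga106_shaders_per_sm = GA106_SHADERS // GA106_SMS
ad106_shaders_per_sm = AD106_SHADERS // AD106_SMS

# Generation-on-generation growth in SMs and in shaders.
sm_increase = percent_increase(GA106_SMS, AD106_SMS)
shader_increase = percent_increase(GA106_SHADERS, AD106_SHADERS)

if __name__ == "__main__":
    print(f"Shaders per SM: GA106 {ga106_shaders_per_sm}, AD106 {ad106_shaders_per_sm}")
    print(f"SM increase: {sm_increase:.0f}%")
    print(f"Shader increase: {shader_increase:.0f}%")
```

Both chips land on 128 shaders per SM, so the extra shaders come entirely from the six additional SMs: a 20% bump in unit count, with clock speed doing the rest of the work.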

AMD isn't guilt-free in this aspect, either. For all the technological prowess leveraged in the Navi 31, the first of the RDNA 3 chips to be launched, most of the performance advantage it has over the Navi 21 comes from the fact that it has 20% more Compute Units and higher clock speeds. The much-lauded Dual Issue feature of the shaders requires a lot of input from developers to fully utilise, which makes AMD's FP32 throughput claims a tad misleading.

I'm okay with technology not being as good as it's hyped up to be; that's just the nature of the marketing beast. I also get that manufacturing hulking big GPUs is very expensive these days and that cutting-edge process nodes are increasingly complex and costly to design around and use.

AMD and Nvidia both feel that the higher prices reflect the fact that their products offer a lot more than just higher frame rates, especially Nvidia. The development costs for FSR and DLSS need to be paid for somehow, so it's only fair that the GPUs should cover this.

But if you were in the market for a new car and the manufacturer suddenly said, "Well, here's our new version of your old favourite from last year. It's faster and safer, but it's now twice the price," would you buy it or switch to another company? I suspect most of us would do the latter.

Which we can't do with graphics cards. If you love PC gaming, you're stuck with AMD and Nvidia for the most part, and Intel if you're looking in a very narrow price range. Yes, it's always been a pretty expensive hobby, but for the most part, what you got for your money was well worth it. And sure, you don't need to spend thousands of dollars or pounds on a PC to have a great time.


I don't believe for one moment that PC gaming has a dire future, but I do believe that if AMD, Intel, and Nvidia really want our hard-earned money, then they need to offer something far more compelling than what they have of late. An RTX 4070 Super, using a cut-down AD103 chip, might sport more shaders and VRAM, but it'll be at least as expensive as the current RTX 4070 Ti. AMD's prices are more palatable, but another round of RX 7600s and the like isn't going to fill its coffers.

I say all of this as someone who coughed up for an RTX 4070 Ti to replace an RTX 2080 Super, because the old GPU just wasn't up to the task in content creation. Sure, it was a big leap in gaming performance, but so it should have been for a two-generation gap and a $100 higher price tag.

Had I been using something like an RTX 3080 or an RX 6900 XT, then I definitely wouldn't have made the purchase. It left a sour taste in my mouth to hand over the money because I felt that I was forking out more than I really should have been.

Forget the refreshes, the Supers, the +50 models. I don't want any of them. I want to see a top-to-bottom pricing and model segmentation restructuring so that nobody experiences the same feelings that I did with my purchase. Ultimately, it's about value for money, which just seems to be missing from the current generation of GPUs. This doesn't mean everything has to be cheap; it simply means that you're paying what the product is actually worth.

Make it happen, AMD, Intel, and Nvidia. Make it happen.

Nick, gaming, and computers all first met in 1981, with the love affair starting on a Sinclair ZX81 in kit form and a book on ZX Basic. He ended up becoming a physics and IT teacher, but by the late 1990s decided it was time to cut his teeth writing for a long-defunct UK tech site. He went on to do the same at MadOnion, helping to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its gaming and hardware section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but missed the writing bug. Cue four years at TechSpot.com and over 100 long articles on anything and everything. He freely admits to being far too obsessed with GPUs and open-world grindy RPGs, but who isn't these days?