Intel's commitment to building GPUs is 'pretty self evident' as Arc lands in Meteor Lake

Tom Petersen reiterates Intel's interest in graphics is unwavering.

Intel has been a genuine player in gaming graphics cards for around a year now, more or less since the release of the Arc A770 and Arc A750. Whether Intel will stick around in the discrete graphics card game has come into question many times since, to the almost certain frustration of those working closely on the products. Intel's Tom Petersen even told me "we're not going anywhere" in 2022, before the launch of Intel's first big gaming graphics cards.

But you can see why the topic keeps coming up. Intel has been intensely cost-cutting in recent years, as CEO Pat Gelsinger streamlines or sells off parts of the business, and previous graphics ventures have been ditched pretty swiftly. On top of that, Intel's current lineup of budget graphics cards isn't yet a viable third option anywhere but the bottom end of the market.

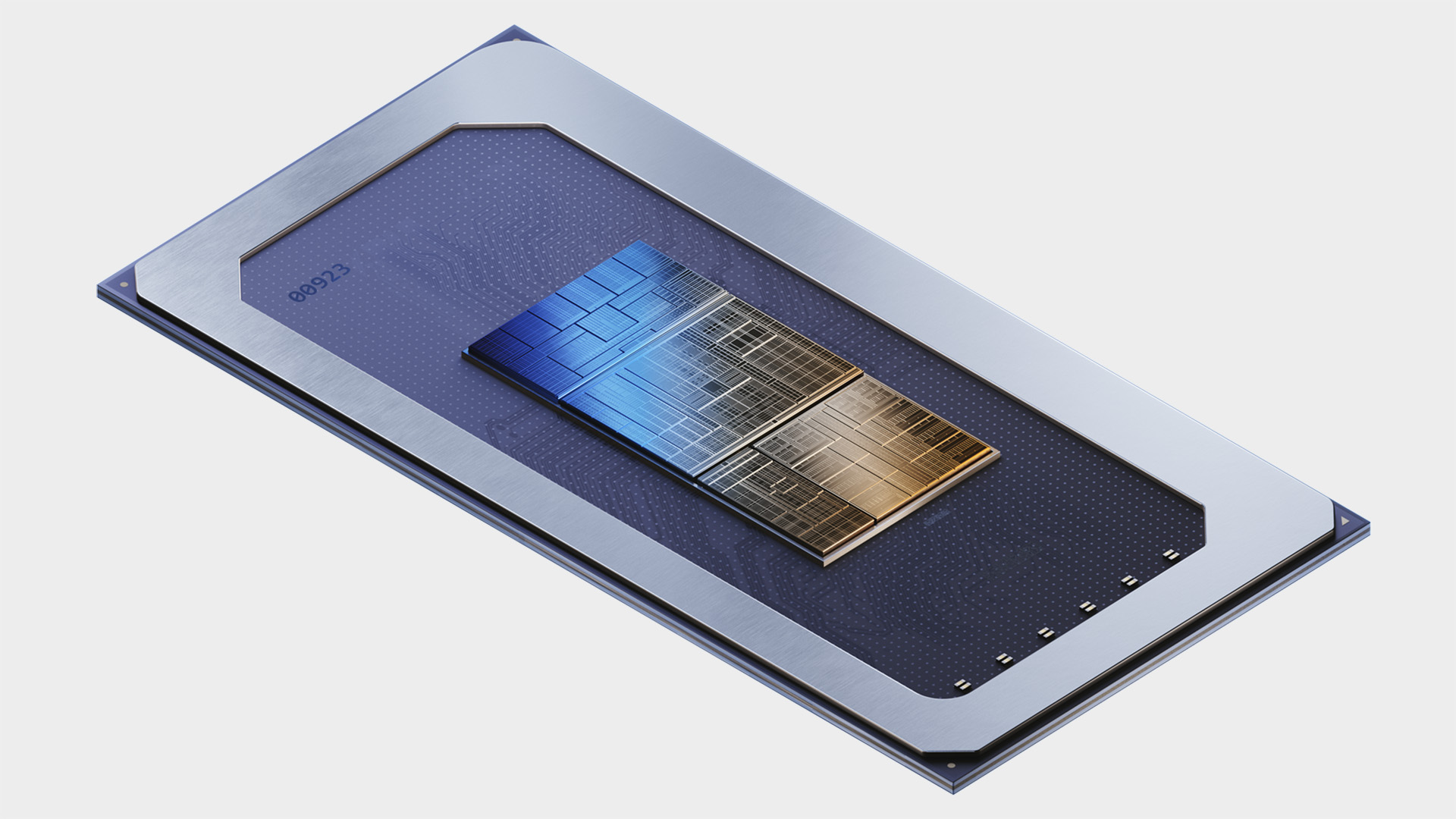

Well, it's 2023—Intel's gearing up to launch its new mobile chips, Meteor Lake, which feature an Arc graphics tile, and I had a chance to catch up with Intel's graphics guru Tom Petersen again at the briefing event. So, let's check in: Is Intel still as committed to its gaming graphics as it was one year ago?

"Is Intel committed after billions and billions of dollars of investment, and over five years of development, and now bringing that to Meteor Lake? I mean, I think it's pretty self evident," Petersen says.

He's smiling when he says that, and not actually sharpening a knife under the table to do me in for asking the same question twice in as many years. I made it out of the event alive, at the very least.

"I think the momentum is shifting where we're going from no success to okay, you can kind of see the drivers getting better and the adoption is improving. Games are getting better."

It's true that Intel's graphics drivers have improved mightily in the past twelve months. It's also true that Intel hit a speed bump in Starfield, a game optimised for AMD GPUs that quite literally knocked the eyebrows off Intel's cards.

"If you think about what we've done over the last nine months since launch, in Q1, we improve performance by about 40% on DX9, we just launched a new driver that improves the DX11 performance by about 20%. All of that learning moves all the way down the stack.

"But we are not done," Petersen continues. "There's a ton of stuff to do. I'm not going to call it any specific block of our driver, but there are several and they know who I'm pointing at. They can't see me, but I'm pointing at them. And there's stuff to be done."

Curious to find out what Intel's learned since the release of the first generation Alchemist graphics cards, I asked Petersen how future GPU generations are shaping up with what they now know after Alchemist's launch. One interesting detail from that chat is how Intel learned the hard way that lack of native FP64 acceleration on Arc Alchemist cards would come back to bite it in the ass, and it wouldn't make that mistake again.

"A native implementation of FP64 on Arc has turned out to be a nuisance because that shows up some places [game engines] and there's stuff we have to do to make that work," Petersen says. "So as we get more mature on Arc, and we are gaming first, we know what those are.

"And I think you'll see that happen on Battlemage and future GPUs."

Inasmuch as any part of a big company is safe from sudden cost-cutting measures, Petersen still sounds as sure as ever of Intel's ability to deliver in graphics. While it might appear to some like Intel's chasing a rainbow it'll never catch, you have to remember there is a very real and very large pot of gold at the end of it: GPU acceleration for AI has become an extremely lucrative business to be in. While Petersen tells me Intel remains gaming first, there's no denying the rewards are mighty for Intel if it can ride out the bumps.

Jacob earned his first byline writing for his own tech blog. From there, he graduated to professionally breaking things as hardware writer at PCGamesN, and would go on to run the team as hardware editor. He joined PC Gamer's top staff as senior hardware editor before becoming managing editor of the hardware team, and you'll now find him reporting on the latest developments in the technology and gaming industries and testing the newest PC components.