Intel Battlemage: What can we expect to see with the second generation of Arc GPUs?

Picking through the rumours and leaks to get the best view of what Intel has planned for its graphics cards.

- Expected release date: H2 2024

- Codename: Battlemage

- Architecture: Xe2-HPG

- Process: TSMC N4

- Performance target: Nvidia RTX 4070 (claimed)

Just two years ago, we were all getting ready for Intel's return to the desktop graphics card market, eagerly waiting to see if the Arc series of products would be a match for AMD's Radeon and Nvidia's GeForce models. It had been an astonishing 24 years since the company had last released a desktop card, the ill-fated i740, and although we knew everything about the architecture of the new GPUs, codenamed Alchemist, it was the real-life performance and prices that were of most interest.

And the very first Arc cards to pass by our doors, the 16GB A770 and 8GB A750, turned out to be…well…okay. In some games, they were pretty fast but in older DirectX 9-era games, they were far behind the competition. That was mostly down to drivers and Intel has worked on improving them ever since. On paper, the Alchemist chip should have been excellent but it was merely alright, average even, so naturally we started to think about what its successor would be like.

Intel had already named its future designs (Battlemage and Celestial) right from the start, but now it's like we're back in 2022 again, waiting to see how well the forthcoming Arc cards will fare. Except this time, Intel hasn't said anything about Battlemage yet and we've been left to sift through the rumours and guesses that precede every new GPU release these days.

So let's collate everything we have and piece together an overview of what we really want to see in Battlemage, and then scale it back a bit into something resembling what we think we'll actually get.

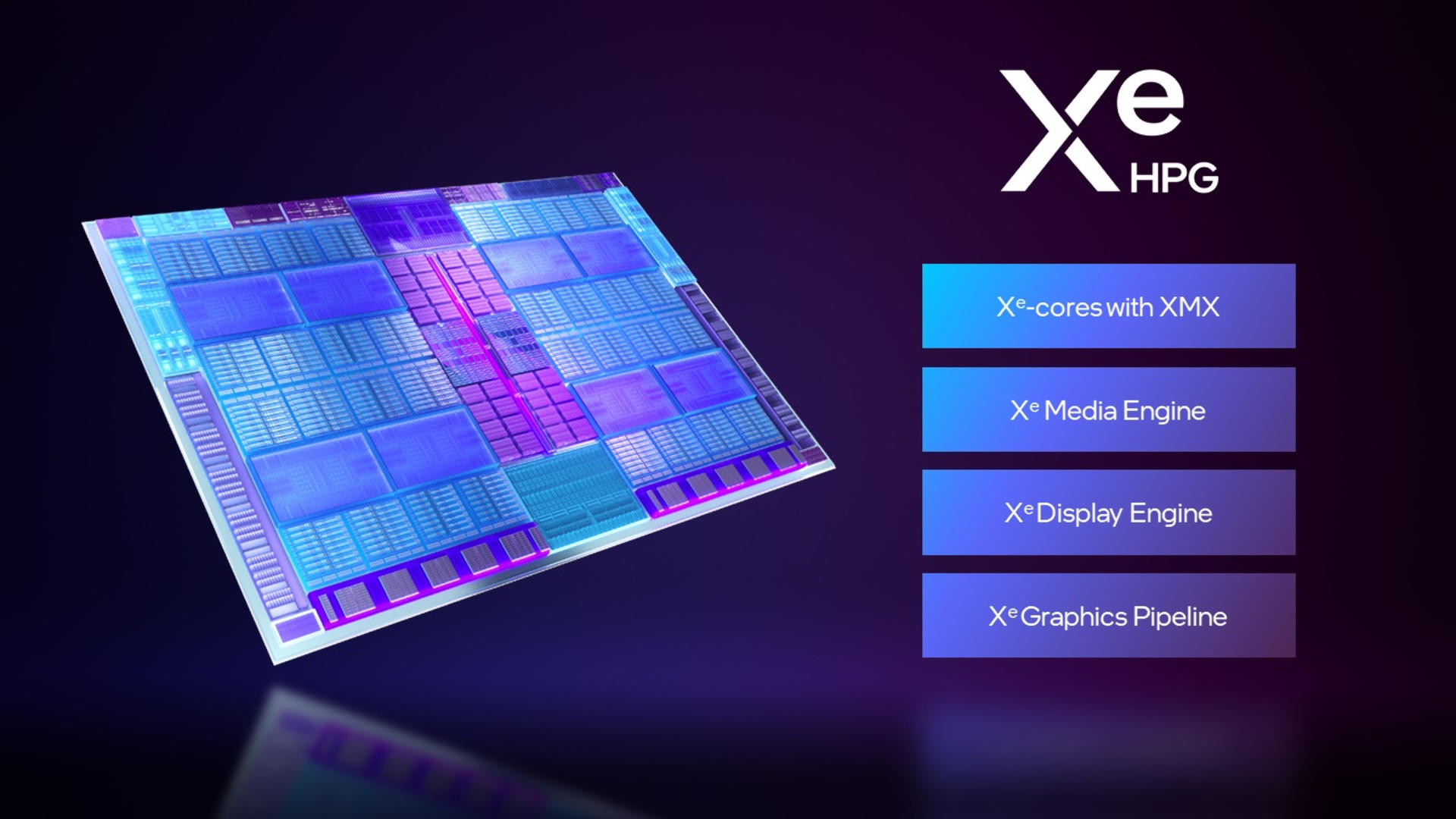

What architectural changes can we expect for Battlemage?

GPUs, no matter which vendor makes them, are all very similar when viewed from a broad perspective. AMD, Intel, and Nvidia use different terms and phrases, though, so it's worth clarifying what each vendor is referring to before I start delving into the architecture of Battlemage.

At the highest level, graphics processors are made of repeated blocks, each of which is essentially a mini-GPU by itself. Inside each block is everything required to work through the necessary instructions and numbers to produce coloured pixels.

AMD calls it a Shader Engine (SE), whereas Nvidia labels it as a Graphics Processing Cluster (GPC). Intel, on the other hand, uses the term Render Slice. The number of these within a GPU hugely affects the performance of the whole chip. For example, the Radeon RX 7900 XTX has six Shader Engines whereas the RX 7600 has just two.

| AMD | Intel | Nvidia |
|---|---|---|
| Shader Engine (SE) | Render Slice (RS) | Graphics Processing Cluster (GPC) |
| Workgroup Processor (WGP) | n/a | Texture Processing Cluster (TPC) |
| Compute Unit (CU) | Xe-core | Streaming Multiprocessor (SM) |
| CU SIMD | Vector Engine (XVE) | SM Partition |
| Stream Processor (SP) | n/a | CUDA Core |

Peek inside an SE or GPC and you'll see that it's divided into further sections, all identical but repeated many times. The divisions continue until you're right down to the lowest view of a GPU, where you'll be staring at banks of logic units, the circuits that do all the number crunching. We generally call these shaders.

For the Alchemist architecture, Intel groups eight shaders together to form what it calls a Vector Engine (XVE). AMD's equivalent in RDNA 3 is 64 shaders in a Compute Unit SIMD, and Nvidia bundles 32 together to form an SM partition.

This is where it's thought that Battlemage will display the first changes, as the new XVEs are reportedly going to have 16 shaders apiece. In other words, the Vector Engines will be twice as wide, working on 16 threads at a time. While this seems to be a lot lower than what AMD and Nvidia do in their GPUs, it's not necessarily a bad thing.

However, I'm at risk of going off into full-on nerd territory now, so at this point, let's just say that the above changes will just help improve the overall efficiency of the GPU. In theory, at least. In practice, it will also depend on whether the thread scheduling and dispatch units have been improved, as well as the number of load/store units, internal bandwidth, and…uh oh. Sorry.

All of the current Arc GPUs have 16 XVEs together in a block called an Xe-core, but if the Vector Engines are going to double in size in Battlemage, you might be forgiven for thinking that the chip will also double in size. That won't be the case and you only have to compare AMD's Radeon RX 7600 chip to the RDNA 2-powered RX 6600 XT to see this.

These two cards have almost identical GPUs, with the same number of Compute Units, but the newer card has 64 shaders per SIMD to the other's 32. And yet, the RX 7600's chip only has 20% more transistors than the older one.

However, doubling the number of shaders per XVE won't make Battlemage twice as fast, and AMD's Radeon cards are evidence of that, too. The RX 7600 can carry out up to 21.75TFLOPS (trillions of floating point operations per second), double the RX 6600 XT's 10.6TFLOPS, but it's certainly not twice as fast in games.
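Those headline TFLOPS figures are simple arithmetic rather than measurements. As a rough sketch, peak FP32 throughput is shaders × operations per clock × clock speed, with a fused multiply-add counted as two operations. The shader counts and boost clocks below are assumptions taken from public spec sheets rather than from this article, and `peak_tflops` is just an illustrative helper name.

```python
def peak_tflops(shaders: int, boost_ghz: float, ops_per_clock: int = 2) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    ops_per_clock is 2 for one fused multiply-add per shader per clock.
    """
    return shaders * ops_per_clock * boost_ghz / 1000.0


# Assumed figures (public spec sheets, not from the article):
# both cards are listed with 2,048 shaders. RDNA 3's doubled-up SIMDs
# are modelled here as 4 operations per clock instead of 2.
rx_6600_xt = peak_tflops(2048, 2.589)
rx_7600 = peak_tflops(2048, 2.655, ops_per_clock=4)

print(f"RX 6600 XT: {rx_6600_xt:.2f} TFLOPS")
print(f"RX 7600:    {rx_7600:.2f} TFLOPS")
print(f"Ratio:      {rx_7600 / rx_6600_xt:.2f}x")
```

Under those assumptions the sketch lands on the 10.6 and 21.75 figures quoted above. The doubling comes entirely from the operations-per-clock term, which is why it says so little about real frame rates.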

What we will almost certainly see is Battlemage GPUs sporting more Xe-cores than in Alchemist, as this is vital to improving outright performance. I do not doubt that Intel will also improve the ray tracing units and matrix cores (akin to Nvidia's Tensor cores), too, but I've not seen anything specific about those.

However, since each Xe-core currently houses one RT unit, more of the former means more of the latter. Could Nvidia meet its match in the world of ray tracing? Possibly.

Summary: The number of shaders per Vector Engine is rumoured to double and the Xe-core count is likely to be a lot higher.

What process node will Intel Battlemage use?

Intel doesn't manufacture the GPUs for its Arc cards, despite having semiconductor foundries of its own. Instead, like AMD and Nvidia, it employs the services of TSMC and its N6 process node to churn out Alchemist GPUs. TSMC will remain Intel's foundry of choice for Battlemage but the really important thing is what production line it will use.

In rumour-land, the consensus is that Intel will use a variant of N4, the high-performance line in TSMC's 5nm portfolio. As Nvidia's RTX 40-series proves, with the right engineering skills, it can be used to produce chips that are small, very power efficient, and still house tens of billions of transistors, all switching at very high clock rates.

There isn't a huge difference between TSMC's N5 and N4 nodes when it comes to how many transistors can be packed into a given space, but the latter does offer 11% more performance and it's notably better than N6, in all aspects.

The choice of process node also affects what clock speeds the chips can reach, though it mostly determines chip size, transistor count, and power consumption. It's been suggested that Intel is targeting 3GHz for Battlemage, but given that neither AMD nor Nvidia sells GPUs with such clocks, I suspect it will be closer to 2.6 to 2.8GHz, depending on the chip's size.

Something else that smaller process nodes help with is the amount of cache that can be jammed inside the GPU, particularly what's classed as last-level cache. This ultra-fast memory is responsible for temporarily storing small chunks of data, to help reduce the number of times the GPU has to access the far slower VRAM.

Both AMD and Nvidia have very large last-level caches in their GPUs, with even the smallest models sporting 24MB or more. The biggest Alchemist chip houses 16MB of L2 cache and while it is not a trivial task to just add in more and reap the benefits, Intel will certainly be looking to increase the cache size.

One rumour has pointed to Intel putting as much as 112MB of last-level cache into its next-gen GPUs but nobody else has confirmed this, and I suspect it's more likely to be a sensible bump of around three times that in Alchemist.

Summary: Intel will probably use one of TSMC's N4 process nodes for Battlemage, to achieve the necessary performance increases.

What specs will Battlemage graphics cards have?

GPU architectures are fascinating things but ultimately it's the overall graphics card that's more important. Here's how the current Alchemist-powered Arc series all compare:

| Spec | A310 | A380 | A580 | A750 | A770 |
|---|---|---|---|---|---|
| Xe-cores / XVE units | 6 / 96 | 8 / 128 | 24 / 384 | 28 / 448 | 32 / 512 |
| Shaders | 768 | 1,024 | 3,072 | 3,584 | 4,096 |
| Boost clock | 1.75GHz | 2.05GHz | 2.00GHz | 2.40GHz | 2.40GHz |
| L2 cache | 4MB | 4MB | 8MB | 16MB | 16MB |
| Memory bus / VRAM | 64-bit / 4GB | 96-bit / 6GB | 256-bit / 8GB | 256-bit / 8GB | 256-bit / 16GB |
| Power | 30W | 75W | 175W | 225W | 225W |
| Launch MSRP | $110 | $149 | $179 | $289 | $329 |
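The XVE and shader rows in the table above follow directly from the hierarchy described earlier: 16 Vector Engines per Xe-core and eight shaders per Vector Engine. Here's a minimal sketch of that arithmetic; the constant and function names are my own, purely for illustration.

```python
XVES_PER_XE_CORE = 16  # Alchemist: 16 Vector Engines per Xe-core
LANES_PER_XVE = 8      # Alchemist: eight shaders per Vector Engine

# Xe-core counts taken from the table above
xe_cores = {"A310": 6, "A380": 8, "A580": 24, "A750": 28, "A770": 32}


def shader_count(cores: int,
                 xves: int = XVES_PER_XE_CORE,
                 lanes: int = LANES_PER_XVE) -> int:
    """Total shaders = Xe-cores x XVEs per Xe-core x shaders per XVE."""
    return cores * xves * lanes


for card, cores in xe_cores.items():
    xve_total = cores * XVES_PER_XE_CORE
    print(f"{card}: {xve_total} XVEs, {shader_count(cores):,} shaders")
```

Passing `lanes=16` models the rumoured Battlemage doubling: the same 32 Xe-cores as the A770 would then hold 8,192 shaders.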

The A770, A750, and A580 all use the same ACM-G10 die, with the A380 and A310 both sporting the much smaller ACM-G11 chip. That only has 7.2 billion transistors (AMD's Navi 33 in the RX 7600 has 13.3 billion) so it's really a laptop chip, slapped onto a standard graphics card PCB. The G10 is a lot bigger, at 21.7 billion, but that's still less than you'd find in the RTX 4070's GPU, which boasts 35.8 billion.

If Intel wants to properly compete against AMD and Nvidia, especially in the all-important mainstream sector, Battlemage GPUs will have to pack in a lot more nanoscale switches, without making the whole chip too large and power hungry.

Before I don my cap of foresight and gaze into a GPU-shaped crystal ball, let's consider what naming scheme Intel might use for the next generation of graphics cards. They'll still be called Arc but what about the individual SKU titles? If the A in A770, for example, stands for Alchemist, then you'd expect the Battlemage equivalent to be B770. However, using the letter B, compared to A, generally evokes the impression of a lower tier.

That suggests Intel might follow the same nomenclature as AMD and Nvidia, e.g. stick 1 in front of all the above card names so that the top model is a 1770. That makes it far easier to name successive generations of graphics cards but as things currently stand, nobody knows what name the next Arc cards will use.

But just for the sake of being a little different, let's go with A1xxx and turn our thoughts to what specifications these cards will have. Here are the Battlemage cards we want to see:

| Spec | A1350 | A1550 | A1750 | A1770 |
|---|---|---|---|---|
| Xe-cores / XVE units | 16 / 256 | 24 / 384 | 32 / 512 | 48 / 768 |
| Shaders | 4,096 | 6,144 | 8,192 | 12,288 |
| Boost clock | 2.50GHz | 2.75GHz | 2.70GHz | 2.60GHz |
| L2 cache | 16MB | 32MB | 32MB | 48MB |
| Memory bus / VRAM | 128-bit / 8GB | 256-bit | 256-bit / 8GB | 256-bit / 16GB |
| Power | 100W | 180W | 220W | 275W |
| Launch MSRP | $249 | $349 | $449 | $549 |

The largest Battlemage chip has been claimed to have as many as 64 Xe-cores, but if the shader doubling claim is correct, it's far more likely to be a lot lower than that. It's also being rumoured that Intel isn't targeting RTX 4080-level performance but aiming for the popular mainstream segment.

You might be looking at the above figures and thinking that I'm just pulling them out of thin air, but I'm partly basing them on what Battlemage needs to be like if it's going to successfully challenge AMD and Nvidia. An 'A1770' is going to be up against the $549 RTX 4070, which has 5,888 shaders, a boost clock of 2.475GHz, 36MB of cache, and 12GB of VRAM.

Don't read too much into the shader count, though. Alchemist has lots of them already but isn't able to utilise them fully, and there's a good chance that Battlemage will be similar. The current A770 should be as fast as a Radeon RX 7700, but more often than not, it trails behind the older RX 6600. So Intel needs its next Arc cards to have extra oomph, to make up for any inherent design limitations.
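To put that match-up on paper, here's a rough sketch comparing theoretical peak FP32 throughput, assuming the usual shaders × 2 × clock formula (one fused multiply-add per shader per clock). The 'A1770' numbers are my speculative wish-list specs, not anything Intel has announced, and `peak_tflops` is an illustrative helper name.

```python
def peak_tflops(shaders: int, boost_ghz: float) -> float:
    """Theoretical peak FP32 TFLOPS, counting one FMA as two operations."""
    return shaders * 2 * boost_ghz / 1000.0


# Shader counts and boost clocks as quoted in this article
a770 = peak_tflops(4096, 2.40)          # current Alchemist flagship
rtx_4070 = peak_tflops(5888, 2.475)     # Nvidia's $549 card
wish_a1770 = peak_tflops(12288, 2.60)   # speculative wish-list card

for name, value in [("A770", a770),
                    ("RTX 4070", rtx_4070),
                    ("'A1770' (speculative)", wish_a1770)]:
    print(f"{name}: {value:.1f} TFLOPS peak")
```

On paper the speculative card has more than double the RTX 4070's peak figure, but these are theoretical ceilings only and say nothing about how well the shaders are actually kept busy.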

You'll notice that I'm predicting Intel significantly increases the prices of its graphics cards, but those figures correlate with the expected GPU architecture changes. If those aren't as pronounced, or the final performance just isn't commensurate, then you can expect the price tag to be lower.

As Intel Fellow and GPU guru Tom Petersen told us in 2023, "as Intel becomes more competitive and more successful, will our prices begin to rise? I mean, I want to become profitable, but it's really up to the market to determine what our value is."

Summary: Battlemage Arc cards will probably have significantly more cores and cache, higher clock speeds, and be more expensive than Alchemist models.

When can we expect to see Intel Battlemage cards for sale?

At the moment, Battlemage is expected to appear at some point in 2024, although exactly when isn't clear. Earlier leaks pointed to the first half of the year, but the roadmap behind them was already quite old and suggested that Intel was going to release an Alchemist refresh series of GPUs, which never transpired.

Given that Intel has said that Battlemage is up and running in its labs and that engineers are now working more on the software side of things, it's clearly not ready to hit the shelves. That makes H2 (i.e. July onward) the most likely release window and I suspect it will be closer to Q4 than Q3.

We'll know for sure once the rumour mill picks up speed. Once we're at the stage where you're seeing at least one Battlemage leak per week, you'll know that the final launch is only a couple of months away, at most.

Summary: 2nd generation Arc cards are most likely to be available H2 2024.

Nick, gaming, and computers all first met in 1981, with the love affair starting on a Sinclair ZX81 in kit form and a book on ZX Basic. He ended up becoming a physics and IT teacher, but by the late 1990s decided it was time to cut his teeth writing for a long-defunct UK tech site. He went on to do the same at MadOnion, helping to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its gaming and hardware section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but missed the writing bug. Cue four years at TechSpot.com and over 100 long articles on anything and everything. He freely admits to being far too obsessed with GPUs and open world grindy RPGs, but who isn't these days?