Stutters and hitches in Unreal Engine 5 games should become rarer over time due to Epic's continuous updates, but we'll still see them for a while because of the way games are made

Yes, I know I sound like a stuck record here, but it's really not the engine that's at fault.

At this year's Unreal Fest in Orlando, not only did Epic showcase forthcoming features for Unreal Engine 5 via CD Projekt Red's Witcher 4 tech demo, but it also held numerous training sessions for developers on how best to use its software. Two of the most popular were those concerning shader compilation stutters and the main causes of performance hitches in UE games. However, a session led by a renowned game developer showed that optimisation problems are less about the engine being used and more about how games are made.

The event was packed with many such presentations, and although I only had the chance to sit through a small portion of them, I came away with a better understanding of why so many Unreal Engine games seem to struggle with performance problems such as shader compilation or traversal stutters.

If one is to believe the countless remarks on social media, the problem is Unreal Engine itself, and there is an element of truth in such comments. Today's big-budget, big-graphics games use tens of thousands of shaders, many of which are extremely complex, and GPUs can't process them until they've been compiled.

Epic wrote a blog on this matter earlier this year, pointing out that the method for compiling shaders (or rather pipeline state objects, if I'm to use the correct terms here) in older versions of UE wasn't ideal. For Unreal Engine 5.2 or newer, the recommended method is to use PSO precaching, which can be used in conjunction with the old method.
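
To make the difference concrete, here's a toy sketch in Python. It isn't Unreal Engine code: `PipelineCache`, `precache`, `draw`, and the material names are all hypothetical, and a real engine compiles PSOs asynchronously through the graphics driver. It only shows why compiling on first use lands the cost in the middle of a frame, while precaching moves it to load time, and why anything the precache list misses can still hitch.

```python
class PipelineCache:
    """Toy stand-in for a PSO cache; not Unreal Engine's API."""

    def __init__(self):
        self._compiled = set()
        self.hitches = 0  # compiles that happened in the middle of a frame

    def precache(self, pso_keys):
        """Compile ahead of time, e.g. behind a loading screen."""
        self._compiled.update(pso_keys)

    def draw(self, pso_key):
        """Called mid-frame: a cache miss forces a compile and stalls the frame."""
        if pso_key not in self._compiled:
            self.hitches += 1
            self._compiled.add(pso_key)


def play(cache, frames):
    """Draw every PSO needed by every frame and return the hitch count."""
    for frame in frames:
        for pso_key in frame:
            cache.draw(pso_key)
    return cache.hitches


# Hypothetical materials needed over three frames of gameplay.
FRAMES = [["rock", "grass"], ["rock", "water"], ["grass", "fire"]]

on_demand = play(PipelineCache(), FRAMES)

warmed = PipelineCache()
warmed.precache(["rock", "grass", "water"])  # "fire" was never gathered
with_precache = play(warmed, FRAMES)

print(on_demand, with_precache)
```

In this sketch the on-demand run stalls once for each new material, whereas the precached run only stalls on the one that was left off the list.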

Neither solution is 100% perfect, though Epic is still working on making them better, and given the progress made, I'm reasonably confident that we'll see a mechanism that nixes shader stutter eventually. I'd actually go as far as to say that if a game developer followed all of Epic's advice on performance optimisation and what to do to get rid of stutters and hitches, we'd be seeing AAA games running smooth as silk right now.

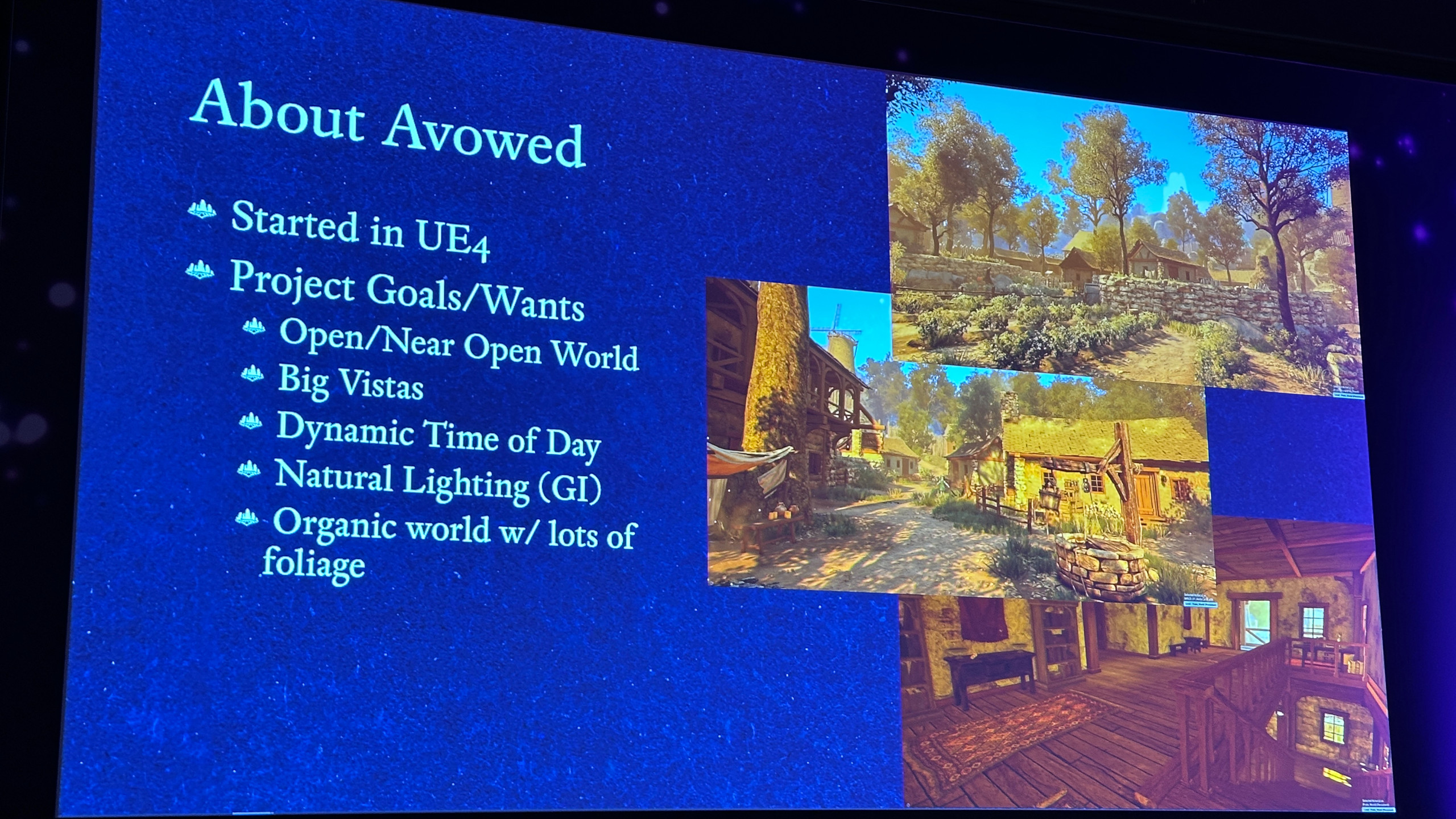

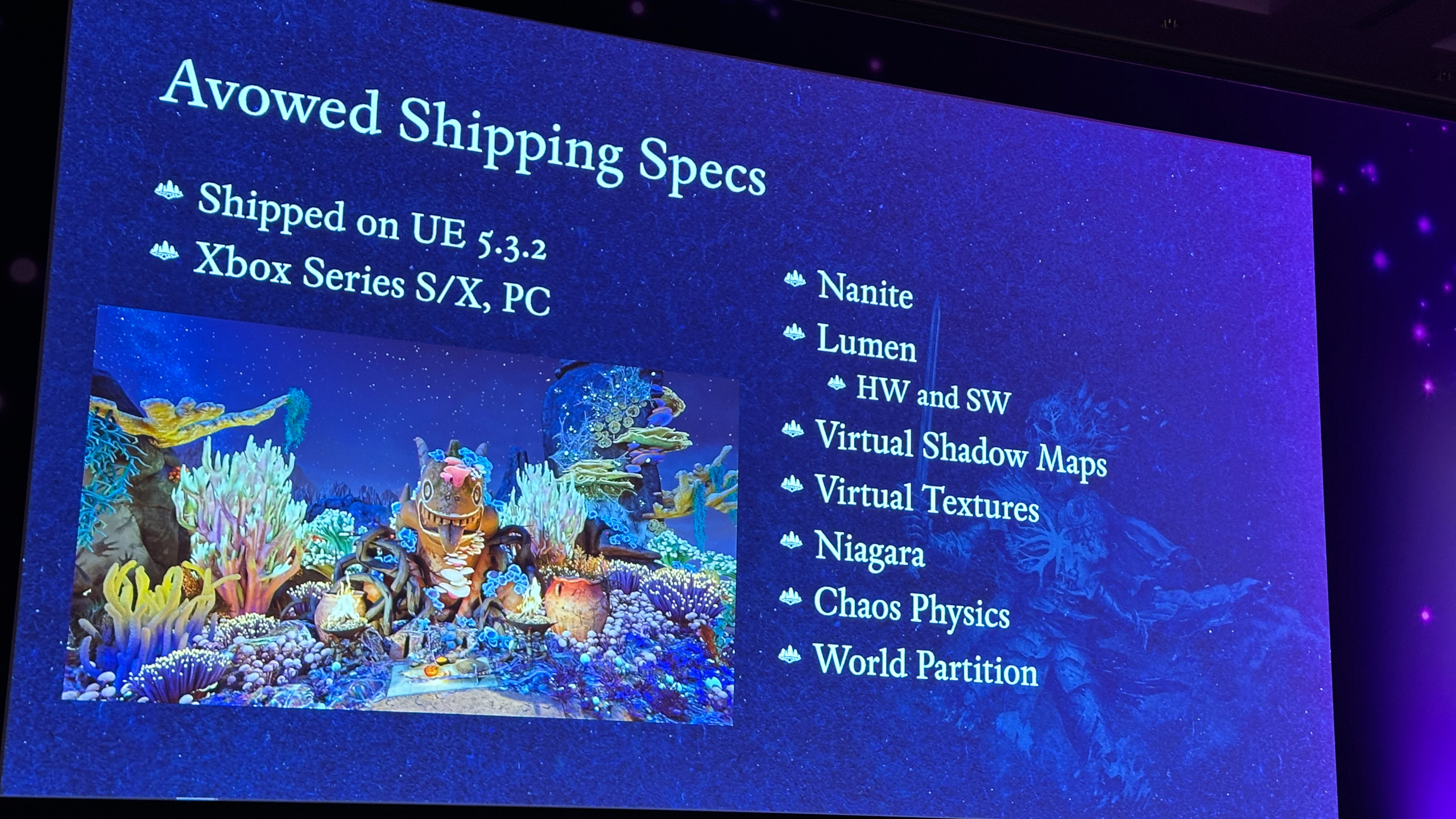

However, after sitting through Obsidian's presentation on how it made the graphics for Avowed, it's clear to me that shader stutters and the like are still going to be around for a while because of the way that many big-budget games are made. Obsidian started making Avowed in Unreal Engine 4, before switching to Unreal Engine 5 during the game's development, and shipping the final game with UE 5.3.2 back in February.

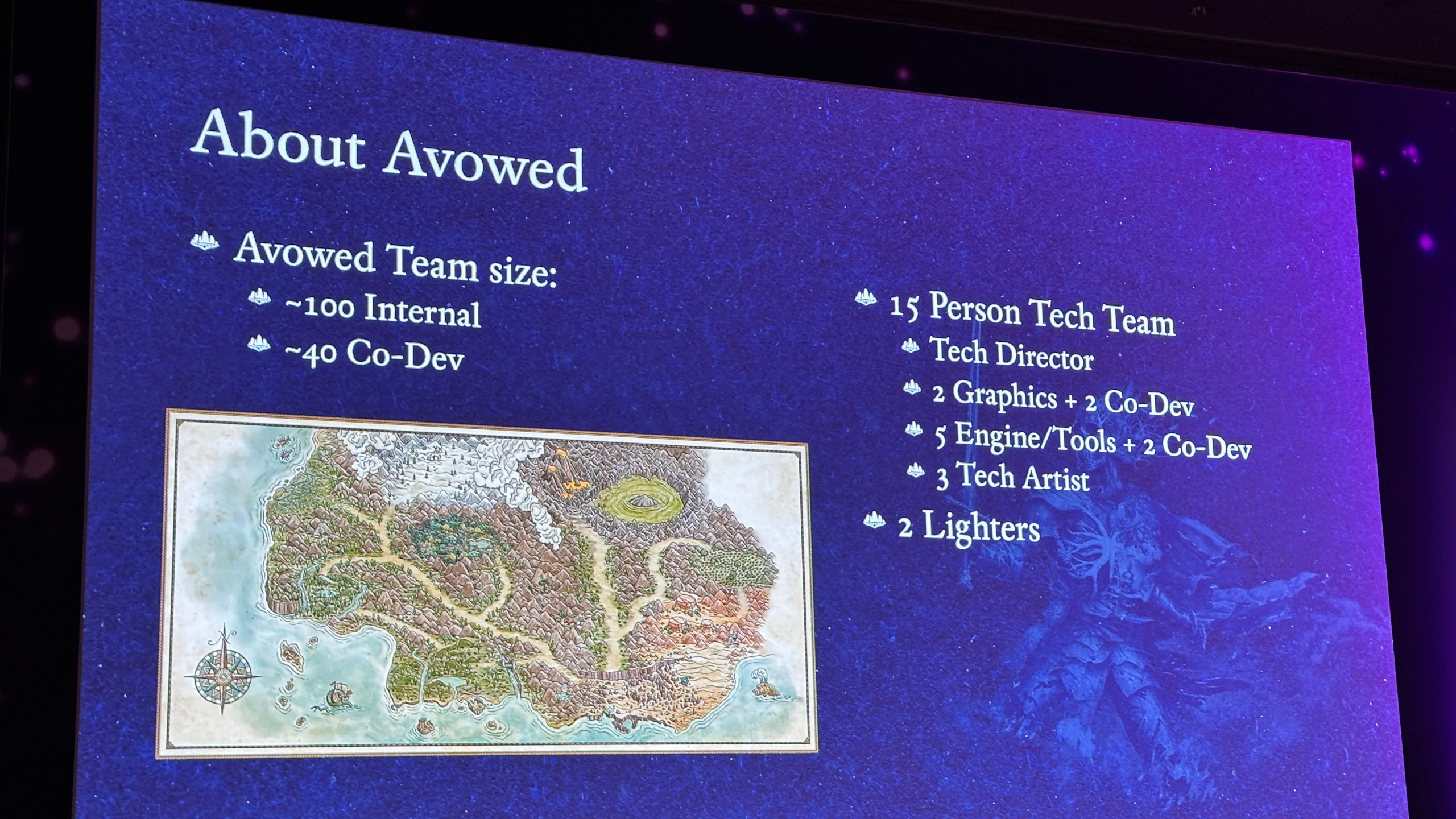

By that time, however, UE 5.5 was already available, so why didn't Obsidian use the very latest version for better performance? One possible answer is the size of the team: a total of 140 people were involved with making Avowed (100 internal to Obsidian, 40 external), but only 15 of those were part of the technical team and just four of those handled graphics programming.

Perhaps one of the co-developers had a chance to work on improving how the game processed shader compilation, but given that PSO precaching only appeared in UE 5.2, I should imagine that Obsidian probably didn't have much chance to work with it.

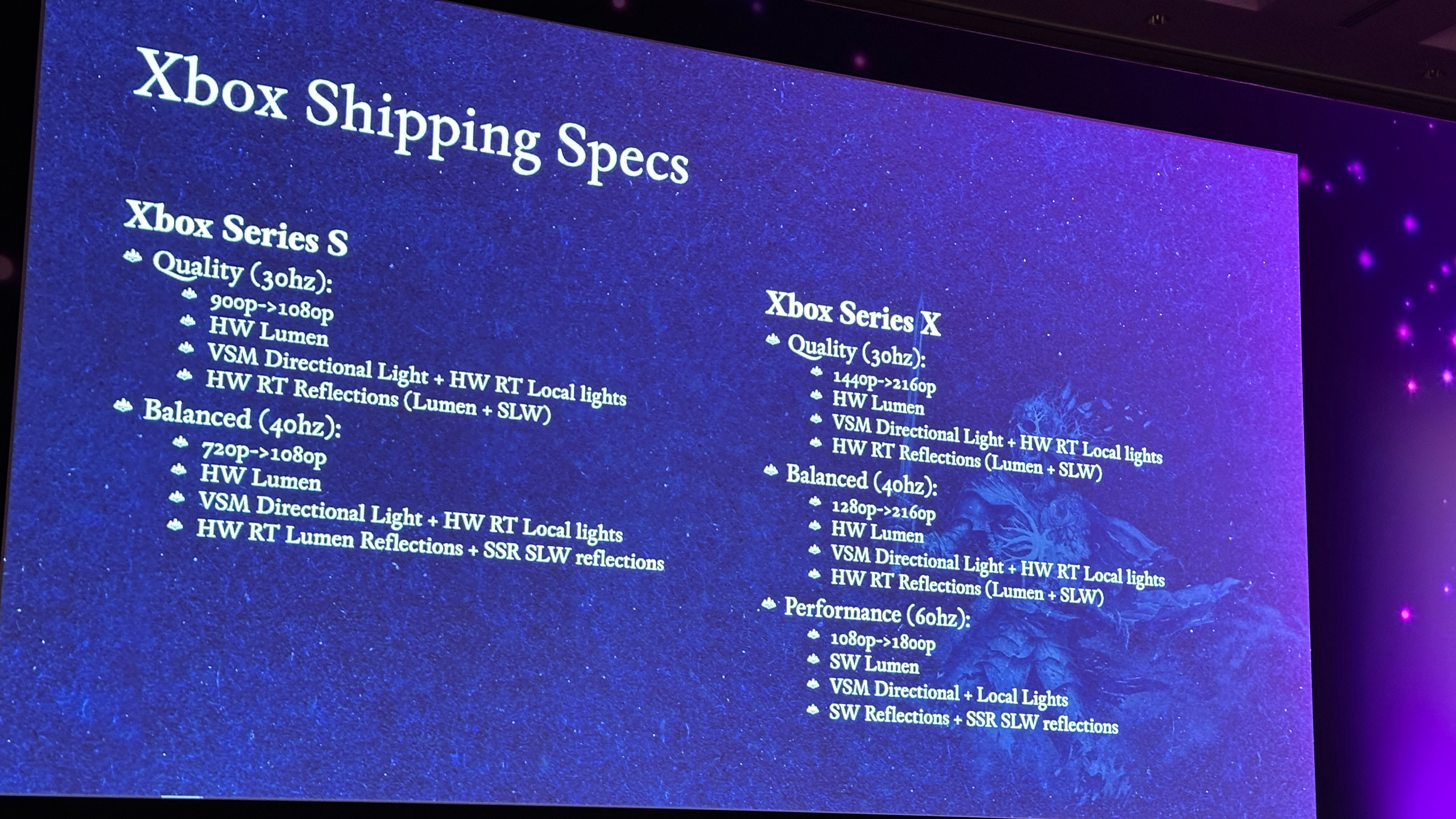

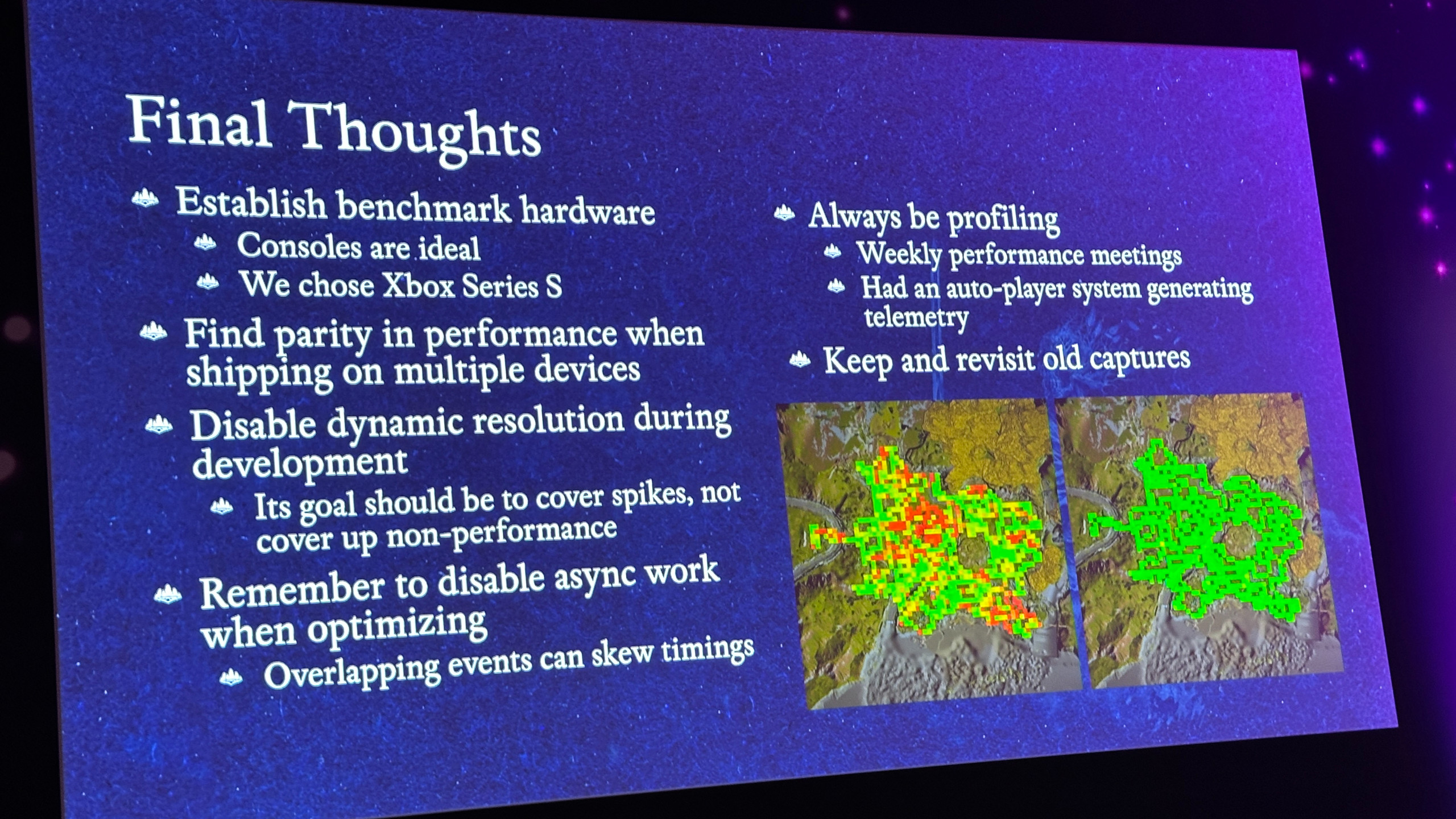

Obsidian also targeted the Xbox Series S as the benchmark hardware, ie the platform to develop performance expectations around. The developers aimed for 30 to 40 fps on that console, with Series X and PCs going up to 60 fps. When you compare the differences in resolution and what rendering techniques were used, it's clear that it was a struggle to get the intended graphics fidelity on the Series S, and I suspect it consumed a considerable amount of time.

In short, Obsidian started on an old version of Unreal Engine, used a relatively weak console as the baseline platform (one has to if one plans on publishing a game for the Series X), and only had a small number of people available for programming. It's a credit to the team that they managed to make Avowed look as good as it did, though eschewing outright performance on the PC probably helped in that respect.

I suspect that this is a similar story for many other Unreal Engine 5 games. Perhaps not the point about the number of developers, but certainly the one about starting the project with an old version of UE. The latest version, UE 5.6, is packed full of performance improvements (the Nanite Foliage tech showcased in the Witcher 4 tech demo won't appear until 5.7), but if a game is shipped on, say, 5.4 or older, then it can't take advantage of them.

And for all that UE 5.6 does better than previous versions, few of its improvements are simply a case of click and it's done. PSO precaching, for example, is enabled by default, but it still requires programmers to have a strategy for tackling shader compilation and to write the code that makes it all work as intended (a UE blueprint for PSO precaching will be available in UE 5.6).

Epic's sessions on UE performance improvements at Unreal Fest were packed full (the one on shader compilation was so full, I couldn't get in), so it's clear that developers are mindful of how the gaming community views Unreal Engine. Hopefully, this means that the AAA and AA games we'll see in the near future will be making full use of the latest version of UE, and things like shader compilation and traversal hitches will be a thing of the past.

Until then, however, we'll just have to hope that game developers using Unreal Engine fully take on board what Epic is saying and that publishers and management give programmers the time and scope to properly explore the tools available. Optimisation isn't a one-click wonder, though, and probably takes up a lot more time than is really available. Same as it ever was, unfortunately.

Nick, gaming, and computers all first met in the early 1980s. After leaving university, he became a physics and IT teacher and started writing about tech in the late 1990s. That resulted in him working with MadOnion to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its PC gaming section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but missed the writing bug. Cue four years at TechSpot.com covering everything and anything to do with tech and PCs. He freely admits to being far too obsessed with GPUs and open-world grindy RPGs, but who isn't these days?