Security mitigations in Intel's GPUs rob up to 20% of their compute performance but it's unlikely to be a problem in games

If you're an OpenCL coder on Linux, though, you might want to consider disabling them.

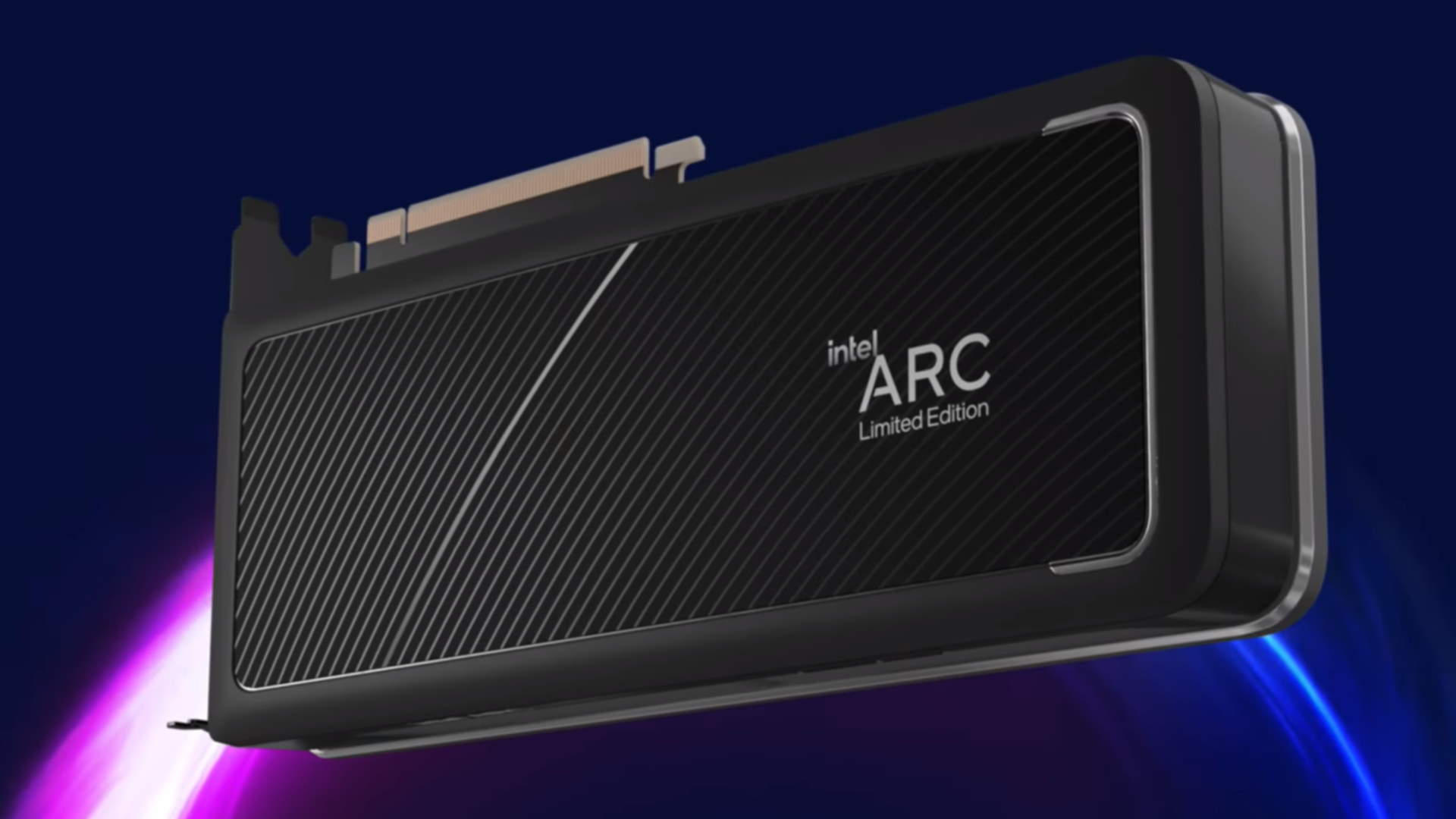

Many readers will remember the furore over Spectre and Meltdown, security exploits that targeted CPUs from AMD and Intel. Similar vulnerabilities now surface so often that few of the announcements make the headlines, and when the same thing happens with GPUs, most people barely notice. But if you knew that your Intel GPU could be losing up to 20% of its compute ability because of security mitigations, that could certainly raise an eyebrow or two.

That figure comes straight from the horse's mouth, or rather from an Ubuntu bug report (via Phoronix). Apparently, the developers of the Linux distro are working with Intel to have the security mitigations disabled by default, in order to recoup up to 20% of lost compute performance.

One can already do this for Intel's Graphics Compute Runtime by building it with the appropriate option (the rather cool-sounding NEO_DISABLE_MITIGATIONS), but this is about having it switched on for you so that you're not waving goodbye to one-fifth of your Intel GPU's processing ability.

I hasten to add that this is purely for use with Linux applications that use OpenCL or Intel's oneAPI Level Zero. Workstations with Arc GPUs, crunching through hours of compute calculations, are far less likely to be exposed to malware and other nefarious attacks than an average gaming PC, so the performance gains outweigh the increased security risk of switching off the mitigations.

What surprised me was just how big a performance impact these measures have. Countering the likes of Spectre and Meltdown barely affected CPUs, and in gaming, there's practically no difference at all. But 20%? Yikes.
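It's worth pausing on what "20%" means here, because a 20% loss and a 20% gain are not the same thing. The short Python sketch below is purely illustrative arithmetic, not anything taken from the bug report: the function names are made up for this example, and it assumes the overhead is expressed as a fraction of the unmitigated throughput.

```python
def gain_from_removing_overhead(lost_fraction: float) -> float:
    """Relative speedup over the mitigated state, assuming mitigations
    cost `lost_fraction` of the unmitigated throughput (hypothetical model)."""
    if not 0.0 <= lost_fraction < 1.0:
        raise ValueError("lost_fraction must be in [0, 1)")
    # Mitigated throughput is (1 - lost_fraction) of the original,
    # so removing the overhead scales performance by 1 / (1 - lost_fraction).
    return 1.0 / (1.0 - lost_fraction) - 1.0


def overhead_from_gain(gain_fraction: float) -> float:
    """Inverse view: the share of unmitigated throughput that was being lost
    if disabling mitigations yields a `gain_fraction` speedup."""
    if gain_fraction < 0.0:
        raise ValueError("gain_fraction must be non-negative")
    return 1.0 - 1.0 / (1.0 + gain_fraction)


if __name__ == "__main__":
    print(f"20% lost  -> {gain_from_removing_overhead(0.20):.1%} gain once disabled")
    print(f"20% gain  -> {overhead_from_gain(0.20):.1%} was being lost")
```

In other words, if the mitigations really do swallow a fifth of the GPU's compute throughput, switching them off is a 25% uplift over where you were; if the figure is instead a 20% gain, the mitigations were costing roughly a sixth.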

While no game uses OpenCL or Level Zero, there are plenty that involve a lot of compute work for rendering, and it's not clear whether the security mitigations are permanently implemented in Windows or Intel's GPU drivers. I suspect they're not, simply because I've seen plenty of cases where an Arc graphics card performs pretty much in line with its specifications, compared to the competition.

But if your line of work involves an Intel GPU, Linux, and the aforementioned APIs, then you might want to delve a little deeper into all of this, because gaining 20% more compute by flipping a single switch isn't to be sniffed at.

Nick, gaming, and computers all first met in the early 1980s. After leaving university, he became a physics and IT teacher and started writing about tech in the late 1990s. That resulted in him working with MadOnion to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its PC gaming section, YouGamers. After the site shutdown, he became an engineering and computing lecturer for many years, but missed the writing bug. Cue four years at TechSpot.com covering everything and anything to do with tech and PCs. He freely admits to being far too obsessed with GPUs and open-world grindy RPGs, but who isn't these days?
