Strip Fortnite is the latest in lazy exploitation on YouTube (NSFW)

'SMASH THAT THUMBS UP FOR THAT 1 KILL 1 FEMALE VIAGRA'

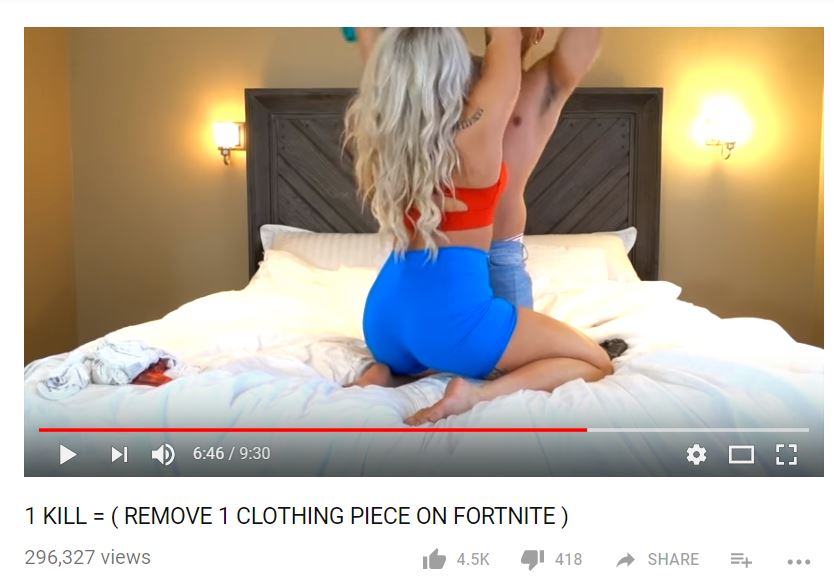

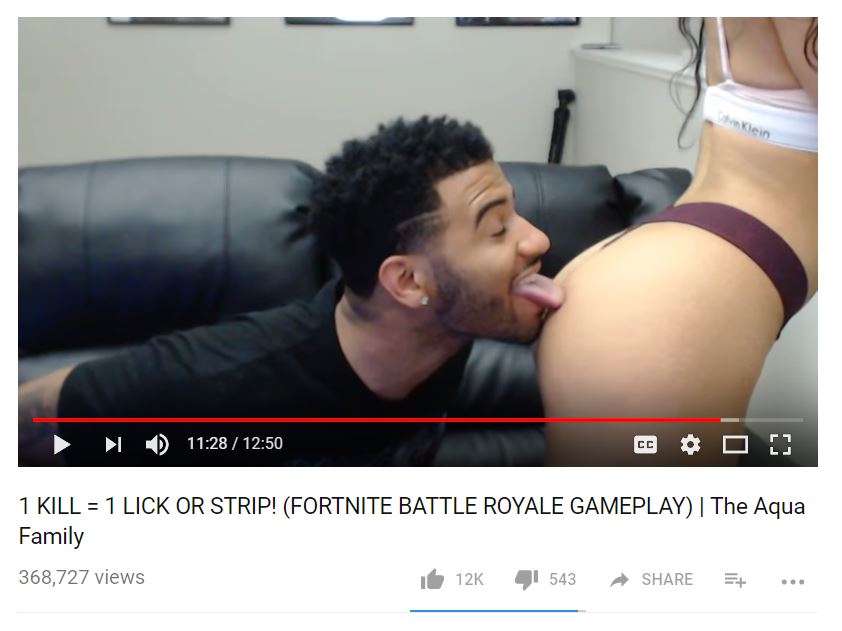

Strip Fortnite, as depicted on YouTube, is not a real thing. It is a recent YouTube trend in which view-hungry 'tubesters put on an absurd amount of clothing, and then either watch a prerecorded Ninja stream or a loud person in the room play Fortnite, typically removing one article of clothing with each kill until they approach—take a breath, preteens—the possibility of nudity, only to start plucking away at smaller and smaller bits of their wardrobe like shoelaces and socks and hairpieces to stay safely within YouTube's nudity and sexual content guidelines. Slap a provocative thumbnail on the sucker, and ship it. And that's where views come from, little Billy.

YouTube's nudity and sexual content policies should be flagging this stuff based on the video titles alone. They state right at the top that "Sexually explicit content like pornography is not allowed. Videos containing fetish content will be removed or age-restricted depending on the severity of the act in question."

And yet I'm able to watch these videos unrestricted without logging in. They, and a torrential flood of pointed 'strip fetish' videos, are all over YouTube now, unrestricted, most of which contain content that should be age-gated at the very least, if not removed entirely.

On top of this, YouTube has a separate policy detailing deceptive or sexually provocative thumbnail use. It reads as follows: "Please select the thumbnail that best represents your content. Selecting a sexually provocative thumbnail may result in the removal of your thumbnail or the age-restriction of your video. The thumbnail is the title card that will be shown next to your video across the site and should be appropriate for all ages."

Let's run a quick test as I'm sure some thumbnails are being unfairly judged.

OK, so there's a lot of super horny, crass imagery on YouTube and every little Billy in the world can see the lion's share of it without any restrictions, even though there are policies in place to shield poor little Billy's eyes.

One particular YouTuber's take on the trend has drawn the most (well-deserved) ire. Content fool touchdalight recently posted and shortly after removed a video that implied he would be playing Strip Fortnite with his 13-year-old sister, a fictional premise that wasn't actually depicted in the video (they're cheap actors playing roles). But the video represents the extent to which some YouTubers will go to dance around nudity and sexual content guidelines in order to grab eyes. It's indicative of a larger problem on YouTube in which such creators pair known, sometimes taboo, fetishes with popular search terms in order to reach the largest audience possible. These videos don't actually contain explicit sexual content, just some skin here and there and the occasional blurred chest, but they do allude to and tease the possibility of uncensored nudity at every opportunity. It's just enough to send little Billy's imagination off a sheer sexual cliff.

Take known idiot RiceGum's Strip Fortnite videos, for example. Each starts with a micro-montage of what is implied to occur in the video. Quick cuts between cleavage and the subjects screaming usually conclude with a half-second of a shirt beginning to come off, but the videos themselves are invariably censored whenever it gets to that point. It's exploitative garbage.

But the strategy has worked out well for the guy: a few of his Strip Fortnite videos have over 10 million views, doubling, sometimes tripling, the view counts of other videos he released at the same time. As pointed out by Polygon, popular Fortnite Twitch streamers are becoming aware of the trend, with TSM_Myth calling out RiceGum's open abuse of the Fortnite/nudity-tease killer combo.

Good on TSM_Myth for knowing his audience, though I worry YouTube's slow enforcement of their existing policy on a gathering storm of illicit content designed to exploit young Fortnite enthusiasts won't deter anyone looking for some easy views—unless YouTube comes out in force, that is.

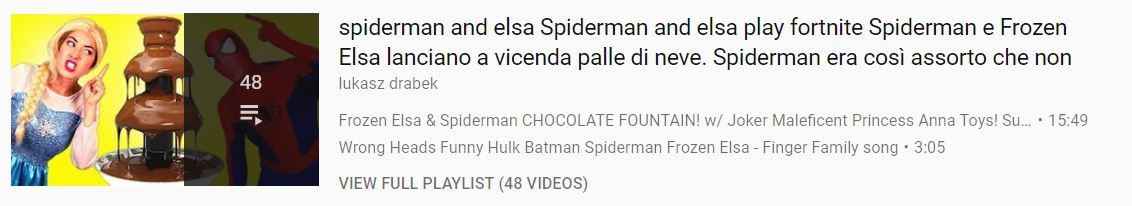

Strip Fortnite is only the latest in a string of problems with YouTube's search and suggestion algorithms. In November of 2017, James Bridle collected his findings on a then widespread trend of videos aimed at children, and often starring them, in which the children or popular superhero, cartoon, or Disney characters were placed in disturbing situations. Many of these channels had millions of subscribers, and some had billions of views, clearly reaching their target audience, disguising genuine horror beneath cute thumbnails any tech-savvy preschooler would click on.

YouTube has since acknowledged and addressed the issue by deleting around 50 channels right away and many more since (though they're unfairly forgiving to YouTube icons they can't afford to lose completely). And yet hundreds of videos still game the algorithm, dropping every popular superhero and Disney character in the title to grab the attention of a young, innocent audience. With so many of the same youths playing Fortnite, it was only a matter of time until Elsa, Spider-Man, and Fortnite finally met.

Both of these unfortunate trends are a reminder of how far platforms like YouTube that rely on automated moderation lag behind on policing their own policies.

James is stuck in an endless loop, playing the Dark Souls games on repeat until Elden Ring and Silksong set him free. He's a truffle pig for indie horror and weird FPS games too, seeking out games that actively hurt to play. Otherwise he's wandering Austin, identifying mushrooms and doodling grackles.