Remembering Rage, a flawed technical marvel

The things I remember most about Rage are megatexturing and a 60fps framerate cap.

There are games that leave a lasting impression—stories that will live on in memory for the rest of my life. Rage is not one of those. I couldn't remember anything about the game other than it involved vault-things called Arks being buried in the earth to survive the impact of an asteroid, which of course didn't work out as planned. I finished Rage when it was new, but besides being surprised that there was no real "big bad boss battle" climax, the main thing I recall is that Rage was the showcase for the id Tech 5 engine.

A big talking point for id Tech 5 back in the day was megatexturing. From a purely technical perspective, megatexturing (or virtual textures) wasn't even new in id Tech 5—it was also used in id Tech 4. But id Tech 5 allowed for textures up to 128Kx128K instead of 32Kx32K in id Tech 4, and more is always better, right? The main goal with megatexturing is to allow artists to build a world without worrying about hardware limitations like VRAM. The game engine streams in the textures as needed (and at quality levels that fit into memory). Every surface can have a unique texture, so you don't get the repeated textures so often seen in other games.
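To make the streaming idea a little more concrete, here is a minimal sketch of generic virtual texturing: a texture coordinate is mapped to a small page of the huge virtual texture, and only recently used pages are kept in a fixed-size cache. This is not id Tech 5's actual code. The page size, the `page_for` helper, and the `PageCache` class are all assumptions made up for illustration; only the 128Kx128K figure comes from the text above.

```python
from collections import OrderedDict

VIRTUAL_SIZE = 128 * 1024  # texels per side of one megatexture (from the article)
PAGE_SIZE = 128            # assumption: texels per side of one streamed page


def page_for(u, v, mip):
    """Return (mip, page_x, page_y) for a UV coordinate in [0, 1).

    Each higher mip level halves the resolution, so there are fewer pages.
    """
    pages_per_side = max(1, (VIRTUAL_SIZE >> mip) // PAGE_SIZE)
    x = min(int(u * pages_per_side), pages_per_side - 1)
    y = min(int(v * pages_per_side), pages_per_side - 1)
    return (mip, x, y)


class PageCache:
    """Hypothetical least-recently-used cache standing in for VRAM."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.pages = OrderedDict()

    def request(self, key):
        """Return True on a cache hit; on a miss, 'load' the page and evict the oldest."""
        if key in self.pages:
            self.pages.move_to_end(key)  # mark as most recently used
            return True
        self.pages[key] = b""  # placeholder for transcoded texel data
        if len(self.pages) > self.capacity:
            self.pages.popitem(last=False)  # drop the least recently used page
        return False


cache = PageCache(capacity=2)
near = page_for(0.5, 0.25, mip=0)  # full-resolution page for a close-up surface
far = page_for(0.5, 0.25, mip=3)   # coarser page for the same spot seen from afar
print(near, far)
print(cache.request(near), cache.request(far), cache.request(near))
```

A smaller cache, or a higher starting mip level, is the sort of knob that lets the same world fit on weaker hardware at the cost of blurrier surfaces.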

Rage apparently uses 1TB of uncompressed textures, which is a massive figure when you think about it. At four bytes per texel, that's like a single 524288x524288 texture, and I could use up my entire monthly bandwidth allotment just downloading that much data. Rage didn't use a single overarching megatexture. Instead, there were multiple 128Kx128K megatextures, each containing the artwork for specific areas like Wellspring, Subway Town, the outdoor desert environment, etc. The problem is that an uncompressed 128Kx128K texture is still 64GiB in size, so HD Photo/JPEG XR is used to heavily compress the megatexture, and then pieces are transcoded on the fly into a usable texture.
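The size figures are easy to sanity check. The sketch below assumes four bytes per texel (8-bit RGBA), which is the value that makes both numbers line up; the function name is made up for illustration.

```python
BYTES_PER_TEXEL = 4  # assumption: uncompressed 8-bit RGBA
GIB = 1024 ** 3
TIB = 1024 ** 4


def uncompressed_bytes(side_texels, bytes_per_texel=BYTES_PER_TEXEL):
    """Size in bytes of a square, uncompressed texture."""
    return side_texels * side_texels * bytes_per_texel


megatexture = uncompressed_bytes(128 * 1024)  # one 128Kx128K megatexture
everything = uncompressed_bytes(524288)       # the hypothetical single texture

print(megatexture / GIB)          # 64.0 (GiB)
print(everything / TIB)           # 1.0 (TiB)
print(everything // megatexture)  # 16 megatextures' worth of data
```

In other words, 1TB of raw texture data is roughly what sixteen full 128Kx128K megatextures would hold under that assumption.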

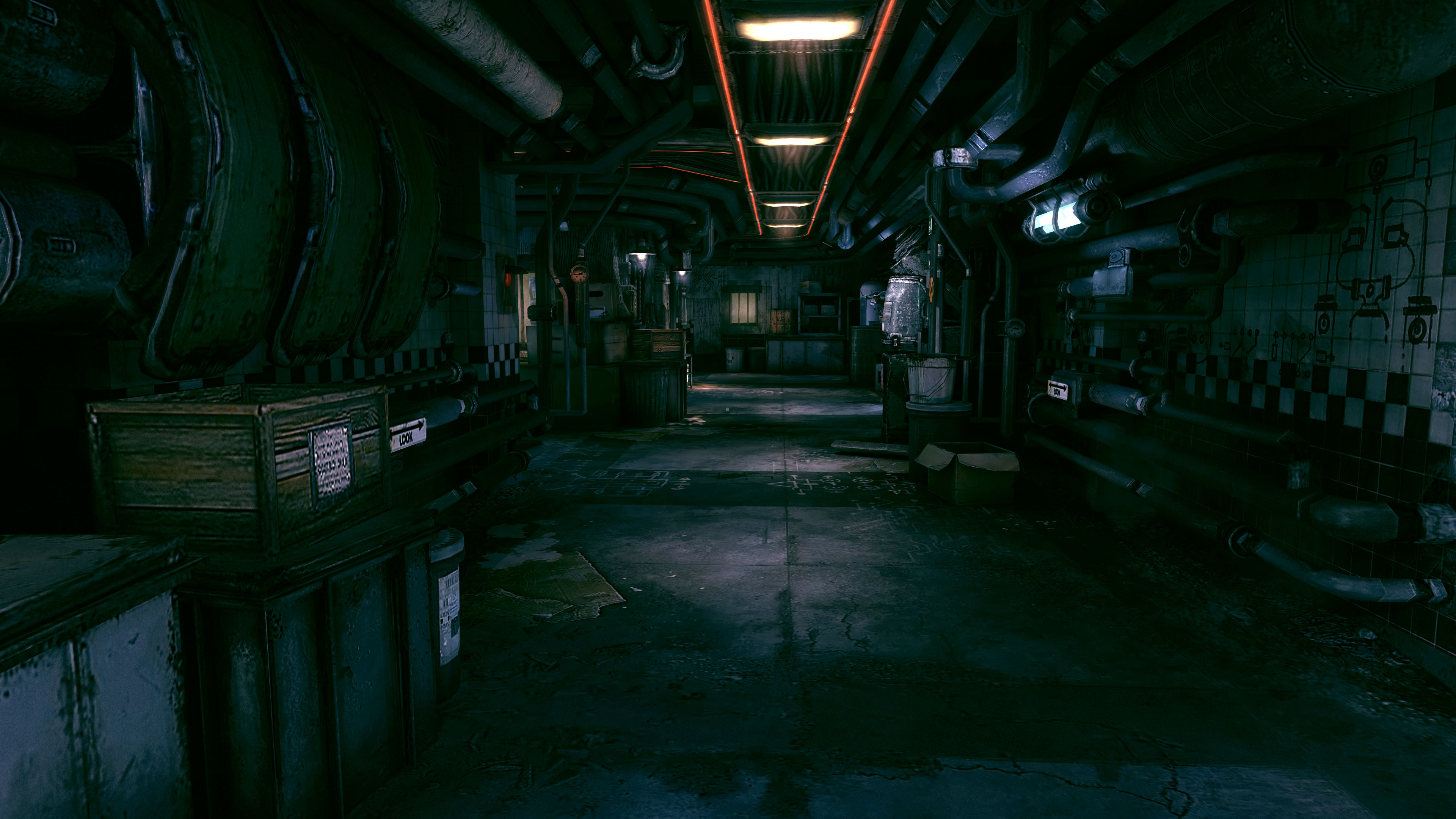

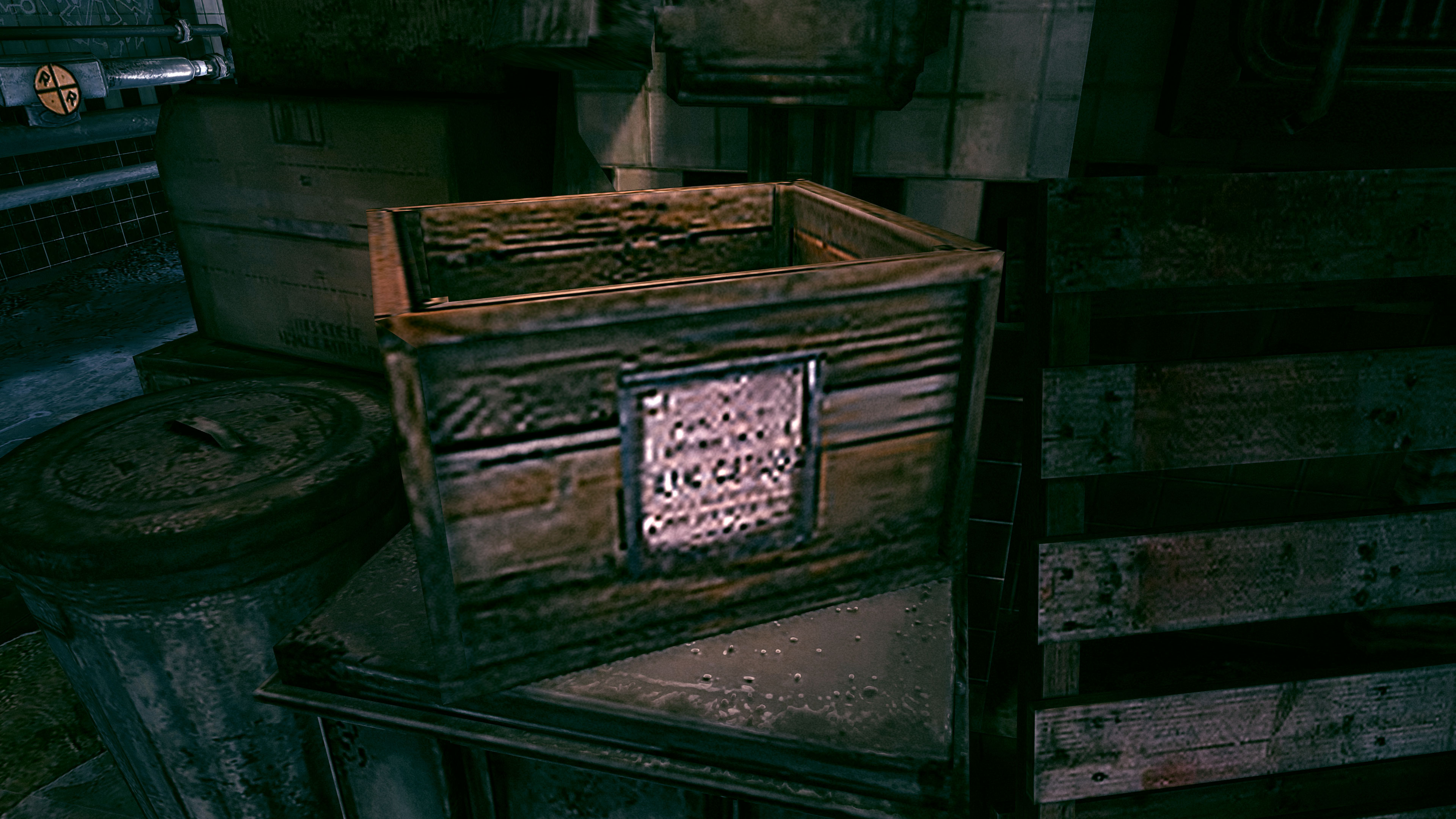

Ultimately, Rage ended up being a 20GB install (with 17GB of textures), at a time when 5-10GB games were the norm. Its use of (mostly) unique textures for every surface made for a more visually interesting environment at times, and even now, eight years later, Rage looks decent. But while the scenery can look good from afar, getting up close to the textures shows some limitations.

The compression lends everything a somewhat grainy look, which is perhaps part of the Rage aesthetic. Look at a wall from across the room and it's not too bad, but get up close to most objects and there's a ton of blur and fuzziness. Character faces and some other objects get higher quality textures, but there's no way to store high resolution textures for every surface (without modern 50GB and larger HD texture packs, at least), and in Rage a lot of objects probably ended up with a 64x64 or even 32x32 slice of the megatexture.

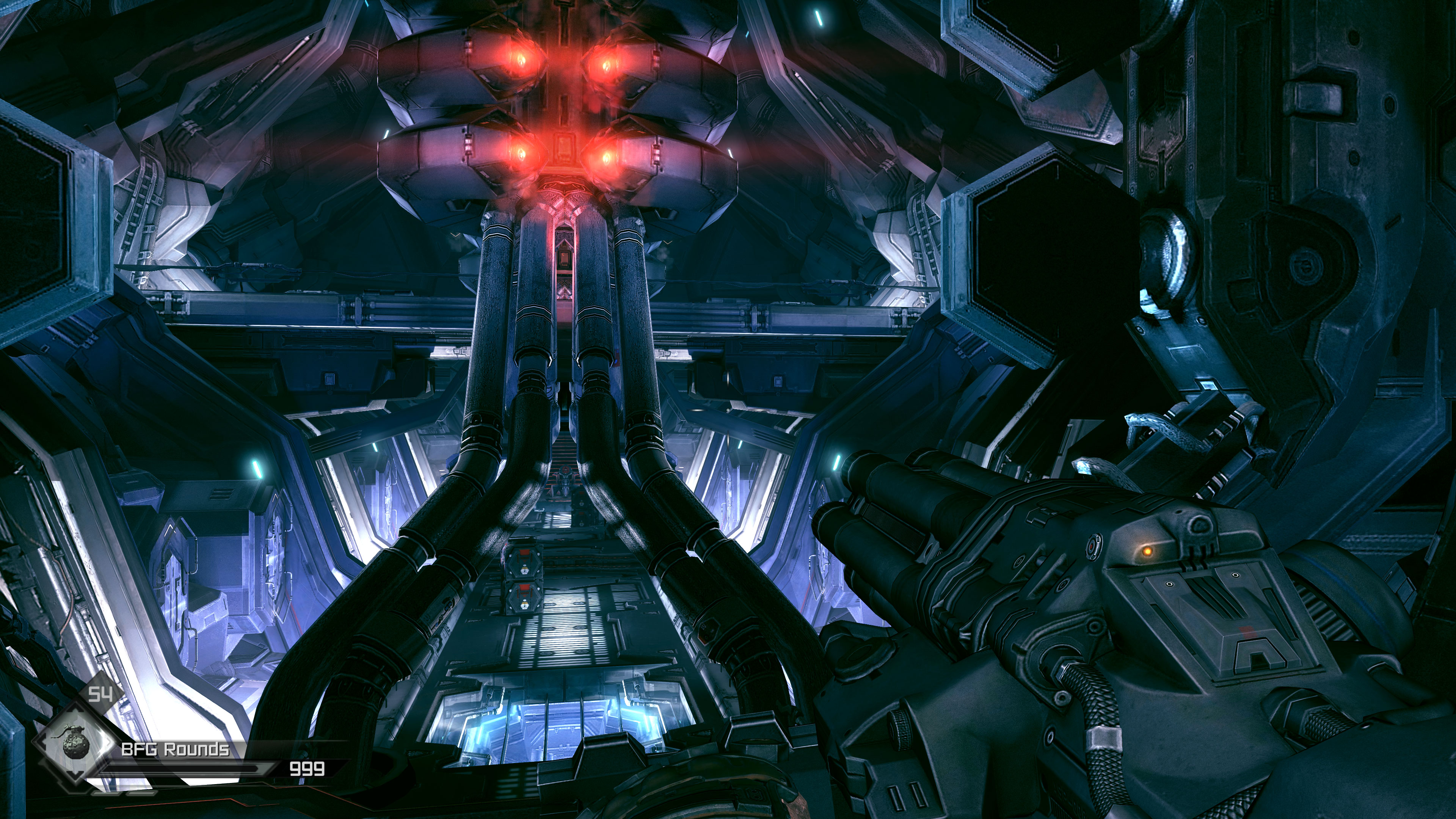

But Rage also forged new territory in other ways. Rage would dynamically scale texture and scene data based on your PC's capabilities, aiming for a steady 60fps experience. I remember playing it on a GTX 580 at launch, and even at 2560x1600 with 8xAA it would run at a steady 60fps. It would also generally run at that same resolution and 60fps on far less capable hardware—though it might not look quite as nice. Today, armed with an RTX 2080 Ti, Rage will still run at 60fps at 4k, and it will also do 60fps at 4k on just about every other dedicated graphics card currently sitting in my benchmark cave (it's like a man cave, for PC hardware nerds).

This got me wondering: How does Rage run on Intel's current integrated graphics? I fired up my trusty Core i7-8700K test system, sans graphics card and using Intel's UHD Graphics 630. Capturing the framerate data was a bit difficult as Rage appears to lock out the keyboard shortcuts for starting/stopping frametime logging (for FRAPS, OCAT, and PresentMon), but I got around that by switching to the desktop, starting the PresentMon capture, and then switching back.


The short summary: Rage is completely playable even on Intel's integrated graphics, running at 1080p and "maximum" quality settings. I get around 48fps on average, and even 1440p plugs along at a reasonable 30fps. Minimum fps does dip into the low 20s at times for 1440p, and 4k drops to 16fps average, but I can't feel too bad. As a comparison point, Borderlands (released two years before Rage) only averages 28fps at 1080p max quality using Intel's UHD 630.
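For anyone curious how a frametime log turns into average and minimum fps, here is a rough sketch. The sample frametimes are invented for illustration and are not my captured data, and treating "minimum" as the 97th percentile frametime is an assumption; other definitions (such as the single slowest frame) are equally common.

```python
def average_fps(frametimes_ms):
    """Average fps is total frames divided by total time, not the mean of per-frame fps."""
    return 1000.0 * len(frametimes_ms) / sum(frametimes_ms)


def low_fps(frametimes_ms, percentile=0.97):
    """Assumed 'minimum fps': the fps implied by the frametime at the given percentile."""
    ordered = sorted(frametimes_ms)
    index = min(len(ordered) - 1, int(percentile * len(ordered)))
    return 1000.0 / ordered[index]


# Made-up frametimes in milliseconds, purely for illustration.
sample = [20.0, 20.0, 25.0, 20.0, 40.0, 20.0, 20.0, 25.0, 20.0, 40.0]

print(average_fps(sample))  # 40.0
print(low_fps(sample))      # 25.0
```

Dividing frames by total time matters: averaging the per-frame fps values instead would overweight the fast frames and flatter the result.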

Of course, it really isn't that surprising that Rage works okay on Intel's UHD 630. After all, that's a 1200MHz part with 24 EUs (192 shader cores), which means 460.8 GFLOPS. The PlayStation 3 and Xbox 360 both have about half that level of theoretical performance. Time is the great equalizer of performance.
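The GFLOPS figure comes from simple multiplication. The sketch below assumes each shader core can do two floating point operations per clock (one fused multiply-add), which is the usual convention for theoretical peak numbers; the function name is just for illustration.

```python
def peak_gflops(shader_cores, clock_mhz, flops_per_core_per_clock=2):
    """Theoretical peak throughput; assumes one fused multiply-add (2 FLOPs) per core per clock."""
    return shader_cores * flops_per_core_per_clock * clock_mhz / 1000.0


EUS = 24
CORES_PER_EU = 8  # 192 shader cores / 24 EUs, per the figures above

print(peak_gflops(EUS * CORES_PER_EU, 1200))  # 460.8
```

Theoretical peak is a ceiling rather than a prediction, so real-world results depend heavily on memory bandwidth and drivers as well.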

How does Rage look running on integrated graphics? Only slightly worse than on a much faster GPU, the most noticeable difference being the streaming in of textures when you turn quickly, and that only lasts for a fraction of a second.

I mean that literally: I captured a video of Rage running on UHD 630 while spinning quickly outside, because I couldn't snap screenshots fast enough. It takes at most about five frames to go from the blurry initial image to the "final" image shown in the above gallery (note that a lot of additional compression artifacts are visible in the above gallery because I had to resort to using screenshots from a video source). On a modern dedicated GPU, it's 1-2 frames at most, and usually only the first time you see a texture.

Ultimately, for me Rage was more interesting from a technical perspective. It was the first id Tech 5 game, but its implementation had some clear flaws. Later games built on id Tech 5 technology would remove the fps limit, or at least increase it (e.g., Dishonored 2, whose Void engine is derived from id Tech 5, has a 120fps cap, though you can get around that with Nvidia cards by forcing vsync off). id Tech 5 was also the last of John Carmack's engines, as id Tech 6 was developed after his departure and changed many elements of the engine, though megatexturing remains. Like other id Tech engines, id Tech 5 didn't see widespread use.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.