Assassin's Creed Origins is one of the most demanding games around

Bring a fast CPU and an Nvidia GPU for the best ancient Egypt experience.

Assassin's Creed Origins is one of the strongest entries in the long-running series. Taking a year's break paid off, and Egypt comes to life in a game that's frequently visually stunning. But there's a dark side to the brotherhood—well, another dark side—and it's that the game has some steep hardware requirements.

Ubisoft lists a GTX 660 or R9 270 graphics card as its minimum hardware requirements, with an i5-2400s or FX-6350 CPU. That doesn't sound too bad, but it's important to note that Ubisoft is talking about 30 fps at 720p minimum quality settings, not 60 fps at 1080p. The recommended hardware meanwhile is a GTX 760 or R9 280X, with an i7-3770 or FX-8350, but again that's only likely to get you 30 fps at 1080p high settings.

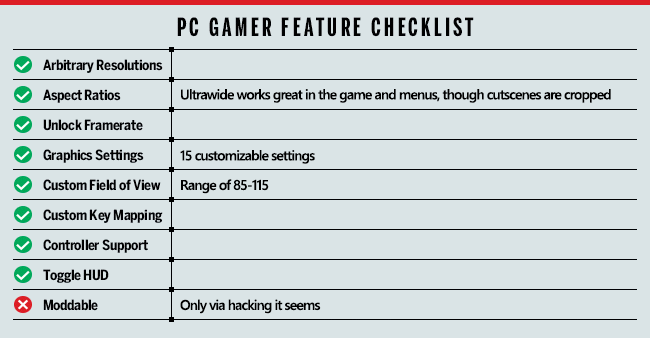

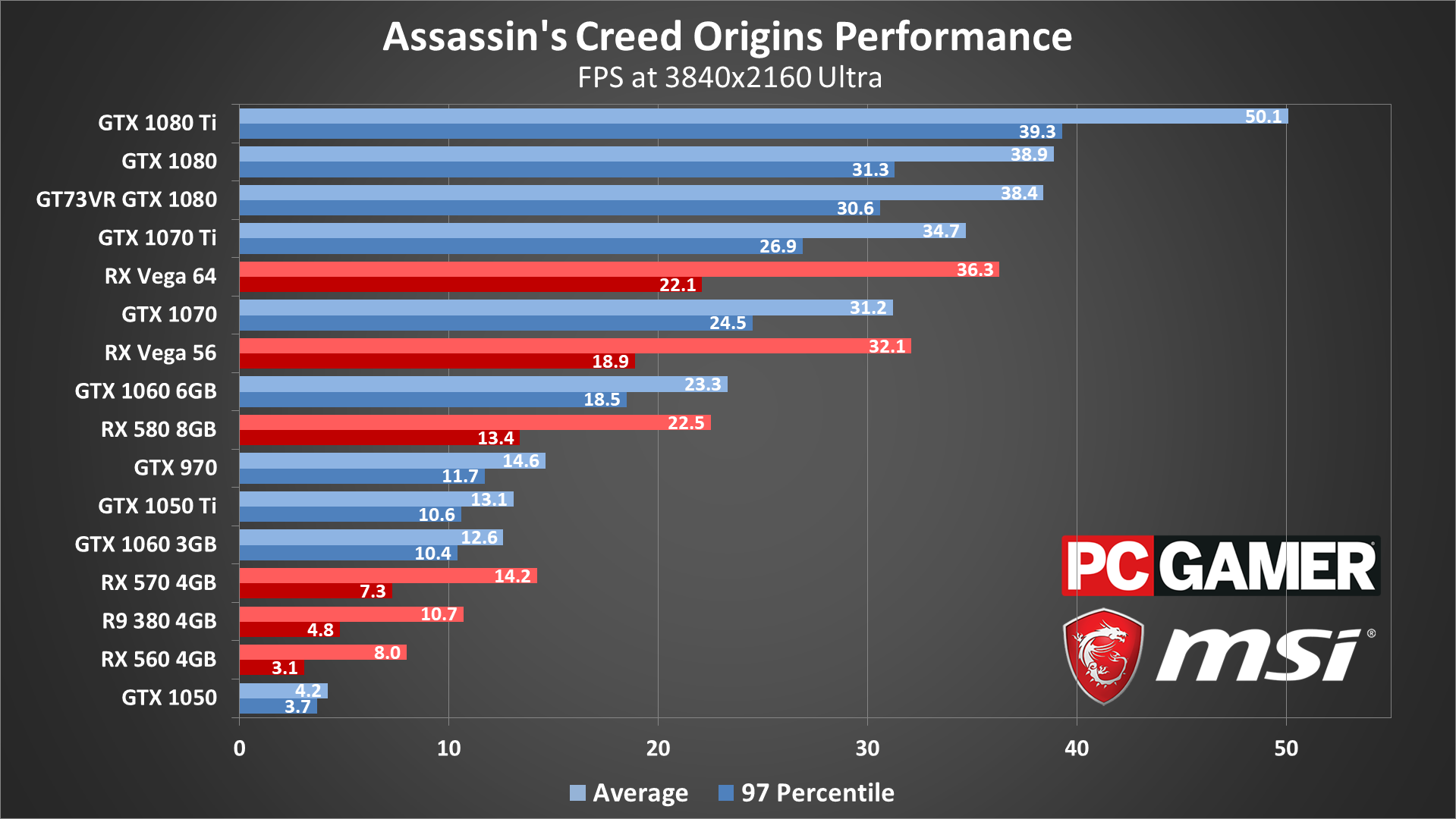

In my testing, for 1080p medium at 30 fps you'll need at least a GTX 1050—which equates to a GTX 960, GTX 770, or GTX 680. The GTX 760 is a fairly sizeable 15-25 percent step down from the 770 (which I've tested below), so 1080p high seems an unlikely target. On the AMD side, you'll need an RX 560 or R9 380 for 1080p medium, and the R9 280X is going to be around that same level of performance. 1080p high should be doable, but only at 30 fps.

As our partner for these detailed performance analyses, MSI provided the hardware we needed to test Assassin's Creed Origins on a bunch of different AMD and Nvidia GPUs and laptops (see below for the full list). Our test equipment and methodology are detailed in our Performance Analysis 101 article. Thanks, MSI!

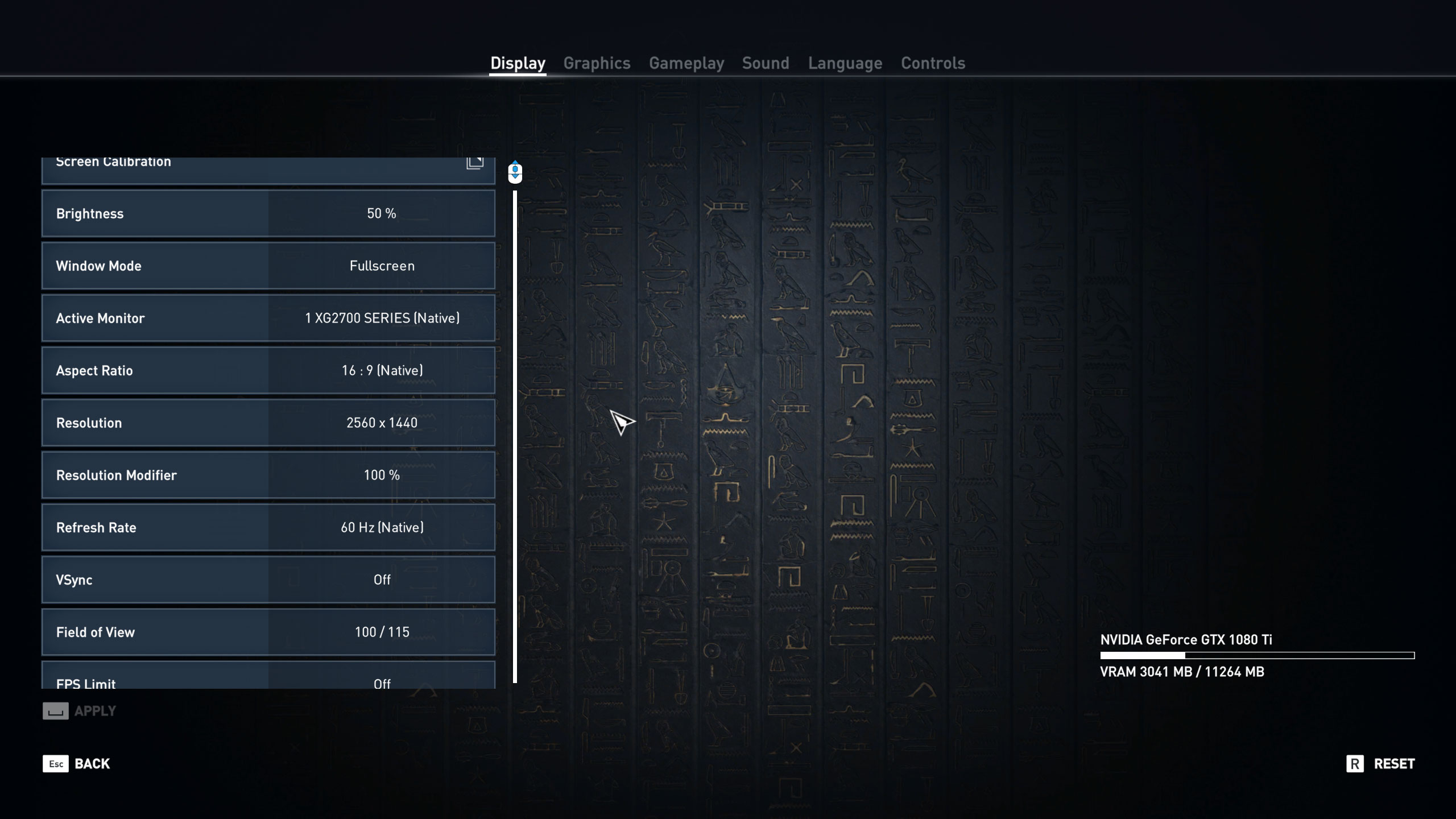

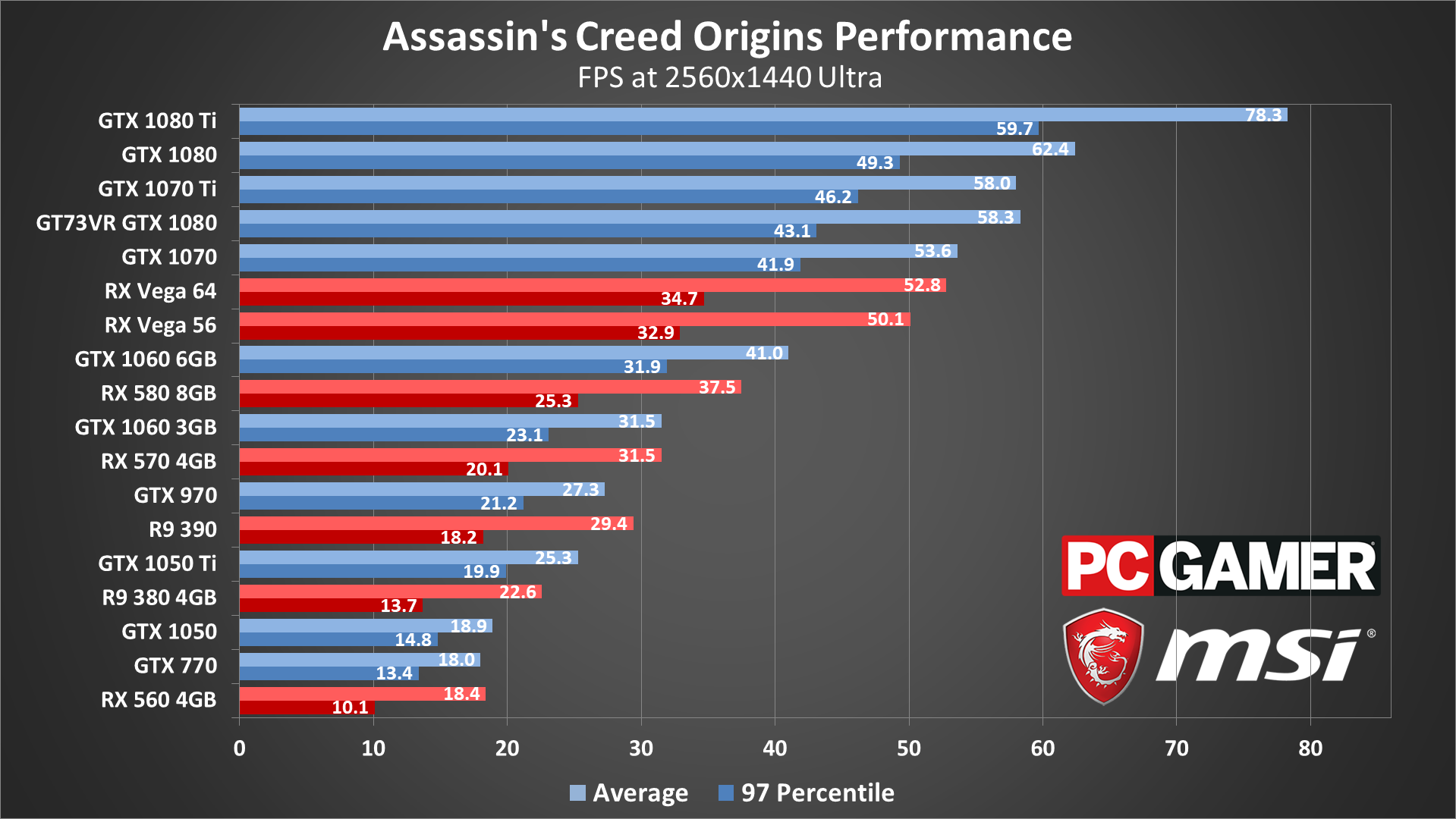

As far as features go, Assassin's Creed Origins does nearly everything right, with the exception of mod support. That's keeping with Ubisoft tradition, which is unfortunate as we'd love to see the modding community tweak some aspects of the game. There have been texture updates for some of the previous games, but not much more than that. Ultrawide support is nearly perfect, except for in the small number of cutscenes. These show black bars on the right and left, but the menus and game world all look stunning on 21:9 displays.

The graphics settings seem like a fairly comprehensive list, and I'll dig into the individual settings and their performance impact at the end of the article. The only problem is that most of the settings only cause a small change in performance. On modern GPUs like the GTX 1060 and above, or the RX 570 and above, going from ultra high quality to very low quality only improves performance by about 50 percent. Cards with less than 4GB VRAM may show greater improvements in performance, but it still requires a fast GPU if you want to get above 60 fps.
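To put that 50 percent figure in perspective, here is a minimal sketch of the arithmetic. The function name and the flat 50 percent gain are assumptions for illustration only, since the actual gain varies by GPU and scene.

```python
def required_baseline_fps(target_fps: float, gain: float = 0.5) -> float:
    """Return the fps needed at ultra high so that a fractional `gain`
    from dropping to very low reaches `target_fps`.

    `gain` is the assumed improvement (0.5 means 50 percent).
    """
    return target_fps / (1.0 + gain)


# A card must already manage about 40 fps at ultra high
# for the very low preset to get it to 60 fps.
print(required_baseline_fps(60))  # 40.0
```

In other words, a card that only manages 30 fps at ultra high would land around 45 fps at very low under this assumption, still short of 60.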

MSI provided all the hardware for my testing with its Gaming X graphics cards for most of the models. The Vega 56 and 64 are reference designs, however, as MSI has not yet announced or released any custom solutions. While I've previously used MSI's Aegis Ti3 as the primary test platform, it turns out that an i7-7700K can actually limit performance on the faster GPUs, so I've moved to MSI's Z370 Gaming Pro Carbon AC with a Core i7-8700K as the weapon of choice, with 16GB of DDR4-3200 CL14 memory from G.Skill. I also had some issues getting Origins to run on several of the MSI notebooks, so I've only included the GT73VR results in the primary charts.

So what does it actually take to run the game well? You'll need both a fast CPU and a fast graphics card to get the most out of Assassin's Creed Origins on PC, and Nvidia GPUs currently show substantially better performance than AMD's GPUs.


Ubisoft says it hasn't used any Nvidia GameWorks libraries this time, and told us, "We have been working very closely with both AMD and NVIDIA for quite a while (and continue to do so) to allow us maximize performance on both GPUs. We are committed to taking advantage of any performance gains available with the new drivers to help players have the best experience they can in ACO with the hardware they have. You can expect we will take the opportunity to make any tweaks which lead to performance gains in the upcoming updates."

All of my current testing was done with the 1.03 patch, using Nvidia's latest 388.13 drivers and AMD's 17.11.1 drivers—which is an update from the initial testing I did at the time of launch. The 1.03 patch smoothed out some of the minimum fps problems, and Nvidia performance across nearly all GPUs improved substantially. AMD performance meanwhile remained flat, with some minor drops in some cases. Hopefully future patches and/or driver updates will help Radeon owners out.

AC Origins includes a built-in benchmark, under the graphics menu, which is what I used for testing. I also did a manual performance run through the starting city of Siwa, including sword fights, assassinations, and riding around on a horse, and found that the built-in benchmark gives a reasonable comparison point. Variation between runs is lower, and it's within a few percent of the performance I measured while playing the game normally. If you wander off into the desert, performance—particularly on AMD cards—is higher, but much of the game will be spent in the cities, which are more taxing.

Starting at 1080p medium, which represents a decent compromise between performance and image quality, AMD users should immediately see red flags. The fastest AMD GPUs max out at less than 60 fps, while Nvidia GPUs can break into the 100+ fps range. More critically, even the GTX 1060 3GB is able to beat the Vega 64.

I've done extensive testing of AC Origins, on multiple CPUs and motherboards, and these results are very consistent. Unless you want to benchmark the game outside of the cities, AMD GPUs come up seriously short. AMD GPU utilization was often in the 60-80 percent range, indicating other bottlenecks besides the GPU core are limiting performance. It could be geometry throughput, but even using the very low preset (which disables tessellation and sets all the other options to minimum) the RX Vega 64 only scored 71 fps at 1080p. There's obviously more work to do to get AC Origins running well on AMD hardware, and Nvidia easily wins any comparisons you might make at these settings.

It's not just AMD hardware struggling with the game, as Intel's HD Graphics 630 (running on an i7-7700K) couldn't even get above 20 fps at 720p minimum quality. You'll need a decent GPU to run Origins.

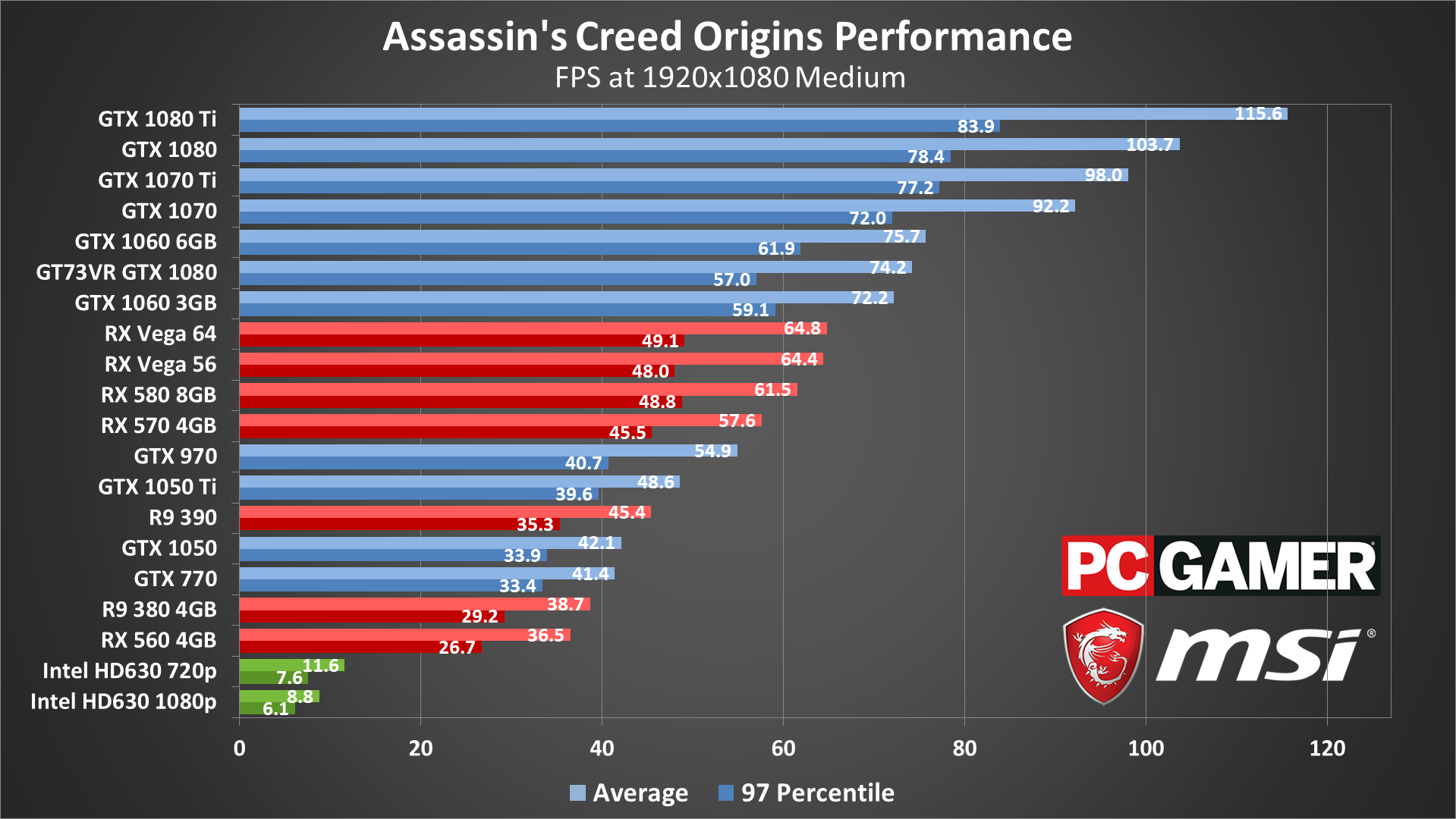

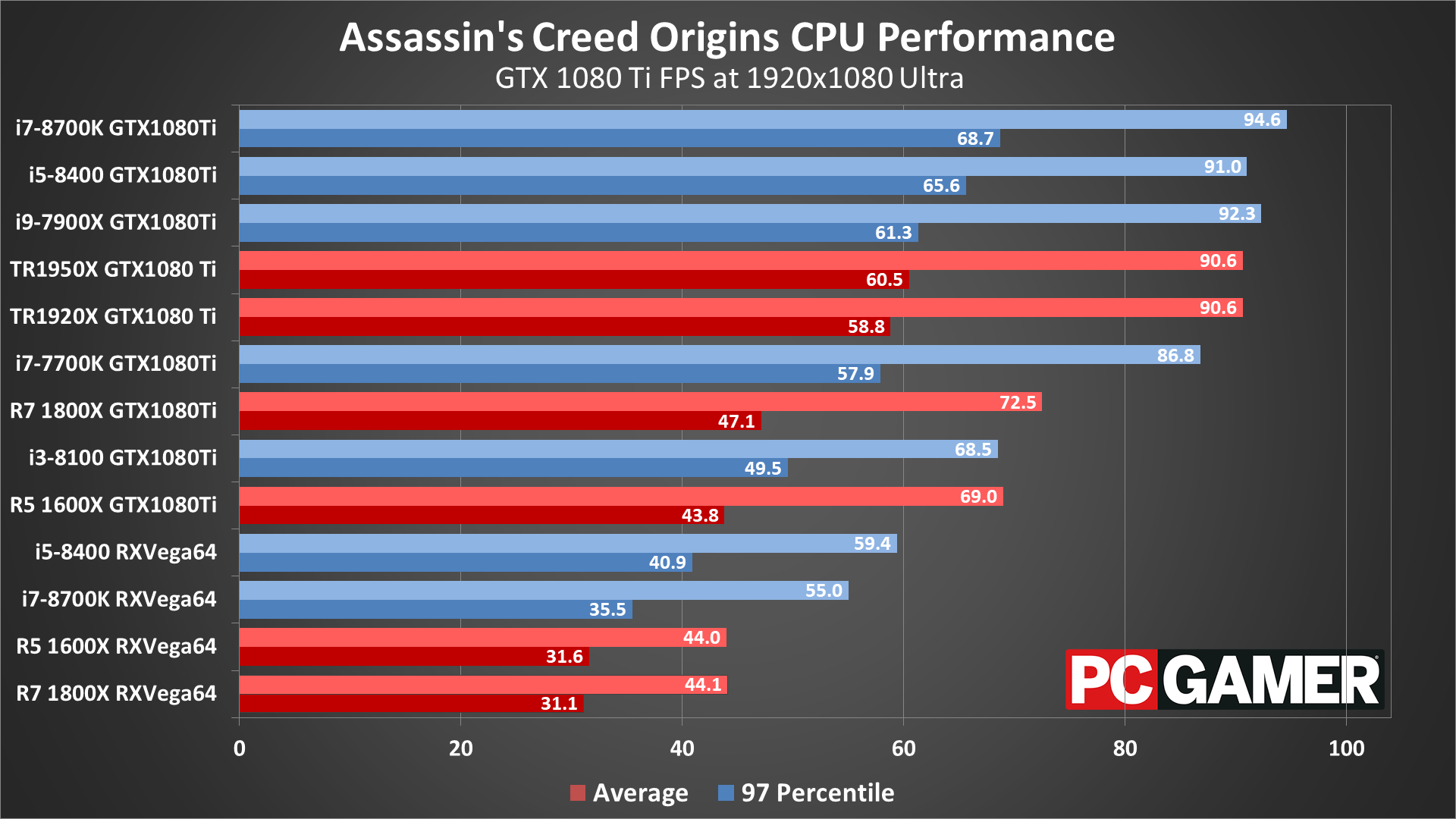

Moving up to 1080p ultra, Nvidia continues to dominate, with the 1060 6GB beating every AMD GPU. The game settings menu estimates VRAM use of about 2.8GB using these settings, so the 1060 3GB still manages to perform well enough, but for very high and ultra quality you'll likely want a 4GB GPU. I'll discuss the settings more in a moment, but AC Origins can definitely push your GPU to its limits.

As before, GPU utilization on AMD cards isn't above 90 percent on the faster models, indicating other bottlenecks. The Vega cards do fine in less populated areas, but as soon as you enter a city, framerates can drop by 50 percent or more. If you're looking for 60 fps or higher at 1080p ultra, only the GTX 1070 and above accomplish that feat, though the GTX 1060 and Vega cards can get there at 1080p high settings.

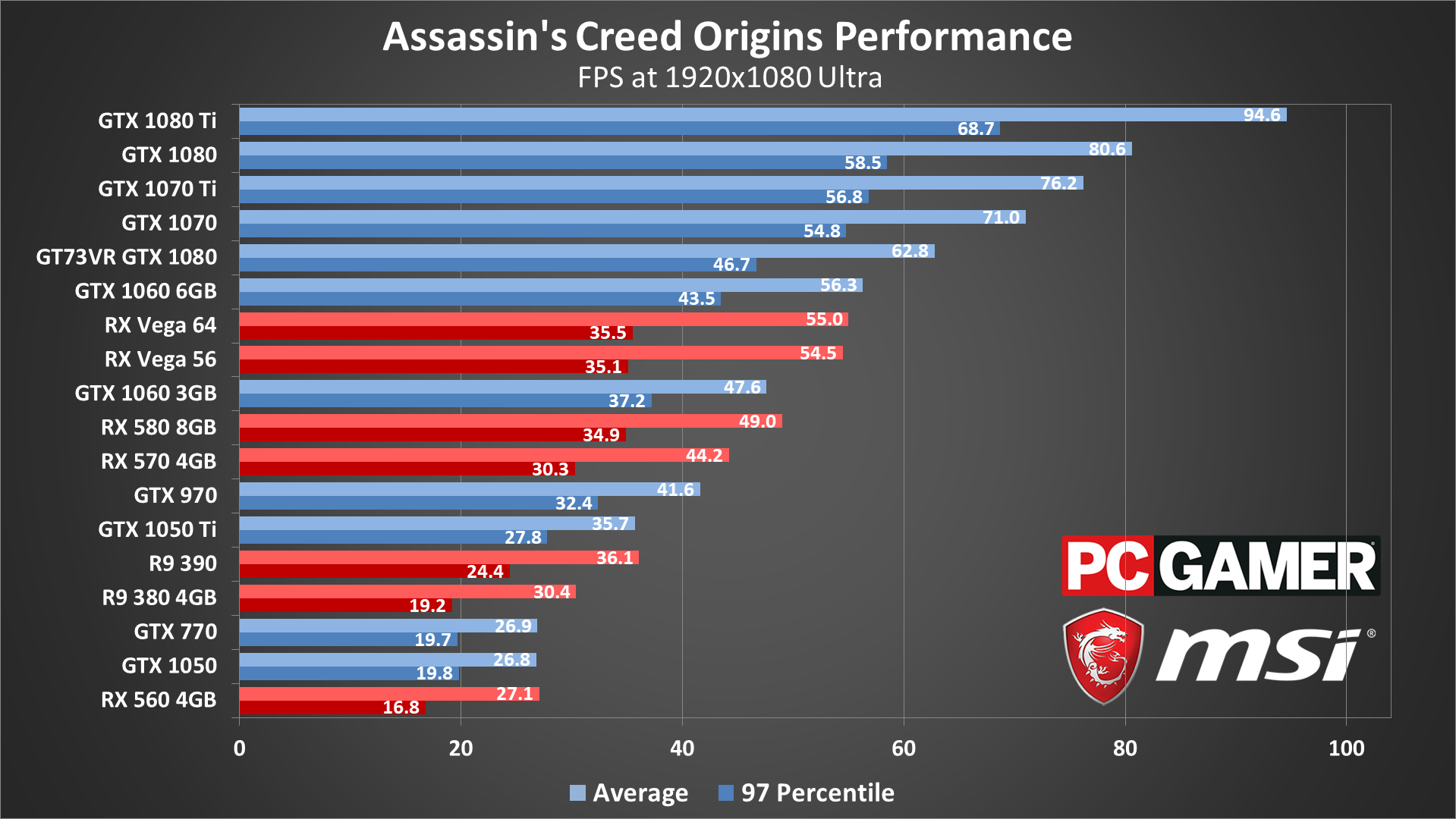

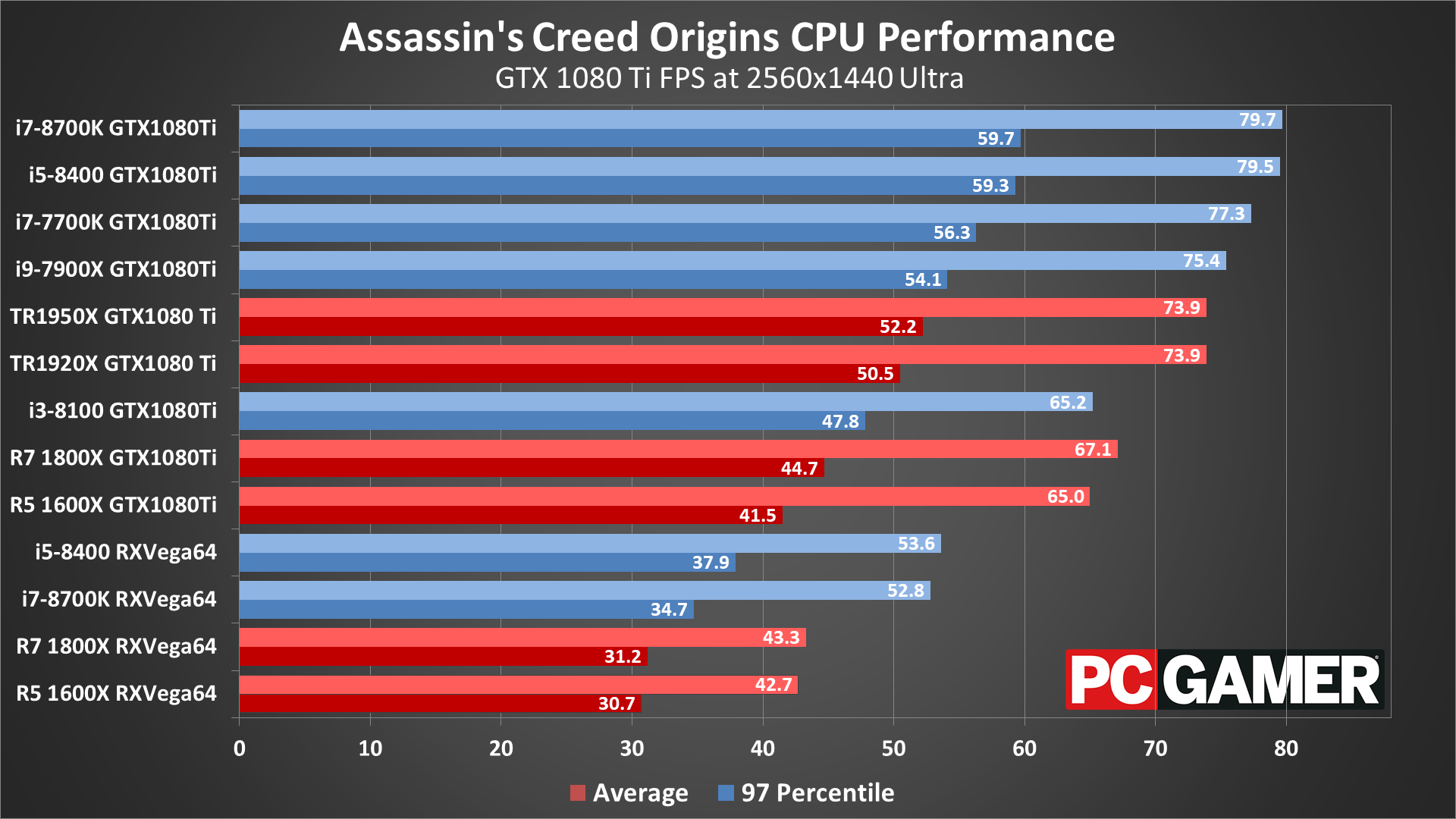

1440p requires some serious hardware if you want to come close to 60 fps—and yes, there's a very clear difference in feel between 30 and 60 fps in AC Origins. Only the GTX 1080 and 1080 Ti get there, though overclocking the 1070 Ti and maybe the 1070 (with a few tweaks to settings) should suffice as well.

Vega can also break 60 fps with the right settings, but there's a lot more microstuttering (dips below the average framerate) on AMD cards. AMD's Vega cards start to gain on Nvidia at 1440p, and GPU utilization also increases. Basically, drivers and CPU become less of a limiting factor as resolution increases.
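To see why two cards with a similar average can feel very different, here is a rough sketch that summarizes a list of frame times. The function name, the choice of the slowest 3 percent of frames, and the sample data are all assumptions for illustration, not the exact method behind the charts.

```python
from statistics import mean


def fps_summary(frame_times_ms, low_percent=3):
    """Return (average fps, 'low' fps) for a list of frame times in ms.

    The 'low' figure is the framerate implied by the mean of the slowest
    `low_percent` percent of frames (an assumed, illustrative metric).
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    avg_fps = 1000.0 * len(frame_times_ms) / sum(frame_times_ms)
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) * low_percent // 100)
    low_fps = 1000.0 / mean(slowest_first[:count])
    return avg_fps, low_fps


# Made-up data: a steady run versus one with occasional 50 ms hitches.
steady = [16.7] * 100
stuttery = [15.0] * 95 + [50.0] * 5

for name, run in (("steady", steady), ("stuttery", stuttery)):
    avg, low = fps_summary(run)
    print(f"{name}: average {avg:.1f} fps, low {low:.1f} fps")
```

Both made-up runs average close to 60 fps, but the one with hitches has a far worse low figure, which is the kind of gap that shows up as microstutter.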

If you're sporting multiple graphics cards, you're unfortunately out of luck: Origins currently won't use more than a single GPU. Considering the CPU limits imposed by the engine, that's probably for the best, and this is one more major release in a growing list where SLI and CrossFire won't do you any good. That may change in the future, but I wouldn't count on it.

Not surprisingly, 4k ultra is mostly out of reach for now. If you want to get 60 fps at 4k, your only real choice is going to be the 1080 Ti, and then use a combination of medium and high settings. Even the GTX 1080 at minimum quality only averages in the mid-50s at 4k. Yes, it will go higher in less demanding areas of the game, but on the whole you'll see plenty of drops below 60.

4k is also the only place where the Vega cards can start to match Nvidia's 1070 and 1070 Ti. Minimum framerates still favor Nvidia hardware, but it shows that when the GPU cores are the major limitation, AMD's hardware holds up pretty well. Either the drivers or the game engine are holding the cards back at lower resolutions and settings.

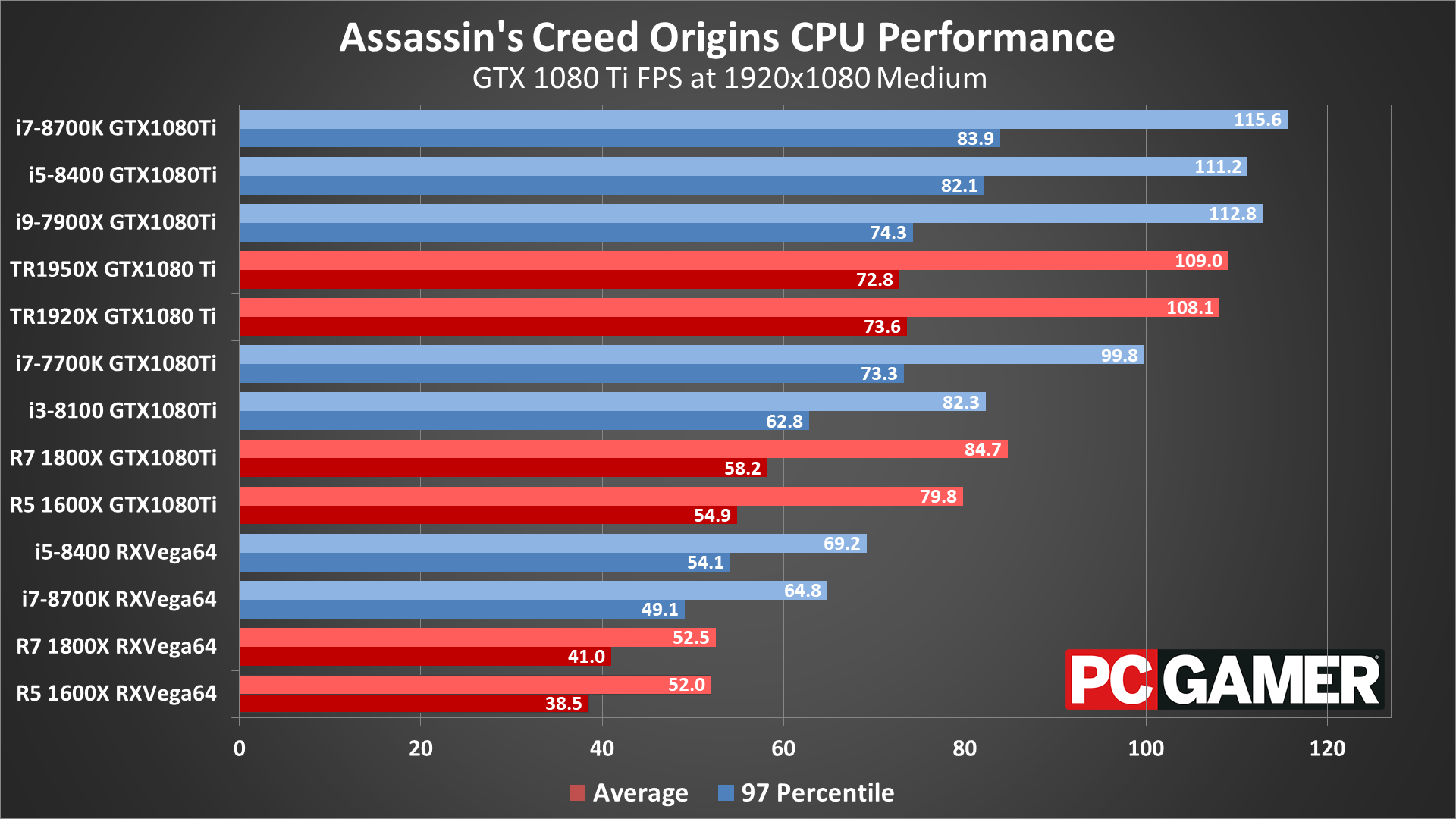

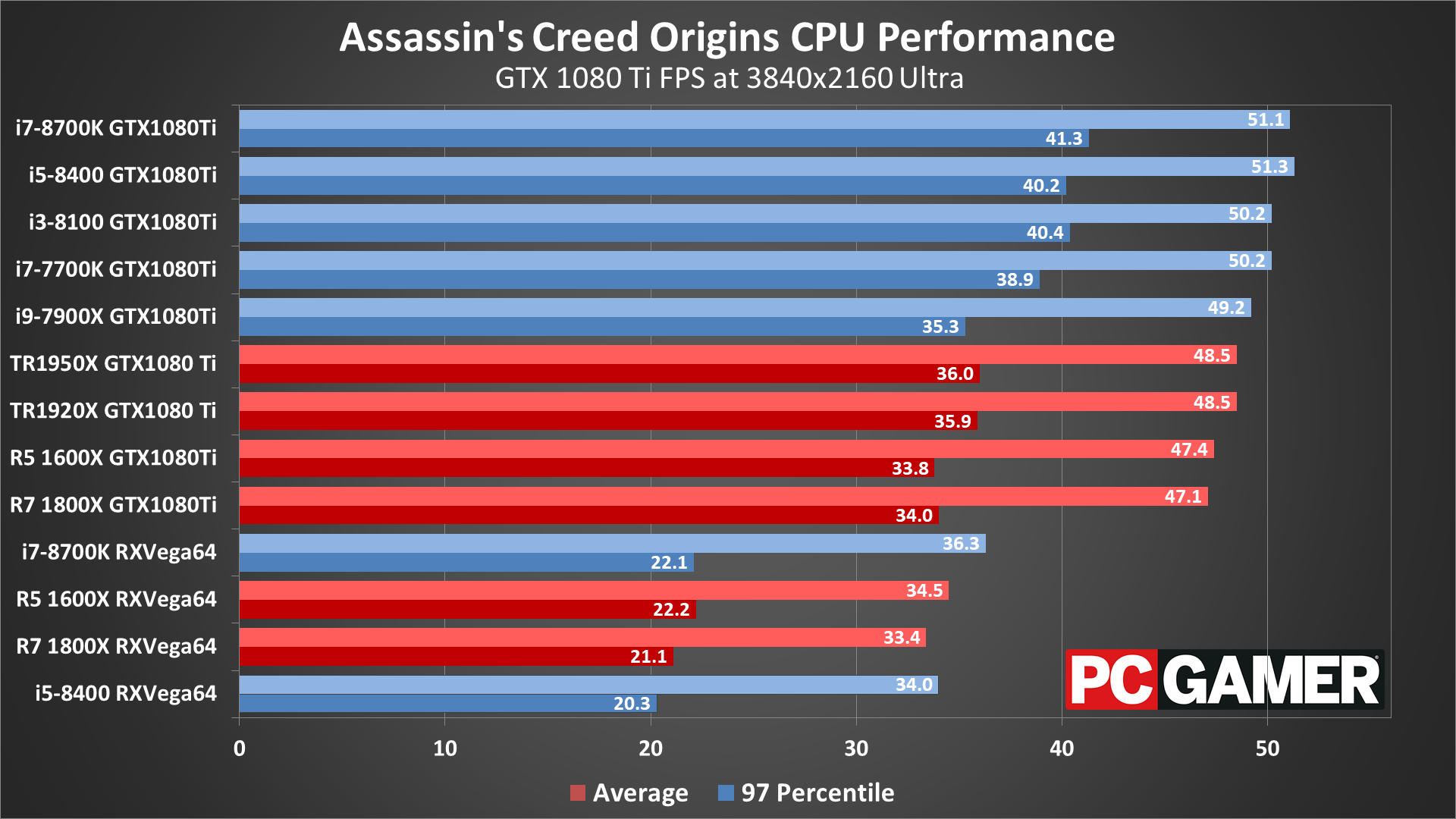

For CPU tests, I ran two different GPUs on several CPUs. I've included a few options with RX Vega 64 to show how CPU performance limits AMD's faster GPUs. But the primary CPU testing, and what I'll focus on here, is done with the GTX 1080 Ti. For the framerate overlays, I've focused on the primary points of interest, the i7-8700K, i3-8100, Ryzen 7 1800X, and Ryzen 5 1600X, running at 1080p ultra.

The 8700K is the fastest gaming CPU in nearly every game I've tested, which isn't too surprising. Ryzen 7 1800X by comparison falls well short of that mark, though it just edges out the new quad-core i3-8100. But what's really interesting is that on the AMD side, the Threadripper 1920X and 1950X actually perform substantially better than the Ryzen 7 1800X.

There aren't many games that will scale beyond 6-core/12-thread, and on Intel CPUs that remains true of Assassin's Creed Origins, but on AMD the Threadripper chips are a solid 25 percent faster than the Ryzen 1800X. It could be that Origins throws around so many threads for AI and physics that Threadripper pulls ahead, or it might be the additional memory bandwidth offered by the platform. Either way, Threadripper owners can at least be happy about gaming performance for a change. Also note that the 1.03 patch allows the game to run on CPUs with more than 24 threads, which wasn't possible with the initial release.

Assassin's Creed Origins settings guide

Desktop PC / motherboards

MSI Aegis Ti3 VR7RE SLI-014US

MSI Z370 Gaming Pro Carbon AC

MSI X299 Gaming Pro Carbon AC

MSI Z270 Gaming Pro Carbon

MSI X370 Gaming Pro Carbon

MSI B350 Tomahawk

The GPUs

GeForce GTX 1080 Ti Gaming X 11G

MSI GTX 1080 Gaming X 8G

MSI GTX 1070 Ti Gaming 8G

MSI GTX 1070 Gaming X 8G

MSI GTX 1060 Gaming X 6G

MSI GTX 1060 Gaming X 3G

MSI GTX 1050 Ti Gaming X 4G

MSI GTX 1050 Gaming X 2G

MSI RX Vega 64 8G

MSI RX Vega 56 8G

MSI RX 580 Gaming X 8G

MSI RX 570 Gaming X 4G

MSI RX 560 4G Aero ITX

Gaming Notebooks

MSI GT73VR Titan Pro (GTX 1080)

MSI GE63VR Raider (GTX 1070)

MSI GS63VR Stealth Pro (GTX 1060)

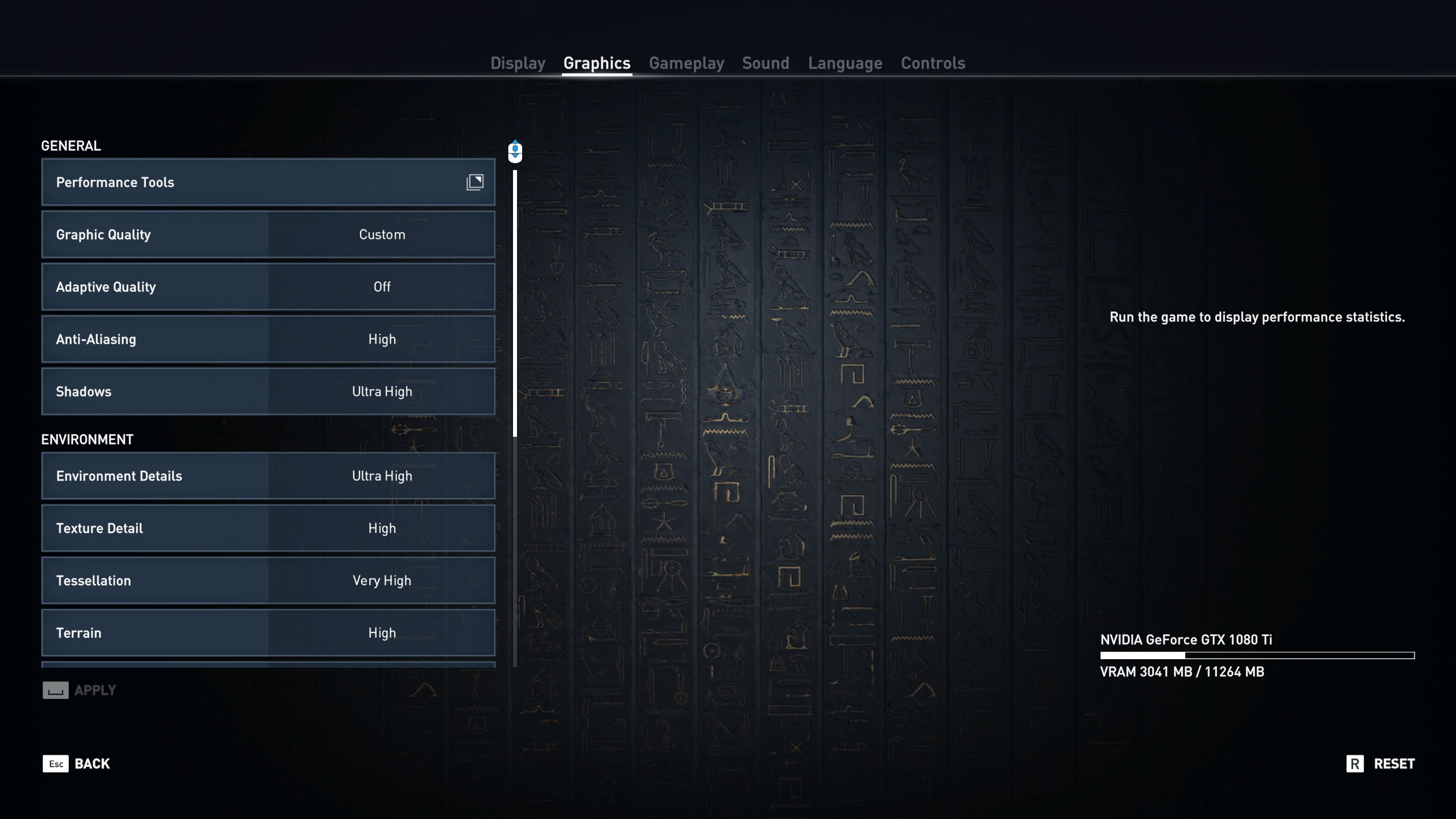

There are 15 settings that can be tweaked that directly affect image quality and help improve performance. There are also several others that are related but affect performance in other ways—like resolution scaling, adaptive quality, v-sync, and the framerate cap. Individually, most of the settings only have a moderate effect on performance, and that's when going from maximum quality to minimum quality. Here are all the major settings, along with the approximate impact on performance (measured with a GTX 1070 and Vega 56).

General Settings

Graphic Quality (Very Low/Low/Medium/High/Very High/Ultra High): a preset that provides a quick way to adjust all the other settings. Very low sets everything to minimum and ultra high sets everything to maximum (and requires the HD texture pack). There's about a 50 percent improvement in performance going from ultra to very low, and up to a 100 percent increase on GPUs with less than 3GB VRAM.

Adaptive Quality (Off/30 FPS/45 FPS/60 FPS): dynamically adjusts anti-aliasing quality to reach the desired framerate if enabled. I test with this off to ensure identical settings for all tested GPUs.

Anti-Aliasing (Off/Low/Medium/High): attempts to remove jaggies, apparently via SMAA and/or FXAA. Disabling improves performance by about four percent.

Shadows (Very Low/Low/Medium/High/Very High/Ultra High): affects the filtering quality and resolution of shadow maps. This is one of the more demanding individual settings, though turning this down still only improves performance by around six percent.

Environment Settings

Environment Details (Very Low/Low/Medium/High/Very High/Ultra High): adjusts the graphical complexity of environmental elements. Going from ultra to very low only improves performance by one percent in testing.

Texture Detail (Very Low/Low/Medium/High): the quality of textures used in the game. If you have enough VRAM, turning this down only improves performance by 1-2 percent, though on cards with less than 3GB it can cause a larger change.

Tessellation (Off/Medium/High/Very High): applies displacement maps to enhance the geometry details of surfaces. Turning this off only improves performance by 1-2 percent.

Terrain (Medium/High): adjusts the detail of the terrain, and using the lower setting improves performance by 4-5 percent.

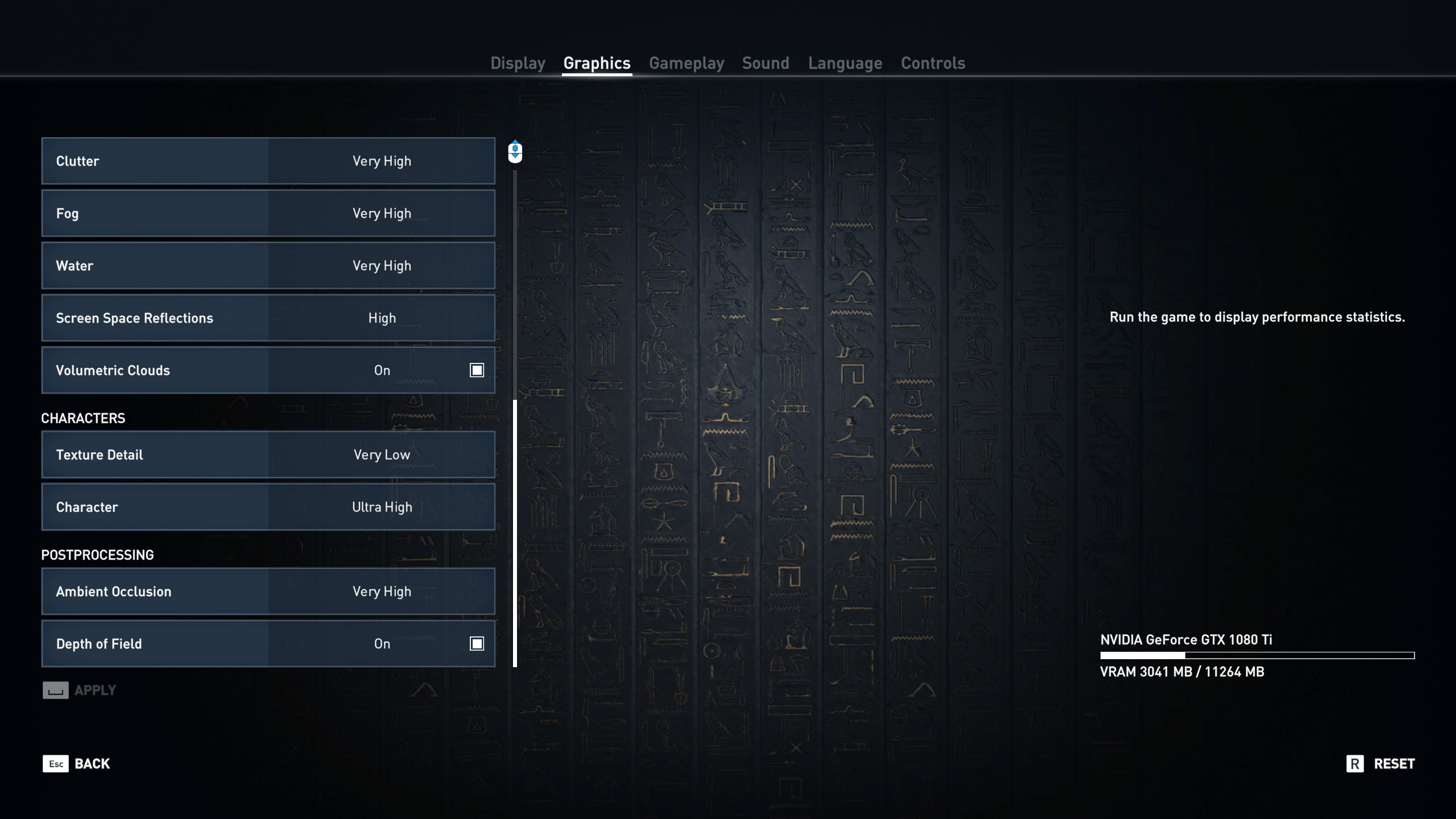

Clutter (Low/Medium/High/Very High): affects the density of various forms of clutter—grass, rocks, and other small objects. Turning this down improves performance by 3-4 percent.

Fog (Medium/High/Very High): adjusts the fog accuracy, with a relatively negligible impact on visuals. Turning this down improves performance by up to five percent.

Water (Low/Medium/High/Very High): adjusts the level of detail of water. Minimum quality only improves performance by 1-2 percent.

Screen Space Reflections (Off/Medium/High): controls the rendering quality of reflections on wet surfaces. I only saw a 1-2 percent change in performance, though in water scenes it might have a bigger impact.

Volumetric Clouds (Off/On): turns the volumetric clouds on or off, with a pretty sizeable five percent change in performance, though the sky looks quite boring with this off.

Characters Settings

Texture Detail (Very Low/Low/Medium/High): adjusts texture quality of character entities (people and animals) in the world. Turning this down causes a negligible 1-2 percent improvement in performance.

Character (Very Low/Low/Medium/High/Very High/Ultra High): level of detail on characters, again with a small one percent impact on performance.

Postprocessing Settings

Ambient Occlusion (Off/High/Very High): a lighting effect that controls the self-shadowing of objects. This is the most demanding setting in the game, along with shadows, and turning it off improves performance by around six percent.

Depth of Field (Off/On): simulates a camera's focus range and blurs out the background. I didn't measure any change in performance with this setting.

AC Origins is a bit interesting in that the combined impact of the settings appears to be the product of the individual settings—meaning, there aren't many 'freebies' that you can leave on with zero change in performance. Of course, most of the settings only cause a minor change in performance, so there's only a limited ability to improve performance. AC Origins ends up being demanding at virtually any setting.
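As a quick sanity check on that "product of the individual settings" observation, the per-setting figures from the list above can be multiplied together. This is only a sketch: I've assumed the midpoint wherever a range is quoted, taken fog's "up to five percent" as five, and the names in the table are just labels.

```python
from math import prod

# Approximate gain (percent) from dropping each setting from max to min,
# taken from the settings list. Midpoints of quoted ranges are an assumption.
gains_percent = {
    "Anti-Aliasing": 4.0,
    "Shadows": 6.0,
    "Environment Details": 1.0,
    "Texture Detail": 1.5,
    "Tessellation": 1.5,
    "Terrain": 4.5,
    "Clutter": 3.5,
    "Fog": 5.0,
    "Water": 1.5,
    "Screen Space Reflections": 1.5,
    "Volumetric Clouds": 5.0,
    "Character Texture Detail": 1.5,
    "Character": 1.0,
    "Ambient Occlusion": 6.0,
    "Depth of Field": 0.0,
}


def combined_gain(percent_gains):
    """Multiply the individual speedups and return the total fractional gain."""
    return prod(1.0 + g / 100.0 for g in percent_gains) - 1.0


total = combined_gain(gains_percent.values())
print(f"Combined gain from all settings: {total:.0%}")
```

With these rough inputs the combined result lands in the neighborhood of the 50 percent improvement measured for the very low preset, which is consistent with the small individual gains compounding.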

In terms of visuals, going from the Very Low to the Low preset yields a good improvement in image quality with a negligible change in performance (about five percent). Similarly, going from Very High to Ultra only drops performance about three percent, so I'm not sure why Ubisoft felt the need to include six presets when two of them are mostly redundant. We would have been fine with Low/Medium/High/Ultra, skipping the Very Low and Very High alternatives.

Are the system requirements accurate?

Ubisoft gives the following minimum and recommended system requirements:

Minimum

- OS: Windows 7 SP1, Windows 8.1, Windows 10 (64-bit versions only).

- Processor: Intel Core i5-2400s @ 2.5 GHz or AMD FX-6350 @ 3.9 GHz or equivalent.

- Video card: NVIDIA GeForce GTX 660 or AMD R9 270 (2048 MB VRAM with Shader Model 5.0 or better).

- System RAM: 6GB.

- Resolution: 720p.

- Video Preset: Lowest.

Recommended

- OS: Windows 7 SP1, Windows 8.1, Windows 10 (64-bit versions only).

- Processor: Intel Core i7-3770 @ 3.5 GHz or AMD FX-8350 @ 4.0 GHz.

- Video card: NVIDIA GeForce GTX 760 or AMD R9 280X (3GB VRAM with Shader Model 5.0 or better).

- System RAM: 8GB.

- Resolution: 1080p.

- Video Preset: High.

Based on my results from the GTX 770 and R9 380, I'm not sure a GTX 660 or R9 270 will consistently hit 30 fps. For the recommended specs, the GTX 760 is typically around 25 percent slower than the GTX 770, while the R9 280X is about equal to the R9 380 I tested. Those are only pulling around 40 fps at 1080p medium, so 1080p high is a stretch, but they should do fine for 30 fps with a few tweaks.
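The estimate for the GTX 760 is simple scaling, sketched below. The function name is hypothetical, the 40 fps baseline is the rough figure quoted above, and the 15 to 25 percent gap is the range mentioned earlier in the article. This is an estimate, not a measured result.

```python
def scaled_fps(reference_fps: float, percent_slower: float) -> float:
    """Estimate fps for a card that is `percent_slower` than a tested card."""
    return reference_fps * (1.0 - percent_slower / 100.0)


gtx_770_1080p_medium = 40.0  # "around 40 fps", approximate

# The GTX 760 is quoted as roughly 15 to 25 percent slower than the GTX 770.
for percent_slower in (15, 25):
    estimate = scaled_fps(gtx_770_1080p_medium, percent_slower)
    print(f"{percent_slower}% slower: about {estimate:.0f} fps at 1080p medium")
```

That puts the GTX 760 at roughly 30 to 34 fps at 1080p medium, which is why 1080p high at 30 fps looks like a stretch without lowering a few settings.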

For the CPU side, an i3-8100 is a significant bottleneck on faster GPUs, but only if you're shooting for 60+ fps. If you're only going for 30 fps, just about any decent CPU should suffice. I'd be concerned with older 2-core/4-thread Core i3 parts, but they should still be able to get 30 fps.

My personal recommendation for AC Origins would be at least a GTX 1060 6GB. Until and unless the performance improves on AMD hardware, it's going to be less desirable in AC Origins. For 1440p gaming, the GTX 1070 Ti and the existing 1070 and 1080 cards will be your weapons of choice, assuming you can't manage to splurge on the 1080 Ti. And for your CPU, 6-core processors like the i5-8400 help if you have a top-shelf GPU, but you can still get decent framerates from a 4-core/4-thread CPU like the i3-8100 or the previous generation i5-7400.

What about this DRM business?

There have also been reports circulating that DRM is causing the high CPU utilization, based on statements made by the cracker/pirate Voksi. Ubisoft denies those claims, and without an actual cracked version it's impossible to say one way or the other. AC Origins has not yet been cracked after more than two weeks, so the VMProtect + Denuvo approach is succeeding in that respect. But Voksi has offered 'proof' that DRM calls are made during character movement, which causes a heavy CPU load.

Again, it's impossible to measure the actual performance impact without a working crack, but what I've seen is that CPU utilization jumps way up in the cities, particularly if you're using a fast GPU. CPU utilization also drops substantially if you're using a slower GPU.

In other words, calculating all the AI, physics, graphics, etc. consumes a lot of CPU time. It's not character movement but rather framerate and location that largely determine CPU load. Out in the desert, framerates are substantially higher, and CPU utilization is lower, which again indicates that it is in fact AI and graphics calculations causing the CPU spikes and not some unrelated DRM calculations.

If you look at the CPU performance results above, clearly quad-core processors can be fully utilized in AC Origins. I saw consistent 100 percent CPU load on 4-core/4-thread and 4-core/8-thread CPUs, and even the 6-core/6-thread i5-8400 is close to 100 percent load in the cities.

Running at 100 percent CPU utilization isn't inherently bad—it used to be that all games would peg your CPU at 100 percent, back before we had quad-core and higher CPUs. It just means doing other stuff (livestreaming, or watching Twitch on a secondary display) will need more cores/threads than you're used to. It also means you might see occasional dips in performance if a background task kicks off. If you want a consistent 60+ fps in AC Origins, you'll want a fast graphics card and a fast CPU.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.