Cache is king when it comes to designing the gaming CPUs of the next 20 years

Facing a power wall and the limits of physics, chip makers are in a constant battle to re-engineer and re-evaluate how they build a better CPU.

This article was originally published on June 30th this year and we are republishing it today as part of a series celebrating some of our favourite pieces of the past 12 months.

If you remove the heat spreader from a processor, clean it up, and use specialised camera equipment, you'll see some spectacular silicon staring back at you. Each individual section of the processor is a pivotal part in delivering performance, and the number of key bits inside that processor has grown exponentially over the past half century.

Take the Intel 4004: the highly influential microprocessor that supercharged the modern era of computing in 1971. It has 2,300 transistors. Today's CPUs count transistors by the billion. Some server chips contain as many as 70–80 billion, making up double-digit core counts, huge caches, and rapid interconnects, as do some of the largest GPUs.

Beyond simply 'bigger', it's near enough impossible to picture what a CPU might look like in another 50 years' time. But there are some ideals that electrical engineers are always chasing, and if we understand what those are, we might be able to better understand what a CPU needs to be to match the world's ever-growing computing requirements.

So, to find out, who better to speak to than the engineers and educators working on this very topic?

"Ideally, a CPU should have high processing speed, infinite memory capability and bandwidth, low power consumption and heat dissipation, and an affordable cost," Dr. Hao Zheng, Assistant Professor of Electrical and Computer Engineering at the University of Central Florida, says.

"However, these objectives are competing with each other, so it is unlikely to be achieved at the same time."

I like how Carlos Andrés Trasviña Moreno, Software Engineering Coordinator at CETYS Ensenada, puts it to me: "It's hard to think of a CPU that can be an 'all-terrain', since it's very dependent on the task at hand."

When it comes to the task we care most about, gaming, a CPU has to be fairly generalist. We use a processor for gaming, sure, but video editing, 3D modelling, encoding, running Discord—these are all different workloads and require a deft touch.

"There are a lot of specs that matter in a CPU, but probably the ones that most people look for in gaming are clock speed and cache memory," Moreno explains.

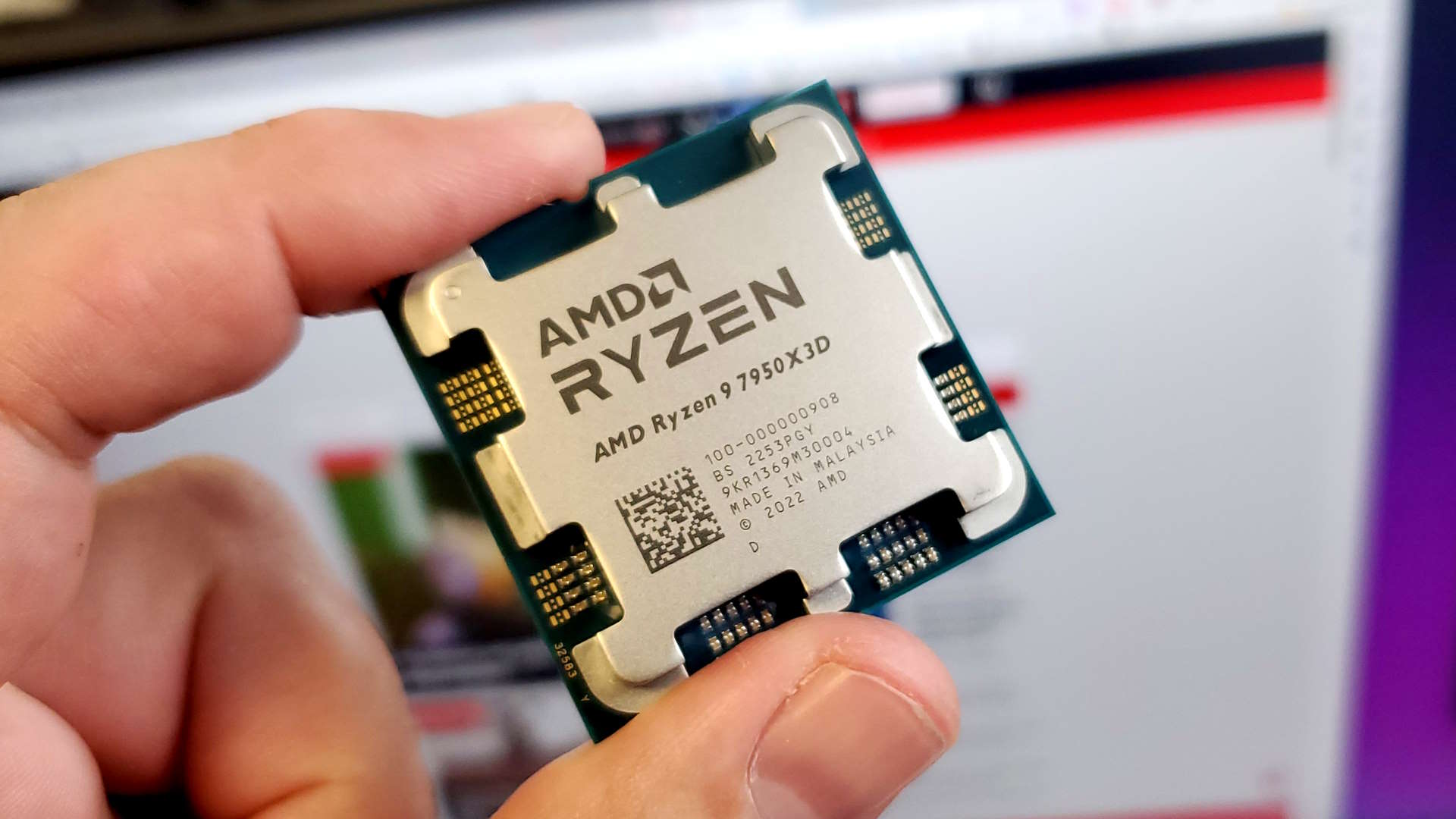

Makes sense, right? Intel has the fastest gaming processor available today, the 6GHz Core i9 13900KS, and AMD offers the largest L3 cache with the Ryzen 9 7950X3D. Neither is a slouch. You might assume, then, that a chip 20 years from now will have exponentially higher clocks and cache sizes.

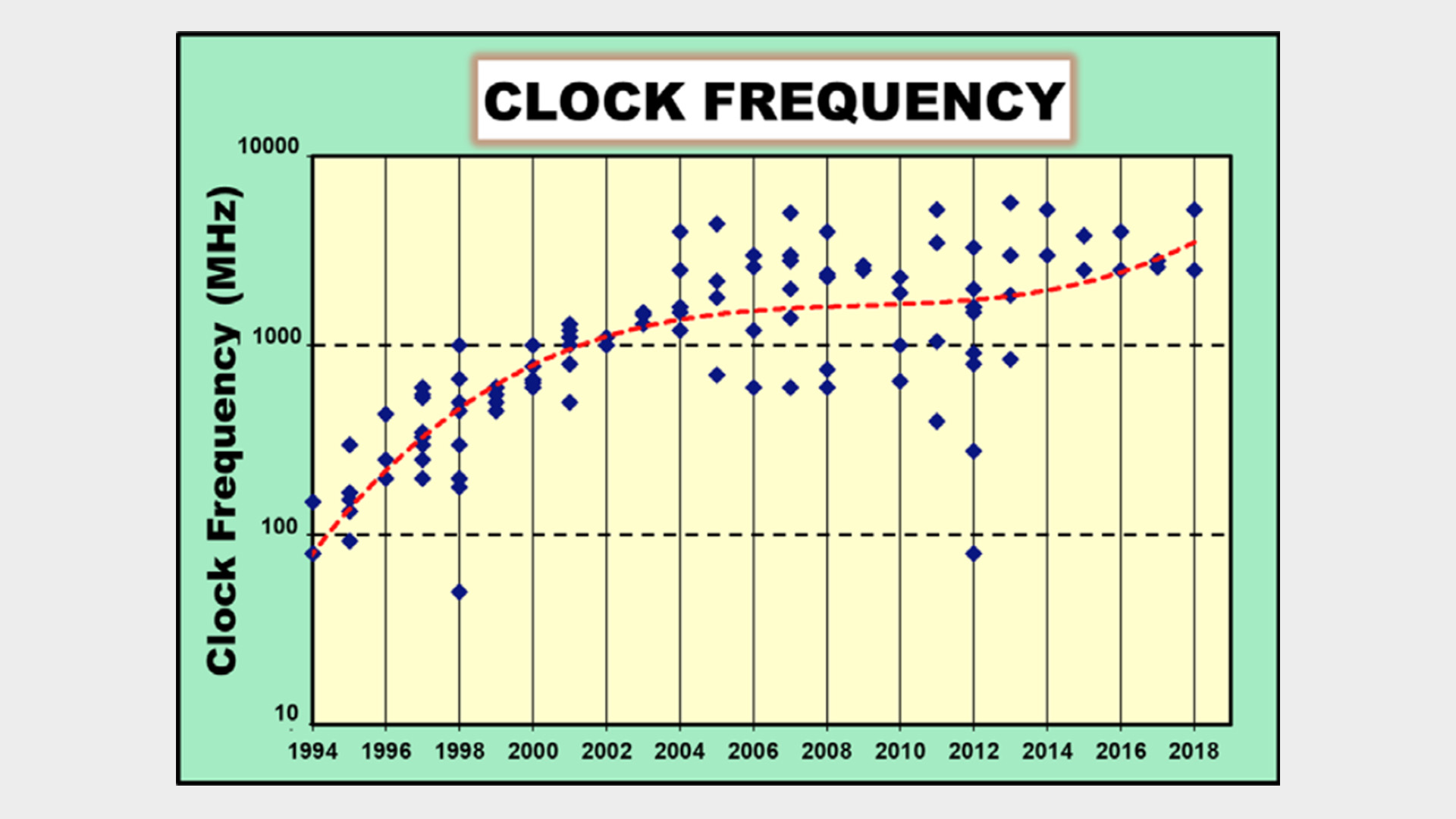

That's not strictly true. According to the IEEE's International Roadmap for Devices and Systems 2022 Edition, a yearly report on where the entire semiconductor and computing industry is headed, there's a hard limit on clock speed of around 10GHz. Push past that point and you run into problems dissipating the heat generated by the power surging through the chip at any one time. You hit a power wall. Or you do with silicon, anyway, and you can read more on that in my article on silicon's expiration date.

Right now, our answer to that encroaching problem is to deal with the heat a processor generates by using bigger, better coolers.

We're already seeing that have an effect on how we build gaming PCs. Wattage demands have generally increased over the years—the Core i9 13900KS, a particularly thirsty chip, can ask for 253W from your power supply. To put that into perspective, Intel's best non-X-series desktop chip from 2015 had a TDP (thermal design power) of 91W and would sap around 100W under load. So nowadays we need liquid coolers, or at the very least very large air coolers, to sufficiently cool our chips.

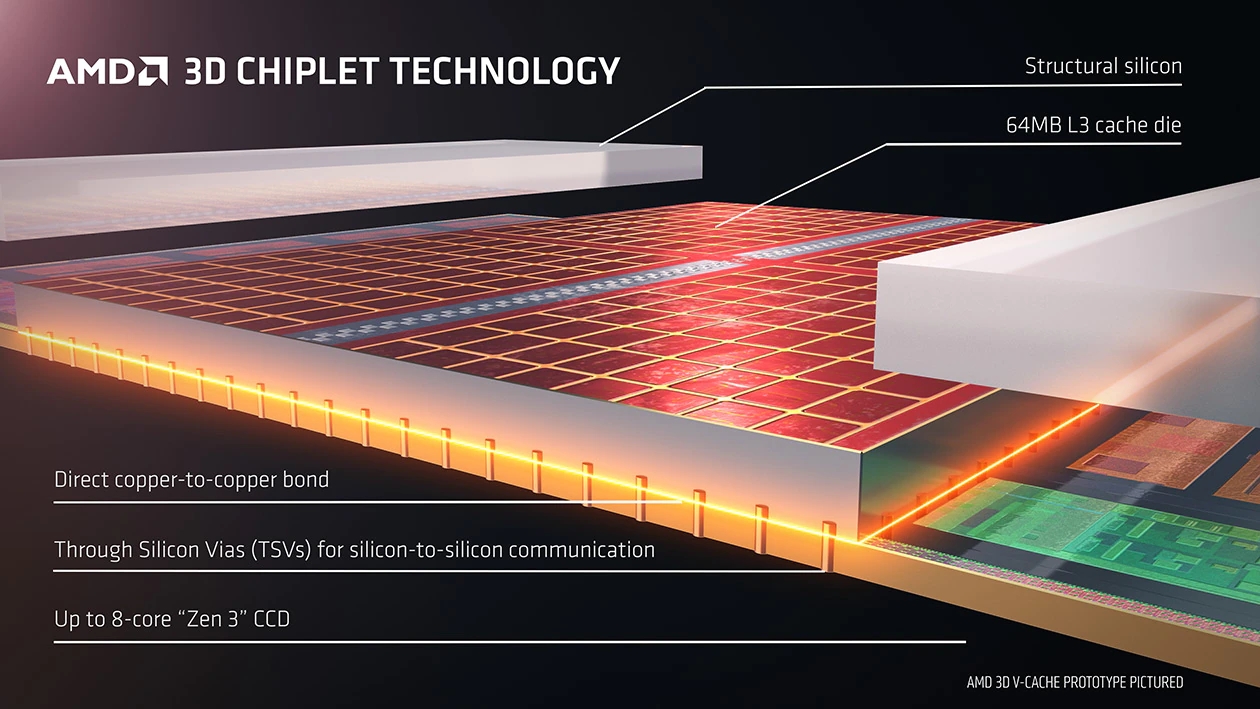

Not every chip is rubbing up against the power wall just yet. AMD's Ryzen 7 7800X3D, replete with cache, only requires around 80W under load, thanks to a chiplet architecture built on a cutting-edge process node. That's the thing: there are ways around this problem, but they rely on close collaboration between chip designers and chip manufacturers, and on technology at the bleeding edge of what's possible.

But generally, demands for heat dissipation are going up, and nowhere feels the burn more than datacenters and high-performance computing, which is a more boring way of saying supercomputing. Record-breaking supercomputers are being liquid-cooled to deal with the heat thrown out by massively multi-core processors. And it's a real problem. Intel reckons up to 40% of a datacenter's energy consumption goes to cooling alone, and it's desperately seeking alternatives to active air and liquid cooling solutions, which largely rely on fans and require lots of juice.

One alternative is to stick datacenters in the ocean. Or on the moon, though that last one is more theoretical than practical today. In the meantime, the main alternative is immersion cooling, which cuts bills by putting components in direct contact with liquid, transferring heat far more efficiently than air ever could. It's a truer form of liquid cooling.

I spoke to Dr. Mark Dean back in 2021 about this very subject. Dean is an IBM fellow and researcher who not only worked on the very first IBM PC, but was also part of the team that cracked 1GHz on a processor for the very first time. This is someone who knows all too well what happens when you hit a power wall.

"I mean it's amazing what they're having to do, and not just the main processor, but the graphics processor is also watercooled. And so I'm thinking, 'Okay, wow, that's kind of a brute force approach.'

"I thought we will have to go a different way. Because we always thought that, at some point, we were not going to be able to continue to increase the clock rate."

Clock speed has long been the headline milestone for processor advancements, but its importance has been waning. As it turns out, clock speed alone isn't the answer we need for better performance.

"Those are all bandaids, to be honest," said Dean.

Cache cache, baby

For the future of gaming CPUs, there's one thing that every engineer I spoke to agrees is extremely important: cache. The closer you can get the data required by the processing core to the core itself, the better.

"...most people fall into the trap of thinking that a higher frequency clock is the solution to obtain higher FPS, and they couldn’t be more wrong," Moreno says.

"Since GPUs tend to do most of the heavy lifting these days, it’s important for CPUs to have a fast cache memory that allows for instructions to be fetched as fast as they are demanded."

It's about where your bottleneck exists. A CPU's role in playing a game isn't to create an image. That's what your GPU does. But your GPU isn't smart enough to process the engine's instructions. If your GPU is running at 1000 frames per second, your CPU (and memory) has to be capable of delivering instructions at that same pace. It's a frantic pace, too, and if your CPU can't keep up then you reach a bottleneck for performance.
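To put numbers on that bottleneck, here's a minimal Python sketch of a deliberately simplified model: it assumes the CPU prepares the next frame while the GPU renders the current one, so the frame rate is capped by whichever stage takes longer. The `frame_rate` function and the millisecond figures are illustrative assumptions, not measurements from any real game or chip.

```python
def frame_rate(cpu_ms: float, gpu_ms: float) -> tuple[float, str]:
    """Frames per second in a simple two-stage pipeline.

    Assumes CPU and GPU work on different frames in parallel, so throughput
    is set by the slower stage. Ties are reported as GPU-bound.
    """
    slowest_ms = max(cpu_ms, gpu_ms)
    limiter = "CPU" if cpu_ms > gpu_ms else "GPU"
    return 1000.0 / slowest_ms, limiter


# At 1000 fps, each frame gets a budget of just 1 ms.
print(f"frame budget at 1000 fps: {1000.0 / 1000:.1f} ms")

# Hypothetical timings: a CPU that needs 1.5 ms per frame drags a 1 ms GPU down.
fps, limiter = frame_rate(cpu_ms=1.5, gpu_ms=1.0)
print(f"{fps:.0f} fps, limited by the {limiter}")

# Speed the CPU up to 0.8 ms and the GPU becomes the limit again.
fps, limiter = frame_rate(cpu_ms=0.8, gpu_ms=1.0)
print(f"{fps:.0f} fps, limited by the {limiter}")
```

Real engines overlap work in messier ways, but the principle holds: a faster GPU buys nothing if the CPU can't hand over each frame within the budget.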

In order to keep up with demand, your CPU needs to store and fetch instructions somewhere close by. In an ideal world, your processing cores would have every instruction they need at any given time stored in the closest, fastest cache. That's the L1 cache, or even an L0 cache in some designs. If the data isn't there, the CPU looks in the second-level cache, L2, and then further out in the L3 cache.

If what the core needs isn't in any of those local caches, it has to go out to system memory, or RAM, which is a galaxy away from the processing core at a nanoscale level. When that happens, performance gets sluggish in applications that require low latency, so you want to avoid it as much as possible.
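That lookup order can be sketched in a few lines of Python. This is a toy model, assuming each level simply holds a set of addresses and has a single fixed latency; the cycle counts are hypothetical round numbers chosen for illustration, and real caches track lines, sets, and replacement policies that this ignores.

```python
from dataclasses import dataclass, field


@dataclass
class CacheLevel:
    name: str
    latency_cycles: int  # hypothetical load-to-use latency, not a real chip's figure
    contents: set[int] = field(default_factory=set)


RAM_LATENCY_CYCLES = 300  # hypothetical: main memory is far slower than any cache


def lookup(levels: list[CacheLevel], address: int) -> tuple[str, int]:
    """Check each cache from closest to farthest; fall back to RAM on a full miss."""
    for level in levels:
        if address in level.contents:
            return level.name, level.latency_cycles
    return "RAM", RAM_LATENCY_CYCLES


hierarchy = [
    CacheLevel("L1", 4, {0x10, 0x20}),
    CacheLevel("L2", 14, {0x10, 0x20, 0x30}),
    CacheLevel("L3", 50, {0x10, 0x20, 0x30, 0x40}),
]

for addr in (0x10, 0x30, 0x40, 0x99):
    name, cycles = lookup(hierarchy, addr)
    print(f"address {addr:#x}: served by {name} in ~{cycles} cycles")
```

Every step outward costs more time, which is why keeping the right data in the closest level matters so much.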

If L1 cache is so crucial to performance, why not just make it bigger?

"Well, using the current computer architecture, that would mean that we would increase the latency at that level and we would, thus, end up making a slower CPU," Moreno says.

"There is where the real challenge awaits for computer architects, creating bigger L1 cache memory with as low latency as achievable, but it requires to change the whole paradigm of what has been done so far. It’s a subject that has been researched for several years, and is still one of the key areas of research for the CPU giants."
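Moreno's point can be illustrated with the textbook average memory access time (AMAT) formula, where each level's hit time is paid every time it's checked and its miss rate decides how often you pay for the next level out. The sketch below uses made-up hit times and miss rates (assumptions, not figures for any real CPU) to compare a small, fast L1 against a bigger one that takes two extra cycles to access.

```python
def amat(levels: list[tuple[float, float]], memory_latency: float) -> float:
    """Average memory access time in cycles.

    levels: (hit_time_cycles, miss_rate) pairs, ordered from L1 outward.
    Works from RAM inward: each level costs its hit time, plus its miss
    rate times the expected cost of everything further out.
    """
    cost = memory_latency
    for hit_time, miss_rate in reversed(levels):
        cost = hit_time + miss_rate * cost
    return cost


# Hypothetical L2 and L3 shared by every configuration, plus RAM latency.
OUTER_LEVELS = [(10, 0.40), (36, 0.30)]
RAM_CYCLES = 250

small_l1 = amat([(4, 0.10)] + OUTER_LEVELS, RAM_CYCLES)
big_l1_modest_gain = amat([(6, 0.07)] + OUTER_LEVELS, RAM_CYCLES)
big_l1_large_gain = amat([(6, 0.05)] + OUTER_LEVELS, RAM_CYCLES)

print(f"small, fast L1:          {small_l1:.2f} cycles")
print(f"bigger L1, 7% miss rate: {big_l1_modest_gain:.2f} cycles")
print(f"bigger L1, 5% miss rate: {big_l1_large_gain:.2f} cycles")
```

With these numbers, the bigger L1 only wins if it cuts misses enough to pay for its extra latency, which is the balancing act Moreno describes.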

You can be sure that cache-side improvements will continue to be important to gaming CPUs for many years. Not only is that abundantly clear from AMD's recent 3D V-Cache processors, but I've got it straight from the source at Intel, too. Marcus Kennedy, general manager for gaming at Intel, tells me we're going to see the company continue to push larger caches for its processors.

"I think more cache, locally, you're going to continue to see us pushing there. There's an upper bound, but you're going to continue to see that. That's going to help drive lower latency access to memory, which will help for the games that respond well to that."

In a similar sense, more cores with more cache close by also helps solve the problem, provided your software can leverage it. Essentially, you keep each cache as low-latency as possible by retaining a smaller footprint close to each core, while increasing the number of cores.

And to this end, Kennedy foresees core and thread counts rising over the next half decade of development.

"Could you leverage 32 threads? Yeah, we see that today. That's why we just put them out," Kennedy says. "Could you leverage 48? In the next three to five years? Yeah, I could absolutely see that happening. And so I think the biggest thing you'll see is differences in thread count.

"We put more compute power through more Performance cores, more Efficient cores, in the SoC (system-on-chip), so that you don't have to go further out in order to go get that. We put all of that there as close as possible."

But a limit will be reached at some point, "And that limit is on how much power you can draw, or how much money you can charge," Kennedy tells me.
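Power and price aren't the only ceilings, either. Extra threads only help if the software can use them, and a standard way to reason about that is Amdahl's law: if a fraction p of a workload can run in parallel, the best-case speedup on n threads is 1 / ((1 - p) + p / n). Here's a short Python sketch; the 90% parallel fraction is an assumption for illustration, not a measured figure for any game.

```python
def amdahl_speedup(parallel_fraction: float, threads: int) -> float:
    """Ideal speedup over one thread when only part of the work parallelises."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / threads)


P = 0.90  # hypothetical: 90% of the per-frame work can run in parallel

for n in (8, 16, 32, 48):
    print(f"{n:2d} threads: {amdahl_speedup(P, n):.2f}x")

# No matter how many threads you add, the serial 10% caps speedup at 10x.
print(f"ceiling: {1 / (1 - P):.1f}x")
```

Going from 32 to 48 threads still helps in this model, but by less each time, which is one reason extra cores only pay off when the software is written to use them.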

That's the thing. You could build a processor with 128 cores and heaps of cache, and have it run reasonably quickly with immersion cooling, but it would cost a lot of money. In some ways we have to be reasonable about what's coming next for processors: what do we need as gamers, and what's just overkill?

Transistor timeline: decades of bigger, better chips

I know, it's tough for a PC gamer to get their head around (overkill, who, me?), but frankly cost plays a huge role in CPU development. There's a whole law about it: Moore's Law, named after the late Intel co-founder Gordon Moore. And before you say that's about fitting twice as many transistors into a chip every two years, that's not strictly true. It began as an observation that the complexity of a chip "for minimum component costs" was doubling every 12 months.

"That's really what Moore's Law was, it was an economic law, not really a performance law," Kennedy says. "It was one that said, as you scale the size of the transition gates down, you can make a choice as to whether you take that scale through dollars, or whether you take that scale through performance."

Moore's Law has remained a surprisingly decent estimate of the growth of semiconductors since the '60s, but it has been revised a few times since. Nowadays it's said to mark the doubling of transistors every two years, which takes the pressure off. Laws generally don't change, either, and that's led to disagreements over the concept as a whole.

Moore's Law has become a hotly-debated topic. Nvidia and Intel's CEOs fall on different sides as to whether it's still alive and well today or dead and buried, and there'll be no prizes for guessing who thinks what. For the record, it's Nvidia's CEO Jensen Huang that says Moore's Law is dead.

Look at the chart above, which logs the number of transistors per microprocessor using data from Karl Rupp, however, and you might side with Intel CEO Pat Gelsinger.

If we did use Moore's Law to snatch a rough number out of the ether, assuming there are no wildly massive breakthroughs or terrible disasters for the chipmaking industry along the way, and using Apple's M2 Ultra as our current-day benchmark at 134 billion transistors, we can estimate that a microprocessor 20 years from today could feature as many as 137 trillion transistors.
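The back-of-the-envelope sum works out like this: 20 years at one doubling every two years is ten doublings, or a factor of 2^10 = 1,024. A minimal Python version, where `project_transistors` is just a hypothetical helper for this arithmetic:

```python
def project_transistors(today: float, years: float, doubling_period_years: float = 2.0) -> float:
    """Naive Moore's Law projection: the count doubles once per period."""
    return today * 2 ** (years / doubling_period_years)


M2_ULTRA_TRANSISTORS = 134e9  # Apple M2 Ultra, used as today's benchmark

estimate = project_transistors(M2_ULTRA_TRANSISTORS, years=20)
print(f"{estimate / 1e12:.1f} trillion transistors")  # 137.2 trillion transistors

# The original pace (doubling every 12 months) gives a wildly bigger number,
# showing how sensitive the projection is to the assumed doubling period.
print(f"{project_transistors(M2_ULTRA_TRANSISTORS, 20, 1.0) / 1e12:,.0f} trillion")
```

The projection is only as good as the doubling period you feed it, which is exactly why the debate over whether Moore's Law still holds matters.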

But is that necessarily going to happen? Never doubt engineers, but the odds are stacked against ever-growing transistor counts, and it comes down to the atomic structure of a transistor. In theory, even with a single-atom-thick layer of carbon atoms, a gate can only be shrunk so far, perhaps to around 0.34nm, if that, before it no longer functions. A silicon atom is only so big, around 0.2nm, and ever-smaller transistors can fail due to quantum tunnelling: in other words, an electron zipping right through the supposed barrier.
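To get a feel for why tunnelling bites so hard at these scales, a crude textbook estimate for an electron hitting a rectangular energy barrier gives a transmission probability of roughly exp(-2κd), where d is the barrier width and κ depends on the barrier height. The Python sketch below assumes a 1 eV barrier and the free-electron mass; both are simplifying assumptions, and real devices need far more careful modelling.

```python
import math

HBAR = 1.054571817e-34            # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # free-electron mass, kg (real materials use an effective mass)
JOULES_PER_EV = 1.602176634e-19


def tunnelling_probability(barrier_nm: float, barrier_ev: float = 1.0) -> float:
    """Approximate transmission through a rectangular barrier: T ~ exp(-2 * kappa * d)."""
    kappa = math.sqrt(2 * ELECTRON_MASS * barrier_ev * JOULES_PER_EV) / HBAR  # decay constant, 1/m
    return math.exp(-2 * kappa * barrier_nm * 1e-9)


for width_nm in (2.0, 1.0, 0.5, 0.34):
    print(f"{width_nm:.2f} nm barrier: T ~ {tunnelling_probability(width_nm):.1e}")
```

In this simple model, halving the barrier from 1nm to 0.5nm makes tunnelling roughly 170 times more likely, because the probability grows exponentially as the barrier thins.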

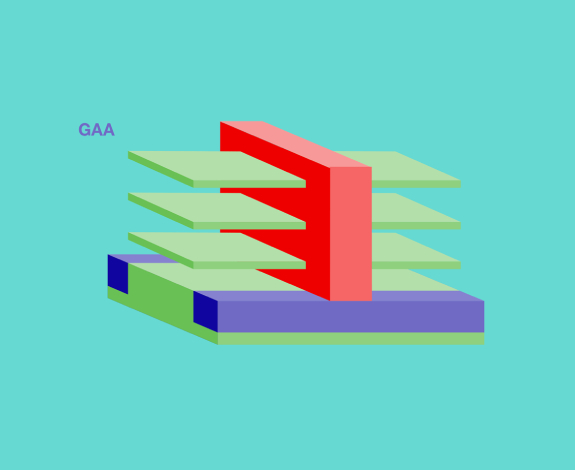

Of course, there are ways to delay the inevitable, most of which have been in the works for decades. We're about to hit a big milestone with Gate-All-Around FET (GAAFET, which Intel calls RibbonFET), a transistor design that can be shrunk further than current FinFET designs without leaking, and which should keep the computing world running for a while longer yet. Phew.

Ultimately, we can't use Moore's Law to determine the exact number of transistors in a chip 20 years from now. With Moore's Law not really being a law, physics not being on our side, and growing costs and difficulties in developing new lithographic process nodes, our magical crystal ball into the future of CPUs has been muddied.

Another concept that was once quite handy for future-gazing but isn't so much now is Dennard scaling. This was the general idea that as transistors shrank, their voltage and current would scale down with them, keeping power density roughly constant, so chipmakers could pack more transistors into a given space and run them at higher frequencies without the chip getting hotter. But as I mentioned before, that push for higher and higher frequencies already hit a power wall, back in the early 2000s.
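The arithmetic behind Dennard scaling is compact enough to sketch. A transistor's dynamic power is roughly C × V² × f (capacitance, voltage, frequency). Shrink linear dimensions by a factor k and, ideally, C and V fall by k, f rises by k, and area falls by k², so power per unit area stays flat. The Python below, with its hypothetical helper `power_density_ratio`, compares that ideal against what happens once voltage stops scaling; it leaves out leakage current entirely.

```python
def power_density_ratio(k: float, scale_voltage: bool = True) -> float:
    """Power per unit area after a shrink by linear factor k, relative to before.

    Uses dynamic power ~ C * V**2 * f, with C -> C/k, f -> f*k, area -> area/k**2,
    and V -> V/k only if scale_voltage is True.
    """
    capacitance = 1 / k
    voltage = 1 / k if scale_voltage else 1.0
    frequency = k
    area = 1 / k**2
    return (capacitance * voltage**2 * frequency) / area


K = 1.4  # roughly a 0.7x linear shrink per process generation

print(f"ideal Dennard scaling: {power_density_ratio(K):.2f}x power density")
print(f"voltage stuck:         {power_density_ratio(K, scale_voltage=False):.2f}x power density")
```

Once voltage can't keep falling, every shrink that also raises clocks concentrates more heat into the same area, which is the power wall in a nutshell.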

We're in a post-Dennard scaling world, people.

"In recent years, with the slowing of Moore's Law and the end of Dennard Scaling, there has been a shift in computing paradigms towards high-performance computing. Clock speed is no longer an effective means of improving CPU performance," Dr. Zheng says.

"The performance gains of gaming CPUs mostly come from parallelism and specialization."

That's the key takeaway here, and it goes back to the idea that no one CPU can be "all-terrain". A CPU has to be adaptable to how it's going to be used, and so as we reach more and more physical limits, be they clock speed or cache size, it's specialisation within the silicon that will bring the big changes.

One flexibility afforded to modern CPU manufacturers comes in how they connect all their cores, and even discrete chips, together. The humble interconnect has become a key way through which the CPUs of today and the future will continue to provide more and more performance.

With a high-bandwidth, compact, and power-savvy interconnect, a chip can be tailored to a user without requiring a million and one different discrete designs or everything stuffed into a single mammoth monolithic design. A monolithic chip can be expensive and tricky to manufacture, not to mention that we're already reaching the maximum size of a chip, set by something called the reticle limit. A multi-chip design, by contrast, can use smaller dies and is far more flexible.
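One reason smaller dies are cheaper is yield. A common first-order model (the Poisson yield model) says the chance a die has no defects falls exponentially with its area. The Python sketch below compares one hypothetical 600mm² monolithic die against four 150mm² chiplets, assuming an invented defect density and that chiplets are tested and discarded individually before packaging; it ignores packaging, interconnect, and wafer-edge costs, which eat into the chiplet advantage in practice.

```python
import math

# Standard full-field scanner exposure: 26 mm x 33 mm = 858 mm^2.
RETICLE_LIMIT_MM2 = 26 * 33


def poisson_yield(die_area_mm2: float, defects_per_mm2: float) -> float:
    """Probability that a die of the given area has zero defects."""
    return math.exp(-die_area_mm2 * defects_per_mm2)


def silicon_per_good_product(die_area_mm2: float, dies_per_product: int, defects_per_mm2: float) -> float:
    """Wafer area (mm^2) consumed per working product, assuming defective dies are discarded."""
    return dies_per_product * die_area_mm2 / poisson_yield(die_area_mm2, defects_per_mm2)


DEFECT_DENSITY = 0.001  # hypothetical: 0.1 defects per cm^2

monolithic = silicon_per_good_product(600, 1, DEFECT_DENSITY)
chiplets = silicon_per_good_product(150, 4, DEFECT_DENSITY)

print(f"reticle limit: {RETICLE_LIMIT_MM2} mm^2")
print(f"monolithic 600 mm^2 die: ~{monolithic:.0f} mm^2 of wafer per good chip")
print(f"4 x 150 mm^2 chiplets:   ~{chiplets:.0f} mm^2 of wafer per good chip")
```

Under these assumptions the chiplet route wastes far less silicon per working chip, because a single defect only scraps a small die rather than the whole thing.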

So, about that gaming CPU 20 years from now. Such a thing has got to be cache-heavy, low-latency, stuffed with cores, and probably chiplet-based.

I guess I did see that one coming from the get-go. But go further into the future than that, and you're looking beyond silicon, maybe even beyond classical computing, at much more experimental concepts. Yet something much closer to reality that could change the future of gaming CPUs is something you're sure to already be familiar with today: cloud gaming.

Jacob earned his first byline writing for his own tech blog. From there, he graduated to professionally breaking things as hardware writer at PCGamesN, and would go on to run the team as hardware editor. He joined PC Gamer's top staff as senior hardware editor before becoming managing editor of the hardware team, and you'll now find him reporting on the latest developments in the technology and gaming industries and testing the newest PC components.