Silicon won't run our gaming PCs forever but it's far from finished

The sheer mass of manufacturing might behind silicon will keep it thriving for a long time even if there are better options out there.

This article was originally published on June 30th this year, and we are republishing it today as part of a series celebrating some of our favourite pieces we've written over the past 12 months.


My favourite explanation of what a processor really is comes from a tweet from daisyowl, way back in 2017. It said, "if you ever code something 'that feels like a hack but it works,' just remember that a CPU is literally a rock that we tricked into thinking." Followed up by: "not to oversimplify: first you have to flatten the rock and put lightning inside it."

A great summary, but you also need the right type of rock. That would be a semiconductor, a material that sits somewhere between a conductor and an insulator and can be coaxed into behaving like either. The one we've found best suited to the job, thanks to its abundance, stability, and atomic structure, is silicon.

It's not really a rock, either, but a metalloid (something between a metal and a non-metal) found in rocks, and I think that still counts.

Silicon has seen us through the past half century of processors, and look how big this computing thingy is now, so why bother changing things?

"We are reaching the limits of silicon chips, not only in size but also in the temperatures that it can handle," Carlos Andrés Trasviña Moreno, Software Engineering Coordinator at CETYS Ensenada tells me.

There are heaps of other 'rocks' to choose from: germanane, gallium nitride, molybdenite, boron arsenide, and graphene, including the carbon nanotubes rolled up from it. But it's a cutthroat talent show. You need the whole package to get through to the final.


Think about it: you need to find a solid with the right atomic structure, one that is not only electrically suitable to be a semiconductor, but stable enough to use in a range of applications, abundant enough to source in bulk, and cheap enough to be mass produced. Or, at the very least, suited well enough to mass production that it becomes cheap after a good while.


"The main issue with all of these is that, so far, scientists haven’t been able to create a functioning IC that can equal the current size and speed of microchips," Moreno continues.

"Building them is hard," Dr. Mark Dean told me in 2021.

In an ideal world we'd have harnessed carbon nanotubes, built out of rolled up graphene, to create masses of CNFETs, or carbon nanotube field-effect transistors. These transistors have multiple benefits, but the main ones are that they're massively more energy efficient than their silicon equivalents and that they can be manufactured at lower temperatures, which makes them easier to stack on top of one another.

But where researchers have built chips out of CNFETs, the remaining problem is making them cheap and easy to manufacture. There have been some big steps towards this goal, however, with researchers finding new ways to create CNFET wafers using pre-existing foundry manufacturing facilities intended for silicon chips. That's important, as reusing pre-existing foundries would make the shift to any new semiconductor material a cheaper one, albeit likely still very gradual.

There is some hope for chips made out of gallium nitride (GaN). It's reaching some degree of mass adoption that makes it cheap enough for public consumption, too. But you're not going to see a gaming CPU made out of GaN anytime soon.

GaN is a wide bandgap semiconductor. That means more energy is needed to free an electron and conduct current. It's a semiconductor playing by different rules to silicon, and better suited to high operating temperatures and voltages. You're far more likely to find a GaN charger for your laptop than a GaN CPU inside it.
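For a rough sense of what a wider bandgap means in practice, here's a back-of-the-envelope sketch in Python. It is an illustration and not a device model: the bandgap figures (roughly 1.12 eV for silicon and 3.4 eV for GaN) are approximate textbook values that don't come from anyone quoted here, and the function name is made up for this example. It compares only the exponential term exp(-Eg / 2kT) that dominates how many electrons heat alone can knock loose, ignoring the material-specific prefactors.

```python
import math

# Boltzmann constant in electronvolts per kelvin.
K_B_EV_PER_K = 8.617333262e-5

# Approximate room-temperature bandgaps in electronvolts. These are
# assumed textbook figures, not values taken from the article.
BANDGAP_EV = {"silicon": 1.12, "gallium nitride": 3.4}


def thermal_excitation_factor(bandgap_ev: float, temp_k: float) -> float:
    """Return exp(-Eg / (2kT)), the dominant term in intrinsic carrier density.

    Material-dependent prefactors are deliberately left out, so only the
    relative scale between materials and temperatures is meaningful.
    """
    return math.exp(-bandgap_ev / (2 * K_B_EV_PER_K * temp_k))


if __name__ == "__main__":
    # Compare both materials at room temperature and at two hotter points.
    for temp_k in (300, 400, 500):
        for name, gap_ev in BANDGAP_EV.items():
            factor = thermal_excitation_factor(gap_ev, temp_k)
            print(f"{temp_k} K  {name:<16} {factor:.2e}")
```

The smaller the factor, the fewer electrons get shaken free by heat alone. Under these assumptions GaN's figure stays many orders of magnitude below silicon's as the temperature climbs, which fits with it being the better pick for hot, high-voltage jobs like chargers.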


What gives? GaN offers higher efficiency, is reportedly better for the environment, and generally shrinks down into smaller spaces than silicon. Didn't we just talk about how silicon gets too hot and hits a power wall? So why isn't GaN already powering my PC?

That's because the properties of the material aren't the be-all and end-all for its suitability. GaN has been floated as an alternative to silicon for decades, but silicon still rules the roost because of the economies of scale.

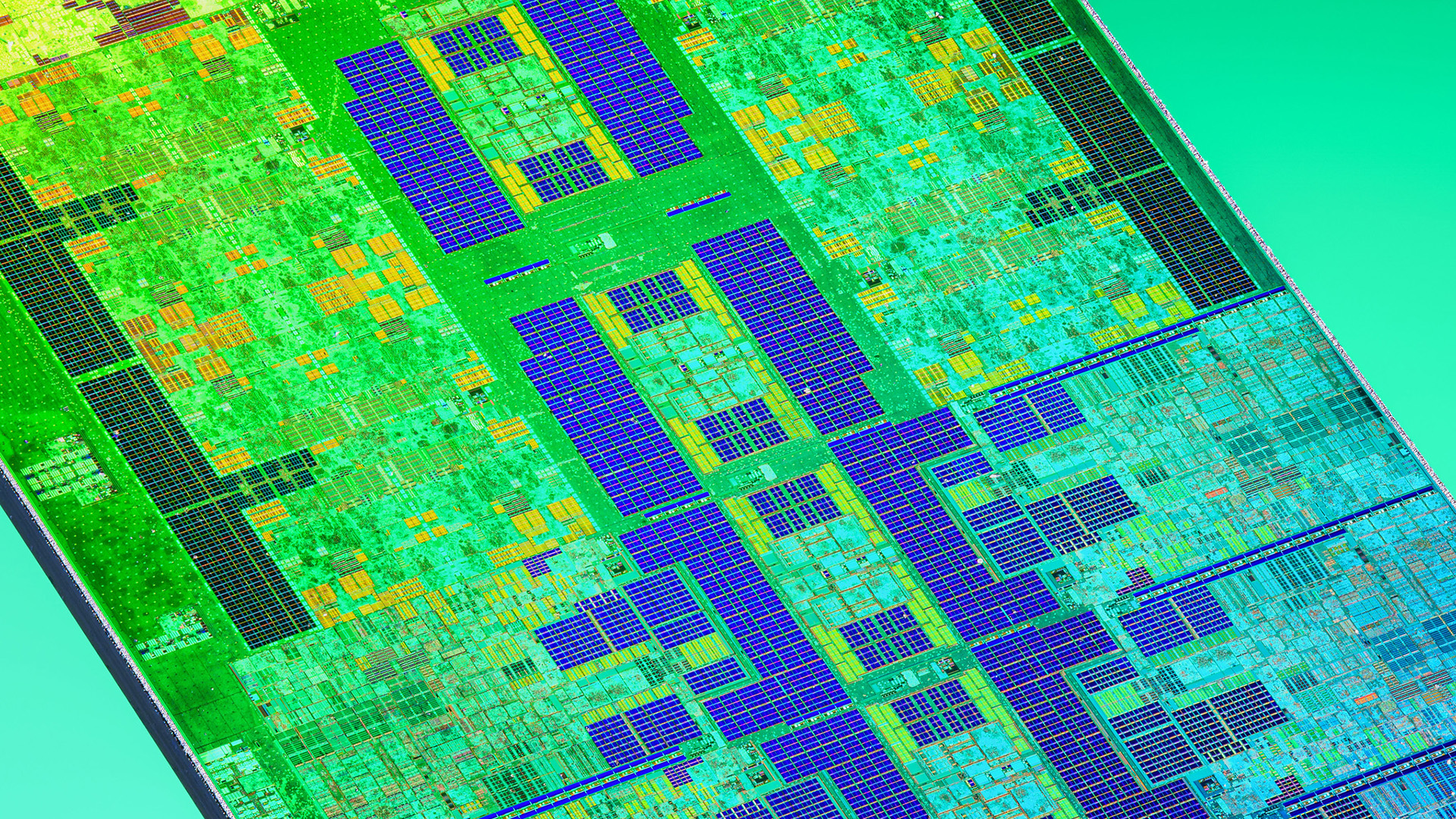

Manufacturing computer chips is a massive undertaking, and the companies doing it today are among the very few that can still afford to. It would be very difficult, and prohibitively expensive, to move away from silicon at the scale required to keep the world ticking over for computing power. If anything were to replace silicon, it would have to catch up to silicon's already impressive at-scale manufacturing, and someone would have to foot the bill for that.

"There's a huge semiconductor industry built up around leveraging silicon at scale," Marcus Kennedy, general manager for gaming at Intel, tells me.

"It's that scale that drives the cost of the industry. And that cost is what drives the rest of the industry. It's what allows us to put chips inside of things like cars, or inside your little supercomputer in your pocket. Changing to a totally different material will disrupt the entire supply chain and disrupt the entire system."

Billions upon billions of dollars have been allocated just these past few years to newer, better fabs across the globe, from TSMC in Arizona to Intel in Germany. You can take a look at the map below for a better idea of what sort of scale we're talking about here.


It just takes time to get to that point, and when silicon still works it's going to be a slow process. But the good news is that there's life in that silicon dog yet.

"I don't think that we've fully explored the limits of what silicon can do yet," Kennedy says.

Silicon's expiration date will come, eventually. In the meantime, there are other ways to expand computing power.

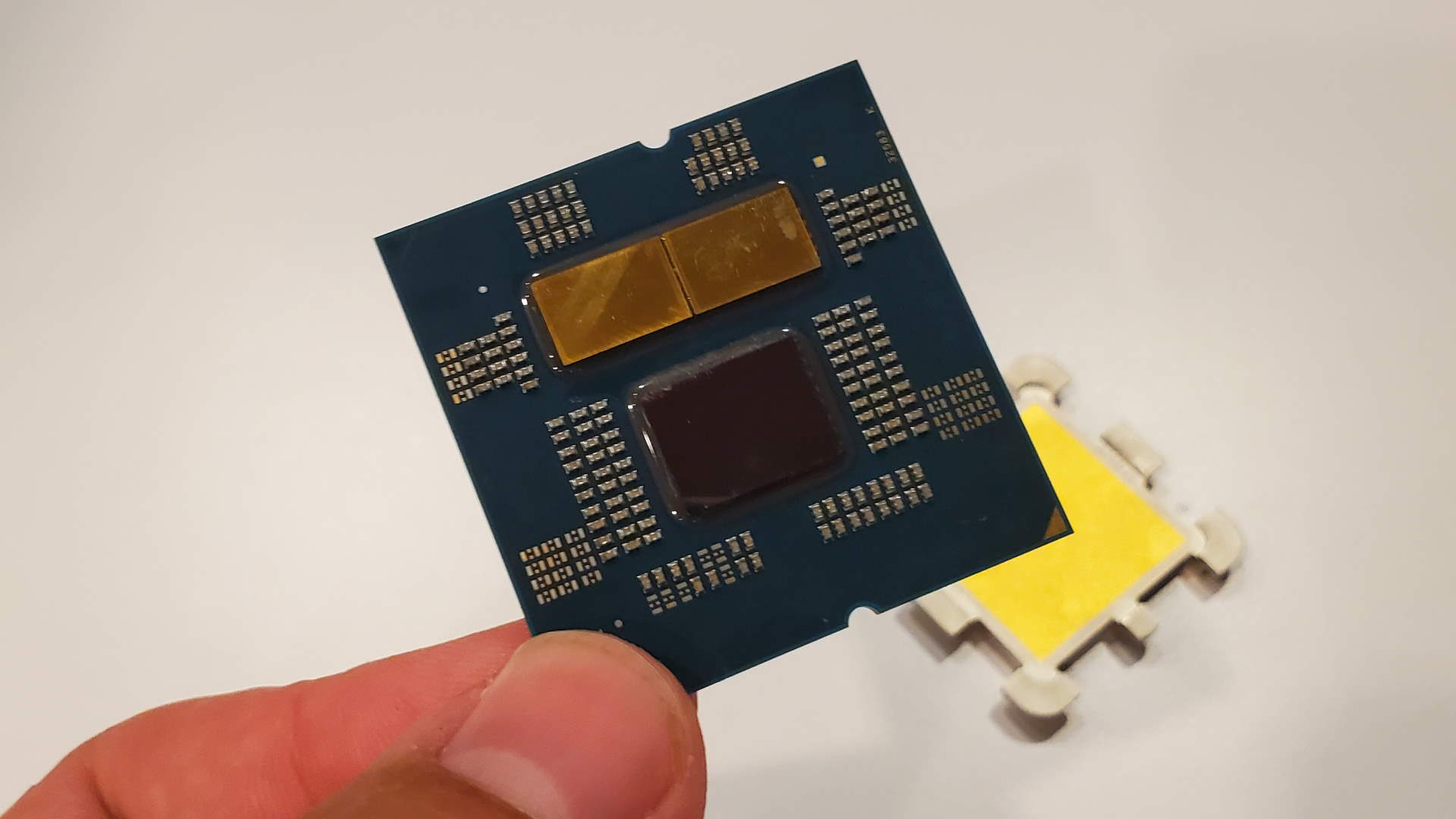

Kennedy believes we'll see more of something known as "disaggregation". Disaggregation, in how it relates to a processor, is the concept of pulling apart a larger system into smaller, discrete components, via chiplets and interconnects. But in this regard, Kennedy believes we'll also see some disaggregation of semiconductor materials.


"We've seen a little bit of that already. We've seen the introduction of some different semiconductor materials. I can't speak on all of it, because some of that is secret sauce stuff. But we've seen some differences even today, inside an SoC, some different semiconductors being used inside the same compute tiles. I think you'll see more of that moving forward."

There might not be a single replacement for silicon, but rather a mix-and-match of semiconductor materials chosen to best fit the task at hand and made possible by chiplets and interconnects.

But long before any of these materials run out of steam entirely, we may see another change in computing that comes to the rescue. "And it’s here where we enter into the world of quantum computing and neuromorphic computing," Moreno says.

Jacob earned his first byline writing for his own tech blog. From there, he graduated to professionally breaking things as hardware writer at PCGamesN, and would go on to run the team as hardware editor. He joined PC Gamer's top staff as senior hardware editor before becoming managing editor of the hardware team, and you'll now find him reporting on the latest developments in the technology and gaming industries and testing the newest PC components.