Our Destiny 2 performance analysis confirms the game is legit on PC

What we learned about Destiny 2's system requirements from the open beta.

The Destiny 2 open beta on PC has come and gone, and the good news is that the game is looking good and running well. When the PC version was revealed, Bungie promised it would be "legit on day one", and the general consensus is that they've delivered. Credit for that must go to the work done by the dedicated PC teams within Bungie and Vicarious Visions who worked on what is certain to become the definitive version, even if we do have to wait a little longer for it.

I spent much of the first half of this week running benchmarks and I gave a preview of 4K performance requirements. Now, as promised, this is the full rundown of performance with the Destiny 2 beta. Obviously, with two months until the official launch on PC, there's plenty of time for things to change and improve, so this is merely an early taste. If you're planning on getting into the PC gaming scene with Destiny 2, it's also a guide for the hardware you'll want to buy, depending on your intended resolution and performance.

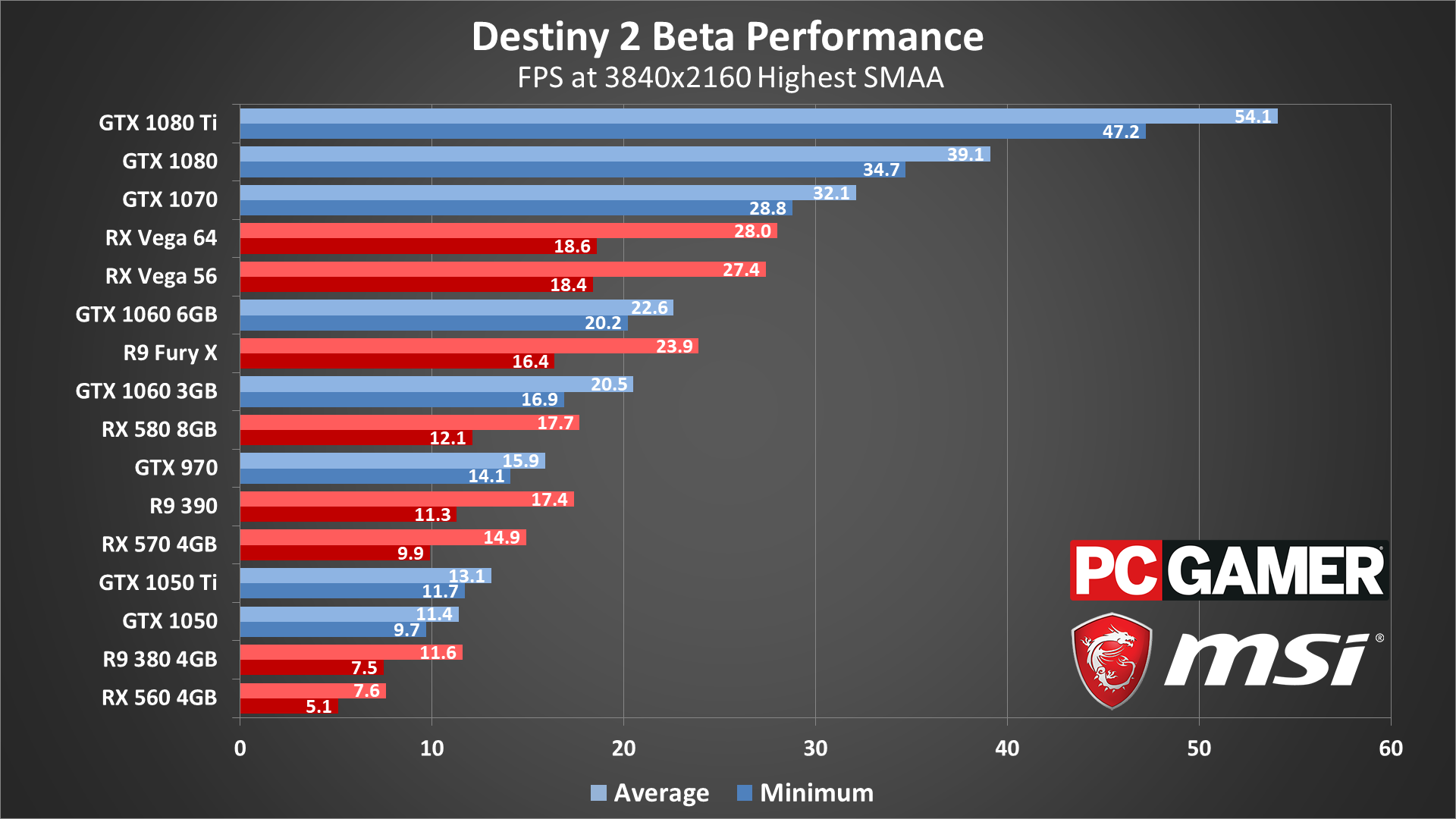

One thing to get out of the way is that Destiny 2 is being heavily promoted by Nvidia, so just like AMD Gaming Evolved releases tend to be better optimized for AMD GPUs, Destiny 2 will likely run better (at least initially) on Nvidia hardware. AMD graphics hardware could use some tuning, and given the popularity of Destiny on consoles and the anticipation for the PC release of Destiny 2, having AMD put in an extra effort on its drivers would make sense.

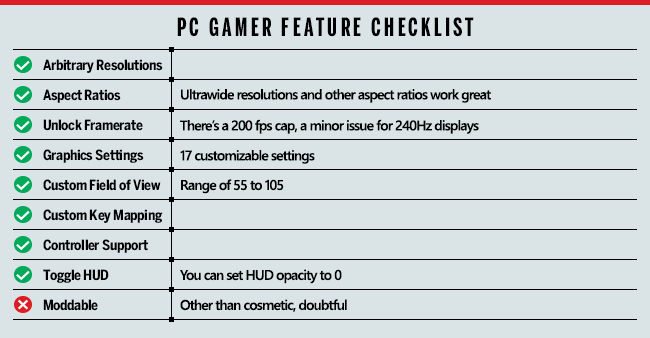

The good news is that, as billed, Destiny 2 isn't just a checkbox port of the console version. Bungie is putting in the requisite effort to provide the experience PC gamers want, including unlocked framerates, adjustable field of view, arbitrary resolution support—including ultrawidescreen displays—and plenty of options to tweak. Here's a quick look at the features checklist:

Even in beta form, Destiny 2 checks off most of the important items. There's a 200 fps framerate cap for now (sorry 240Hz monitor users), and full modding support is unlikely (unless this sort of modding is what you're after). Otherwise, there's no vital missing piece to complain about. Ultrawidescreen support in particular deserves a shout out, as even loading screens and cutscenes are properly formatted for 21:9 displays—though I'm not sure if 32:9 or multi-display support is fully enabled. It's something we'll look at with the full release, but 21:9 at least is about as good as it gets, with only a few HUD elements not (currently) scaling.

As our partner for these detailed performance analyses, MSI provided the hardware we needed to test Destiny 2 on a bunch of different AMD and Nvidia GPUs, multiple CPUs, and several laptops (see below for the full list). Our test equipment and methodology are covered in our Performance Analysis 101 article. Thanks, MSI!

As far as graphics settings go, Destiny 2 has 17 individual settings you can tweak, along with four presets: low, medium, high, and highest. As is often the case, many of the settings have very little impact on performance, with only a few big ticket items: anti-aliasing, depth of field, screen space ambient occlusion, and shadow quality (in that order).

MSAA (multi-sample AA) is currently a work in progress, and it caused a massive 40 percent drop in performance in limited testing, so I recommend sticking with FXAA (fast approximate AA), which is basically free, or the slightly better and only barely more taxing SMAA (subpixel morphological AA). For more details on the various settings, skip to the bottom of the article, but outside of these four options, most settings will only affect performance by 1-2 percent (though texture quality can impact cards with less than 4GB VRAM if you set it too high).
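Percent drops and percent gains aren't symmetric, which is worth keeping in mind when reading the numbers in this article: a 40 percent drop from enabling MSAA is the same thing as a gain of roughly 67 percent from turning it back off. Here's a minimal Python sketch of the conversion (the function names are made up for illustration):

```python
def drop_to_gain(drop_percent: float) -> float:
    """Percent gained by undoing a slowdown of drop_percent."""
    remaining = 1.0 - drop_percent / 100.0
    return (1.0 / remaining - 1.0) * 100.0


def gain_to_drop(gain_percent: float) -> float:
    """Percent lost by giving up a speedup of gain_percent."""
    return (1.0 - 1.0 / (1.0 + gain_percent / 100.0)) * 100.0


print(f"{drop_to_gain(40):.1f}")  # 66.7
print(f"{gain_to_drop(70):.1f}")  # 41.2
```

That lines up with the settings rundown at the end of the article, where dropping from MSAA to SMAA boosts framerates by nearly 70 percent.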

On that note, kudos to Destiny 2 and Bungie for following in the footsteps of GTA 5 by providing an indication of how much VRAM the current settings use. If you have a 2GB card and your settings require 3GB, as an example, you'll end up with a lot of textures and data getting sent over the PCIe bus, which can cause increased stuttering and major drops in performance. Even at maximum quality and 4K, however, VRAM use didn't go above 4GB—something to keep in mind. Having a bit of extra VRAM (eg, a 6GB card) could help a bit, but for the majority of high-end GPUs you should be fine.

The hardware

MSI provided all of the hardware for this testing, mostly consisting of its Gaming/Gaming X graphics cards. These cards are designed to be fast but quiet, and the fans will shut off completely when the graphics card isn't being used. Our main test system is MSI's Aegis Ti3, a custom case and motherboard with an overclocked 4.8GHz i7-7700K, 64GB DDR4-2400 RAM, and a pair of 512GB Plextor M8Pe M.2 NVMe solid-state drives in RAID0. There's a 2TB hard drive as well, custom lighting, and more.

MSI also provided three of its gaming notebooks for testing, the GS63VR with GTX 1060, GT62VR with GTX 1070, and GT73VR with GTX 1080. Sadly, the beta test period expired before I managed to get around to testing the laptops, so we'll have to come back to those in October. For CPU testing, MSI has provided several different motherboards. In addition to the Aegis Ti3, I have the X299 Gaming Pro Carbon AC for Skylake-X testing, Z270 Gaming Pro Carbon for additional Core i3/i5 Kaby Lake CPU testing, X370 Gaming Pro Carbon for high-end Ryzen 7 builds, and the B350 Tomahawk for budget-friendly Ryzen 3/5 builds.

Destiny 2 beta benchmarks

For the benchmarks, I've used my standard choice of 1080p medium as the baseline, and then supplemented that with 1080p, 1440p, and 4K 'highest' performance, with SMAA instead of MSAA. Some may opt to disable certain graphics settings to try and gain a competitive advantage, though in my testing, most settings at high or lower will result in very similar performance.

Testing performance of the Destiny 2 beta was difficult, since there was a limited window for playing on PC, and the multiplayer modes often had lengthy queue times. After doing some initial scouting of the single-player experience and the multiplayer maps, I found the beginning of the single-player level provided a good view of typical performance. A few areas are more demanding, others less so, but overall it's representative of both single-player and multiplayer modes. It's also far easier to access for the purposes of benchmarks, and the test sequence is repeatable.

I ran through the first 45 seconds or so of the level, fighting a few Cabal, up to the point where you first encounter Cayde. Performance was consistent across multiple runs on the same hardware, with less than three percent variation, though some of the wide-open spaces with lots of foliage can drop framerates more than shown in the benchmark.
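If you want to check the repeatability of your own benchmark runs, one simple way is to compare the spread between the fastest and slowest run against the mean. This is only a sketch of one reasonable definition of "variation", and the fps values below are hypothetical placeholders, not measured results:

```python
def run_variation_percent(avg_fps_runs):
    """Spread between the fastest and slowest run, relative to the mean."""
    mean = sum(avg_fps_runs) / len(avg_fps_runs)
    return (max(avg_fps_runs) - min(avg_fps_runs)) / mean * 100.0


# Hypothetical average fps from three passes of the same test sequence.
runs = [101.2, 99.8, 100.5]
variation = run_variation_percent(runs)
print(f"{variation:.1f}% variation")  # 1.4% variation
```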

Starting out with 1080p medium, these are pretty close to the maximum framerates you're likely to see from Destiny 2 right now—dropping to minimum quality only improved performance by a few percent, and in fact medium quality only runs about four percent faster than the high preset. If you were hoping to hit 240+ fps, obviously you're out of luck, since there's a 200 fps framerate cap. Even 144 fps is going to be a bit of a stretch for most systems, with the 1070, 1080, and 1080 Ti being the only cards to hit that mark. Note that even with an i7-7700K, the 1080 Ti is hitting a CPU bottleneck, but faster DDR4-3200 memory from G.Skill allowed a different desktop to average over 170 fps.

I've said it many times in the past, but G-Sync and FreeSync displays remain a great way to overcome fluctuations in framerate, particularly if you have a model that can do 144Hz or higher. Barring that, the next best solution is a fixed refresh rate of 144Hz, which most people will find fast enough that tearing nearly becomes a non-issue. After playing on a 144Hz display, though, 60Hz panels definitely come up lacking.

Not surprisingly, Nvidia's GPUs currently hold a clear performance advantage. AMD's 17.8.2 beta drivers list support for Destiny 2, but I'd venture to say that performance isn't fully tuned yet—not even close. Vega 64 in many other games ranks much closer to the GTX 1080, and Vega 56 often matches and even slightly exceeds the performance of the GTX 1070. Here, however, even the 1070 is able to come out with a noticeable 18 percent lead over Vega 64. Ouch. Clearly AMD has some work to do.

Speaking of low-end hardware, while I didn't have time to test it personally (the beta literally expired while I was in the middle of running benchmarks—it took me a few minutes to figure out why I suddenly got booted and then couldn't get back in!), don't expect much from integrated graphics solutions. Intel's HD Graphics 630 is typically about one fourth the performance of the RX 560. That means 1080p, even at minimum settings, is out of the question. However, if you're willing to stretch things, 1280x720 at minimum quality, with resolution scaling at 75 (960x540), apparently the HD 630 can get 20-30 fps. If you have an older Haswell chip (HD 4600), you'll probably be a lot closer to the 20 fps end of that scale.

Increasing the quality to the 1080p highest preset (minus MSAA), the 1080 Ti remains CPU limited, while the performance hit on other GPUs ranges from 13 percent on the GTX 1080 to as much as 30 percent on the RX 560. Considering medium quality is already close to maximum performance, however, that's a pretty negligible drop. That means your best bet for improving framerates will often end up being resolution—either via resolution scaling or just by dropping to a lower res.

Most of the cards are definitely playable, with only the GTX 1050 Ti and below failing to break 60 fps—and turning down one or two settings could get it above 60 fps as well. If you're willing to set your sights a bit lower than 60 fps, all of the cards I tested are still able to exceed 30 fps on average, so even an RX 560 or GTX 1050 can handle Destiny 2 at near-maximum quality.

The good news for AMD is that, once the CPU bottleneck is reduced, the gap between the Vega cards and the GTX 1070 becomes much smaller. Still, the 1070 is beating the Vega 64, which isn't something you want to see from a nominally $500 card going up against a $380 card (current prices notwithstanding). AMD cards in general struggle in the minimum framerate department, leading to situations like the GTX 970 providing a better overall experience compared to the RX 570—even though the latter has higher average framerates, it has substantially lower minimum framerates, leading to periodic stuttering.
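To see how a card can post a higher average framerate and still feel worse, it helps to look at individual frame times. The sketch below assumes "minimum fps" means the average over the slowest three percent of frames, which is a definition picked for this example and may not match every benchmark tool. The frame times are invented for illustration and don't come from any card tested here:

```python
def fps_summary(frame_times_ms, worst_percent=3):
    """Return (average fps, minimum fps) for a list of frame times in ms.

    'Minimum' is the average fps over the slowest worst_percent of frames,
    an assumed definition chosen for this sketch.
    """
    avg_fps = 1000.0 * len(frame_times_ms) / sum(frame_times_ms)

    slowest_first = sorted(frame_times_ms, reverse=True)
    worst_count = max(1, len(slowest_first) * worst_percent // 100)
    worst = slowest_first[:worst_count]
    min_fps = 1000.0 * len(worst) / sum(worst)
    return avg_fps, min_fps


# Hypothetical frame times, invented for illustration only.
steady_card = [12.5] * 100              # every frame takes 12.5 ms
spiky_card = [10.0] * 97 + [40.0] * 3   # mostly faster, with a few long frames

for name, times in (("steady", steady_card), ("spiky", spiky_card)):
    avg_fps, min_fps = fps_summary(times)
    print(f"{name}: {avg_fps:.1f} avg fps, {min_fps:.1f} min fps")
```

The spiky card wins on average (about 92 fps versus 80 fps) but its slowest frames run at 25 fps, and those long frames are what show up as stutter.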

I'd guess a lot of the performance hit on AMD hardware comes down to lower geometry throughput relative to Nvidia, though I could be wrong. Still, look at the R9 Fury X, where minimum fps is lower than the RX 580. The newer Polaris and Vega GPUs have better geometry throughput than the old Fiji and Hawaii architectures, which could explain the inconsistencies in framerates.

What that means for older hardware is that Nvidia's 900 series and AMD's R9 series are generally more than sufficient. Go back another generation to the 700 series and HD 7000 and you may start running into problems, particularly on older midrange parts, and for the GTX 600 series you're basically at minimum spec for the game. Anecdotally, however, I've read that even modest cards from several years back are able to handle the game, just not at maximum quality.

Once we get to 1440p, all of the cards begin to show substantial drops in performance. The GTX 1080 Ti performance drops by 35 percent, while most of the other GPUs drop by 45-50 percent. Interestingly, that's a larger drop than I would expect, since there are only 78 percent more pixels to render at 1440p compared to 1080p. If you have a 144Hz panel and you're hoping to max out the refresh rate, an overclocked GTX 1080 Ti might do the trick, but only if you're willing to turn off a few settings (eg, depth of field). Otherwise, you're better off contenting yourself with 60-100 fps, depending on your GPU and settings.
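For reference, here's the pixel math behind that comparison as a short Python sketch. The "ideal" drop assumes frame time scales exactly with pixel count, which is a simplification, since real games also do work that doesn't depend on resolution:

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixels(name):
    width, height = RESOLUTIONS[name]
    return width * height


def extra_pixels_percent(base, target):
    """How many more pixels target has than base, in percent."""
    return (pixels(target) / pixels(base) - 1.0) * 100.0


def ideal_fps_drop_percent(base, target):
    """Drop in fps if frame time scaled exactly with pixel count (assumption)."""
    return (1.0 - pixels(base) / pixels(target)) * 100.0


print(f"{extra_pixels_percent('1080p', '1440p'):.2f}")    # 77.78
print(f"{ideal_fps_drop_percent('1080p', '1440p'):.2f}")  # 43.75
```

Under that simplified model a fully GPU-limited card would lose about 44 percent going from 1080p to 1440p, so the 45-50 percent drops sit a little above the pixel count alone, while the 1080 Ti's smaller drop reflects the CPU limit it hits at 1080p.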

Nvidia cards continue to dominate, with the GTX 1070 still leading RX Vega 64 by over ten percent. 1070 and above are also the only way to get 60+ fps at the highest preset, though the high preset improves things by around 20 percent and would be a good solution for the Vega cards. For GTX 1060 and below, if you want 60 fps at 1440p, you're going to be running pretty close to minimum quality, and even that might not be sufficient. Unless we get further optimizations by the time the game launches, naturally.

I haven't mentioned it so far, but I also did some SLI testing with Destiny 2. I didn't have time to get CrossFire testing done, though others have reported it helps as well at 1440p and 4K. GTX 1080 shows reasonable gains once we get to 1440p, where the second GPU improved performance by 57 percent. GTX 1080 Ti SLI is still hitting CPU limits, however, and the additional load of doing SLI results in a relatively small 15 percent gain at 1440p. 4K on the other hand benefits a lot more, with 80 percent scaling on GTX 1080 and 50 percent scaling on GTX 1080 Ti. Or you could always enable MSAA, which will tax the GPUs quite a bit more and should enable closer to 80 percent scaling even at 1440p.
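"Scaling" here just means the percent gain from adding the second GPU. A quick sketch of the arithmetic follows. The 40 fps single-card figure is a hypothetical placeholder, not a benchmark result, and only the 80 percent scaling value comes from the testing above:

```python
def sli_scaling_percent(single_gpu_fps, dual_gpu_fps):
    """Percent gain from adding a second GPU."""
    return (dual_gpu_fps / single_gpu_fps - 1.0) * 100.0


def dual_gpu_fps(single_gpu_fps, scaling_percent):
    """Expected two-card framerate for a given scaling percentage."""
    return single_gpu_fps * (1.0 + scaling_percent / 100.0)


single = 40.0  # hypothetical single-card fps at 4K, for illustration only
paired = dual_gpu_fps(single, 80.0)
print(f"{paired:.1f} fps with two cards")
print(f"{sli_scaling_percent(single, paired):.0f} percent scaling")
```

Perfect scaling would be 100 percent, meaning double the framerate, so 80 percent is a good result and 15 percent is a sign that something other than the GPUs is the limit.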

As is often the case, running 4K at maxed out (or nearly so) quality proves to be a challenge. I covered this in much more detail earlier, so I'll refer back to the previous article on the subject, but basically you'll need at least an RX Vega or GTX 1070 card to get 60+ fps at 4K. Everything below that comes up short, unless you're willing to use the resolution scaling setting to come up with an in-between resolution that you're happy with.

But what if you don't want to compromise? Good news, as SLI GTX 1070, 1080, and 1080 Ti are all able to break 60 fps quite easily, especially if you disable depth of field, which helps boost minimum fps quite a bit. CrossFire reportedly works as well, giving about 50 percent scaling at 4K according to some users. That's not enough to push CrossFire Fury and Vega above 60 fps, unless you drop some of the quality settings as well, but it should do pretty well at the high preset.

Does CPU performance matter?

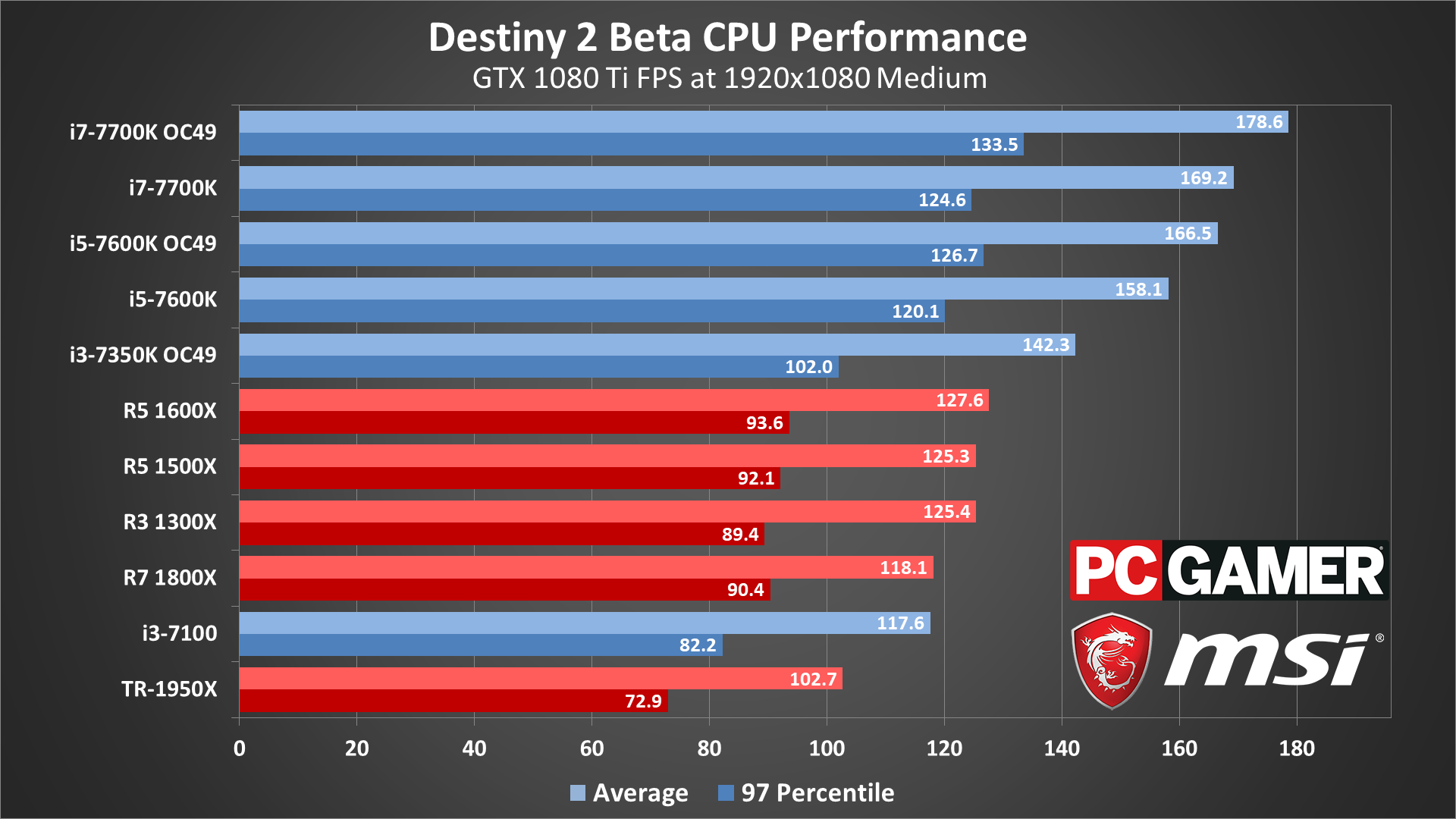

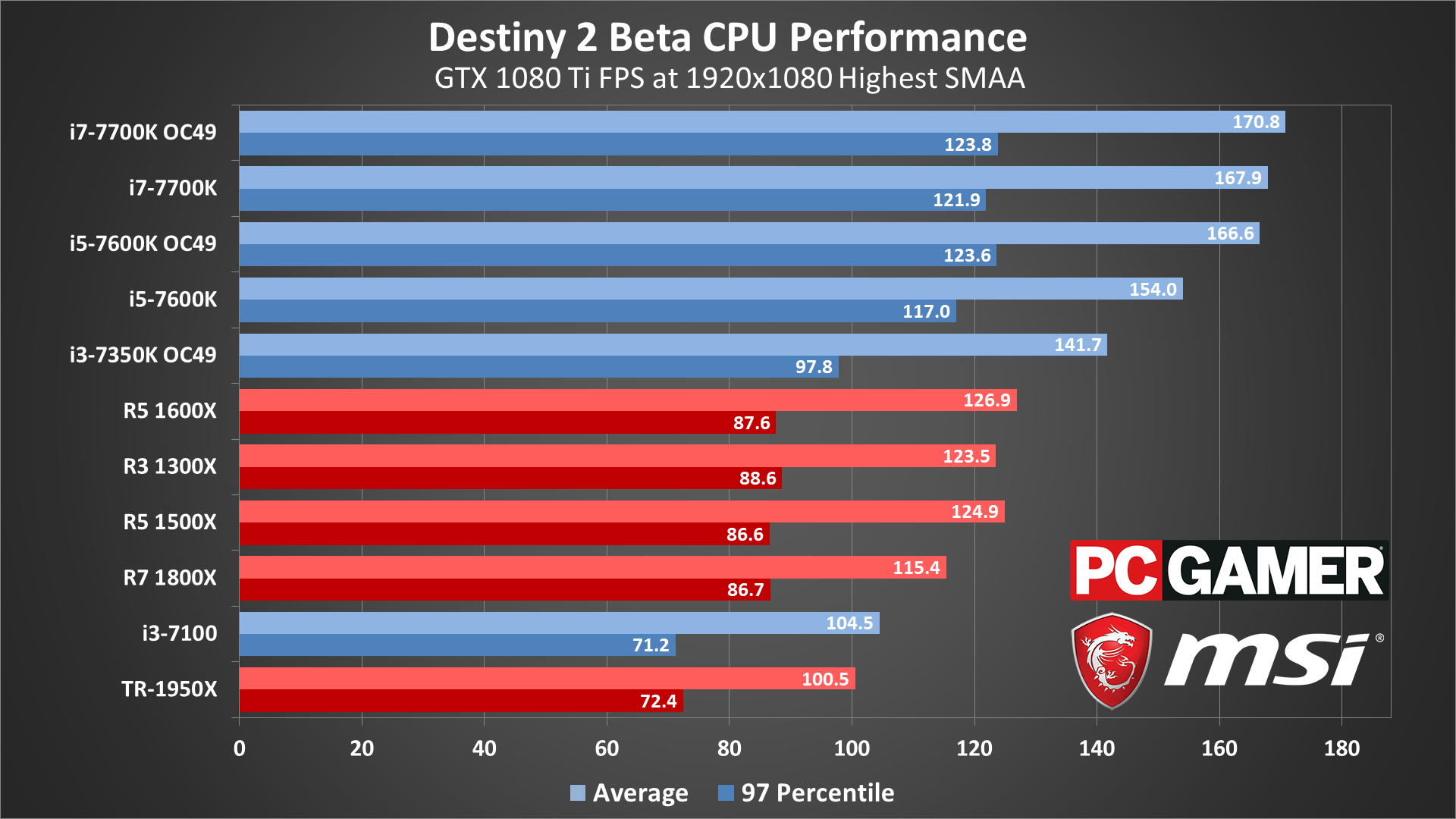

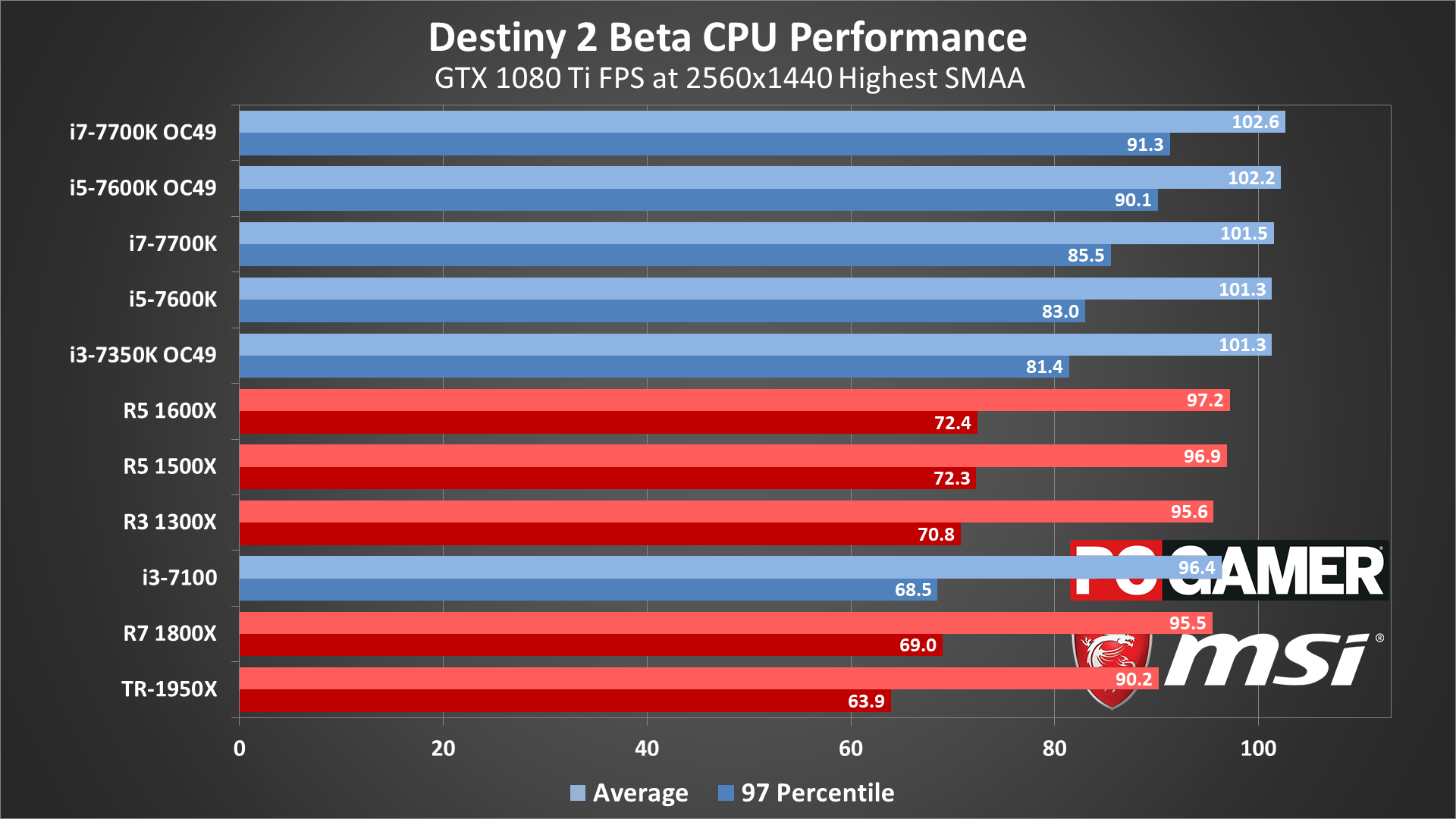

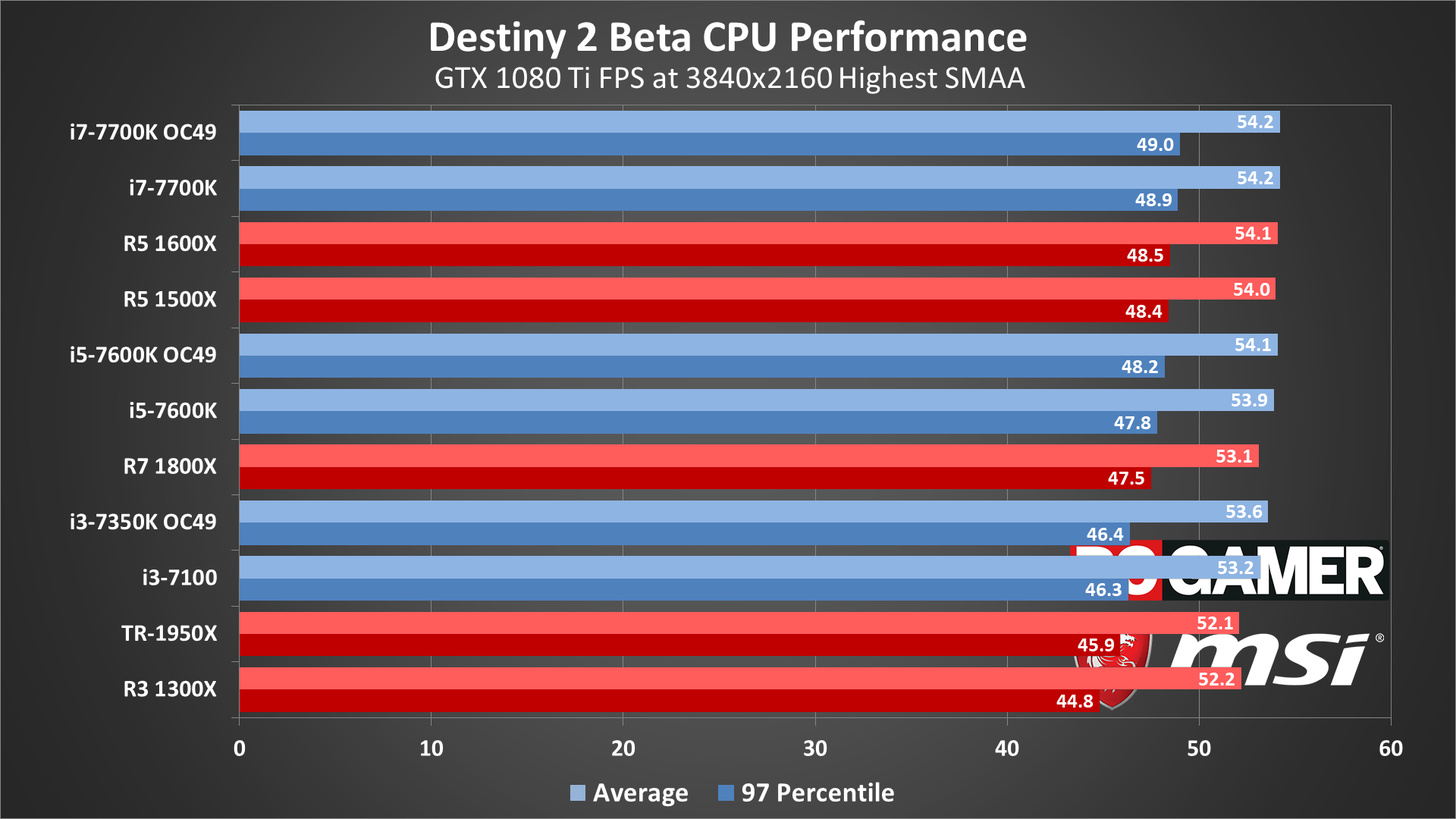

Moving over to CPU testing, this is where the beta expired on me, so I didn't get all the results I wanted. Basically, I was trying to test GTX 1060 and RX Vega 64 on all the CPUs, along with the GTX 1080 Ti. I nearly finished all the testing with 1080 Ti, but didn't get a chance to test X299 CPUs. I'll briefly discuss the results I did get from 1060 and Vega below, but let's start with CPU scaling using the fastest single GPU solutions.

Ouch. This isn't good, at least if you were hoping for Destiny 2 to "use all the cores" on your CPU, which was a rumor from a month or two back. Threadripper and its 16-core/32-thread configuration gets killed at lower quality settings where the CPU can make the biggest difference. Oddly, even the Ryzen 7 1800X doesn't do so well, falling behind the Ryzen 5 and Ryzen 3 parts I tested. What's going on is likely a case of threads getting scheduled in a less than optimal fashion, with maybe some cache latencies coming into play as well.

On the other hand, if you have an older CPU, things aren't quite so bad. An i3-7100 with the fastest single GPU is obviously slower than an overclocked i7-7700K, but at well over 100 fps it's hardly slow. The same thing applies to all the Ryzen CPUs—they may not be as fast as the Intel i7 chips, but unless you're really set on getting above 144 fps it shouldn't matter. The CPU still plays a role at 1080p highest+SMAA, but as soon as the resolution hits 1440p it starts to matter a lot less. We go from a 70 percent performance advantage (for the overclocked i7-7700K) at 1080p, to a mere 10 percent advantage at 1440p, and at 4K the difference is less than four percent.

I was in the process of testing other GPUs as well, as mentioned above, and basically those results show exactly what you'd expect. Slower GPUs make your CPU even less of a factor. With the RX Vega 64, Threadripper 1950X is still the slowest CPU tested at 1080p medium, but the gap is a bit smaller. The overclocked i7-7700K is still about 60 percent faster at 1080p medium, but at 1080p highest+SMAA the gap shrinks to 30 percent, and the Ryzen 7 1800X is basically tied with the fastest Intel CPUs. At 1440p, all of the CPUs land within the 55-61 fps range. Go down another step with the GTX 1060 6GB, and even the 1080p medium results only show a 30 percent spread, while at 1080p highest the CPUs all fall in the 85-90 fps range.

What happened to Destiny 2 making use of all your CPU cores? Well, it's not a complete loss—the 2-core/4-thread Core i3 parts for example trail the 4-core/4-thread Core i5, even when Core i3 is overclocked. But going beyond 4-core isn't really beneficial, certainly not on the AMD side of things, unless you're using those extra cores for video streaming or other tasks.

Fine tuning performance

Test System

MSI Aegis Ti3 VR7RE SLI-014US

MSI X299 Gaming Pro Carbon AC

MSI Z270 Gaming Pro Carbon

MSI X370 Gaming Pro Carbon

MSI B350 Tomahawk

Graphics Cards

MSI GTX 1080 Ti Gaming X 11G

MSI GTX 1080 Gaming X 8G

MSI GTX 1070 Gaming X 8G

MSI GTX 1060 Gaming X 6G

MSI GTX 1060 Gaming X 3G

MSI GTX 1050 Ti Gaming X 4G

MSI GTX 1050 Gaming X 2G

MSI RX Vega 64 8G

MSI RX Vega 56 8G

MSI RX 580 Gaming X 8G

MSI RX 570 Gaming X 4G

MSI RX 560 4G Aero ITX

Gaming Notebooks

MSI GT73VR Titan Pro (GTX 1080)

MSI GT62VR Dominator Pro (GTX 1070)

MSI GS63VR Stealth Pro (GTX 1060)

I mentioned the biggest factors in improving performance earlier, but here's the full rundown of the various settings, along with rough estimates of performance changes. The numbers given here aren't from the complete benchmark using a bunch of different cards, but were gathered using a single GTX 1070 running at 1440p highest, and comparing the minimum setting on each item to the maximum (highest) setting using the average framerate. The test location as always could be a factor—some areas might be affected more by things like the foliage settings, but time constraints prevent full testing of each option.

Graphics Quality (Low/Medium/High/Highest/Custom): This is the global preset, with four settings plus custom. Going from highest (with SMAA, as the default enables MSAA) to low improves performance by around 50 percent, while going from highest to high improves performance by 40 percent. Like I've said, there aren't a ton of ways to dramatically reduce the GPU requirements right now, other than just running a lower resolution.

Anti-Aliasing (Off/FXAA/SMAA/MSAA): MSAA is a performance killer right now, and dropping to SMAA boosts framerates by nearly 70 percent. Going from SMAA to Off meanwhile only improves performance an additional five percent.

Screen Space Ambient Occlusion (Off/HDAO/3D): Turning SSAO off improves framerates by nearly 10 percent relative to the 3D setting. HDAO is a middle ground that improves performance by around five percent.

Texture Anisotropy (Off/2x/4x/8x/16x): Has almost no impact on performance with modern GPUs, though older cards may benefit more. From 16x to off only improved performance one percent.

Texture Quality (Lowest/Low/Medium/High/Highest): For cards with 4GB or more VRAM, this has very little impact on performance—I measure a one percent increase with the GTX 1070 by setting this to lowest, but the visual impact is far more significant.

Shadow Quality (Lowest/Low/Medium/High/Highest): Affects not just the resolution of shadow maps but also other factors. Going from highest to lowest improved performance by around six percent.

Depth of Field (Off/Low/High/Highest): Causes a large drop in performance at highest setting. Turning this off improves performance nearly 20 percent, while the high setting increases performance by over 10 percent.

Environmental Detail Distance (Low/Medium/High): I measured no difference in performance between low and high.

Character Detail Distance (Low/Medium/High): I measured no difference in performance between low and high.

Foliage Detail Distance (Low/Medium/High): I measured no difference in performance between low and high.

Foliage Shadows Distance (Medium/High/Highest): I measured no difference in performance between medium and high.

Light Shafts (Medium/High): I measured no difference in performance between medium and high.

Motion Blur (On/Off): I measured no difference in performance between off and on.

Wind Impulse (On/Off): I measured no difference in performance between off and on.

Render Resolution (75-200): At the lowest setting of 75, performance was 50 percent higher, which isn't too surprising, as there are 44 percent fewer pixels to render.

HDR (On/Off): Not tested (I don't have an HDR display), but it shouldn't affect performance.

Chromatic Aberration (On/Off): I saw a one percent increase in performance by turning this off.

Film Grain (On/Off): I measured no difference in performance between off and on.
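Since resolution is the biggest lever in the list above, here's a small Python sketch of what the Render Resolution setting does to the pixel count. It assumes the percentage applies to each axis, which matches the 1280x720 at 75 working out to 960x540 mentioned earlier, and the "ideal gain" further assumes framerate scales perfectly with pixels:

```python
def scaled_resolution(width, height, render_scale):
    """Internal render size, with render_scale applied per axis (assumed)."""
    factor = render_scale / 100.0
    return round(width * factor), round(height * factor)


def pixel_fraction(render_scale):
    """Fraction of the native pixel count that actually gets rendered."""
    return (render_scale / 100.0) ** 2


print(scaled_resolution(1280, 720, 75))                          # (960, 540)
print(f"{(1 - pixel_fraction(75)) * 100:.2f}% fewer pixels")     # 43.75% fewer pixels
print(f"{(1 / pixel_fraction(75) - 1) * 100:.1f}% ideal gain")   # 77.8% ideal gain
```

The measured 50 percent improvement falls short of the roughly 78 percent ideal, which suggests that a fair amount of the per-frame work on the GTX 1070 doesn't shrink along with the resolution.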

Thanks to MSI for providing the graphics cards, desktop PC, and motherboards for testing. All the desktop results were run on MSI's Aegis Ti3, a custom PC that includes an overclocked i7-7700K, 64GB RAM, and 1TB of RAID0 M.2 NVMe solid state storage. Additional CPU scaling testing was done with various MSI motherboards for sockets LGA2066, LGA1151, AM4, and TR4. All testing was done with the latest Nvidia and AMD drivers available at the time of testing, Nvidia 385.41 and AMD 17.8.2 beta.

If you're shopping for a new graphics card, you should also know that MSI is running a promotion through September 5, where you can get a code for Destiny 2 with the purchase of an MSI GTX 1080, GTX 1080 Ti, or a notebook with a GTX 1080 graphics card.

I'll revisit the topic of Destiny 2 performance again when the final PC version launches on October 24. If you're just getting into PC gaming, and you're interested in Destiny 2, right now the safe bet is to go with Nvidia graphics cards. From the high-end to the budget offerings, Nvidia beats the equivalently priced AMD solutions at every level. Obviously, things could change by launch, but given the Nvidia promotions tied into Destiny 2, that's far from a sure thing.

The good news is that, regardless of your GPU vendor, you should be able to get acceptable performance out of Destiny 2 on just about any recent graphics card. You might have to set your phasers for stun rather than vaporize, but at 1080p even a souped-up potato should manage 30 fps. Which is basically what console gamers will have to live with. On the PC, GeForce GTX 960 4GB and above and Radeon R9 380 4GB and above can break 60 fps at 1080p medium, and faster cards like the GTX 1070 and GTX 1080 can offer an experience that consoles won't match this side of 2020.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.