Nvidia's GPU-powered AI is creating chips with 'better than human design'

So, it's using AI accelerated by its GPUs to accelerate its GPU development.

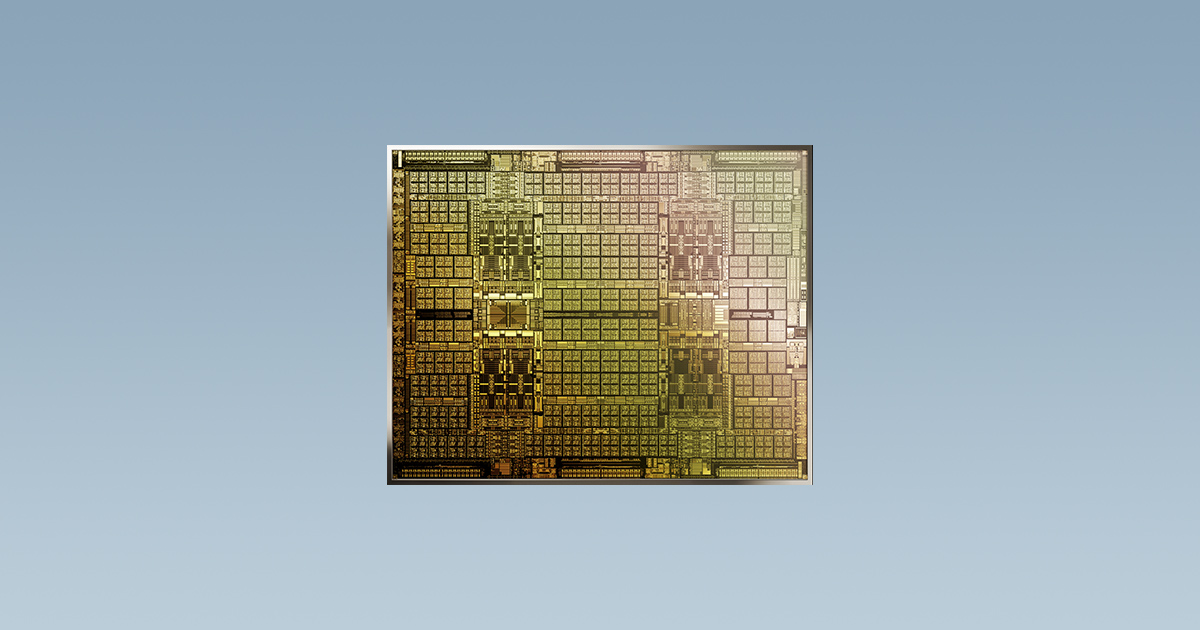

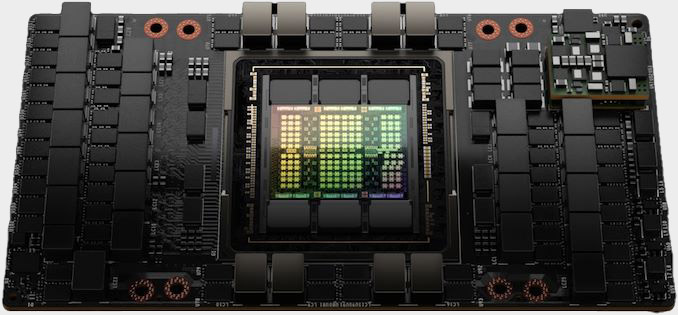

Nvidia has been quick to hop on the artificial intelligence bus, with many of its consumer-facing technologies, such as Deep Learning Super Sampling (DLSS) and AI-accelerated denoising, exemplifying that. However, it has also found many uses for AI in its silicon development process and, as Nvidia's chief scientist Bill Dally said at Nvidia's GTC conference, even in designing new hardware.

Dally outlines a few ways Nvidia uses AI in developing its latest and greatest graphics cards (among other things), as noted by HPC Wire.

"It’s natural as an expert in AI that we would want to take that AI and use it to design better chips," Dally says.

"We do this in a couple of different ways. The first and most obvious way is we can take existing computer-aided design tools that we have. For example, we have one that takes a map of where power is used in our GPUs, and predicts how far the voltage grid drops, what’s called IR drop for current times resistance drop. Running this on a conventional CAD tool takes three hours."

"...what we’d like to do instead is train an AI model to take the same data; we do this over a bunch of designs, and then we can basically feed in the power map. The inference time is just three seconds. Of course, it’s 18 minutes if you include the time for feature extraction."

"...we’re able to get very accurate power estimations much more quickly than with conventional tools and in a tiny fraction of the time," Dally continues.

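To put some numbers on IR drop, here is a minimal Python sketch, assuming a single supply rail fed from one end and modelled as a simple chain of resistors, with made-up segment resistances and tap currents. It is not Nvidia's CAD tool or its AI model; real power grids are two-dimensional meshes with vastly more nodes, which is why solving them properly takes serious compute.

```python
def rail_ir_drop(vdd, seg_res, loads):
    """Voltage at each tap of a supply rail fed from one end (toy model).

    vdd     -- supply voltage at the feed point, in volts
    seg_res -- resistance of each rail segment, in ohms; segment k
               connects the previous tap (or the feed) to tap k
    loads   -- current drawn at each tap, in amps
    """
    if len(seg_res) != len(loads):
        raise ValueError("need one segment resistance per tap")
    voltages = []
    v = vdd
    for k in range(len(loads)):
        # Segment k carries the current for tap k and every tap beyond it.
        i_seg = sum(loads[k:])
        v -= i_seg * seg_res[k]  # Ohm's law: the drop is current times resistance
        voltages.append(v)
    return voltages


if __name__ == "__main__":
    # Hypothetical rail: four 10 milliohm segments, 5 A drawn in total.
    taps = rail_ir_drop(1.0, [0.01] * 4, [2.0, 1.0, 1.0, 1.0])
    print([round(v, 3) for v in taps])
    print("worst-case IR drop:", round(1.0 - min(taps), 3), "V")
```

The drop accumulates along the rail, so with these numbers the far tap sits about 0.11 V below the 1 V supply. As Dally describes it, Nvidia's approach instead trains a model on many already-solved designs so it can predict the result straight from the power map.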
Dally mentions other ways AI can be handy for developing next-generation chips. One is predicting parasitics: unwanted resistance, capacitance, and other electrical effects that a circuit's physical layout introduces, which can make a design slower or less efficient, or simply cause it to not work as intended. Rather than spending human work hours scoping these out, it's possible to reduce the number of steps required in designing circuits by having an AI do it. Sort of like a digital parasitic sniffer dog.
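To see why parasitics matter, here is a minimal sketch using the Elmore delay, a standard first-order estimate of how long a signal takes to travel through a network of resistors and capacitors. The driver resistance, wire segments, and capacitances are hypothetical round numbers, and this is not how Nvidia's AI predicts parasitics; it only shows the kind of calculation extracted parasitic values feed into.

```python
def elmore_delay(seg_res, node_caps):
    """Elmore delay, in seconds, at the far end of an RC ladder.

    seg_res[k]   -- resistance (ohms) between node k-1 (or the driver) and node k
    node_caps[k] -- capacitance to ground (farads) at node k
    """
    delay = 0.0
    upstream_r = 0.0
    for r, c in zip(seg_res, node_caps):
        upstream_r += r          # total resistance from the driver to this node
        delay += upstream_r * c  # each capacitor charges through that resistance
    return delay


if __name__ == "__main__":
    driver_r, load_c = 1000.0, 2e-15  # hypothetical 1 kilohm driver, 2 fF gate load
    ideal = elmore_delay([driver_r], [load_c])
    # Same net including the wire's parasitics: four 50 ohm, 1 fF segments.
    real = elmore_delay([driver_r, 50.0, 50.0, 50.0, 50.0],
                        [0.0, 1e-15, 1e-15, 1e-15, 1e-15 + load_c])
    print(f"without parasitics: {ideal * 1e12:.1f} ps, with: {real * 1e12:.1f} ps")
```

With these numbers the wire's parasitics push the estimated delay from 2 ps to 6.9 ps, more than tripling it, which is why designers need to know about them before committing to a layout.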

Furthermore, Dally explains that AI can aid crucial choices in laying out Nvidia's chips. Think of this job as avoiding traffic jams of wires between transistors (what chip designers call routing congestion) and you'd probably not be that far off. AI may have a future in simply warning designers ahead of time where these traffic jams may occur, which could save heaps of time in the long run.
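Routing congestion can be estimated before any wires are drawn. One classic quick heuristic, RUDY (rectangular uniform wire density), spreads each net's expected wirelength evenly over the bounding box of its pins and sums the results per grid bin. Below is a simplified RUDY-style sketch, not Nvidia's AI model; the grid, the nets, and the per-bin track capacity are all hypothetical.

```python
def congestion_map(nets, grid_w, grid_h):
    """Estimate routing demand per bin with a simplified RUDY-style spread.

    nets -- list of nets, each a list of (x, y) bin coordinates of its pins
    Returns a grid_h x grid_w list of lists holding the estimated wire
    demand (in routing tracks) for each bin.
    """
    demand = [[0.0] * grid_w for _ in range(grid_h)]
    for pins in nets:
        xs = [x for x, _ in pins]
        ys = [y for _, y in pins]
        x0, x1, y0, y1 = min(xs), max(xs), min(ys), max(ys)
        # Half-perimeter wirelength: a common quick estimate of how much
        # wire the net needs, measured in bin pitches.
        hpwl = (x1 - x0) + (y1 - y0)
        share = hpwl / ((x1 - x0 + 1) * (y1 - y0 + 1))
        # Spread that wire evenly over every bin in the net's bounding box.
        for y in range(y0, y1 + 1):
            for x in range(x0, x1 + 1):
                demand[y][x] += share
    return demand


def hotspots(demand, capacity):
    """Bins whose estimated demand exceeds the available routing tracks."""
    return [(x, y) for y, row in enumerate(demand)
            for x, d in enumerate(row) if d > capacity]


if __name__ == "__main__":
    nets = [
        [(0, 0), (3, 0)],  # long horizontal net
        [(0, 0), (1, 1)],
        [(1, 0), (1, 3)],  # long vertical net
        [(0, 1), (2, 1)],
    ]
    demand = congestion_map(nets, grid_w=4, grid_h=4)
    print("bins over capacity:", hotspots(demand, capacity=1.5))
```

Here the bins (1, 0) and (1, 1), where several nets overlap, come out over capacity: the kind of early "traffic jam" warning Dally describes, though in Nvidia's case the prediction comes from AI rather than a fixed formula like this one.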

Perhaps the most interesting of all the use cases Dally explains is in automating standard cell migration. Okay, it doesn't sound all that interesting, but it actually is. Essentially, it's a way of automating the process of migrating a cell, a fundamental building block of a computer chip, to a newer process node.

"So each time we get a new technology, say we’re moving from a seven nanometer technology to a five nanometer technology, we have a library of cells. A cell is something like an AND gate and OR gate, a full adder. We’ve got actually many thousands of these cells that have to be redesigned in the new technology with a very complex set of design rules," Dally says.

"We basically do this using reinforcement learning to place the transistors. But then more importantly, after they’re placed, there are usually a bunch of design rule errors, and it goes through almost like a video game. In fact, this is what reinforcement learning is good at. One of the great examples is using reinforcement learning for Atari video games. So this is like an Atari video game, but it’s a video game for fixing design rule errors in a standard cell. By going through and fixing these design rule errors with reinforcement learning, we’re able to basically complete the design of our standard cells."

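Dally's video-game comparison can be illustrated with a deliberately tiny toy: three unit-wide shapes on an eight-slot track, one hypothetical spacing rule, and tabular Q-learning, the simplest textbook reinforcement learning algorithm. The agent earns a reward for clearing every rule violation and loses a point for each move. This is not NVCell, which works on real two-dimensional cell layouts with a very complex rule deck; the track size, rule, rewards, and learning parameters are all assumptions made for illustration.

```python
import random

TRACK = 8      # hypothetical track length, in grid units
MIN_PITCH = 2  # hypothetical rule: neighbouring shapes must sit >= 2 units apart
# Each action nudges one of the three shapes one unit left or right.
ACTIONS = [(shape, move) for shape in range(3) for move in (-1, 1)]


def violations(layout):
    """Toy design rule check: count neighbouring shapes that are too close."""
    return sum(1 for a, b in zip(layout, layout[1:]) if b - a < MIN_PITCH)


def step(layout, action):
    """Apply one move; return (next_layout, reward, done)."""
    shape, move = action
    pos = list(layout)
    target = pos[shape] + move
    # Moves off the track or onto another shape leave the layout unchanged.
    if 0 <= target < TRACK and target not in pos:
        pos[shape] = target
    nxt = tuple(pos)
    done = violations(nxt) == 0
    return nxt, (10.0 if done else -1.0), done


def greedy(q, layout):
    """Best-known action for a layout (ties go to the first action listed)."""
    return max(ACTIONS, key=lambda a: q.get((layout, a), 0.0))


def train(start, episodes=3000, alpha=0.5, gamma=0.9, epsilon=0.3, seed=0):
    """Tabular Q-learning with an epsilon-greedy exploration policy."""
    rng = random.Random(seed)
    q = {}  # (layout, action) -> estimated return; missing entries count as 0
    for _ in range(episodes):
        layout = start
        for _ in range(50):  # cap the episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = greedy(q, layout)
            nxt, reward, done = step(layout, action)
            if done:
                td_target = reward
            else:
                td_target = reward + gamma * max(q.get((nxt, a), 0.0) for a in ACTIONS)
            old = q.get((layout, action), 0.0)
            q[(layout, action)] = old + alpha * (td_target - old)
            layout = nxt
            if done:
                break
    return q


def fix_layout(q, start, max_steps=10):
    """Follow the learned policy until the layout is clean (or give up)."""
    path = [start]
    while violations(path[-1]) and len(path) <= max_steps:
        nxt, _, _ = step(path[-1], greedy(q, path[-1]))
        path.append(nxt)
    return path


if __name__ == "__main__":
    start = (2, 3, 4)  # three shapes crammed together: two violations
    q = train(start)
    path = fix_layout(q, start)
    print(path, "violations left:", violations(path[-1]))
```

Like a game-playing agent, it never gets the rules spelled out as a fix-up recipe; it learns which moves lead to a clean layout purely from the rewards, which is the property Dally is pointing to.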
The tool Nvidia uses for this automated cell migration is called NVCell, and reportedly 92% of the cell library can be migrated by this tool without errors. Of those automatically migrated cells, 12% came out smaller than their human-designed counterparts.

"This does two things for us. One is it’s a huge labor saving. It’s a group on the order of 10 people will take the better part of a year to port a new technology library. Now we can do it with a couple of GPUs running for a few days. Then the humans can work on those 8 percent of the cells that didn’t get done automatically. And in many cases, we wind up with a better design as well. So it’s labor savings and better than human design."

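Here is how Dally's percentages fit together, sketched for a hypothetical library of 5,000 cells (the article only says "many thousands") and reading the 12% figure as a share of the automatically migrated cells.

```python
def migration_split(library_size, auto_pct=92, smaller_pct=12):
    """Split a cell library using the NVCell figures quoted in the article.

    auto_pct    -- share of cells migrated with no errors (92%)
    smaller_pct -- share of those auto-migrated cells smaller than the
                   human-designed versions (12%)
    Integer arithmetic rounds each count down.
    """
    auto = library_size * auto_pct // 100
    manual = library_size - auto          # left for human designers
    smaller = auto * smaller_pct // 100   # auto cells smaller than hand-drawn ones
    return auto, manual, smaller


if __name__ == "__main__":
    auto, manual, smaller = migration_split(5000)  # hypothetical library size
    print(f"{auto} migrated automatically, {manual} left for humans, "
          f"{smaller} smaller than the human designs")
```

For that hypothetical library, humans would only need to tackle around 400 cells (the 8 percent Dally mentions) rather than redrawing all 5,000.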
So Nvidia's using AI accelerated by its own GPUs to accelerate its GPU development. Nice. And most of these techniques could prove useful in any form of chipmaking, not just GPUs.

It's a clever use of time for Nvidia: developing these AI tools for its own use not only speeds up its own processes, it also lets it better sell the benefits of AI to the very customers it supplies with GPUs for accelerating AI. So I imagine Nvidia sees it as a win-win scenario.

You can check out the full talk with Dally over on the Nvidia website, though you will need to sign up to Nvidia's Developer Program to do so.

Jacob earned his first byline writing for his own tech blog. From there, he graduated to professionally breaking things as hardware writer at PCGamesN, and would go on to run the team as hardware editor. He joined PC Gamer's top staff as senior hardware editor before becoming managing editor of the hardware team, and you'll now find him reporting on the latest developments in the technology and gaming industries and testing the newest PC components.