DLSS 2.0 tested: why you should finally turn on Nvidia's AI-powered upscaling

We let Nvidia's super sampling artificial intelligence take the wheel in Wolfenstein: Youngblood and Control.

Nvidia has officially released the second generation of Deep Learning Super Sampling, otherwise known as DLSS 2.0. It promises superior image quality with the new and improved AI image bot, along with vital integration upgrades that should make for a swift turnaround when adding game support. All of which sounds like just what DLSS requires to really make a name for itself.

In order to do that it needs to deliver higher frame rates, through the power of AI upscaling, with minimal impact on visual fidelity. That is, after all, what was promised with DLSS back at the RTX 20-series launch in August 2018. The initial AI-based super resolution algorithm did deliver a hearty increase in frame rates but lost a great deal in picture clarity. As a result, the performance-enhancing feature, which was often key to bearable frame rates with ray tracing effects turned on, was left on the sidelines.

So what's new with DLSS 2.0, and why should you take another look at Nvidia's technology? Perhaps the biggest enhancement to Nvidia's neural network is the new set of temporal feedback techniques, which the company says can deliver sharper image details and improved stability frame to frame. Nvidia claims that should soothe a lot of its users' complaints.

But DLSS also requires support to gain traction. To lessen the burden on its own team (which has already put "blood, sweat, and tears" into DLSS), Nvidia has removed the requirement to train an AI network on a per-game basis. The network still needs to be trained, and support still has to be added to each game individually, but the training can now be done with non-game-specific information. That delivers the all-important flexibility that any fledgling game technology requires to get up and running across the industry.

An RTX 20-series graphics card is still required for DLSS, however, which will keep the technology at arm's length for some. DLSS requires these cards' AI-accelerating Tensor Cores, which the Nvidia Turing architecture integrates into the die for AI workloads. You won't get far without them, unfortunately.

But really what matters most of all is how DLSS 2.0 shapes up in-game. To answer that, I've put it to the test in two of the four currently supported titles: Control and Wolfenstein: Youngblood. One game offering the very best RTX implementation available today, and the other an up-to-date engine making the most of ray-traced reflections. Plus Wolfenstein's DLSS implementation is easy to use by comparison, a relative godsend in these times.

Never one for modesty, I've opted for an Nvidia GeForce RTX 2080 in the test rig, fitted with 2,944 CUDA Cores and 368 Tensor Cores. That's paired with an Intel Core i7 9700K at stock speeds, 16GB of DDR4-2400 memory, and a pleasingly speedy WD Black NVMe SSD.

Yet even an RTX 2080 can start to sweat with RTX on max at 1440p, especially when you're after 144Hz refresh rates. That brings us onto the two main pillars of DLSS: performance and quality. You're always going to be striking a balance between the two, as ever.

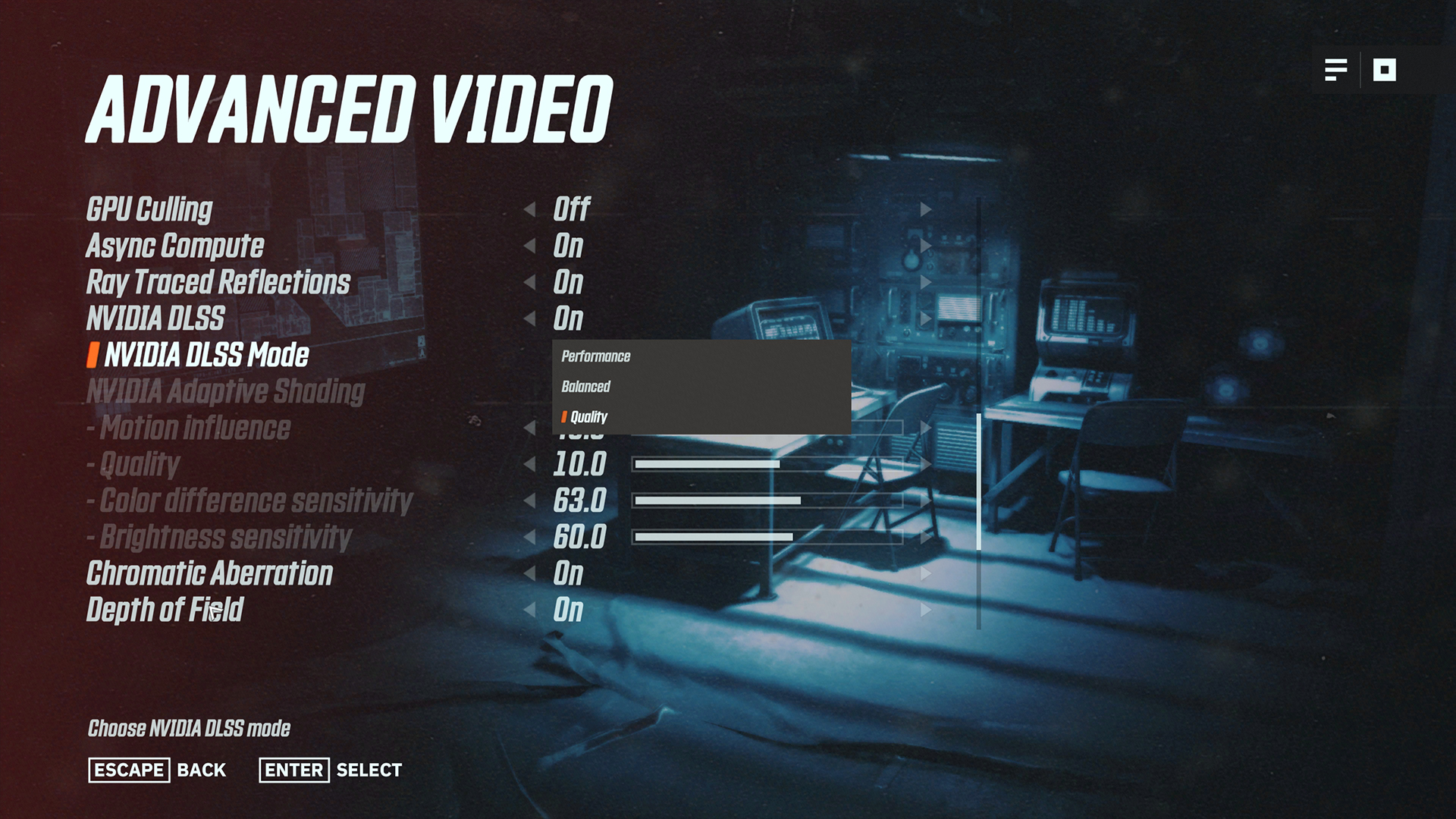

With DLSS 2.0, Nvidia has integrated that very thinking into the options menu. It's obviously signposted in Wolfenstein: Youngblood, for example. Once DLSS is enabled, three tiers open up: Performance, Balanced, and Quality.

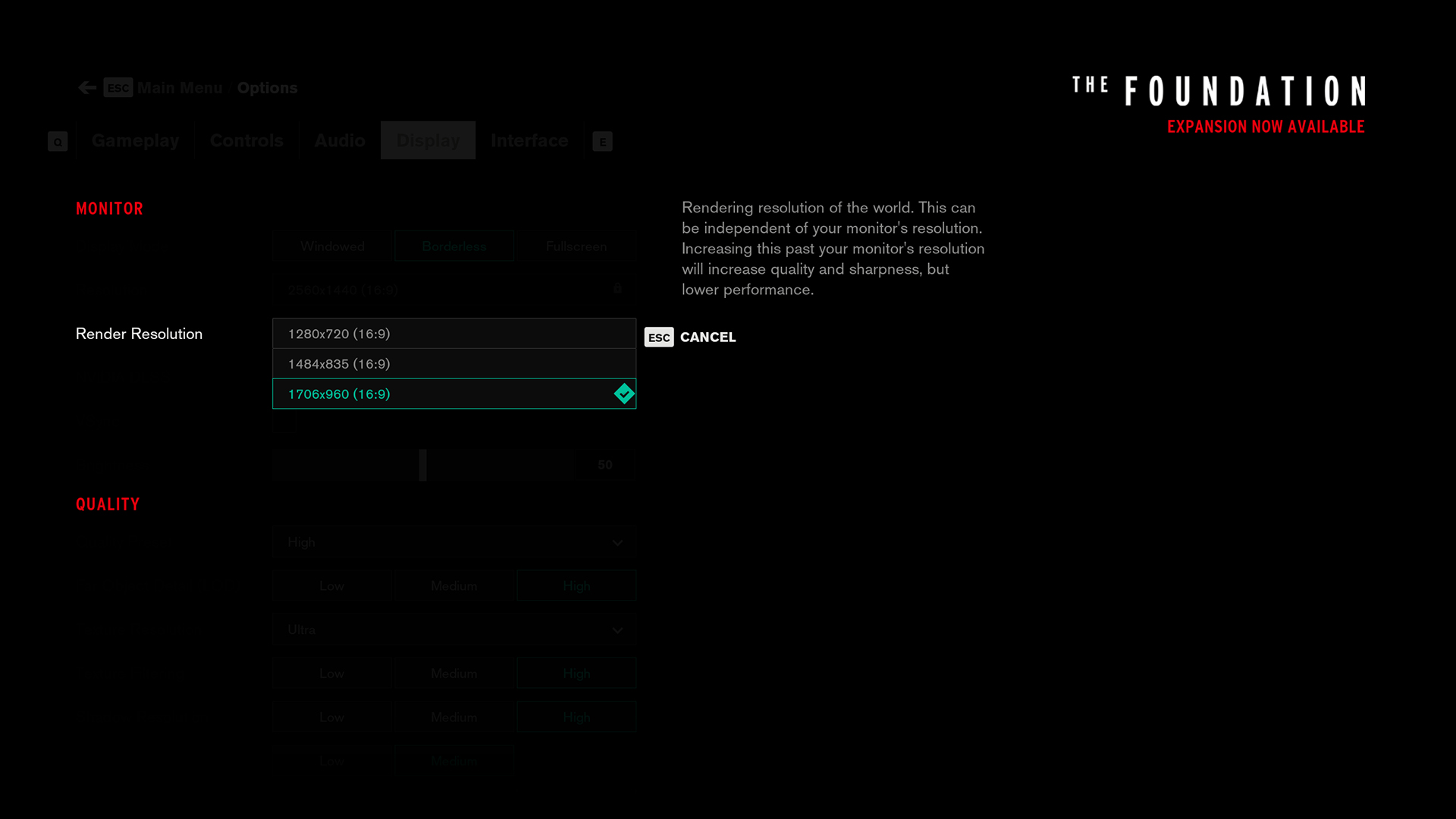

In Control the DLSS signage doesn't shine quite so bright. The three tiers still exist, they're just down as three render resolutions, which is far from intuitive. With an output resolution of 1440p (this will depend on your monitor resolution), we can select between three presets: 1280x720, 1484x835, and 1706x960. These are then upscaled to your output resolution through the magic of AI and DLSS. Each value effectively represents one of the tiers: 1280x720 is equal to Performance, 1484x835 is Balanced, and 1706x960 is Quality. The lower the resolution, the worse the fidelity of the final image—but also the lower the load on the GPU, ergo greater performance.
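To see how those three presets relate to the output resolution, the per-axis render scale can be worked out from the numbers above. This is only a sketch of the arithmetic: it assumes "1440p" means 2560x1440, and the names `OUTPUT`, `PRESETS`, and `axis_scale` are mine for illustration, not anything exposed by Control or by Nvidia.

```python
# Assumption: "1440p" output is 2560x1440.
OUTPUT = (2560, 1440)

# The three render resolutions Control offers at 1440p, as listed in the article.
PRESETS = {
    "Performance": (1280, 720),
    "Balanced": (1484, 835),
    "Quality": (1706, 960),
}


def axis_scale(render, output):
    """Return the render width as a fraction of the output width."""
    return render[0] / output[0]


# Per-axis scale for each tier, rounded to three decimal places.
scales = {tier: round(axis_scale(res, OUTPUT), 3) for tier, res in PRESETS.items()}

for tier, scale in scales.items():
    print(f"{tier}: {scale:.1%} of the output width")
```

By this reckoning Performance renders at half the output width, Balanced at roughly 58%, and Quality at roughly two-thirds.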

If your output resolution is set to 1080p or 4K, the three resolutions available to choose from will differ from those listed above. Not to worry, just pick the lowest resolution for best performance, and the highest resolution for the best quality.

Nvidia is touting 4x upscaling with DLSS 2.0. Meaning, if you were to set your render resolution to 1080p and your output resolution to 4K, three out of every four of those pixels on screen will be generated by a neural network. Spooky, right?
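The "three out of every four" figure is simple pixel counting, and a quick sketch makes it concrete. It assumes 1080p is 1920x1080 and 4K is 3840x2160; the function names are hypothetical helpers for this example only.

```python
def upscale_factor(render, output):
    """Ratio of output pixels to rendered pixels."""
    return (output[0] * output[1]) / (render[0] * render[1])


def generated_fraction(render, output):
    """Share of output pixels with no directly rendered counterpart."""
    return 1 - 1 / upscale_factor(render, output)


# Assumption: 1080p = 1920x1080 and 4K = 3840x2160.
factor = upscale_factor((1920, 1080), (3840, 2160))
fraction = generated_fraction((1920, 1080), (3840, 2160))

print(f"{factor:.0f}x upscale, {fraction:.0%} of pixels filled in")
```

The same two functions work for any render and output pair, including the Control presets listed earlier.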

Let's start with Control. Like I said, I truly believe this is one of the best examples of ray tracing in games today. Let's see if that still stands when Minecraft with RTX rolls around later this year, but for now let's stick with what we know.

With Nvidia's latest driver package, 445.75, which also optimises for DLSS 2.0, our Control benchmark proved a demanding task for our RTX 2080. The run takes us through the Oldest House, ducking and diving into combat with a select few enemies in an explosive environment. At the 'high' graphics preset it managed just 57fps on average for the run, with a 47fps minimum (99th percentile). That's with ray tracing turned off, too.

| Rendering mode | Avg (fps) | Min (fps, 99th percentile) |
|---|---|---|
| Native (445.75) | 57 | 47 |
| DLSS 2.0 Performance (1280x720) | 124 | 99 |
| DLSS 2.0 Balanced (1484x835) | 107 | 78 |
| DLSS 2.0 Quality (1706x960) | 91 | 74 |

Control benchmarks were carried out at 1440p, high preset, with RTX disabled.

Compare that to DLSS 2.0 in Performance mode at 124fps average and 99fps minimum, and the benefit of having Nvidia's neural network do all the heavy lifting is clear. Yet that's nothing new. DLSS 1.0 sure cranked up the frame rate, but it was unable to do so without reducing your crystal clear image into a shadow of its former self. The same holds true in many respects for DLSS 2.0's Performance mode. Favouring frame rate over fidelity, it still washes the scene in a little too much fuzz for my liking, and I'd be hesitant to leave it on throughout a 10+ hour title, mostly for fear of missing out on experiencing the best of a world the devs spent so long creating.

That's where DLSS 2.0 Quality mode comes in. Each frame takes a little more time to process, but, in doing so, Nvidia's AI net is able to run a fine-tooth comb through the scene. It uses this time to carefully select a polygon here, or a striation of hair there, and upscales them with the utmost accuracy.

The results of DLSS 2.0 in Quality mode are something quite spectacular in Control. Strong detail emerges from even the background of the scene, such as the tent guy lines in the rear of the frame, or the cracks in Jesse's leather jacket that add a few more months of wear and tear to her outfit. Hair's still a sticking point, however, and aliasing where the AI has struggled to upscale the source material shows there's room to deliver more computer smarts per pixel in the future.

Yet with a performance boost in the 60% range between DLSS 2.0 Quality and Native 1440p rendering, some foibles can be ignored. It's the difference between sub-60fps and resoundingly smooth gameplay even in the worst case scenario: native 1440p at 57fps average, and 47fps minimum; DLSS 2.0 quality at 91fps average, and 74fps minimum.

Control also offers us an opportunity to play spot the difference first-hand between a native 720p image and a scene upscaled from 720p to 1440p with DLSS 2.0 (Performance mode). Compare the two, and it's quite remarkable how the neural network is able to generate the information required to fill in the gaps between the native 720p frame and the upscaled 1440p one.

Let's shift our attention over to Wolfenstein: Youngblood, where the daunting weight of an impending AI super-intelligence inflection point bears down to almost dizzying effect. At least that's my experience so far. It's a sentiment shared by many of the colleagues I asked to pick out the natively rendered scene from the DLSS-upscaled one, with mixed results.

The AI's proclivity for recreating a million piece jigsaw puzzle with only a handful of the pieces available, and with near-perfect accuracy, is little short of startling.

As with Control, Wolfenstein's Performance mode (which is labelled clearly as such in the settings menu) delivers an admirable performance gain over native 1440p, ultra preset rendering. We're testing this title with RTX on, across both the Riverside and Lab X benchmark runs and averaging the results. While native rendering manages a steady 79fps average and 56fps minimum (near enough the bare minimum we'd want to achieve), with DLSS 2.0 Performance enabled those figures shot up to 127fps and 85fps. If only the resulting image wasn't quite so fuzzy, we'd leave it there.

Balanced mode offers moderate improvements to the overall image quality, but once again leaves a hint of unwanted myopia to a scene—and I've enough of that already, thank you. Instead you'll want to be eyeing up Quality mode: this is where Wolfenstein: Youngblood and DLSS 2.0's gears mesh in perfect sync.

From a native average of 79fps, the Quality setting offers a 34% performance uplift to 106fps. Similarly, you'll only see drops down to 79fps as opposed to native's 56fps. That alone wouldn't be too convincing if that shortsightedness was still enveloping the background. However, it's dissipated to an all too true image for something dreamed up by some neural net.

| Rendering mode | Avg (fps) | Min (fps) |
|---|---|---|
| Native (445.75) | 79 | 56 |
| DLSS 2.0 Performance | 127 | 85 |
| DLSS 2.0 Balanced | 117 | 85 |
| DLSS 2.0 Quality | 106 | 79 |

Wolfenstein: Youngblood benchmarks were carried out at 1440p, Ultra preset, with ray-traced reflections enabled.
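For anyone wanting to check the percentage gains against the raw numbers, the uplift for each tier follows directly from the average frame rates in the two benchmark tables. A minimal sketch, with the dictionary and function names invented for illustration:

```python
# Average fps from the two benchmark tables in this article.
CONTROL = {"native": 57, "Performance": 124, "Balanced": 107, "Quality": 91}
WOLFENSTEIN = {"native": 79, "Performance": 127, "Balanced": 117, "Quality": 106}


def uplift_percent(native_fps, dlss_fps):
    """Percentage gain of a DLSS result over native rendering."""
    return (dlss_fps / native_fps - 1) * 100


def uplifts(results):
    """Rounded uplift for every DLSS tier relative to the native average."""
    native = results["native"]
    return {
        tier: round(uplift_percent(native, fps))
        for tier, fps in results.items()
        if tier != "native"
    }


control_uplifts = uplifts(CONTROL)
wolfenstein_uplifts = uplifts(WOLFENSTEIN)

print("Control:", control_uplifts)
print("Wolfenstein: Youngblood:", wolfenstein_uplifts)
```

On these averages, Performance mode works out to a 118% gain in Control and 61% in Wolfenstein: Youngblood, while Quality mode lands at 60% and 34% respectively.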

Between DLSS 2.0 Quality and native rendering few of the telltale upscaling signs remain. Gratings, perforated objects, and other complex, repeated geometry are all but immaculately rendered copies of their native selves. Character models are clearly distinguishable from their surroundings, and even objects off in the distance are remarkably detailed for what can only be the result of an AI's best guess.

What's truly surprising is that, true to Nvidia's word in some ways, the DLSS 2.0 scene is often a little more clearly defined than its native counterpart. I know, who saw that coming? Due to the intensive, heavy-handed temporal super sampling anti-aliasing (TSSAA) set as standard on Wolfenstein's Ultra preset, some textures, when viewed at certain oblique angles from a distance, lose their crisp detail. For example, the lines distinguishing the tiling on the floor in front of a row of computer screens in the distance. Or the cladding above the first staircase to the right of the scene.

You could argue that, in some respects, the native resolution is truer to the source of what the developers intended, and therefore correct. Because, well, it is. And you're right. But in some places the native scene trips up in its need to apply further anti-aliasing to a scene. The below screenshots show the difference between three AA techniques in-game: TSSAA 8TX, SMAA 1TX, and FXAA 1TX. The latter two tend to blur the overall scene, foreground and background, to a greater extent than TSSAA, whereas TSSAA only tends to lose clarity the further into the background you explore.

Frankly, all this only goes to prove one thing: I'm fickle, and I think a lot of you might be too when it comes to your game's visuals. I won't accept less than the limit for my hardware, no matter how worthy the numbers may look on paper. And they should be convincing enough. Just look at those performance improvements: 61% in Wolfenstein: Youngblood, 118% in Control. Despite my best interests when it comes to hitting my monitor's lofty refresh rates, I want to enjoy that game world with all its nuance and finely-crafted detail to its fullest without some newfangled AI coming in and messing it all up.

Few of us seem willing to make that visual sacrifice, as evidenced by first-gen DLSS and the tepid response its limited first-wave release received. And so a purely performance-focused DLSS would falter, and has faltered, in gaining traction across the industry and, most importantly, with us PC gamers at large.

Rather it's the performance uplift, at little to no expense, delivered by DLSS 2.0's Quality preset that could, and I would like to think will, gain momentum. They say there's no such thing as free performance, but I think DLSS 2.0 and its successors will be as close as you can get once the necessary silicon is pervasive across an entire GPU lineup. And the progenitor to all that is Wolfenstein: Youngblood.

Which does make you wonder what else AI and machine learning can achieve in gaming. And how long can AMD avoid such technology when the pace of improvement in neural network upscaling is so rapid year-over-year? An industry in waiting need only implement DLSS effectively across a majority of upcoming titles for Nvidia to be able to tack on a performance improvement of some 60% without ever even touching its silicon.

I don't doubt AMD will strike back in kind with a Radeon product to match, perhaps via the GPUOpen initiative. It's already got the workings of such a thing in FidelityFX Contrast Adaptive Sharpening (CAS).

Nvidia also has some hurdles to clear before anything like DLSS becomes ubiquitous. Most of all, implementation. We'll have to keep an eye on every nuanced use of the tech to see if they can shimmy under the bar set by Wolfenstein, and don't instead end up kicking out their heels and collapsing in a fuzzy heap. There's also the issue of how you convince users, no matter the results, to switch DLSS on in the first place. We can't exactly have it on by default. After all, the market's not dominated by RTX 20-series cards capable of running it. What's a GPU giant to do?

Which brings us onto another big hurdle, and that's of course the problem of getting silicon capable of DLSS into players' gaming PCs. It's up to Nvidia to deliver with its next generation of graphics cards, and the cost of entry has to be lower than it is today with the $299 RTX 2060.

Still, there's certainly something to be said for DLSS 2.0 and the team responsible for it at Nvidia. After a limited initial run with DLSS, I thought it surely a feature to be marked as tried and tested then left to wither on the vine. Instead, DLSS 2.0 is an effective and immediate gateway to a gratuitous performance bump with little to no visual impact. And its growing availability across a number of graphics cards and games could signal a groundswell in AI applications across gaming yet to come.

Jacob earned his first byline writing for his own tech blog. From there, he graduated to professionally breaking things as hardware writer at PCGamesN, and would go on to run the team as hardware editor. He joined PC Gamer's top staff as senior hardware editor before becoming managing editor of the hardware team, and you'll now find him reporting on the latest developments in the technology and gaming industries and testing the newest PC components.