Nvidia's bringing its AI avatars to games and they can interact with players in real-time. With voiced dialogue. And facial animations

Nvidia announces ACE for Games at Computex 2023.

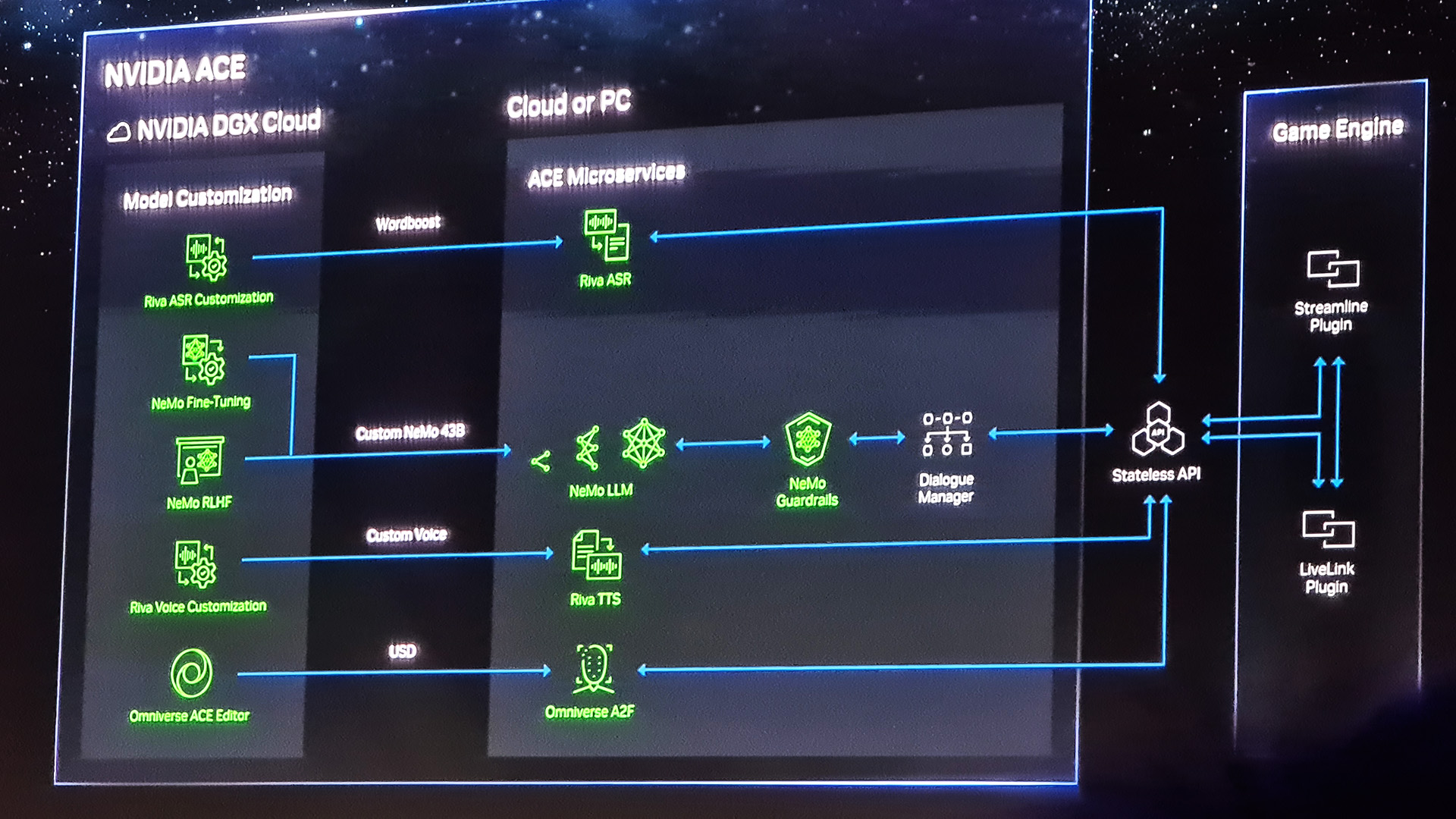

At its Computex keynote, Nvidia has just announced ACE for Games, a version of its Omniverse Avatar Cloud Engine that animates and gives a voice to in-game NPCs in real-time.

CEO Jensen Huang explained that ACE for Games integrates text-to-speech, natural language understanding (or in Huang's words, "basically a large language model") and an automatic facial animator, all under the ACE umbrella.

Essentially, an AI-driven NPC will listen to a player's input, such as a question put to the NPC, then generate an in-character response, say that dialogue out loud, and animate the NPC's face as it speaks.
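For the curious, here's a rough sketch of how that listen, respond, speak, animate loop could be wired together. To be clear, this is not Nvidia's API: every name below (`run_npc_turn`, `NpcTurn`, and the three `fake_*` stages) is a hypothetical placeholder, and the stand-in stages only shuffle strings around where the real language model, text-to-speech, and facial animation models would sit.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class NpcTurn:
    """Everything produced for one NPC response (hypothetical structure)."""
    reply_text: str         # in-character line from the language model stage
    audio: bytes            # synthesized speech for that line
    face_frames: List[str]  # animation cues derived from the audio


def run_npc_turn(
    player_input: str,
    persona: str,
    generate_reply: Callable[[str, str], str],
    synthesize_speech: Callable[[str], bytes],
    animate_face: Callable[[bytes], List[str]],
) -> NpcTurn:
    """Chain the three stages in order: text reply, then speech, then face."""
    reply = generate_reply(persona, player_input)
    audio = synthesize_speech(reply)
    frames = animate_face(audio)
    return NpcTurn(reply_text=reply, audio=audio, face_frames=frames)


# Placeholder stages (assumptions for illustration only, not real models).
def fake_reply(persona: str, player_input: str) -> str:
    return f"[{persona}] You asked: {player_input}"


def fake_tts(text: str) -> bytes:
    # Pretend the UTF-8 bytes of the text are the audio signal.
    return text.encode("utf-8")


def fake_face(audio: bytes) -> List[str]:
    # Emit one made-up animation cue per 8 bytes of "audio", at least one.
    cue_count = max(1, len(audio) // 8)
    return [f"cue_{i}" for i in range(cue_count)]


if __name__ == "__main__":
    turn = run_npc_turn(
        "How's business?", "ramen shop owner", fake_reply, fake_tts, fake_face
    )
    print(turn.reply_text)
    print(len(turn.audio), "bytes of audio,", len(turn.face_frames), "face cues")
```

Because each stage is passed in as a plain function, any one of them could in principle run locally or be swapped for a call to a remote service without touching the rest of the chain.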

Huang also showed off the technology in a real-time demo built in Unreal Engine 5 with AI startup Convai. It has a cyberpunk setting, because of course it does (sorry, Katie), and shows a player walking into a ramen shop and talking to the owner. The owner has no scripted dialogue but responds to the player's questions in real-time and sends them off on a makeshift mission.

You can watch the demo for yourself here.

It's pretty impressive, and undoubtedly a look into how games may utilise this technology in the future. As Huang said, "AI will be a very big part of the future of videogames."

Of course, he would say that. Nvidia is the company that stands to gain the most from the sudden surge in AI demand, through sales of its AI accelerators. And we have seen some basic integrations of ChatGPT into games already, like when Chris added it to his Skyrim companion and it failed to solve a simple puzzle. But this new ACE platform does appear a lot more polished and properly real-time.

What we don't know is what it took to run the ACE for Games demo, only that it was also running ray tracing and DLSS. It could require more than your average GeForce GPU to run right now, or it could rely on a cloud-based component. Huang was a bit light on the details, but I'm sure we'll hear more about this tool once games actually start to use it.

"The neural networks enabling NVIDIA ACE for Games are optimized for different capabilities, with various size, performance and quality trade-offs. The ACE for Games foundry service will help developers fine-tune models for their games, then deploy via NVIDIA DGX Cloud, GeForce RTX PCs or on premises for real-time inferencing," Nvidia says.

"The models are optimized for latency—a critical requirement for immersive, responsive interactions in games."

Latency is going to be a big one here. I'd hate to be subjected to the NPC equivalent of an awkward pause as it loads in its response from the cloud.

So far, Nvidia has confirmed two games using Audio2Face, the facial animation component of ACE for Games. Those are S.T.A.L.K.E.R. 2: Heart of Chernobyl and Fallen Leaf, but hopefully we'll get some examples of the whole platform combined. I'd be keen to see the tech in action outside of a demo.

Jacob earned his first byline writing for his own tech blog, before graduating into breaking things professionally at PCGamesN. Now he's managing editor of the hardware team at PC Gamer, and you'll usually find him testing the latest components or building a gaming PC.