New ChatGPT bot is out, promises to hallucinate less

Prepare for maybe a bit less gaslighting from your newest AI companion.

OpenAI is bringing us GPT-4, the next evolution of everyone's favourite chatbot, ChatGPT. On top of a more advanced language model that "exhibits human-level performance on various professional and academic tests", the new version accepts image inputs and promises more stringent refusal behaviour to stop it from fulfilling your untoward requests.

The accompanying GPT-4 Technical Report (PDF) warns, however, that the new model still has a relatively high capacity for what the researchers are calling "hallucinations". Which sounds totally safe.

What the researchers mean when they refer to hallucinations is that the new ChatGPT model, much like the previous version, has the tendency to "produce content that is nonsensical or untruthful in relation to certain sources."

The researchers do make it clear, though, that "GPT-4 was trained to reduce the model’s tendency to hallucinate by leveraging data from prior models such as ChatGPT." Not only are they training it on its own fumbles, then, but they've also been training it through human evaluation.

"We collected real-world data that had been flagged as not being factual, reviewed it, and created a ’factual’ set for it where it was possible to do so. We used this to assess model generations in relation to the ’factual’ set, and facilitate human evaluations."

The process appears to have helped significantly when it comes to closed topics, though the chatbot is still having trouble when it comes to the broader strokes. As the paper notes, GPT-4 scores 29 percentage points higher than GPT-3.5 at avoiding 'closed-domain' hallucinations, but only 19 percentage points higher at avoiding 'open-domain' hallucinations.
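
A figure like this can be read two ways: as a gain in percentage points or as a relative improvement, and the two can differ a lot. Here's a minimal Python sketch of the difference, assuming a score is simply the share of responses that human reviewers did not flag. The `avoidance_rate` helper and every label below are invented for illustration; none of it comes from OpenAI's report or reflects how its evaluation was actually run.

```python
def avoidance_rate(labels):
    """Percentage of responses that reviewers did NOT flag as hallucinated."""
    if not labels:
        raise ValueError("need at least one labelled response")
    clean = sum(1 for flagged in labels if not flagged)
    return 100.0 * clean / len(labels)


# Hypothetical review results: True means the response was flagged as a hallucination.
older_model = [True, True, False, True, False, False, True, False, True, False]
newer_model = [False, True, False, False, False, True, False, False, False, False]

old_score = avoidance_rate(older_model)  # 5 of 10 responses clean
new_score = avoidance_rate(newer_model)  # 8 of 10 responses clean

# Percentage-point gain: plain difference between the two scores.
point_gain = new_score - old_score
# Relative gain: the same difference measured against the older score.
relative_gain = 100.0 * point_gain / old_score

print(f"{old_score:.0f}% -> {new_score:.0f}%: "
      f"+{point_gain:.0f} points, {relative_gain:.0f}% relative")
```

With these made-up labels, the newer model is 30 percentage points ahead but 60% better in relative terms, which is why it matters which of the two a headline number refers to.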

ITNEXT explains the difference between open- and closed-domain, in that "Closed-domain QA is a type of QA system that provides answers based on a limited set of information within a specific domain or knowledge base." Open-domain QA systems instead "provide answers based on a vast array of information available on the internet, and is best suited for specific, limited information needs."

So yeah, we're still likely to see GPT-4 straight up lying to us about stuff.

Of course, users are going to be upset about the chatbot feeding them false information, though this isn't the biggest problem. One of the main issues is "overreliance". The tendency to hallucinate "can be particularly harmful as models become increasingly convincing and believable, leading to overreliance on them by users", the paper says.

"Counterintuitively, hallucinations can become more dangerous as models become more truthful, as users build trust in the model when it provides truthful information in areas where they have some familiarity." It's natural for us to trust a source if it's been accurate before, but a broken clock is right twice a day, as they say.

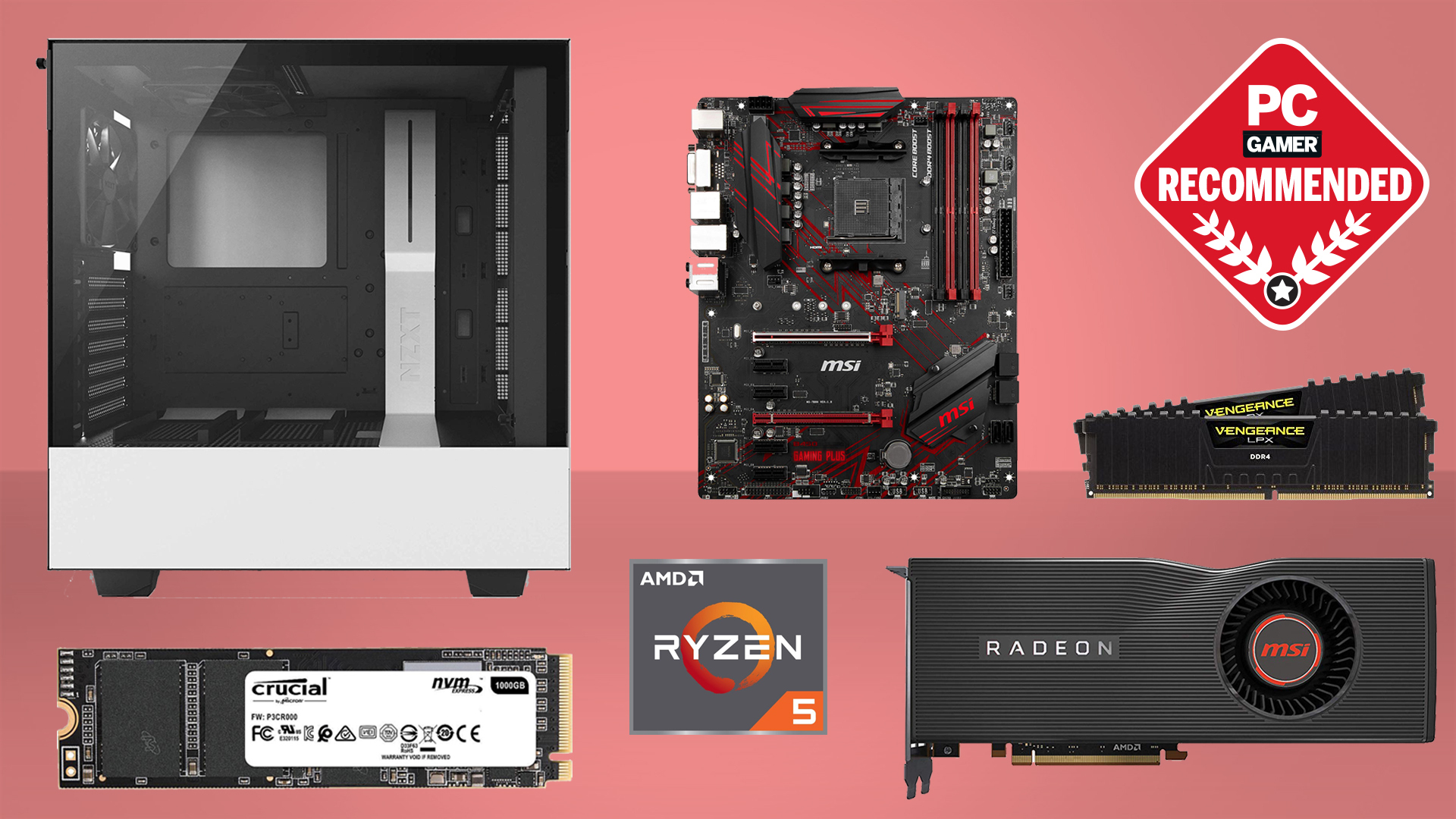

Best CPU for gaming: The top chips from Intel and AMD

Best gaming motherboard: The right boards

Best graphics card: Your perfect pixel-pusher awaits

Best SSD for gaming: Get into the game ahead of the rest

Overreliance becomes particularly problematic when the chatbot is integrated into automated systems that help us make decisions within society. This can cause a feedback loop that can lead to "degradation of overall information quality."

"It’s crucial to recognize that the model isn’t always accurate in admitting its limitations, as evidenced by its tendency to hallucinate."

Issues aside, the devs seem pretty optimistic about the new model, at least according to the GPT-4 overview on the OpenAI site.

"We found and fixed some bugs and improved our theoretical foundations. As a result, our GPT-4 training run was (for us at least!) unprecedentedly stable".

We'll see about that when it starts up with the gaslighting again, though the meltdowns we've been hearing about are mostly coming through Bing's ChatGPT integration.

GPT-4 is available right now for ChatGPT Plus users, though even paying customers should expect the service to be "severely capacity constrained".

Having been obsessed with game mechanics, computers and graphics for three decades, Katie took Game Art and Design up to Masters level at uni and has been writing about digital games, tabletop games and gaming technology for over five years since. She can be found facilitating board game design workshops and optimising everything in her path.