YouTube alone would eat up over 100 times the world's total bandwidth without video compression

Video streaming is way cooler than you thought.

There are plenty of familiar examples of technological marvels. Cramming billions of transistors into a computer chip is pretty amazing, for instance. But did you know just how cool streaming video is?

Intel's Tom Petersen recently gave Gamers Nexus a deep dive into the nuts and bolts of video compression, and the details are genuinely fascinating even if you already had a rough idea of how it all works. For starters, without video compression, YouTube alone would eat up well over 100 times the entire world's total internet bandwidth thanks to the one billion hours of video it serves per day.

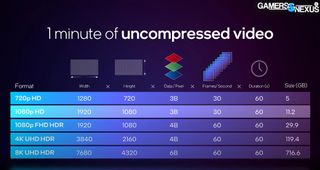

To put some numbers on that, Petersen says the world's current internet bandwidth comes in at 1.2Pbits per second. If you assume streaming at 1080p SDR, YouTube would need 155Pbits per second to stream its content in uncompressed video.

You can multiply that figure by about 10 for full HDR 4K video. So, if YouTube streamed everything in uncompressed 4K HDR, it would require about 1,000 times the world's available internet bandwidth. Wild.
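
For a back-of-the-envelope check on those figures, here is a minimal Python sketch. The one billion hours per day and the 1.2Pbits per second come from the figures above. The 60 frames per second and 30 bits per pixel are assumptions, chosen because they land close to the quoted 155Pbits per second, not values confirmed as the ones Petersen used.

```python
# Rough sanity check of the "uncompressed YouTube" figure.
# ASSUMPTIONS (not stated in the article): 60 fps and 30 bits per pixel.
HOURS_SERVED_PER_DAY = 1_000_000_000   # quoted in the article
WORLD_BANDWIDTH_BPS = 1.2e15           # 1.2 Pbit/s, quoted in the article

WIDTH, HEIGHT = 1920, 1080             # 1080p
FPS = 60                               # assumed frame rate
BITS_PER_PIXEL = 30                    # assumed: 3 channels x 10 bits


def average_concurrent_streams(hours_per_day):
    """Hours of video served per day, spread evenly over 24 hours."""
    return hours_per_day / 24


def raw_bitrate_bps(width, height, fps, bits_per_pixel):
    """Bits per second needed to send every pixel of every frame."""
    return width * height * fps * bits_per_pixel


streams = average_concurrent_streams(HOURS_SERVED_PER_DAY)
per_stream = raw_bitrate_bps(WIDTH, HEIGHT, FPS, BITS_PER_PIXEL)
total_bps = streams * per_stream

print(f"Per stream: {per_stream / 1e9:.2f} Gbit/s")
print(f"All streams: {total_bps / 1e15:.1f} Pbit/s")
print(f"Multiple of world bandwidth: {total_bps / WORLD_BANDWIDTH_BPS:.0f}x")
```

Under those assumptions the sketch lands at roughly 155Pbits per second, or about 130 times the quoted world bandwidth.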

The solution, of course, is video compression. Petersen provides a whistle-stop tour of the five key components of compression. First up is colour downsampling, which relies on the fact that the human eye is more sensitive to luminance than colour and also more sensitive to some colours than others.

That means you can effectively discard some colour data without changing the subjective appearance of an image. That buys you up to two times compression.
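
To see where a figure like two times can come from, here is a small Python sketch of one common scheme, 4:2:0 chroma subsampling, which keeps every brightness sample but only one pair of colour samples per 2x2 block of pixels. It illustrates the general idea and is not necessarily the exact scheme covered in the talk. The function names and the tiny test frame are made up for illustration.

```python
# Sketch of 4:2:0 chroma subsampling on a tiny frame.
# Each pixel is (Y, Cb, Cr): one brightness value and two colour values.
# Assumes the frame width and height are both even.

def subsample_420(frame):
    """Keep every Y sample, but average Cb and Cr over each 2x2 block."""
    height, width = len(frame), len(frame[0])
    luma = [[pixel[0] for pixel in row] for row in frame]
    cb, cr = [], []
    for y in range(0, height, 2):
        cb_row, cr_row = [], []
        for x in range(0, width, 2):
            block = [frame[y + dy][x + dx] for dy in (0, 1) for dx in (0, 1)]
            cb_row.append(sum(p[1] for p in block) // 4)
            cr_row.append(sum(p[2] for p in block) // 4)
        cb.append(cb_row)
        cr.append(cr_row)
    return luma, cb, cr


def sample_count(planes):
    """Total number of stored values across all planes."""
    return sum(len(row) for plane in planes for row in plane)


# A made-up 4x4 frame, purely for illustration.
frame = [[(16 * (x + y), 128 + x, 128 - y) for x in range(4)] for y in range(4)]

original_samples = 4 * 4 * 3            # three values for each of 16 pixels
planes = subsample_420(frame)
compressed_samples = sample_count(planes)

print(original_samples, "->", compressed_samples)   # 48 -> 24
print(original_samples / compressed_samples)        # 2.0
```

The brightness plane is untouched, so the 16 luminance values survive in full while the 32 colour values shrink to eight.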

Next is spatial and temporal compression. One aspect of that means only updating the pixels that change colours from one frame to the next. You can also use vectors to move pixels rather than simply updating their colour data in full, which uses less data. The net result is up to 20x compression.
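
Both ideas can be sketched in a few lines of Python. This is a toy illustration under simplifying assumptions (greyscale frames as lists of lists, an exhaustive search over a tiny window), and the function names are hypothetical. Real encoders are far more sophisticated.

```python
# Toy temporal compression: changed-pixel deltas and a motion vector search.

def encode_delta(previous, current):
    """List (row, col, new_value) for every pixel that differs between frames."""
    return [
        (r, c, value)
        for r, row in enumerate(current)
        for c, value in enumerate(row)
        if value != previous[r][c]
    ]


def apply_delta(previous, delta):
    """Rebuild the current frame from the previous frame plus the delta."""
    frame = [row[:] for row in previous]
    for r, c, value in delta:
        frame[r][c] = value
    return frame


def best_motion_vector(previous, current, top, left, size, search=2):
    """Find the (dy, dx) offset into `previous` that best matches a block of `current`."""
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            r0, c0 = top + dy, left + dx
            if r0 < 0 or c0 < 0 or r0 + size > len(previous) or c0 + size > len(previous[0]):
                continue  # candidate block would fall outside the frame
            cost = sum(
                abs(current[top + r][left + c] - previous[r0 + r][c0 + c])
                for r in range(size)
                for c in range(size)
            )
            if best is None or cost < best[0]:
                best = (cost, dy, dx)
    return best[1], best[2]


def make_frame(block_left):
    """8x8 dark frame with a bright 2x2 block at rows 2-3."""
    frame = [[10] * 8 for _ in range(8)]
    for r in (2, 3):
        for c in (block_left, block_left + 1):
            frame[r][c] = 200
    return frame


previous = make_frame(block_left=2)
current = make_frame(block_left=3)      # the block has moved one pixel right

delta = encode_delta(previous, current)
vector = best_motion_vector(previous, current, top=2, left=3, size=2)

print(len(delta), "of 64 pixels changed")      # 4 of 64 pixels changed
print("motion vector (dy, dx):", vector)       # motion vector (dy, dx): (0, -1)
```

In this example the delta needs four pixel updates, while the motion vector describes the same change as a single offset: copy the block from one pixel to the left in the previous frame.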

From there, things get more complicated and, frankly, difficult to understand. There's some error correction that's required following the spatial and temporal compression. Then comes frequency quantisation.

This is the hardest step in the process to grasp, but it ultimately allows a whole load of fine, high-frequency image detail to be discarded with little visible impact on image quality. Check out the video to find out more, but this step alone can add up to a further 40x compression.
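
A small Python sketch may make the idea easier to grasp. It assumes a discrete cosine transform (DCT), the transform family used by common codecs, applied to a single row of eight pixels. The pixel values and the step sizes are hypothetical, picked only to show the effect, and real codecs work on two-dimensional blocks.

```python
import math

N = 8  # samples per row in this toy example


def dct(signal):
    """Orthonormal DCT-II: turn N pixel values into N frequency coefficients."""
    out = []
    for k in range(N):
        scale = math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)
        total = sum(signal[n] * math.cos(math.pi * (n + 0.5) * k / N) for n in range(N))
        out.append(scale * total)
    return out


def idct(coeffs):
    """Inverse of dct(): turn frequency coefficients back into pixel values."""
    out = []
    for n in range(N):
        total = 0.0
        for k in range(N):
            scale = math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)
            total += scale * coeffs[k] * math.cos(math.pi * (n + 0.5) * k / N)
        out.append(total)
    return out


def quantise(coeffs, steps):
    """Divide each coefficient by its step size and round to an integer."""
    return [round(c / s) for c, s in zip(coeffs, steps)]


def dequantise(levels, steps):
    """Multiply back by the step size; the rounding loss is not recoverable."""
    return [level * s for level, s in zip(levels, steps)]


# A smooth row of brightness values, made up for illustration.
row = [52, 55, 61, 66, 70, 73, 75, 76]
# Hypothetical step sizes: coarser for higher frequencies.
steps = [4, 6, 8, 12, 16, 24, 32, 48]

levels = quantise(dct(row), steps)
restored = [round(v) for v in idct(dequantise(levels, steps))]
max_error = max(abs(a - b) for a, b in zip(row, restored))

print("quantised levels:", levels)
print("restored row:    ", restored)
print("largest error:   ", max_error)
```

For a smooth row like this one, the higher-frequency levels round to zero, so only a few small integers need to be stored, yet the restored row stays close to the original.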

Finally, there's symbol encoding, which essentially involves using abbreviated coding for frequently recurring patterns in the binary image data itself. That can achieve up to two times further compression.
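
Huffman coding is the classic textbook example of this idea, and a minimal Python sketch of it follows. It is used here as a stand-in: modern video codecs generally use more elaborate entropy coders, and the input symbols are made up to resemble quantised values that are mostly zero.

```python
import heapq
from collections import Counter


def huffman_code(symbols):
    """Build a prefix code in which frequent symbols get shorter bit strings."""
    counts = Counter(symbols)
    # Heap entries: (count, unique tie-breaker, {symbol: code built so far})
    heap = [(count, i, {sym: ""}) for i, (sym, count) in enumerate(counts.items())]
    heapq.heapify(heap)
    if len(heap) == 1:
        # Only one distinct symbol: give it a one-bit code.
        return {sym: "0" for sym in heap[0][2]}
    next_id = len(heap)
    while len(heap) > 1:
        # Merge the two least frequent groups, prefixing their codes with 0 and 1.
        count_a, _, codes_a = heapq.heappop(heap)
        count_b, _, codes_b = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in codes_a.items()}
        merged.update({s: "1" + c for s, c in codes_b.items()})
        heapq.heappush(heap, (count_a + count_b, next_id, merged))
        next_id += 1
    return heap[0][2]


# Made-up data: mostly zeros, with a few other values.
data = [0] * 12 + [1] * 3 + [-1] * 2 + [5]

codes = huffman_code(data)
encoded = "".join(codes[s] for s in data)
fixed_bits = len(data) * 2      # 4 distinct symbols need 2 bits each at fixed width

print(codes)
print(len(encoded), "bits versus", fixed_bits, "bits at fixed width")  # 27 versus 36
```

The most common symbol ends up with a one-bit code, which is where the saving comes from.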

And there you have it, your entire compression pipeline. Exactly how much bandwidth YouTube uses after compression is variable depending on the video content and the resolution being streamed.

But YouTube's own guidance for sustained speeds for 4K video is 20Mbps, while 1080p comes in at 5Mbps. That's 2.5MB per second and 0.625MB per second, respectively. So, how does that compare to raw uncompressed video?

At 8-bit colour, regular SDR 1080p would require roughly 185MB/s, while full 4K HDR would clock up a staggering 2GB per second. All pretty mind-numbing numbers.
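
The gap between raw and streamed video can be worked out with a short Python sketch. The frame rates and the 10-bit depth for HDR are assumptions, picked because they reproduce figures close to the ones quoted here. The 5Mbps and 20Mbps values are YouTube's guidance as cited above.

```python
# Rough comparison of raw and streamed data rates.
# ASSUMPTIONS: 30 fps at 8 bits per channel for 1080p SDR,
# and 60 fps at 10 bits per channel for 4K HDR.

def raw_bytes_per_second(width, height, bits_per_channel, fps, channels=3):
    """Bytes per second for uncompressed video."""
    return width * height * channels * bits_per_channel * fps / 8


def mbps_to_bytes_per_second(mbps):
    """Convert megabits per second to bytes per second."""
    return mbps * 1_000_000 / 8


raw_1080p = raw_bytes_per_second(1920, 1080, bits_per_channel=8, fps=30)
raw_4k = raw_bytes_per_second(3840, 2160, bits_per_channel=10, fps=60)

stream_1080p = mbps_to_bytes_per_second(5)
stream_4k = mbps_to_bytes_per_second(20)

print(f"1080p raw: {raw_1080p / 1e6:.1f} MB/s, ratio {raw_1080p / stream_1080p:.0f}:1")
print(f"4K raw:    {raw_4k / 1e9:.2f} GB/s, ratio {raw_4k / stream_4k:.0f}:1")
```

With those assumptions, the raw rates come out at about 187MB/s and 1.87GB/s, which puts the overall compression at roughly 300 to 1 for 1080p and around 750 to 1 for 4K HDR.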

Anyway, to decompress the encoded video for viewing, you go through the compression steps in reverse order. All modern GPUs, including the integrated graphics in Intel's CPUs and its Arc graphics cards, have dedicated media engines with fixed-function hardware for each of the five compression steps, allowing them to get the job done on the fly while using remarkably little power.

When you dig down into the details, video compression definitely rates as a lesser-sung but absolutely stunning technical achievement.

Jeremy has been writing about technology and PCs since the 90nm Netburst era (Google it!) and enjoys nothing more than a serious dissertation on the finer points of monitor input lag and overshoot followed by a forensic examination of advanced lithography. Or maybe he just likes machines that go “ping!” He also has a thing for tennis and cars.
