Do RAM speed and capacity matter for gaming?

And if so, how much?

RAM manufacturers make a great deal out of the importance of memory speed and capacity for performance, as you'd expect—it's one of the limited number of factors by which they can distinguish themselves from the competition, and it's core to selling their product and ensuring there's a consistent market for upgrades. RAM is core not just to the system as a whole, but even to components like the best graphics cards. But how much do speed and capacity actually matter for gaming?

Before we dive deep into benchmarks and conclusions, let me make a few things clear up front. Speed in this instance refers specifically to frequency, the rated data rate that RAM kits advertise in MHz, and not timings/latency (the four numbers that determine the delay in the RAM's internal functions, like accessing specific rows and columns of data). Frequency is usually listed immediately after the DDR version: DDR4-3200 is DDR4 memory rated for 3200 million transfers per second, which is what manufacturers market as 3200MHz.
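To put those ratings in context, here is a rough sketch of the theoretical peak bandwidth each one implies. It assumes the number after the DDR version is the effective transfer rate in millions of transfers per second and that each memory channel is 64 bits wide, which is standard for DDR4. The function name and parameters are illustrative, and real-world throughput will be lower than these ceilings.

```python
def peak_bandwidth_mb_s(transfer_rate_mt_s: int,
                        bus_width_bits: int = 64,
                        channels: int = 1) -> int:
    """Theoretical peak bandwidth in MB/s for a DDR memory configuration.

    transfer_rate_mt_s: the number in the DDR rating (3200 for DDR4-3200).
    bus_width_bits:     width of one memory channel (assumed 64 bits).
    channels:           number of populated channels (2 for dual channel).
    """
    bytes_per_transfer = bus_width_bits // 8
    return transfer_rate_mt_s * bytes_per_transfer * channels


# The four speeds tested in this article, per channel and in dual channel.
for rating in (2666, 3000, 3200, 3600):
    single = peak_bandwidth_mb_s(rating)
    dual = peak_bandwidth_mb_s(rating, channels=2)
    print(f"DDR4-{rating}: {single} MB/s per channel, {dual} MB/s dual channel")
```

By this arithmetic DDR4-3200 tops out at 25,600 MB/s per channel, which is where the PC4-25600 label on some modules comes from.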

I tested some of the best RAM for gaming packages in two pretty high end, custom machines, one packing a Core i7-9700K CPU and one loaded with a Ryzen 7 2700X, both sporting a GeForce RTX 2080 Ti and speedy, identical PCIe SSDs. I wanted to eliminate any hardware bottlenecks and see how the RAM performed under close-to-ideal conditions in both an AMD and an Intel test bed, which leads to two crucial observations.

The first is that if your system is being hamstrung by slower hardware somewhere else, swapping in more or faster RAM won't yield much more performance. Your RAM isn't going to affect your processor's speed or the transfer rate of your storage drives.

The one exception to this maxim is the second, slightly contrary point I want to raise. For rendering graphics, VRAM does a lot of the grunt work, which means that if you're working with an older GPU with a limited stash of VRAM, you're likely to see much more significant improvements in performance by increasing the amount of RAM available to your system. Any time an application can utilize RAM in lieu of virtual memory on a hard drive you'll see improved performance, particularly in the consistency of frame times (swapping between RAM and disk storage can result in noticeable microstuttering).

With all that in mind, let's jump into the testing.

Testing methodology

I tested RAM packages against three modern triple-A titles with built-in benchmarks: Shadow of the Tomb Raider, Total War: Warhammer 2, and Metro Exodus. I ran the benchmarks at 1440p Ultra settings in each game five times and then averaged the results, as well as calculating 97th percentile results for each game and package. Because our focus here was performance in games, I didn't test other applications or lean on additional synthetic testing.
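For readers who want to reproduce this kind of summary from their own benchmark logs, here is a minimal sketch. The article does not spell out how the percentile figure was derived, so this assumes one common convention: take the 97th percentile of per-frame render times (nearest-rank method) and convert it to frames per second, giving the frame rate that 97 percent of frames met or beat. The function names and sample numbers are hypothetical and are not data from these tests.

```python
from statistics import mean


def average_fps(run_averages):
    """Average the per-run fps figures from repeated benchmark passes."""
    return mean(run_averages)


def percentile_fps(frame_times_ms, pct=97):
    """Frame rate that `pct` percent of frames met or exceeded.

    Uses the nearest-rank percentile of frame times (in milliseconds),
    then converts that frame time to frames per second.
    """
    ordered = sorted(frame_times_ms)
    # Integer ceiling of pct/100 * n, avoiding floating-point rounding.
    rank = max(1, (pct * len(ordered) + 99) // 100)
    slow_frame_ms = ordered[rank - 1]
    return 1000.0 / slow_frame_ms


# Hypothetical example: five benchmark passes and one pass's frame times.
runs = [60, 62, 61, 59, 63]
frames = [10.0] * 97 + [20.0] * 3  # mostly 10 ms frames, a few 20 ms hitches

print(f"Average over {len(runs)} runs: {average_fps(runs)} fps")
print(f"97th percentile: {percentile_fps(frames):.1f} fps")
```

The percentile figure is useful alongside the average because it captures how bad the slow frames get, which an average alone hides.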

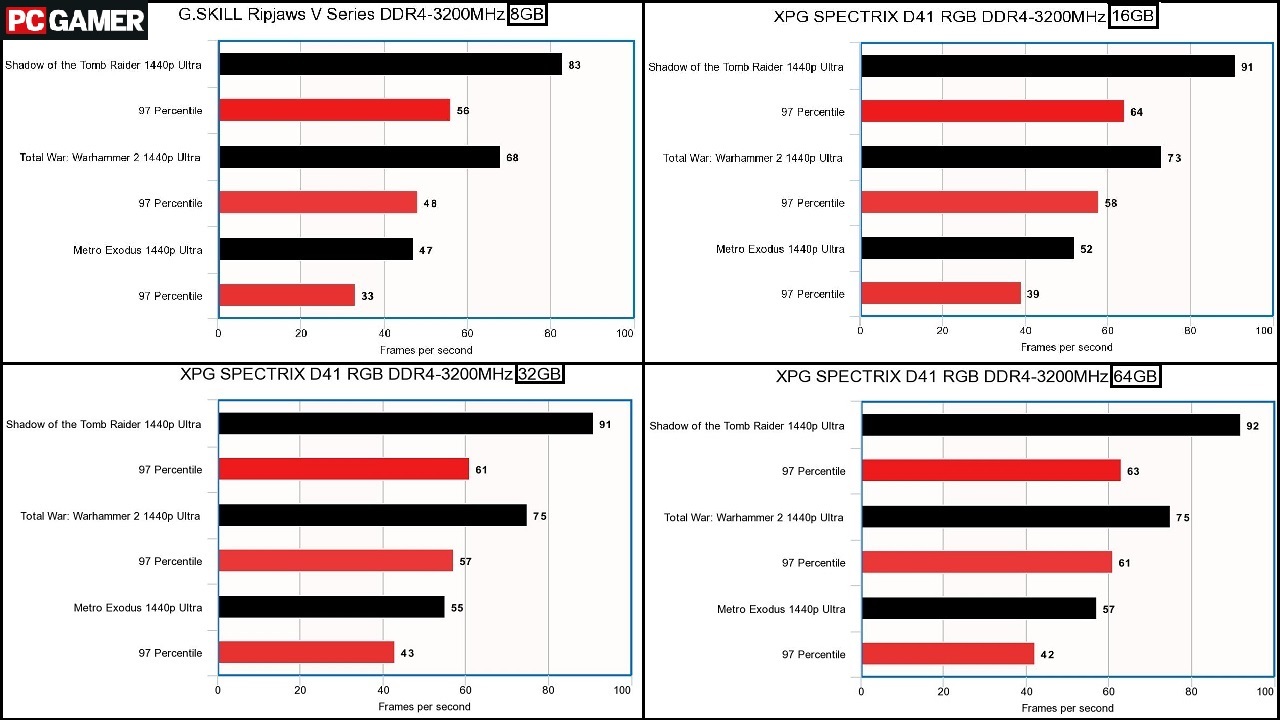

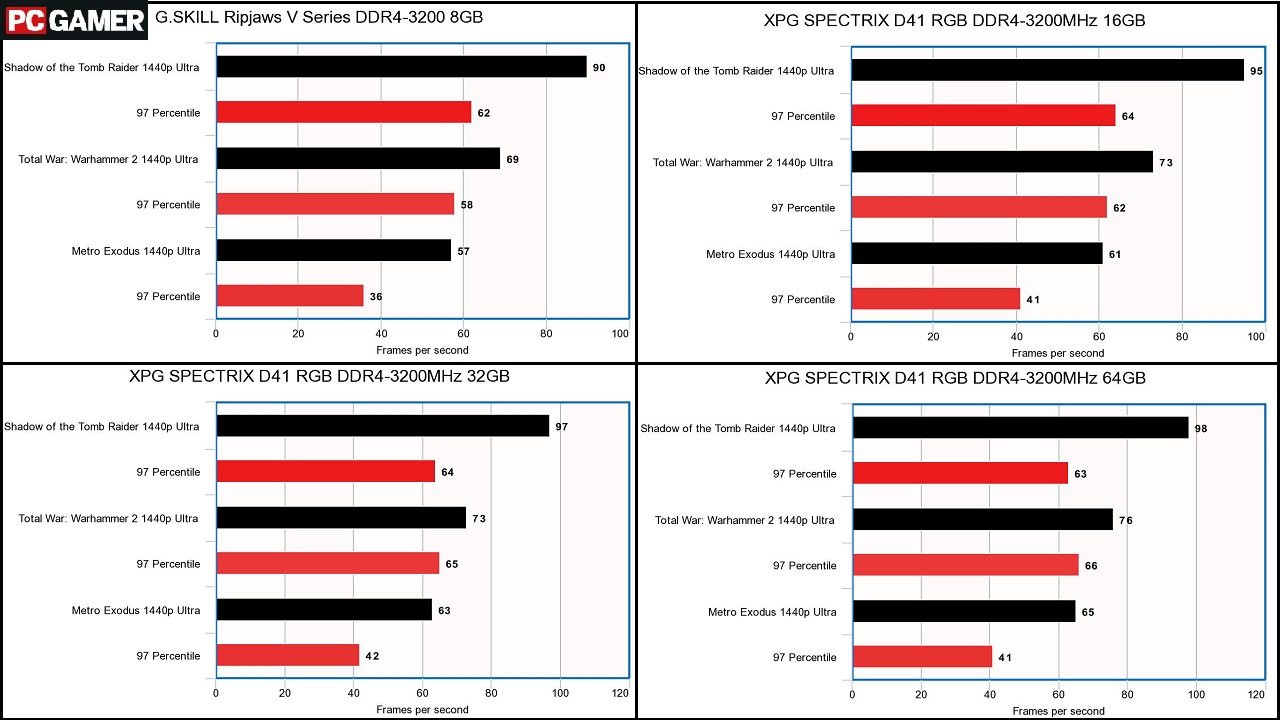

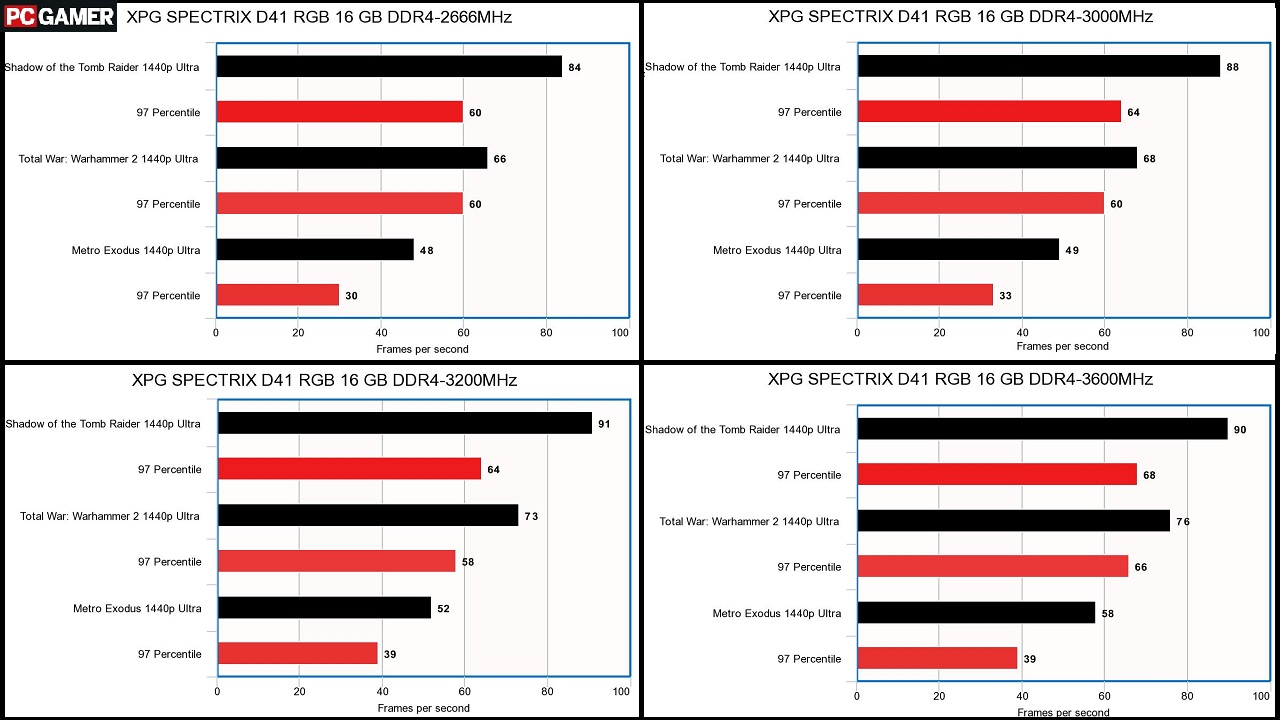

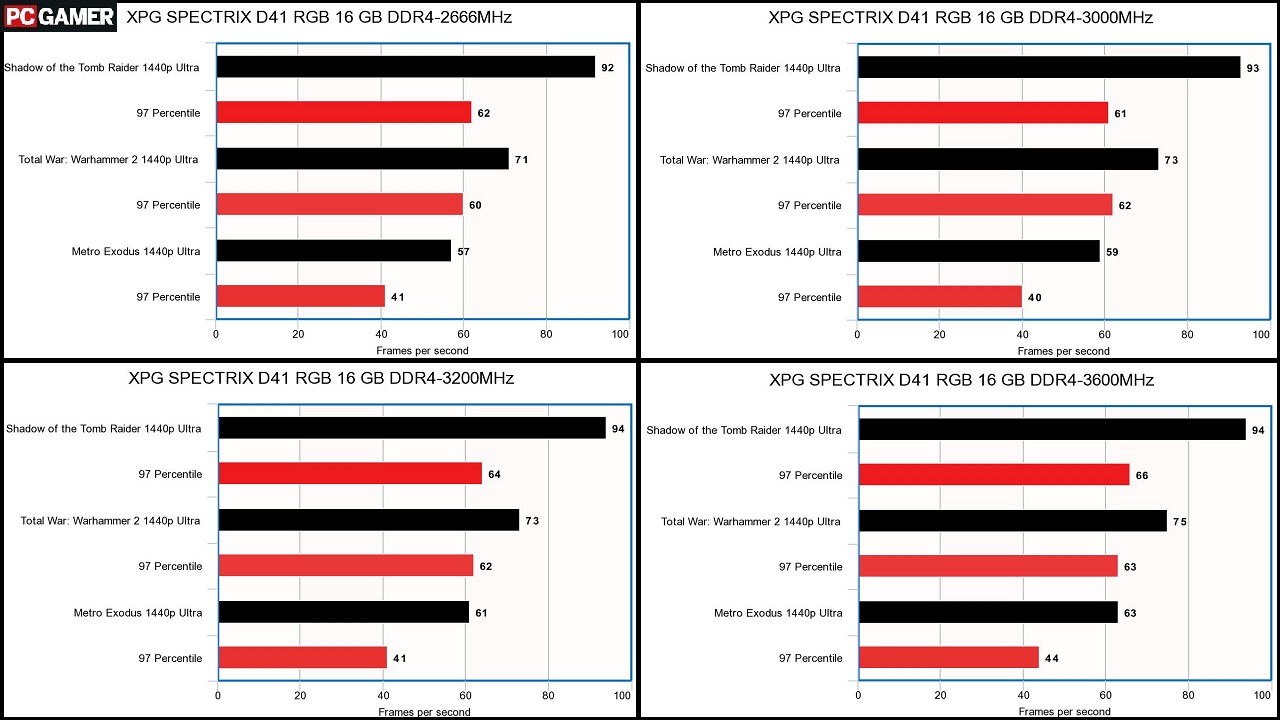

For each system, I tested four capacity thresholds—8GB, 16GB, 32GB, and to ensure we were testing at a capacity ceiling beyond which we'd only see plateaued results, 64GB. Unfortunately, I didn't have an 8GB stick that matched the XPG Spectrix package I used for the other capacity tests, but I did ensure the G.Skill RAM I substituted had identical voltages, timings, and frequency to get as close as possible. I then tested the Spectrix RAM at four different frequencies in both systems—2666MHz, 3000MHz, 3200MHz, and 3600MHz.

Capacity results

Our capacity results showed a pretty steady, fairly obvious trend. In 2019, 8GB is not enough RAM for a lot of triple-A titles, even with a GPU loaded with VRAM doing a lot of the heavy lifting. While the results here certainly showcase very playable frame rates, especially in Shadow of the Tomb Raider and Total War: Warhammer 2, in lesser systems those numbers would dip into uncomfortable ranges. Between 8GB and 16GB capacity in the AMD system we saw a 9 percent performance increase in Shadow of the Tomb Raider, 14 percent in Total War, and 10 percent in Metro, while the Intel machine showed improvements of 5 percent in Shadow of the Tomb Raider and Total War, and 7 percent in Metro. Significant, if not mind-blowing, and those performance gains were also reflected in the 97th percentile scores.

On the other hand, performance increases from 16GB to 32GB, all the way up to 64GB, were fairly flat. Any improvements fell into the margin of error except in the case of Metro Exodus, which yielded a 5 fps or 9 percent improvement from 16GB to 64GB in the Ryzen 7 machine and 4 fps or 6 percent in the Intel rig.
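The percentages quoted in these results are relative gains over the lower-spec configuration. As a small worked sketch (helper names are illustrative), the same arithmetic can be run backwards to estimate the baseline frame rate implied by an "X fps or Y percent" figure. Because the published numbers are rounded, the implied baselines are only approximate.

```python
def percent_gain(before_fps: float, after_fps: float) -> float:
    """Relative improvement of after_fps over before_fps, in percent."""
    return (after_fps - before_fps) / before_fps * 100.0


def implied_baseline(gain_fps: float, gain_percent: float) -> float:
    """Baseline fps implied by an absolute gain and its percentage."""
    return gain_fps / (gain_percent / 100.0)


# Metro Exodus, 16GB to 64GB, using the rounded figures quoted above.
amd_base = implied_baseline(5, 9)    # Ryzen 7 machine: 5 fps, 9 percent
intel_base = implied_baseline(4, 6)  # Intel rig: 4 fps, 6 percent

print(f"Implied 16GB baseline, AMD:   about {amd_base:.0f} fps")
print(f"Implied 16GB baseline, Intel: about {intel_base:.0f} fps")
```

With the rounded inputs this lands at roughly 56 fps for the AMD system and 67 fps for the Intel one, which shows why a similar absolute gain reads as a larger percentage on the machine with the lower starting frame rate.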

Frequency results

Frequency results varied more significantly between the two systems. On the AMD side, we saw substantive frame rate improvements moving from 2666MHz up to 3600MHz; a 7 percent total improvement in Shadow of the Tomb Raider from the slowest to fastest with a steady increase across frequencies, 15 percent in Total War, and a very solid 20 percent in Metro where every frame is crucial.

Tests in the Intel machine yielded much less dramatic results. Climbing from the lowest frequency to the highest only yielded a 2 percent fps improvement in Shadow of the Tomb Raider, well inside the margin of error, 5 percent in Total War, and 10 percent in Metro.

Conclusions

All of this testing revealed some definite trends, some obvious, some quite interesting. We've definitely hit a point where 8GB of RAM is presenting a performance bottleneck, even in a system with a GPU equipped with a spacious 11GB of VRAM. 16GB is still enough in this scenario, but with system memory allocation running up to 12 or 13GB in some triple-A titles that may not be the case for very long. To future-proof your machine, pushing up to 32GB of RAM is looking more and more reasonable, particularly with memory prices dipping. And if you're looking for an inexpensive way to boost performance in a rig with an aging GPU, adding a couple more RAM sticks is a reasonable stopgap.

There's also a significant difference in results between the AMD and Intel test beds. According to our tests, a Ryzen 7 2700X equipped system benefits significantly more from faster RAM than one with a Core i7-9700K. While I don't have enough data to state concretely that AMD systems broadly benefit more from speedier RAM (something I may explore in a future feature), that is certainly the case in this particular processor comparison. And I need to note that I can't rule out the choice of motherboard, as BIOS and firmware could also be impacting the results.

On the whole, do RAM speed and capacity really matter for gaming? The answer is a qualified yes. Capacity will really only have a significant impact when upgrading from 8GB or less, and speed will yield a modest bump in performance in specific instances, but never anything approaching the increases you're likely to see upgrading performance parts like the CPU or GPU.

My general advice when buying RAM, building a PC, or shopping prebuilt with the best gaming PCs is to aim for at least 16GB with room to upgrade, and to buy the fastest RAM that fits into your budget without splurging on top speeds if the price gap is too wide. Generally, if you're paying much more than $20 or $30 to step up to the next speed tier in the same RAM package, you're being overcharged.