How Processors Work

An in-depth look into what gives your computer its brain power

When asked how a central processing unit works, you might say it’s the brain of the computer: it does all the math and makes logical decisions based on the results. However, although today’s high-end processors are built from billions of transistors, they’re still made up of basic components and founded on a few core ideas. Here, we'll go over what goes on in most processors and the foundations they're built on.

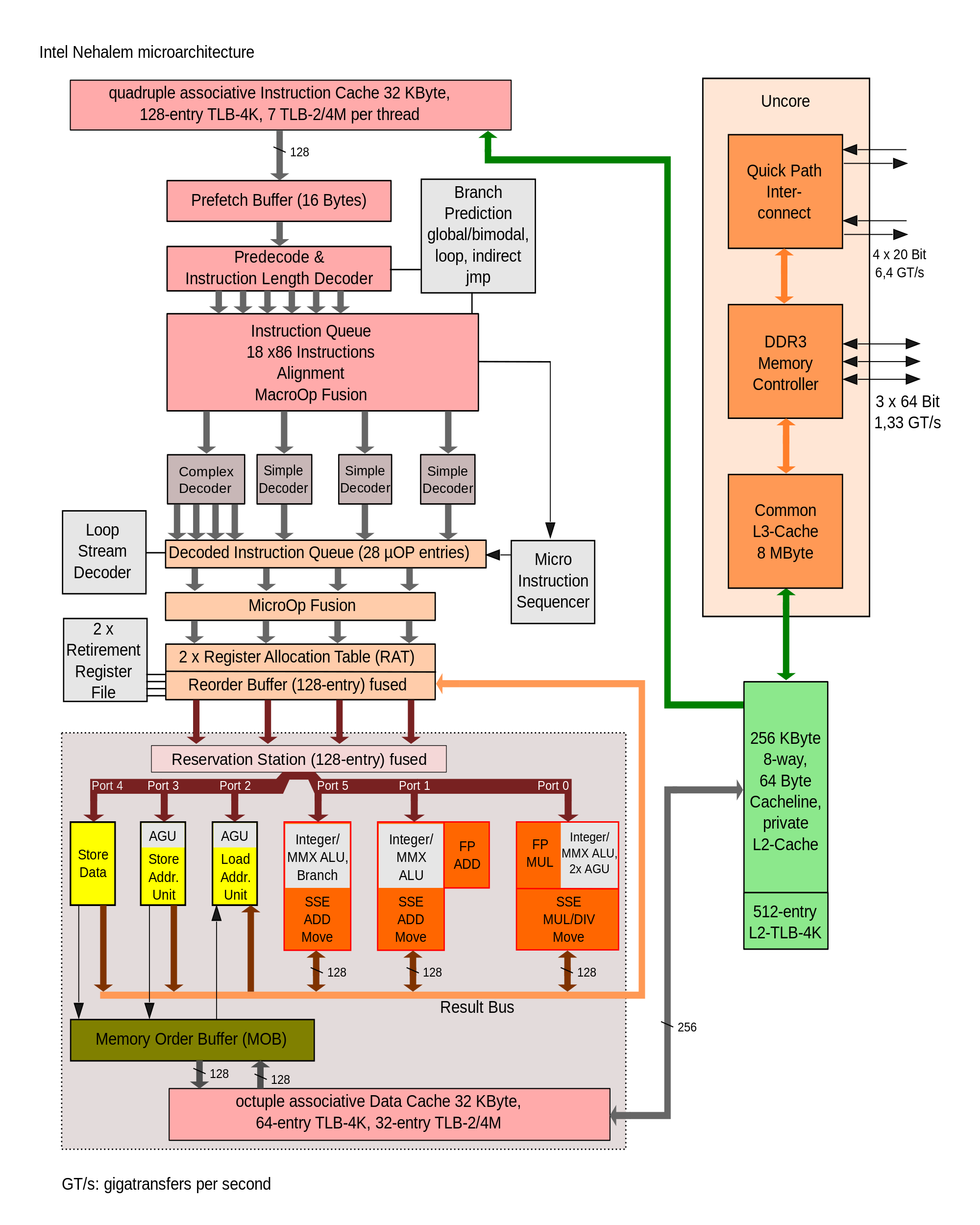

This graphic is a block diagram of Intel’s Nehalem architecture that we can use to get an overview. While we won’t be going over this particular design (some of it is specific to Intel’s processors), what we’ll cover does explain most of what’s going on.

The Hard Stuff: Components of a Processor

Most modern processors contain the following components:

- A memory management unit, which handles memory address translation and access

- An instruction fetcher, which grabs instructions from memory

- An instruction decoder, which turns instructions from memory into commands that the processor understands

- Execution units, which perform the operation; at the very least, a processor will have an arithmetic and logic unit (ALU), but a floating point unit (FPU) may be included as well

- Registers, which are small bits of memory to hold important bits of data

The memory management unit, instruction fetcher, and instruction decoder form what is called the front-end. This is a carryover from the old days of computing, when front-end processors would read punch cards and turn the contents into tape reels for the actual computer to work on. Execution units and registers form the back-end.

Memory Management Unit (MMU)

The memory management unit’s primary job is to translate addresses from virtual address space to physical address space. Virtual address space allows the system to make programs believe the entire address space possible is available, even if physically it’s not. For instance, in a 32-bit environment, the system believes it has 4GB of address space, even if only 2GB of RAM is installed. This is to simplify programming since the programmer doesn’t know what kind of system will run the application.

The other job of the memory management unit is access protection. This prevents an application from reading or writing in another application’s memory address without going through the proper channels.
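The two jobs of the MMU can be sketched in a few lines. This is a minimal illustration, not how any particular processor implements it: the 4 KiB page size, the `translate` function, the `AccessViolation` exception, and the dictionary-based page table are all assumptions made for the example.

```python
PAGE_SIZE = 4096  # assumed page size (4 KiB); real systems vary


class AccessViolation(Exception):
    """Raised when a program touches memory it has no mapping or rights for."""


def translate(page_table, virtual_addr, write=False):
    """Translate a virtual address to a physical one.

    page_table is a hypothetical per-program mapping of
    virtual page number -> (physical frame number, writable flag).
    """
    page, offset = divmod(virtual_addr, PAGE_SIZE)
    entry = page_table.get(page)
    if entry is None:
        # Access protection: the page does not belong to this program.
        raise AccessViolation(f"page {page} is not mapped")
    frame, writable = entry
    if write and not writable:
        raise AccessViolation(f"page {page} is read-only")
    return frame * PAGE_SIZE + offset


# Hypothetical program: page 0 -> frame 7 (read/write), page 1 -> frame 2 (read-only)
table = {0: (7, True), 1: (2, False)}
print(hex(translate(table, 0x0010)))  # 0x7010
print(hex(translate(table, 0x1004)))  # 0x2004
```

Each program gets its own table, so the same virtual address can land in different physical locations for different programs, and an address with no entry is simply refused.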

Instruction Fetcher and Decoder

As their names suggest, these units grab instructions and decode them into operations. Notably, in modern x86 designs the decoder turns instructions into micro-operations that the later stages work with. In modern processors, the decoder’s output typically feeds into a control unit, which figures out the best way to execute the instructions. Some of the techniques employed include branch prediction, which tries to figure out what will be executed if a branch is to take place, and out-of-order execution, which rearranges instructions so they’re executed in the most efficient way.
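To make the fetch and decode steps concrete, here is a toy fetch-decode-execute loop. The three-instruction "instruction set" (`LOAD`, `ADD`, `HALT`), the text encoding, and the register names are invented for this sketch and do not correspond to any real processor.

```python
def run(program):
    """Fetch, decode, and execute a toy program; returns the final registers."""
    regs = {"r0": 0, "r1": 0}
    pc = 0  # program counter: index of the next instruction
    while pc < len(program):
        instruction = program[pc]                # fetch
        opcode, *operands = instruction.split()  # decode
        pc += 1
        if opcode == "LOAD":                     # LOAD reg value
            regs[operands[0]] = int(operands[1])
        elif opcode == "ADD":                    # ADD dst src (dst = dst + src)
            regs[operands[0]] += regs[operands[1]]
        elif opcode == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode {opcode!r}")
    return regs


print(run(["LOAD r0 2", "LOAD r1 3", "ADD r0 r1", "HALT"]))
```

A real decoder works on binary encodings rather than text, and in modern x86 designs it emits micro-operations instead of executing directly, but the fetch, decode, execute rhythm is the same.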


Execution Units

The bare minimum a general processor will have is the arithmetic and logic unit (ALU). This execution unit works only with integer values and will do the following operations:

- Add and subtract; in the simplest designs, multiplication is done by repeated addition and division is approximated with repeated subtraction

- Logical operations, such as OR, AND, NOT, and XOR

- Bit shifting, which moves the digits left or right
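The operations listed above are enough to build multiplication and division. The sketch below shows the repeated-addition and repeated-subtraction approach, plus a shift-and-add variant that is a common refinement (it is not something this article describes). All function names are made up for the example, and only non-negative integers are handled.

```python
def multiply_repeated_add(a, b):
    """Multiply non-negative integers by adding a to the result b times."""
    result = 0
    for _ in range(b):
        result += a
    return result


def multiply_shift_add(a, b):
    """Multiply non-negative integers using only shift, AND, and add."""
    result = 0
    while b:
        if b & 1:        # lowest bit of b set: add the current multiple of a
            result += a
        a <<= 1          # shift left: doubles a
        b >>= 1          # shift right: move to the next bit of b
    return result


def divide_repeated_sub(dividend, divisor):
    """Integer division by repeated subtraction; returns (quotient, remainder)."""
    if divisor <= 0:
        raise ValueError("divisor must be positive")
    quotient = 0
    while dividend >= divisor:
        dividend -= divisor
        quotient += 1
    return quotient, dividend


print(multiply_repeated_add(6, 7), multiply_shift_add(6, 7))  # 42 42
print(divide_repeated_sub(17, 5))                             # (3, 2)
```

The shift-and-add version needs one pass per bit of `b` rather than `b` passes, which is why bit shifting matters in an ALU.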

A lot of processors also include a floating point unit (FPU). This allows the processor to work with non-whole numbers over a greater range and at higher precision. Since FPUs are complex, often complex enough to be a processor in their own right, they are frequently left out of smaller low-power processors.

Registers

Registers are small bits of memory that hold immediately relevant data. There’s usually only a handful of them and they can hold data equal to the bit-size the processor was made for. So a 32-bit processor usually has 32-bit registers.

The most common registers are: one that holds the result of an operation, a program counter (this points to where the next instruction is), and a status word or condition code (which dictates the flow of a program). Some architectures have specialized registers to aid in operations. The Intel 8086, for example, has the Segment and Offset registers. These would be used to figure out address spaces in the 8086’s memory-mapping architecture.
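As an illustration of the 8086 case, a 16-bit segment value and a 16-bit offset combine into a 20-bit physical address: the segment is shifted left by four bits (multiplied by 16) and the offset is added. The function name below is made up for this sketch.

```python
def physical_address(segment, offset):
    """Combine 16-bit segment and offset values into a 20-bit 8086 address."""
    # Shifting left by 4 multiplies the segment by 16; the mask keeps the
    # result within the 8086's 20 address lines, so it wraps past 1 MB.
    return ((segment << 4) + offset) & 0xFFFFF


print(hex(physical_address(0x1234, 0x0010)))  # 0x12350
```

One consequence is that many different segment and offset pairs refer to the same physical location.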

A Note about Bits

The bit count of a processor usually refers to the largest data size it can handle at once. It mostly applies to the execution unit. However, this does not mean that a processor is limited to processing data of that size. An eight-bit processor can still process 16-bit and 32-bit numbers, but it takes at least two and four operations, respectively, to do so.
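Here is a rough sketch of how an eight-bit processor could add two 16-bit numbers in two steps, passing the carry from the low byte to the high byte. The helper names `add8` and `add16_on_8bit` are invented for the example.

```python
def add8(a, b, carry_in=0):
    """Add two 8-bit values; returns (8-bit result, carry out)."""
    total = a + b + carry_in
    return total & 0xFF, total >> 8


def add16_on_8bit(x, y):
    """Add two 16-bit values using two 8-bit additions."""
    low, carry = add8(x & 0xFF, y & 0xFF)      # operation 1: low bytes
    high, carry = add8(x >> 8, y >> 8, carry)  # operation 2: high bytes + carry
    return (high << 8) | low, carry


print(add16_on_8bit(0x12FF, 0x0001))  # (4864, 0), and 4864 == 0x1300
```

The carry flag in the status register is what makes this chaining possible on real hardware.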

The Soft Stuff: Ideas and Designs in Processors

Over the years of computer design, more and more ideas and designs were realized. These were developed with the goal of making the processor more efficient at what it does, increasing its instructions per clock cycle (IPC) count.

Instruction Set Design

Instruction sets map numerical indexes to commands in a processor. These commands can be something as simple as adding two numbers or as complex as the SSE instruction RSQRTPS (as described in a help file: Compute Reciprocals of Square Roots of Packed Single-Precision Floating-Point Values).

In the early days of computers, memory was very slow and there wasn’t a whole lot of it, and processors were becoming faster and programs more complex. To save both on memory access and program size, instruction sets were designed with the following ideas:

- Variable-length instructions, so that simpler operations could take up less space

- Perform a wide variety of memory-addressing commands

- Operations can be performed on memory locations themselves, in addition to using registers, or as part of the instruction

As memory performance progressed, computer scientists found that it was faster to break down the complex operations into simpler ones. Instructions also could be simplified to speed up the decoding process. This sparked the Reduced Instruction Set Computing (RISC) design idea. Reduced in this case means the time to complete an instruction is reduced. The old way was retroactively named Complex Instruction Set Computing (CISC). To summarize the ideas of RISC:

- Uniform instruction length, to simplify decoding

- Fewer and simple memory addressing commands

- Operations can only be performed on data in registers or as part of the instruction

There have been other attempts at instruction set design. One of them is the Very Long Instruction Word (VLIW). VLIW crams multiple independent instructions into a single unit to be run on multiple execution units. One of the biggest stumbling blocks is that it requires the compiler to sort instructions ahead of time to make the most of the hardware, and most general purpose programs don’t sort themselves out very well. VLIW has been used in Intel’s Itanium, Transmeta’s Crusoe, MCST’s Elbrus, AMD’s TeraScale, and NVIDIA’s Project Denver (sort of; it has similar characteristics).

Multitasking

Early on, a computer could do only one thing at a time, and once a program got going, it would run until completion or until there was a problem with the program. As systems became more powerful, an idea called "time sharing" was spawned. Time sharing would have the system work on one program and if something blocked it from continuing, such as waiting for a peripheral to be ready, the system saved the state of the program in memory, then moved on to another program. Eventually, it would come back to the blocked program and see if it had what it needed to run.

Time sharing exposed a problem: A program could unfairly hog the system, either because the program really had a long execution time or because it hung somewhere. So the next systems were built such that they would work on programs in slices of time. That is, every program gets to run for a certain amount of time and after the time slice is up, it moves on to another program automatically. If the time slices are small enough, this gives the impression that the computer is doing multiple things at once.
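Time slicing can be imitated with a simple round-robin loop. In this sketch each "program" is a Python generator that does one unit of work per step, and the loop counter stands in for the hardware timer that would cut a real program off. The names `program` and `round_robin` are made up for the example.

```python
from collections import deque


def program(name, steps):
    """A toy program needing `steps` units of work; pauses after each unit."""
    for i in range(steps):
        yield f"{name}:{i}"


def round_robin(programs, time_slice):
    """Run programs in turn, at most `time_slice` units each; returns the trace."""
    queue = deque(programs)
    trace = []
    while queue:
        current = queue.popleft()
        for _ in range(time_slice):
            try:
                trace.append(next(current))
            except StopIteration:
                break                   # program finished: drop it
        else:
            queue.append(current)       # slice used up: back of the line
    return trace


print(round_robin([program("A", 3), program("B", 1)], time_slice=2))
# ['A:0', 'A:1', 'B:0', 'A:2']
```

Program A cannot hog the system: after two units it is set aside so B gets its turn, and A resumes exactly where it left off.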

One important feature that really helped multitasking is the interrupt system. With this, the processor doesn’t need to constantly poll programs or devices if they have something ready; the program or device can generate a signal to tell the processor it’s ready.

Caching

Cache is memory in the processor that, while small in size, is much faster to access than RAM. The idea of caching is that commonly used data and instructions are stored in it and tagged with their address in memory. The MMU will first look in cache to see if what it’s looking for is in it. The more often a piece of data is accessed, the more likely it is to be served from cache, so its average access time approaches cache speed, offering a boost in execution speed.

Normally, data can only reside in one spot in cache. A method to increase the chance of data being in cache is known as associativity. A two-way associative cache means data can be in two places, four-way means it can be in four, and so on. While it may make sense to allow data to just be anywhere in cache, this also increases the lookup time, which may negate the benefit of caching.
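The effect of associativity can be seen in a small simulation. This sketch makes several assumptions not stated in the article: addresses are treated as whole cache-line numbers, the set is chosen with a simple modulo, and the least recently used entry is evicted when a set is full. The `Cache` class and `hit_count` helper are hypothetical.

```python
from collections import OrderedDict


class Cache:
    """Toy set-associative cache; ways=1 gives a direct-mapped cache."""

    def __init__(self, num_sets, ways):
        self.num_sets = num_sets
        self.ways = ways
        self.sets = [OrderedDict() for _ in range(num_sets)]
        self.hits = 0
        self.misses = 0

    def access(self, address):
        index = address % self.num_sets   # which set the address maps to
        tag = address // self.num_sets    # identifies the address within the set
        lines = self.sets[index]
        if tag in lines:
            lines.move_to_end(tag)        # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        if len(lines) >= self.ways:
            lines.popitem(last=False)     # evict the least recently used line
        lines[tag] = True
        return False


def hit_count(num_sets, ways, addresses):
    cache = Cache(num_sets, ways)
    for address in addresses:
        cache.access(address)
    return cache.hits


pattern = [0, 8, 0, 8, 0, 8]              # two addresses that collide
print(hit_count(8, 1, pattern))           # direct-mapped, 8 lines: 0 hits
print(hit_count(4, 2, pattern))           # two-way, same 8 lines: 4 hits
```

Both caches hold eight lines in total, but in the direct-mapped one the two addresses keep evicting each other, while the two-way cache has room for both in the same set.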

Pipelining

Pipelining is a way for a processor to increase its instruction throughput by way of mimicking how assembly lines work. Consider the steps to executing an instruction:

- Fetch instruction (IF)

- Decode instruction (ID)

- Execute instruction (EX)

- Access memory (MEM)

- Write results back (WB)

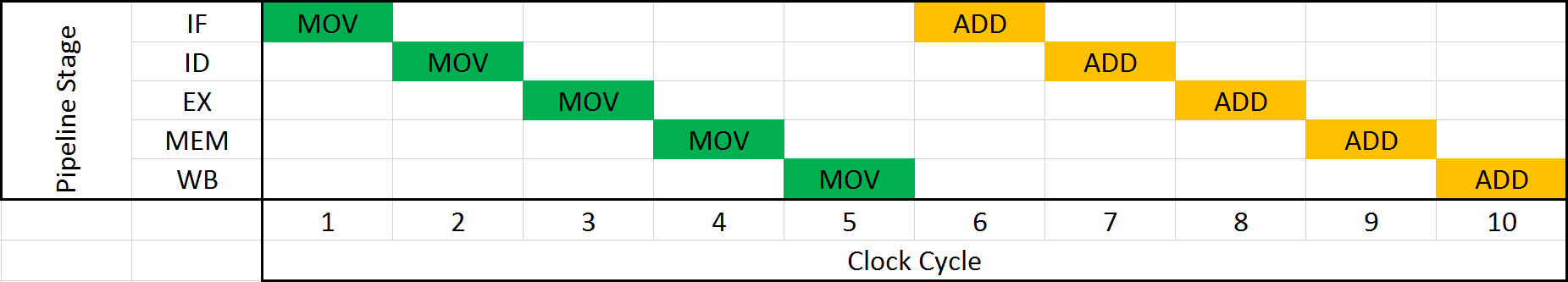

Early computers would process each instruction completely through these steps before processing the next instruction, as seen here:

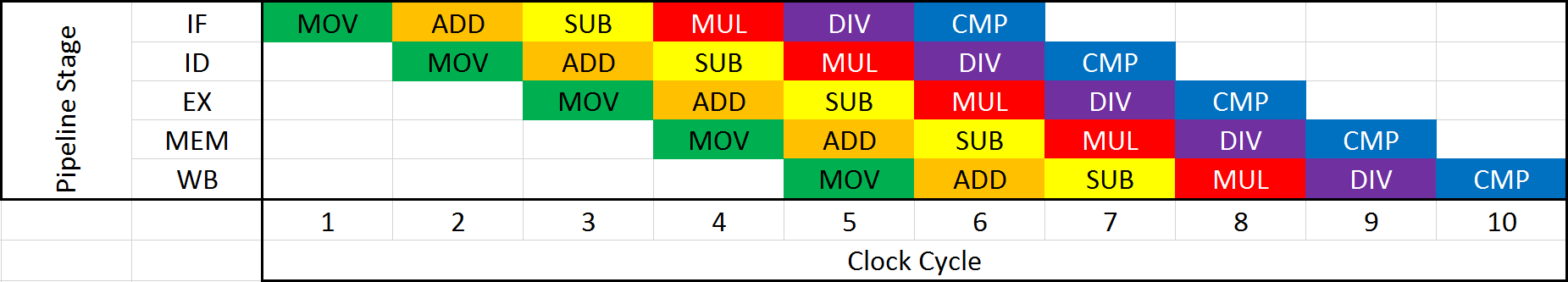

In 10 clock cycles, the processor is completely finished with two instructions. Pipelining allows the next instruction to start once the current one is done with a step. The following diagram shows pipelining in action:

In the same 10 clock cycles, six instructions are fully processed, increasing the throughput threefold.
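The counts above follow from simple arithmetic, sketched here under the assumption that every stage takes exactly one clock cycle and nothing ever stalls. The function names are invented for the example.

```python
STAGES = ["IF", "ID", "EX", "MEM", "WB"]


def completed_without_pipelining(cycles, stages=len(STAGES)):
    """Each instruction occupies the processor for all of its stages."""
    return cycles // stages


def completed_with_pipelining(cycles, stages=len(STAGES)):
    """The first instruction finishes after `stages` cycles, then one per cycle."""
    return max(0, cycles - stages + 1)


def pipeline_diagram(instructions):
    """One row per instruction, one column per clock cycle."""
    row = "".join(stage.ljust(4) for stage in STAGES)
    return [(" " * 4 * i + row).rstrip() for i in range(instructions)]


print(completed_without_pipelining(10))  # 2
print(completed_with_pipelining(10))     # 6
print("\n".join(pipeline_diagram(3)))
```

The printed diagram shows each instruction starting one cycle after the previous one, so every stage of the processor is busy once the pipeline is full.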

Branch Prediction

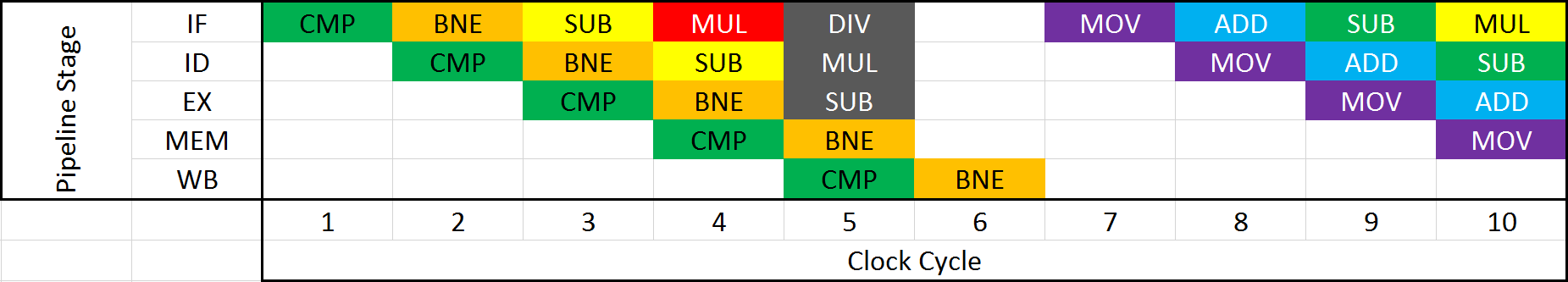

The major issue with pipelining is that if any branching has to be done, then instructions that were being processed in earlier stages have to be discarded since they are no longer going to be executed. Let’s take a look at a situation where this happens.

The instruction CMP is a compare instruction, e.g., does x = y? This records the result in a flag in the processor. Instruction BNE is “branch if not equal,” which checks this flag. If x is not equal to y, then the processor jumps to another location in the program. The following instructions (SUB, MUL, and DIV) have to be discarded because they’re no longer going to be executed. This creates a five-clock-cycle gap before the next instruction gets processed.

The aim of branch prediction is to make a guess at which instructions are going to be executed. There are several algorithms to achieve this, but the overall goal is to minimize the number of times the pipeline has to clear because a branch took place.
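One well-known prediction scheme, chosen here purely as an illustration and not described in this article, is the two-bit saturating counter: the predictor only changes its guess after being wrong twice in a row. The class and function names are made up for the sketch.

```python
class TwoBitPredictor:
    """Counter values 0-1 predict 'not taken'; values 2-3 predict 'taken'."""

    def __init__(self):
        self.counter = 1  # assumed starting state: weakly 'not taken'

    def predict(self):
        return self.counter >= 2

    def update(self, taken):
        # Move one step toward the actual outcome, saturating at 0 and 3.
        if taken:
            self.counter = min(3, self.counter + 1)
        else:
            self.counter = max(0, self.counter - 1)


def mispredictions(outcomes):
    """Count wrong guesses over a sequence of actual branch outcomes."""
    predictor = TwoBitPredictor()
    wrong = 0
    for taken in outcomes:
        if predictor.predict() != taken:
            wrong += 1
        predictor.update(taken)
    return wrong


# A loop branch that is taken nine times, then falls through once.
print(mispredictions([True] * 9 + [False]))  # 2
```

Only the first and last iterations are guessed wrong, so the pipeline is cleared twice instead of on every trip around the loop.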

Out-of-Order Execution

Out-of-order execution is a way for the processor to reorder instructions for efficient execution. Take, for example, a program that does this:

- x = 1

- y = 2

- z = x + 3

- foo = z + y

- bar = 42

- print “hello world!”

Let’s say the execution unit can handle two instructions at once. These instructions are then executed in the following way:

- x = 1, y = 2

- z = x + 3

- foo = z + y

- bar = 42, print “hello world!”

Since the value of “foo” depends on “z,” those two instructions can’t execute at the same time. However, by reordering the instructions:

- x = 1, y = 2

- z = x + 3, bar = 42

- foo = z + y, print “hello world!”

Thus an extra cycle can be avoided. However, implementing out-of-order execution is complex and the application still expects the instructions to be processed in the original order. This has normally kept out-of-order execution off processors for mobile and small electronics because the additional power consumption outweighs its performance benefits, but recent ARM-based mobile processors are incorporating it because the opposite is now true.
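The reordering above can be reproduced with a small scheduler. This is a simplified sketch: each instruction is described only by the value it produces and the values it needs, results become available the cycle after they are issued, and hazards other than "needs an earlier result" are ignored. The `schedule` function and the `PROGRAM` encoding are assumptions for the example.

```python
# (value produced, values needed), in original program order
PROGRAM = [
    ("x", set()),
    ("y", set()),
    ("z", {"x"}),
    ("foo", {"z", "y"}),
    ("bar", set()),
    ("print", set()),
]


def schedule(program, width=2, out_of_order=False):
    """Return a list of cycles, each listing the instructions issued in it."""
    pending = list(program)
    done = set()       # values produced in earlier cycles
    cycles = []
    while pending:
        issued = []
        for instr in pending:
            if len(issued) == width:
                break
            _, sources = instr
            if sources <= done:
                issued.append(instr)
            elif not out_of_order:
                break  # in-order: stall at the first instruction not ready
        if not issued:
            raise ValueError("an instruction depends on a value never produced")
        for instr in issued:
            pending.remove(instr)
        done.update(dest for dest, _ in issued)
        cycles.append([dest for dest, _ in issued])
    return cycles


print(len(schedule(PROGRAM)))               # 4 cycles in order
print(schedule(PROGRAM, out_of_order=True))
# [['x', 'y'], ['z', 'bar'], ['foo', 'print']]
```

The in-order run of this model pairs the later instructions slightly differently from the first listing above, but it still needs four cycles, while the out-of-order run matches the reordered listing and finishes in three.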

A Complex Machine Made Up of Simple Pieces

When looked at from a pure hardware perspective, a processor can seem pretty daunting. In reality, those billions of transistors that modern processors carry today can still be broken down into simple pieces or ideas that lay the foundation of how processors work. If reading this article leaves you with more questions than answers, a good place to get started learning more is Wikipedia’s index on CPU technologies.