Our Verdict

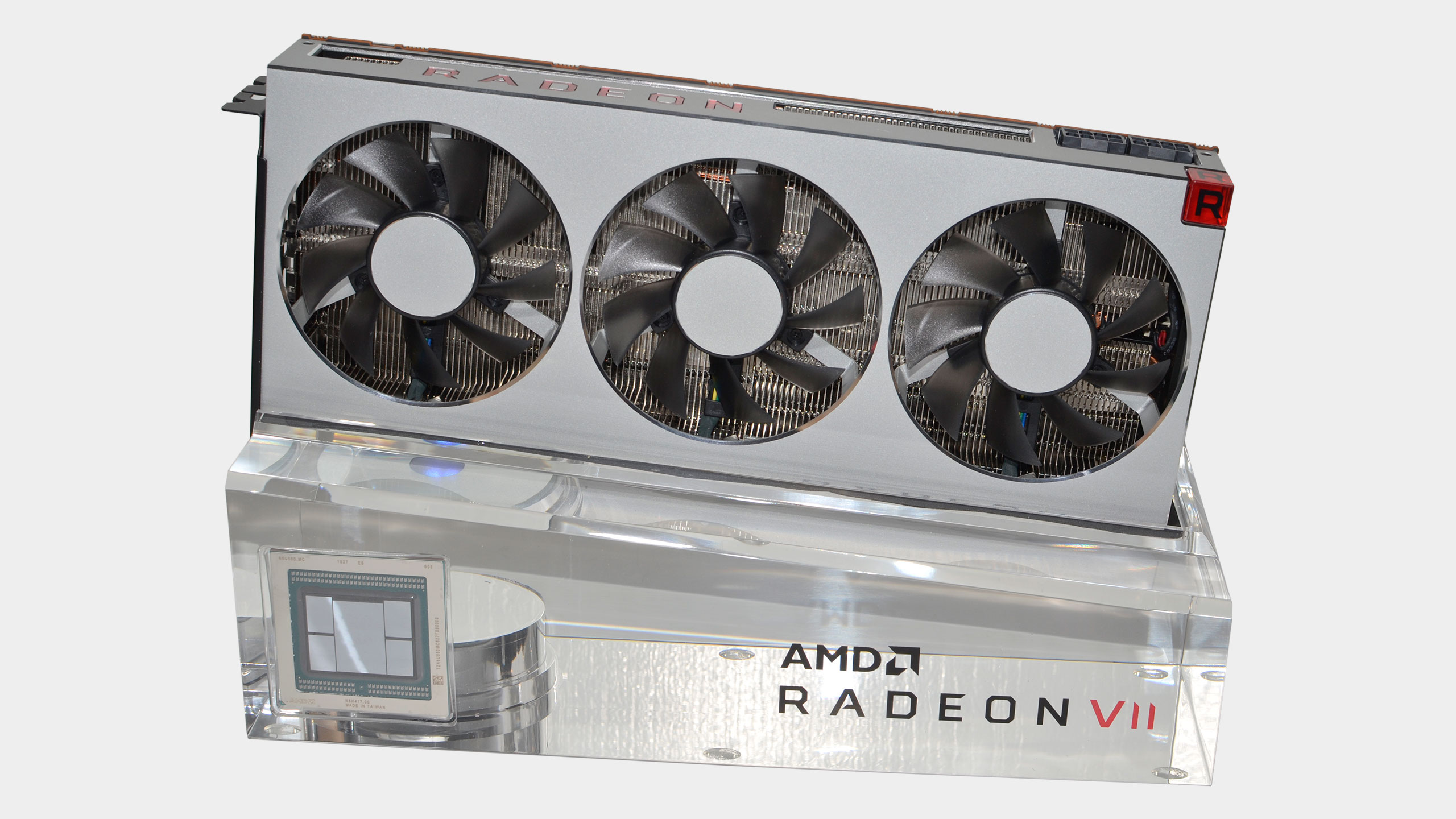

Radeon VII is a modest improvement over Vega helped by a die shrink, but it doesn't overcome architecture limitations or warrant the price… unless you need 16GB VRAM.

For

- First 7nm GPU

- Good 1440p/4k performance

- Lots of VRAM and bandwidth

Against

- Slower than RTX 2080

- Lacking in features

- Worse efficiency

Building a capable gaming PC is relatively simple: you start with the best graphics card you can afford, and combine it with an appropriate CPU, motherboard, and other components. With every generation of GPU normally improving in features, performance, and pricing relative to its predecessor, the launch of any new GPU is cause for celebration. But AMD's Radeon VII is both more and less than other GPU launches.

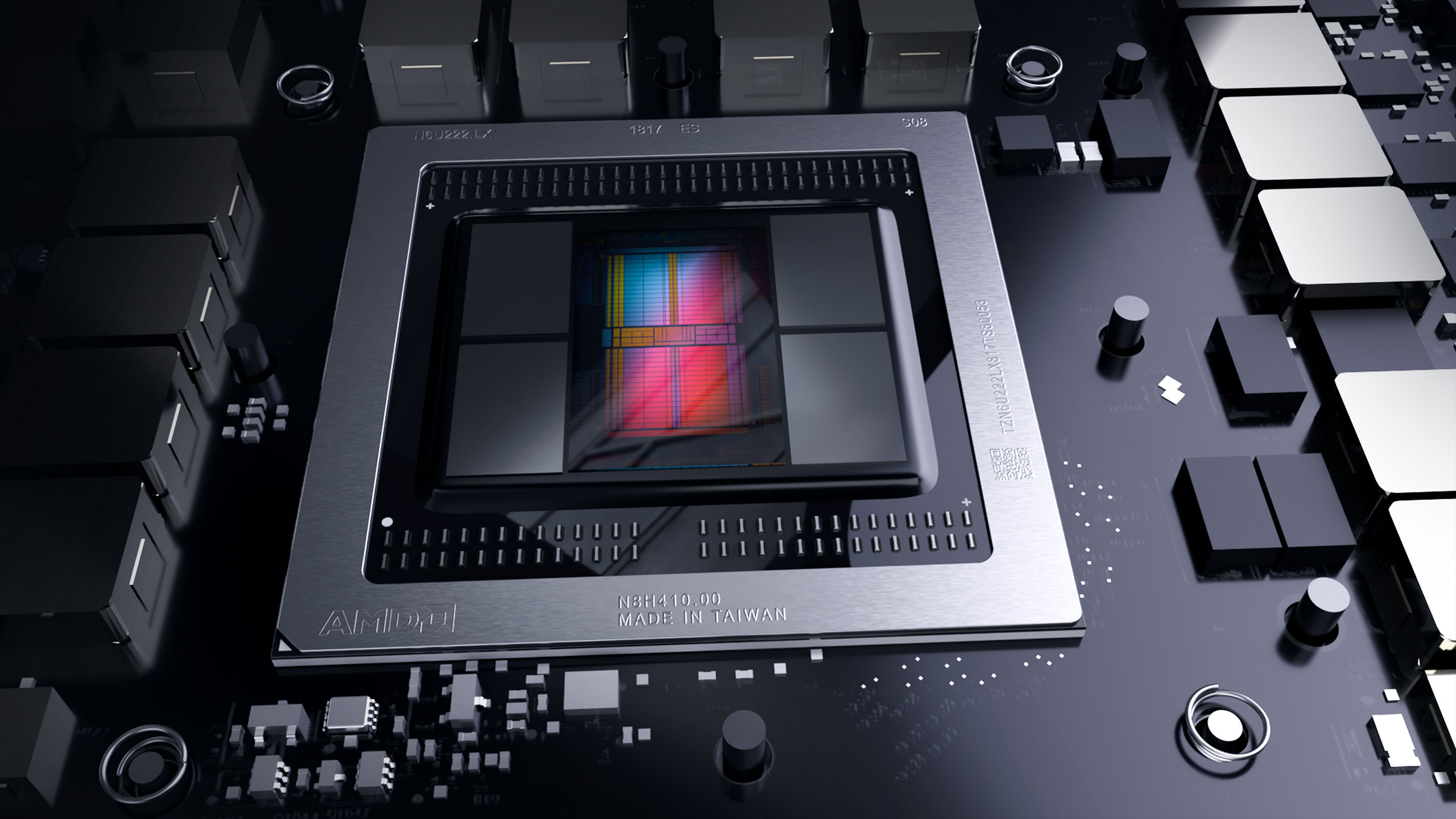

Radeon VII is the first GPU to be manufactured using 7nm lithography, a bold statement from AMD. On the face of things that's a big jump from the previous 14nm technology (and 12nm/16nm as well). However, the numbers used for a manufacturing process often contain a fair amount of marketing. In the Radeon VII's case, it's good progress, but it's not a revolution. And it's not enough to push the Radeon VII ahead of the competition, especially at a steep $700.

Architecture: Vega 20

Lithography: TSMC 7nm FinFET

Transistor Count: 13.2 billion

Die Size: 331mm²

Compute Units: 60

Shader Cores: 3,840

Render Outputs: 64

Texture Units: 240

Base Clock: 1400MHz

Boost Clock: 1750MHz

Memory Speed: 2 GT/s

Memory Bandwidth: 1024GB/s

HBM2 Capacity: 16GB

Bus Width: 4096-bit

TDP (BDP): 300W
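
The memory bandwidth figure in the spec list follows directly from the bus width and the per-pin transfer rate. Here is a minimal sketch of that arithmetic; the helper name `memory_bandwidth_gbs` is made up for illustration and isn't from any AMD tool.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gtps: float) -> float:
    """Peak bandwidth in GB/s: bytes moved per transfer times billions of transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_gtps

# Values taken from the spec list above: 4096-bit bus at 2 GT/s.
radeon_vii_bandwidth = memory_bandwidth_gbs(4096, 2.0)
print(f"Radeon VII peak bandwidth: {radeon_vii_bandwidth:.0f} GB/s")
```

This is a theoretical peak, so real-world throughput will be lower.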

AMD doubled the number of HBM2 memory stacks, so you get 16GB and a whopping 1TB/s of memory bandwidth. On the surface, the GPU core is largely the same: with slightly fewer GPU cores (3,840 compared to 4,096) and a modest 200MHz improvement in clockspeed, in theory it offers only about six percent more computational performance. However, AMD increased the number of integer and floating-point accumulators, and while it mentions these will help with compute performance, they could help in games as well.
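
For anyone who wants to check the "six percent" figure, here's a rough sketch of the usual theoretical-throughput math (cores × 2 operations per clock × clockspeed). The function name is hypothetical, and the 1550MHz Vega 64 clock is an assumption derived from the "200MHz improvement" mentioned above rather than an official spec.

```python
def fp32_tflops(shader_cores: int, clock_mhz: float) -> float:
    """Theoretical FP32 rate: one fused multiply-add (2 FLOPs) per core per clock."""
    return shader_cores * 2 * clock_mhz * 1e6 / 1e12

radeon_vii = fp32_tflops(3840, 1750)
# Assumed Vega 64 clock: 1750 - 200 = 1550 MHz (inferred from the text, not a quoted spec).
vega_64 = fp32_tflops(4096, 1550)

gain_pct = (radeon_vii / vega_64 - 1) * 100
print(f"Radeon VII: {radeon_vii:.2f} TFLOPS")
print(f"Vega 64:    {vega_64:.2f} TFLOPS")
print(f"Theoretical gain: {gain_pct:.1f}%")
```

Paper numbers like these ignore memory bandwidth and sustained clocks, which is why the gaming results end up looking different.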

One thing the Radeon VII doesn't do is add any major new features. For better or worse, Nvidia took a different approach last year with its GeForce RTX line, pushing ray tracing hardware and deep learning functions. Many have complained and said, in effect, we wish Nvidia had used the die space on more GPU cores instead of ray tracing and deep learning. AMD meanwhile doesn't waste space on those forward-looking architectural changes, but neither does it add any cores.

What does that mean for games? With no dedicated hardware for ray tracing, performance in such workloads will likely be a fraction of the RTX line. But then, there's currently only one ray tracing game, two if you count the user-created executable for Quake, and one full ray tracing benchmark. The Radeon VII can't even try to run any of those as they appear to specifically target Nvidia's RTX GPUs (or at least they require DXR enabled drivers, which AMD hasn't released yet).

Peering into the hazy future with my crystal ball, there's a reasonable chance we won't get 'acceptable' ray tracing performance until the second generation of DXR hardware. As for deep learning, AMD has decent FP16 performance—about 28 TFLOPS, or twice the performance of FP32. It's not at the 80 TFLOPS level of the RTX 2080's Tensor cores, never mind the RTX 2080 Ti's 108 TFLOPS, but FP16 isn't used much in games anyway. For most gamers, it won't matter.

The price is a bit of a slap to the face for anyone hoping for relief from the GeForce RTX cards. Some gamers balked at Nvidia's RTX pricing, and its CEO even went so far as to call the poor launch sales a "punch in the gut." The RTX cards are generally faster than their outgoing GTX counterparts and include new features, but they're not substantially faster in existing games. Performance ends up being a baby step forward for roughly the same price.

The Radeon VII is even more of a stumble than the RTX cards. It's clearly a high-end card going after the same market as the RTX 2080, with the same base price. Unfortunately, it looks a lot more like a direct competitor to the outgoing GTX 1080 Ti, about two years after that card came out. Add to that higher power use and the missing features and it starts to feel a lot like the R9 Fury X and RX Vega 64 launches: Too little, too late.

AMD does sweeten the deal a bit by including three free games with the Radeon VII. You get the Resident Evil 2 remake, Devil May Cry 5 (March 8), and The Division 2 (March 15). If you don't own those already and plan to buy them, that's potentially $150 in savings (not that AMD paid the publishers that much).

The big question is how all of this plays out in the real world, running actual applications—and by applications, I mean games. AMD lists various other applications that can benefit, mostly for content creators, but I'll leave the discussion of how Radeon VII compares to other GPUs in content creation tasks to our colleagues at AnandTech and Tom's Hardware. 4k and 8k video editing workloads may be meaningful to some people, but here we're focusing on gaming performance, and for that I've run benchmarks at stock and overclocked settings on the Radeon VII.

Radeon VII Overclocking

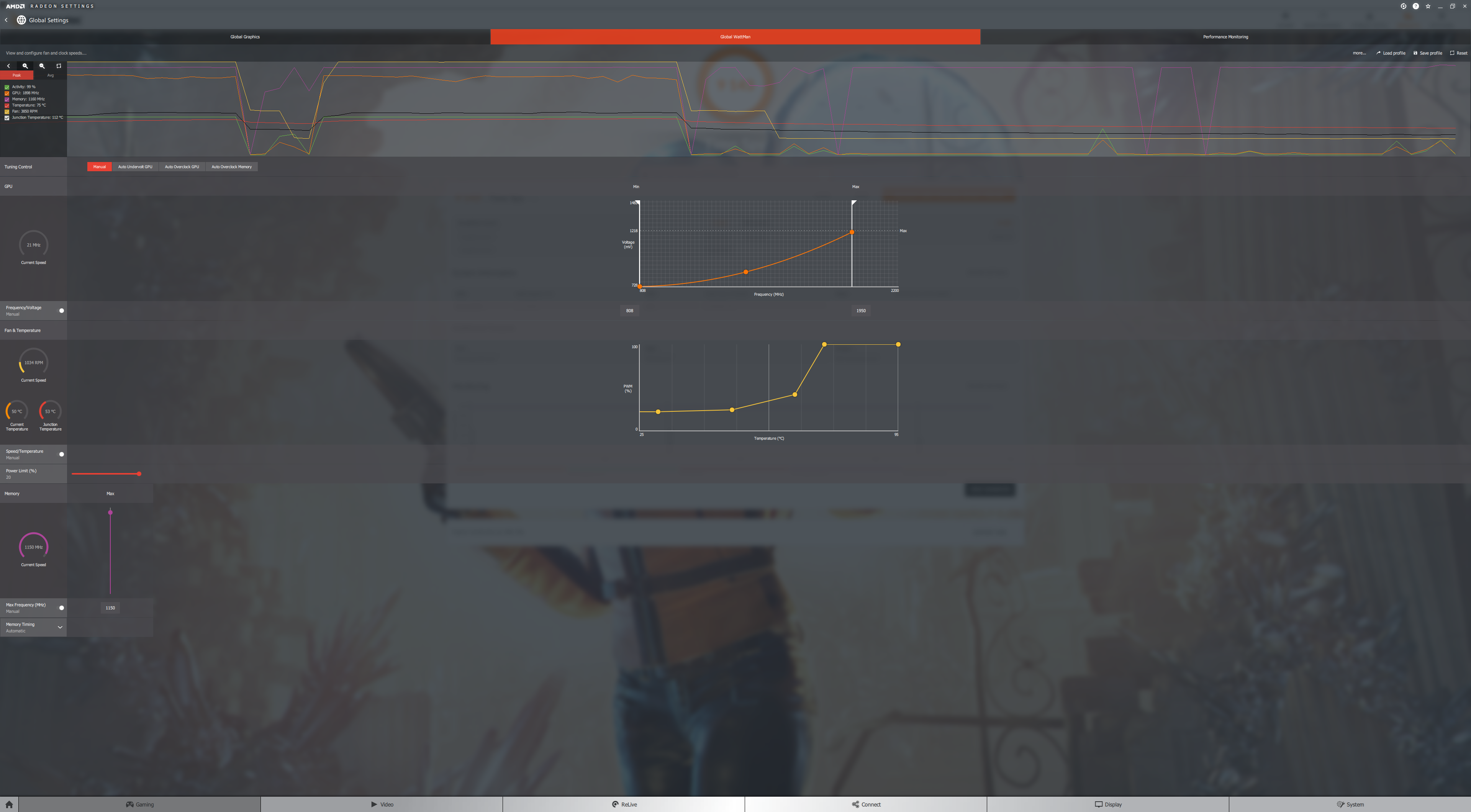

MSI Afterburner doesn't properly support the Radeon VII (yet), but the WattMan functionality of the Radeon Adrenalin drivers provides all the options you need. To establish the upper limit on overclocking, I first maxed out fan speed (it's a bit loud—the card normally peaks at 75 percent fan speed), maxed out the power slider at 20 percent, and then went about tweaking the GPU and HBM2 clocks.

With that in place, after a bit of trial and error (and some system crashes), I ended up with the GPU clock at 1950MHz and the HBM2 at 1175MHz, but that's the maximum rather than static GPU clock. Like other GPUs, the clockspeed can fluctuate based on temperature, power, and other factors. I also dropped the GPU voltage to 1174mV after adjusting the clockspeed slider (otherwise it would have been at 1223mV for 1950MHz).
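
To put those overclocking numbers in context, here's a quick sketch of the percentage changes involved. The stock values are assumptions pulled from the spec list (1750MHz boost, and 1000MHz HBM2 implied by 2 GT/s at double data rate), and `pct_change` is just an illustrative helper.

```python
def pct_change(new: float, old: float) -> float:
    """Percentage change going from old to new."""
    return (new - old) / old * 100

# Assumed stock values: 1750 MHz boost clock, 1000 MHz HBM2 (2 GT/s, double data rate).
gpu_gain = pct_change(1950, 1750)
hbm2_gain = pct_change(1175, 1000)
# 1223 mV is what the slider would have selected for 1950 MHz, per the text.
voltage_drop = pct_change(1174, 1223)

# Peak bandwidth at the overclocked memory speed on the 4096-bit bus, in GB/s.
oc_bandwidth_gbs = 4096 / 8 * (1175 * 2) / 1000

print(f"GPU max clock: {gpu_gain:+.1f}%")
print(f"HBM2 clock:    {hbm2_gain:+.1f}%")
print(f"GPU voltage:   {voltage_drop:+.1f}%")
print(f"OC bandwidth:  {oc_bandwidth_gbs:.1f} GB/s")
```

Keep in mind the GPU figure is a ceiling, not a sustained clock, so the real-world gain will likely be smaller than the raw percentage suggests.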

The result of the above isn't a massive jump in performance, and long-term stability with overclocking is always a concern. Peak 'traditional' GPU temperatures, aka edge temperatures, are around 75C, but the new junction temperature (the hottest part of the chip, measured via 64 thermal nodes) hits 110-115C with these overclocks. AMD sets the junction temperature limit at 110C by default, with an absolute shutdown temperature of 120C, so it seems even with the OC I'm still within specs. Regardless, I wouldn't want to run the GPU using these settings for long, though further tuning (especially of the fan speed and possibly voltage) could help.

AMD Radeon VII Performance

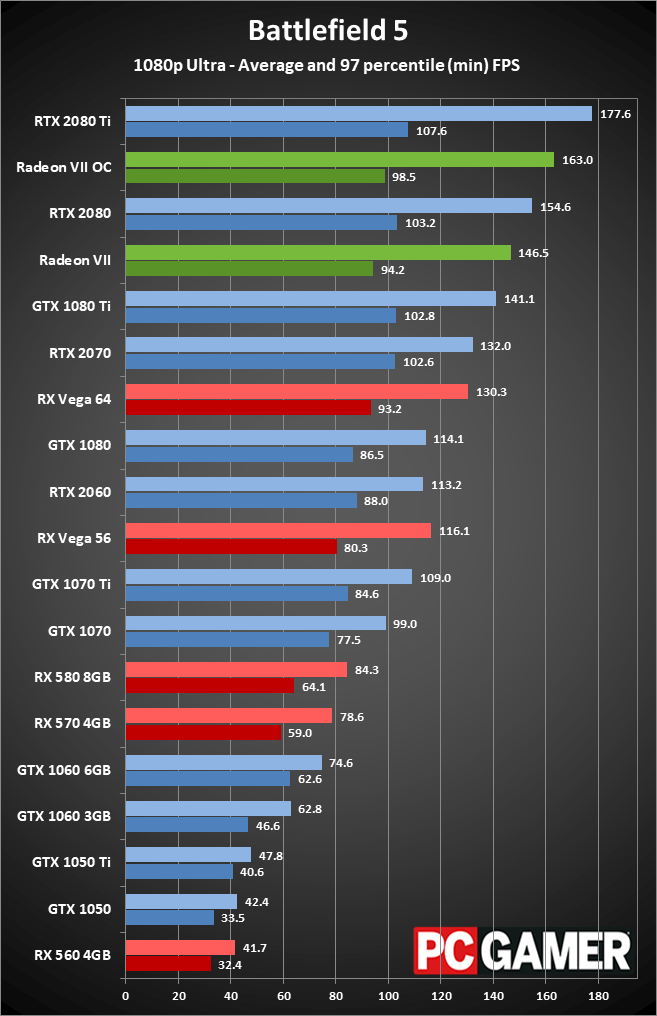

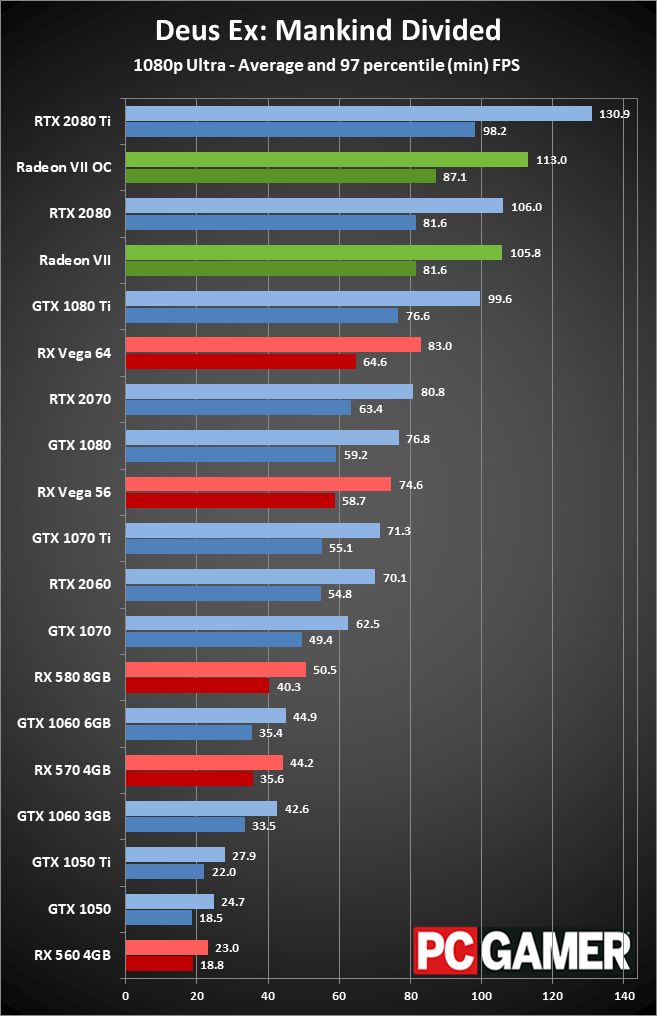

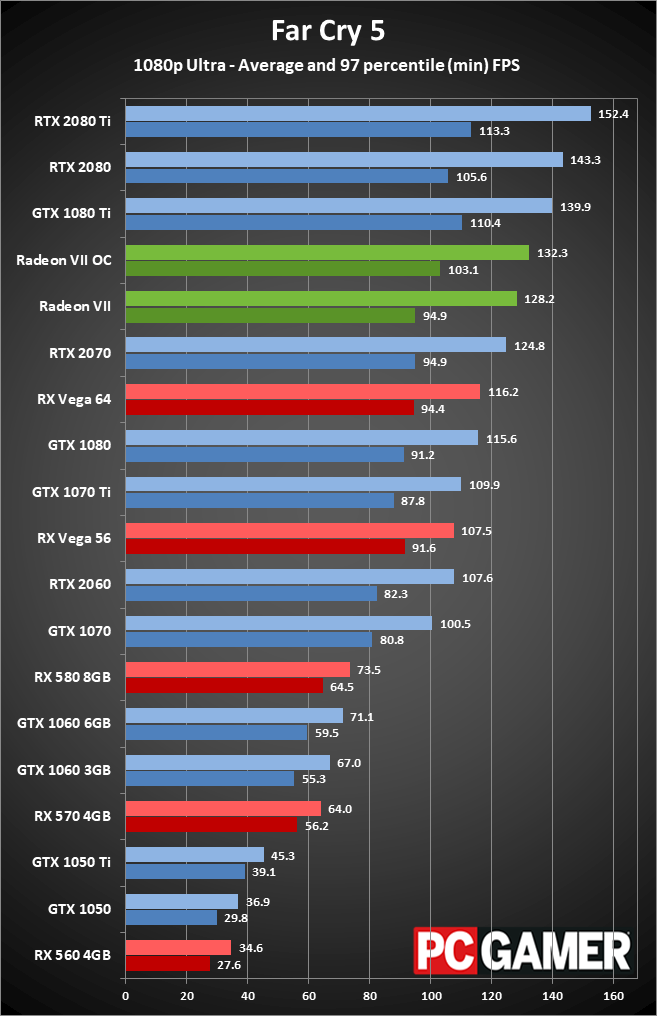

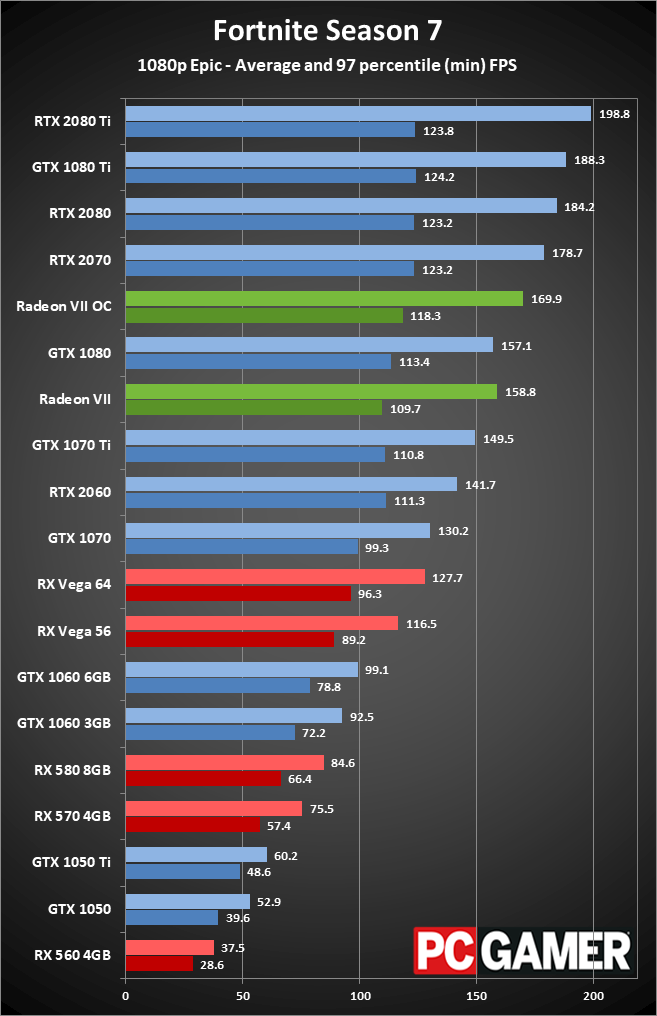

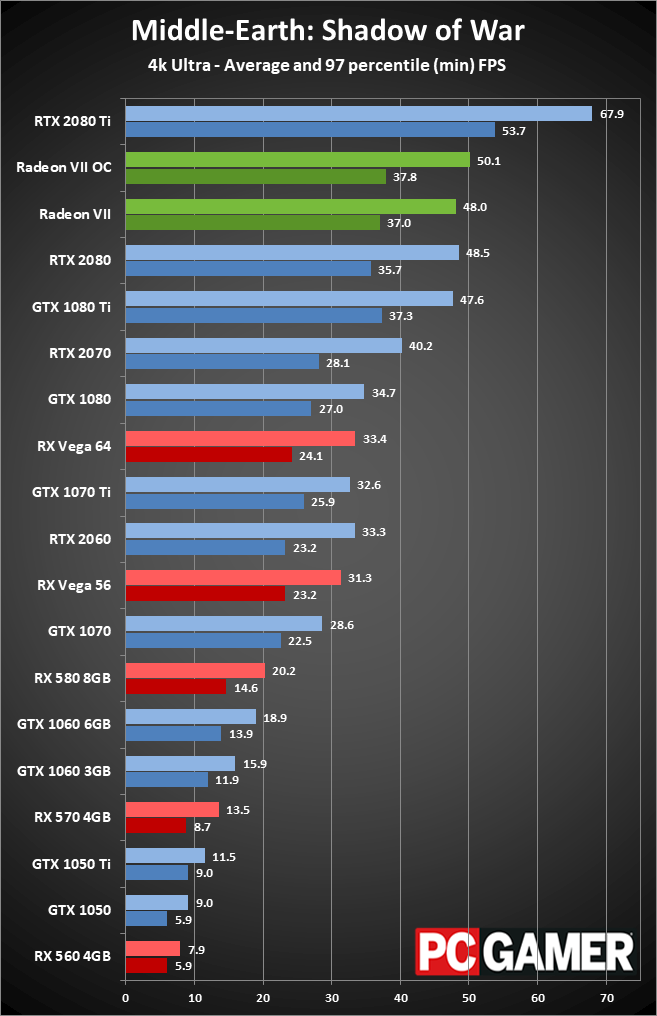

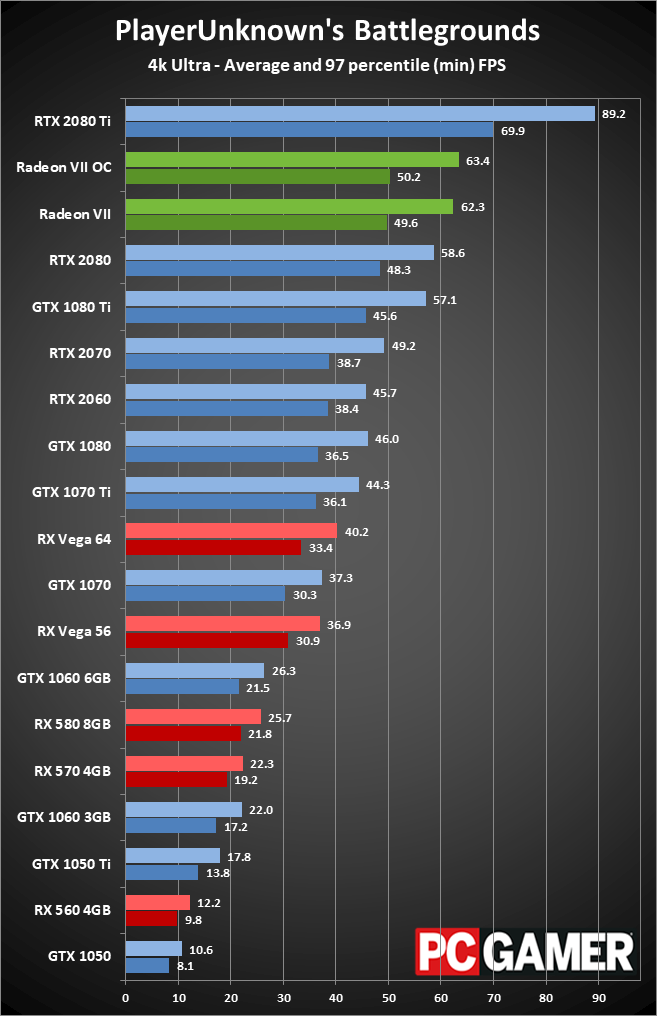

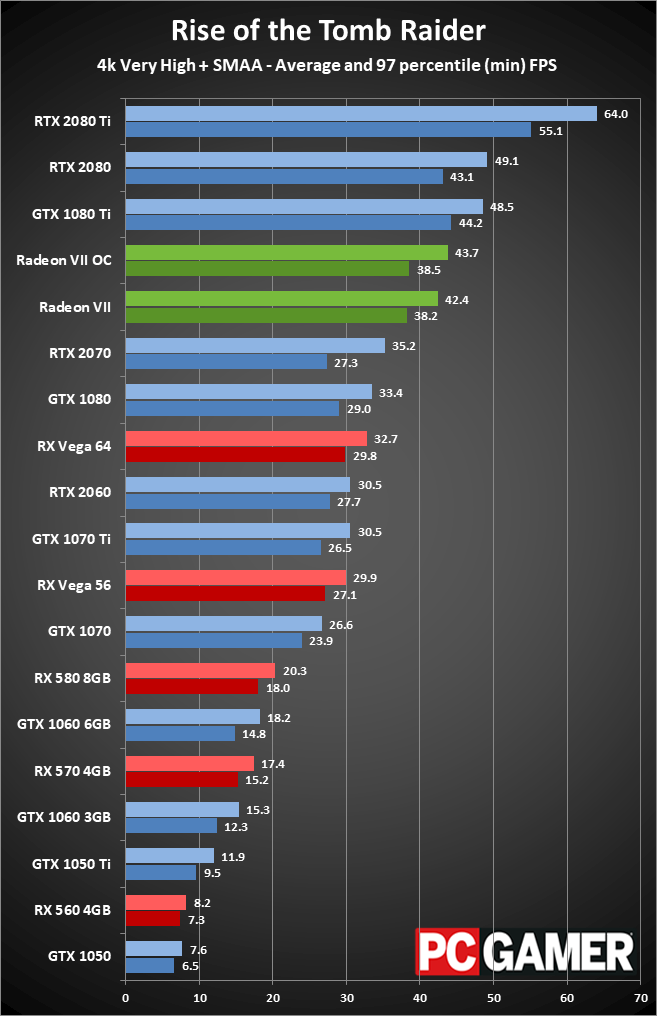

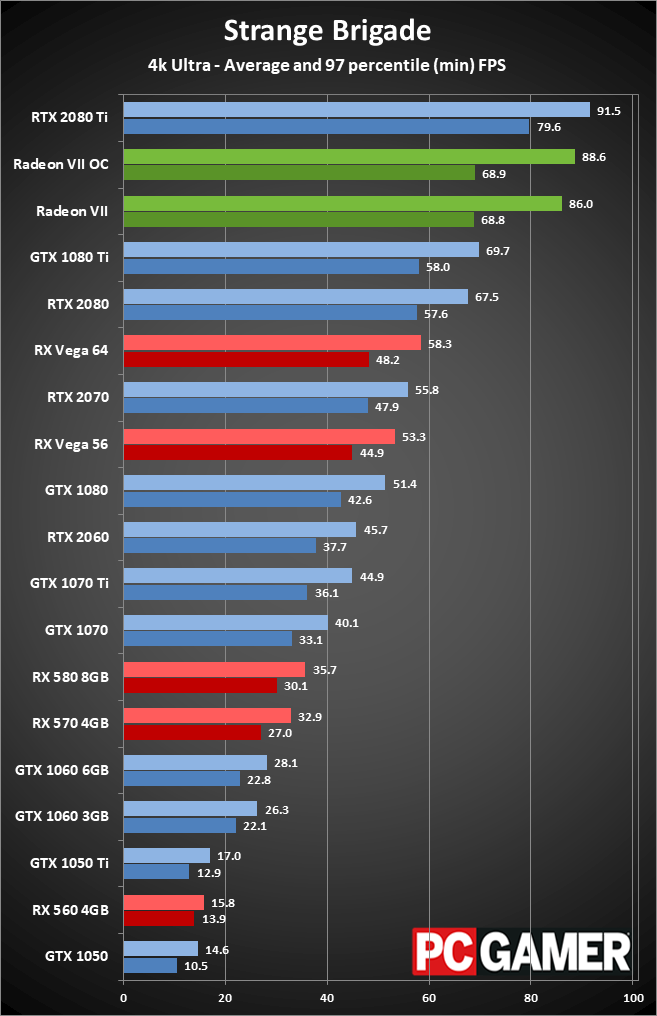

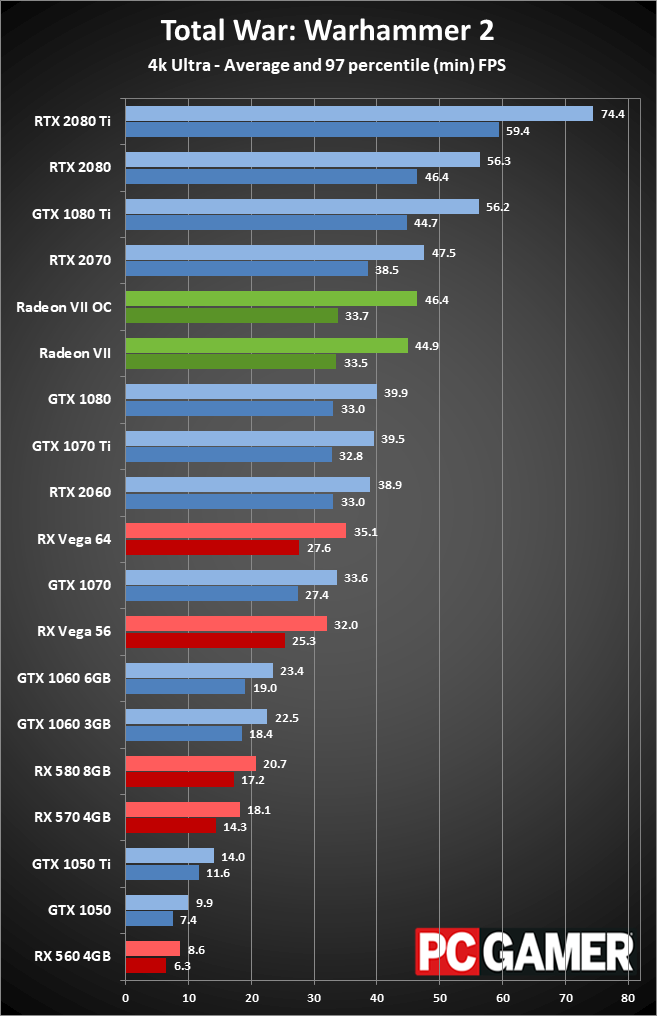

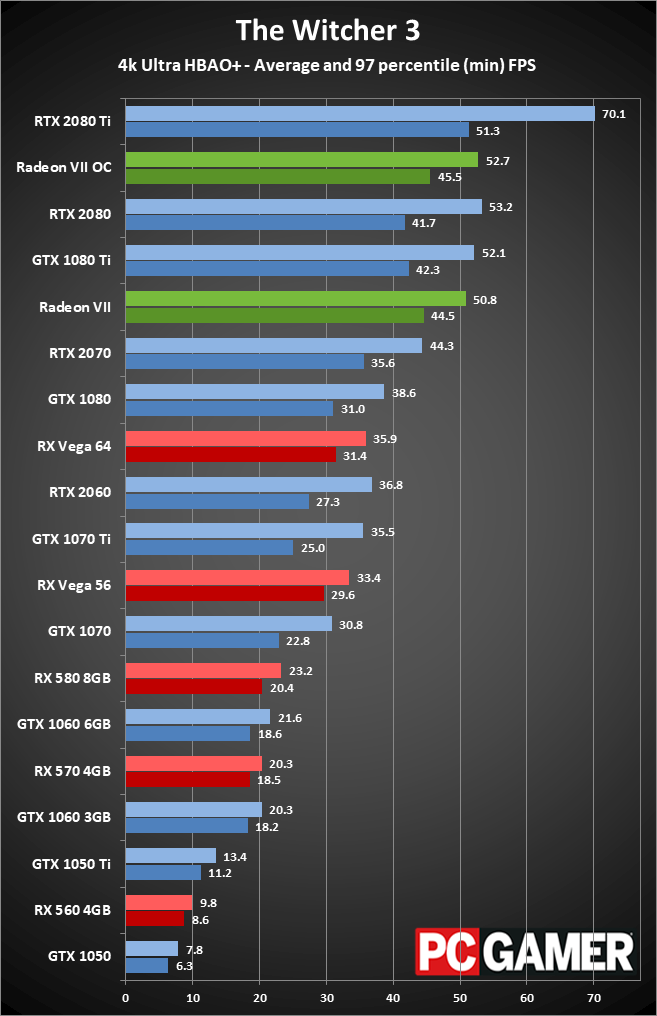

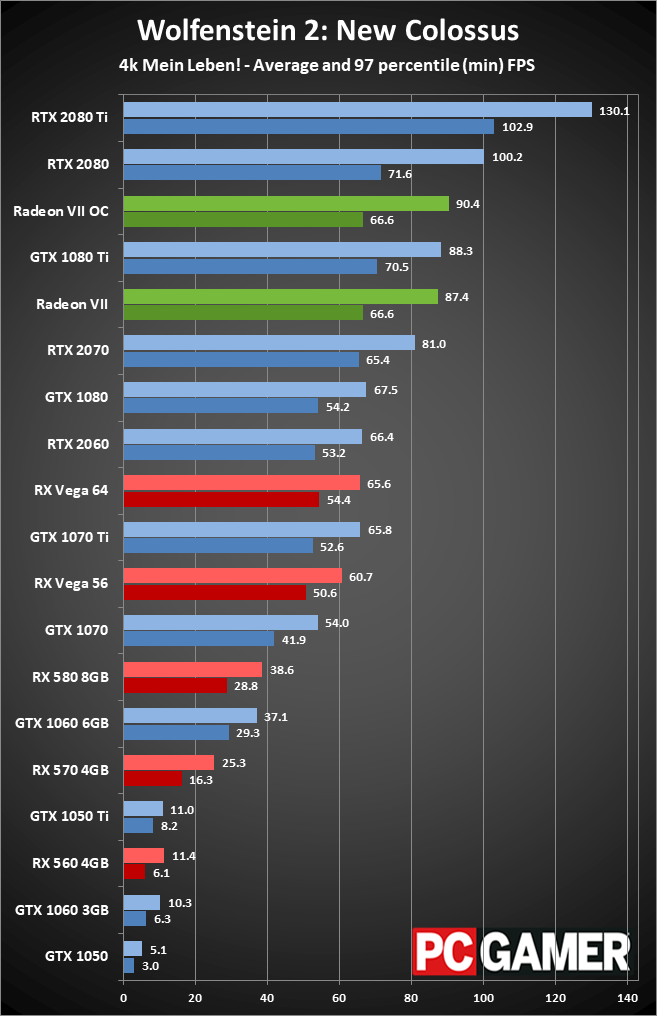

I retested all the graphics cards for Nvidia's Turing launch last September, as well as adding several new games since then. The new games were tested with the latest drivers available at the time, and the Radeon VII is using pre-release drivers that appear similar in features and functionality to the public 19.2.1 drivers. I haven't retested every GPU with the latest drivers currently available, but there shouldn't be more than a minor shift in performance at most.

My GPU test system uses a Core i7-8700K overclocked to 5.0GHz to help minimize CPU bottlenecks during testing, along with other high-end components. You can see the full details in the boxout on the right. For budget and midrange graphics cards, the test system is perhaps overkill, but I want to ensure high-end GPUs like the Radeon VII and GeForce RTX cards are able to reach their performance potential as much as possible.

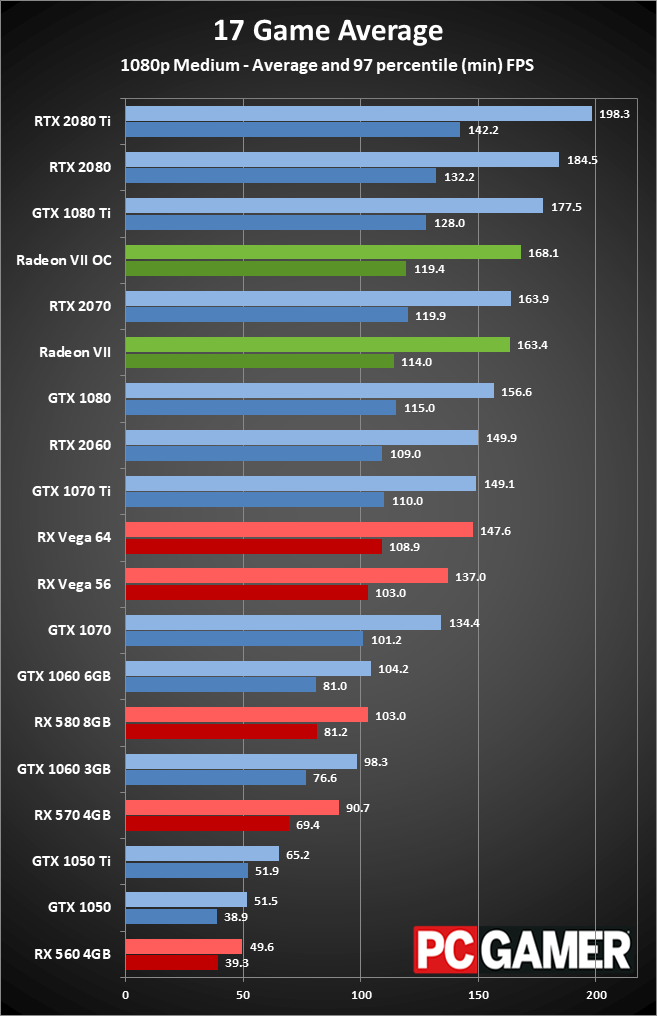

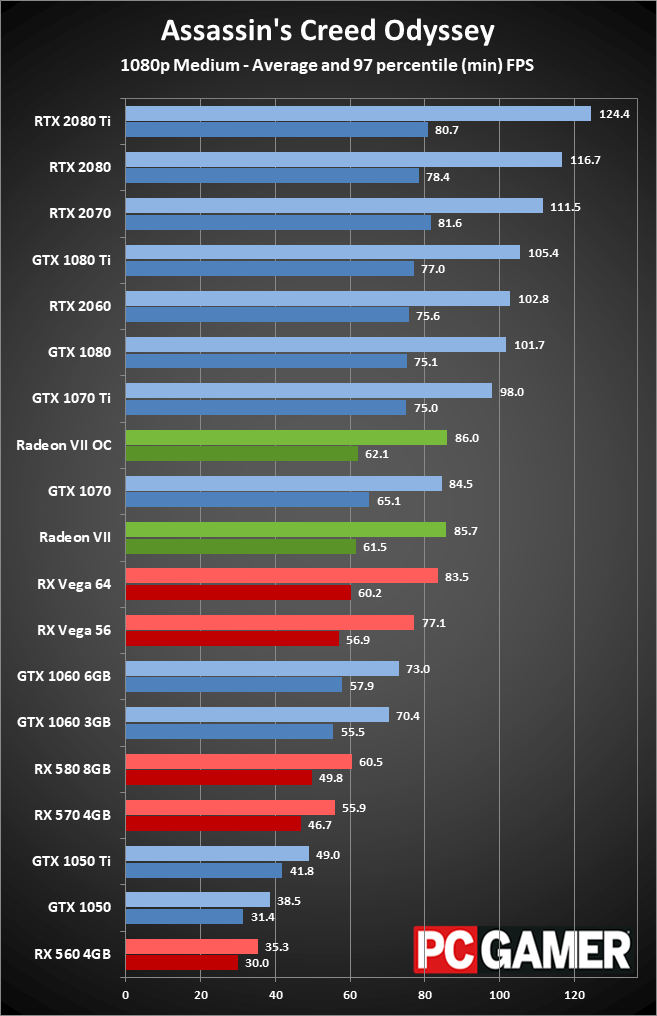

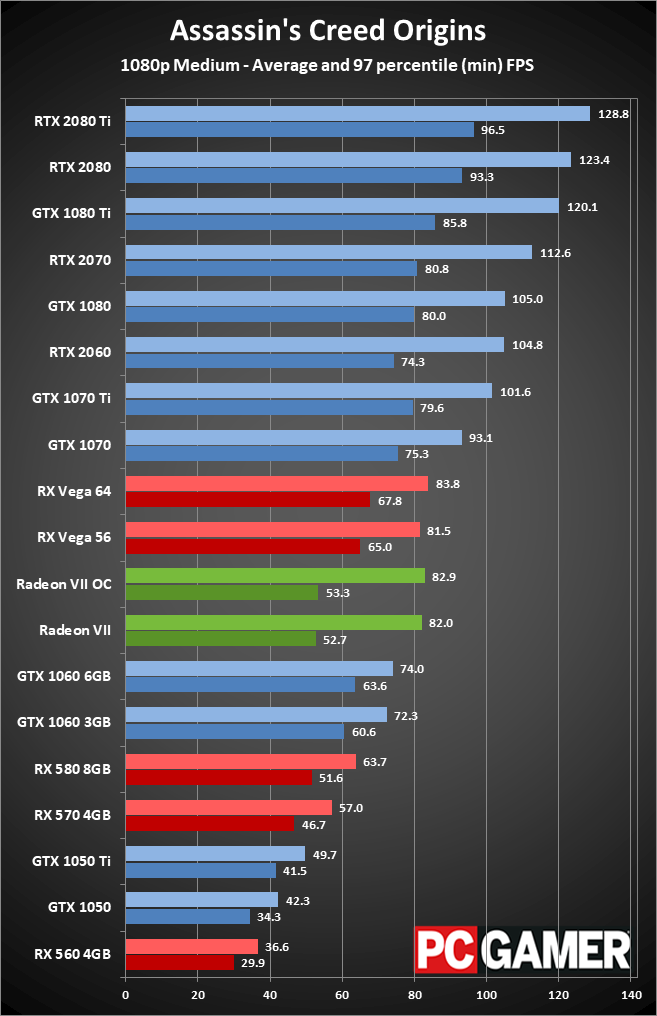

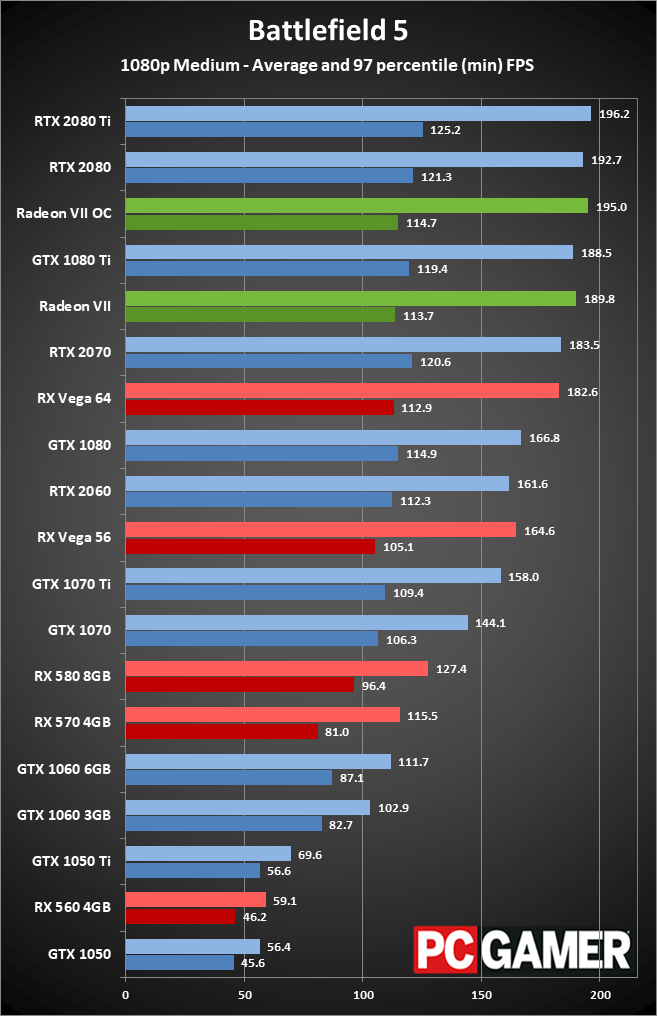

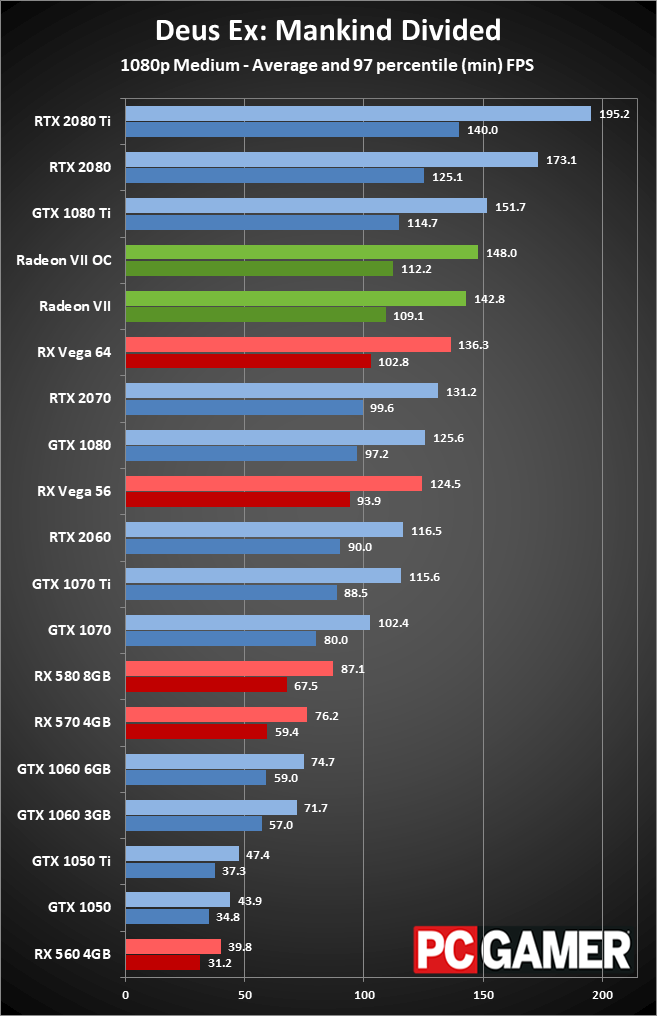

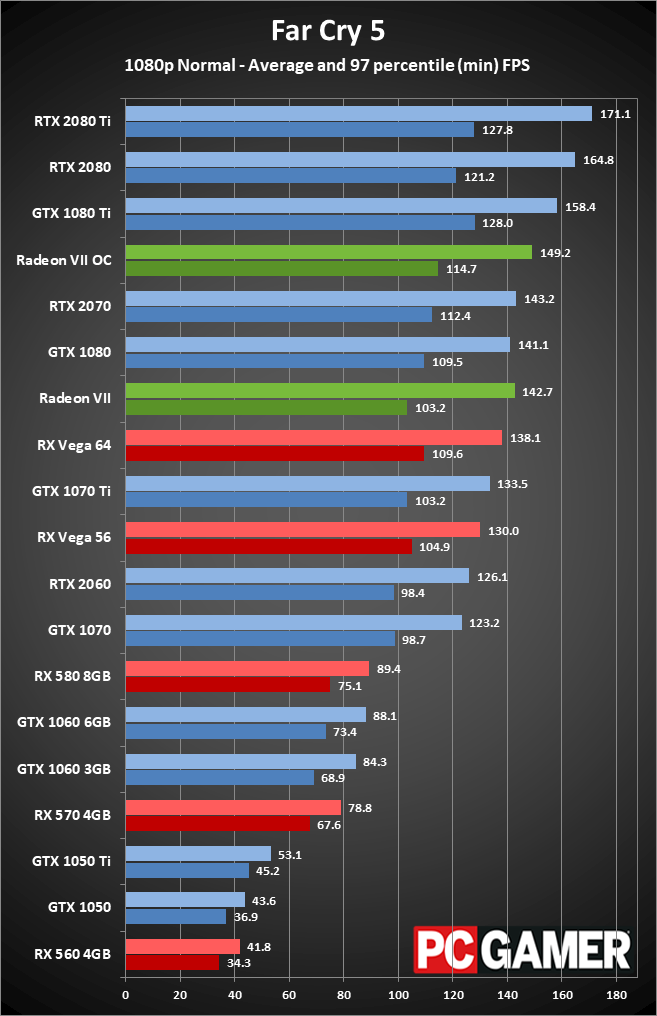

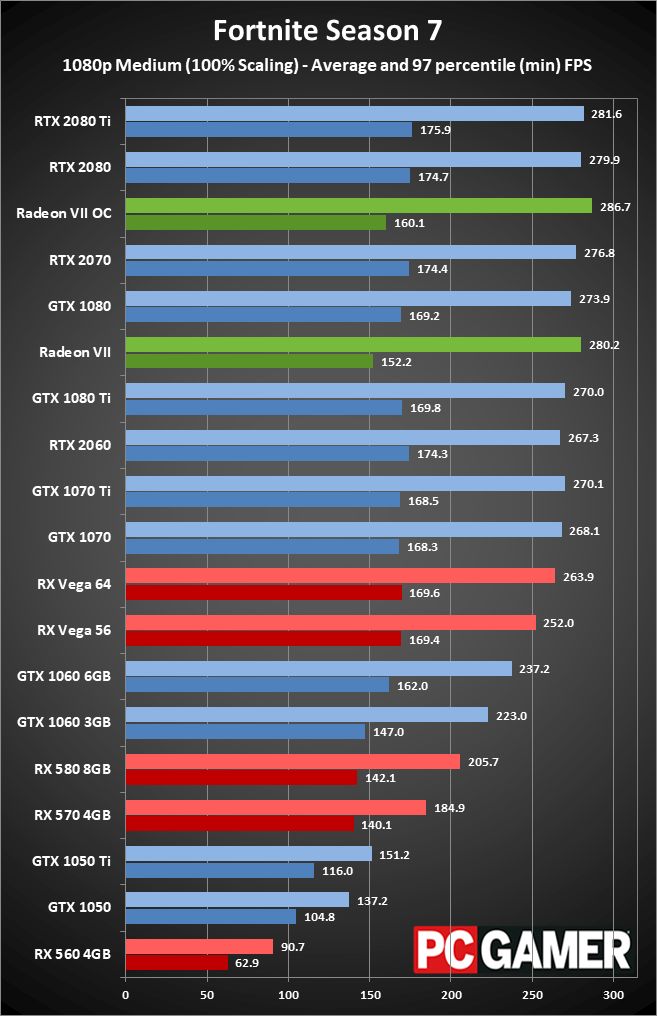

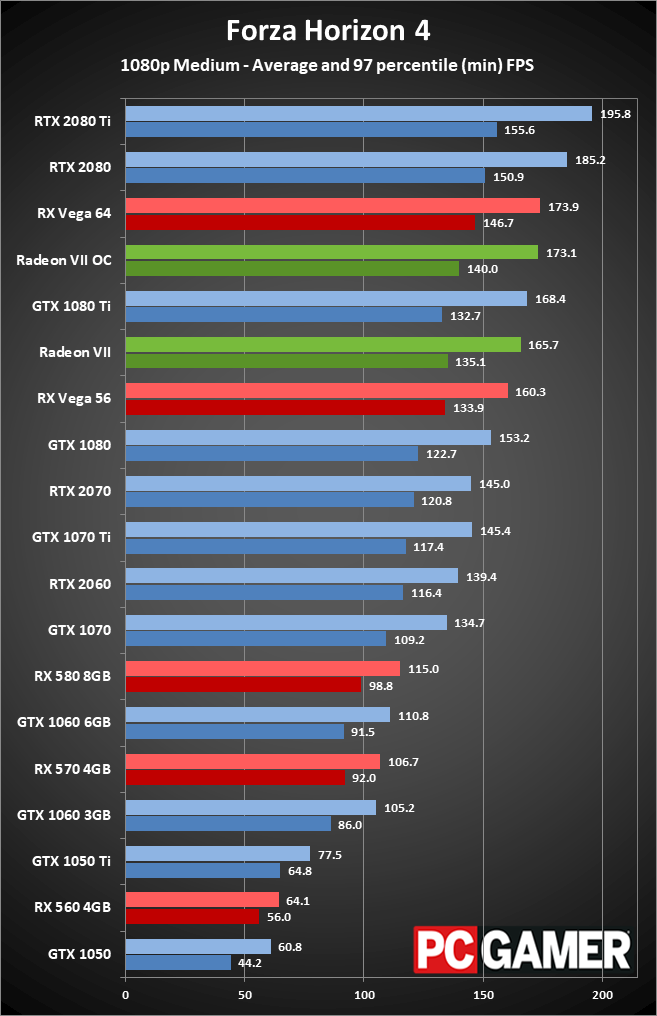

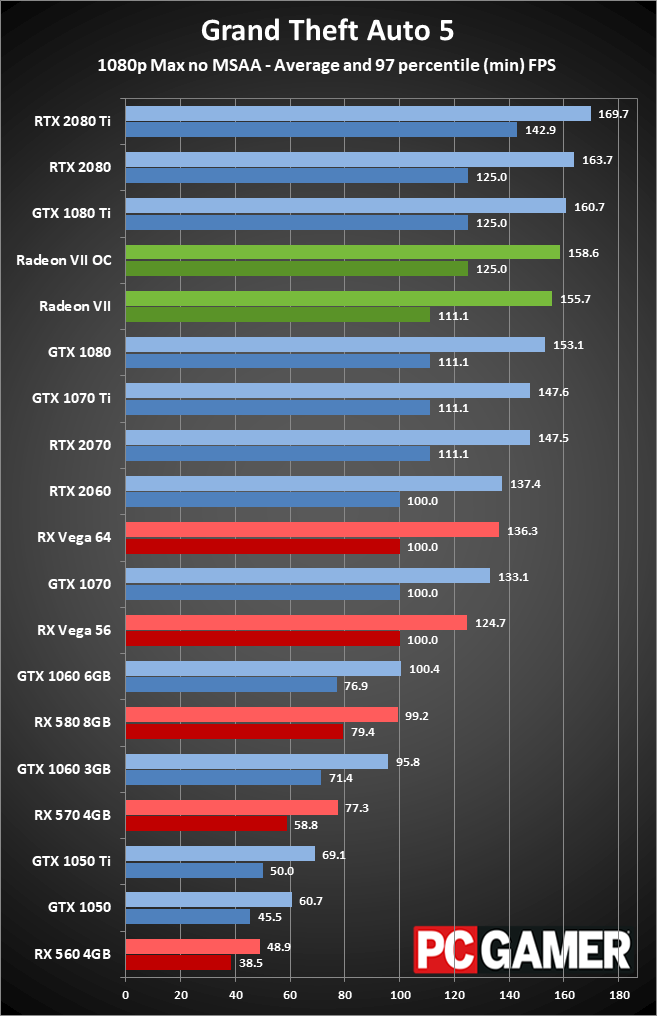

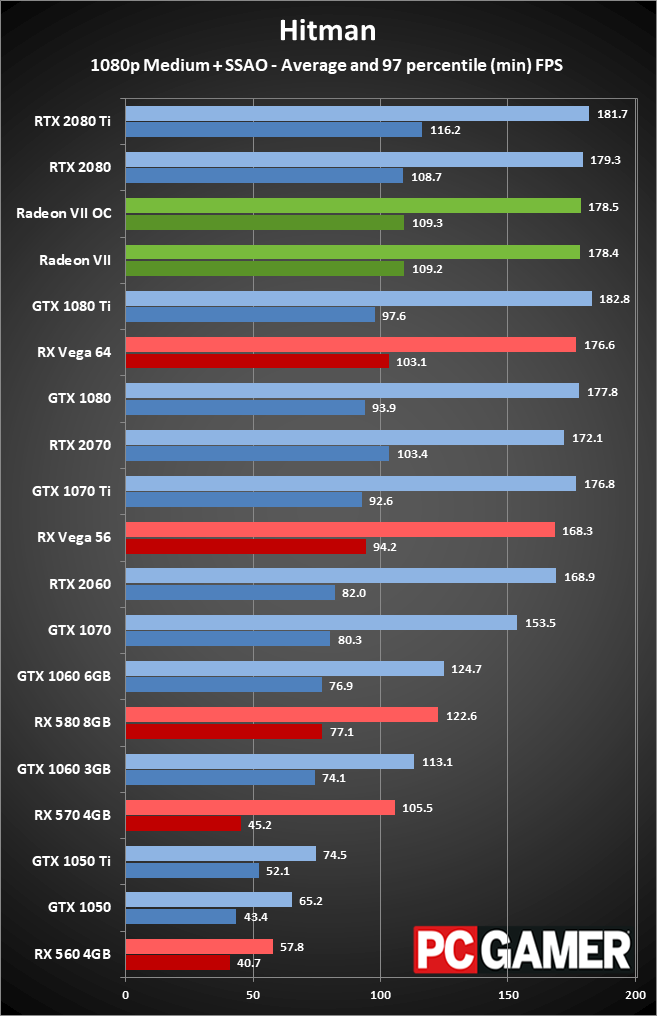

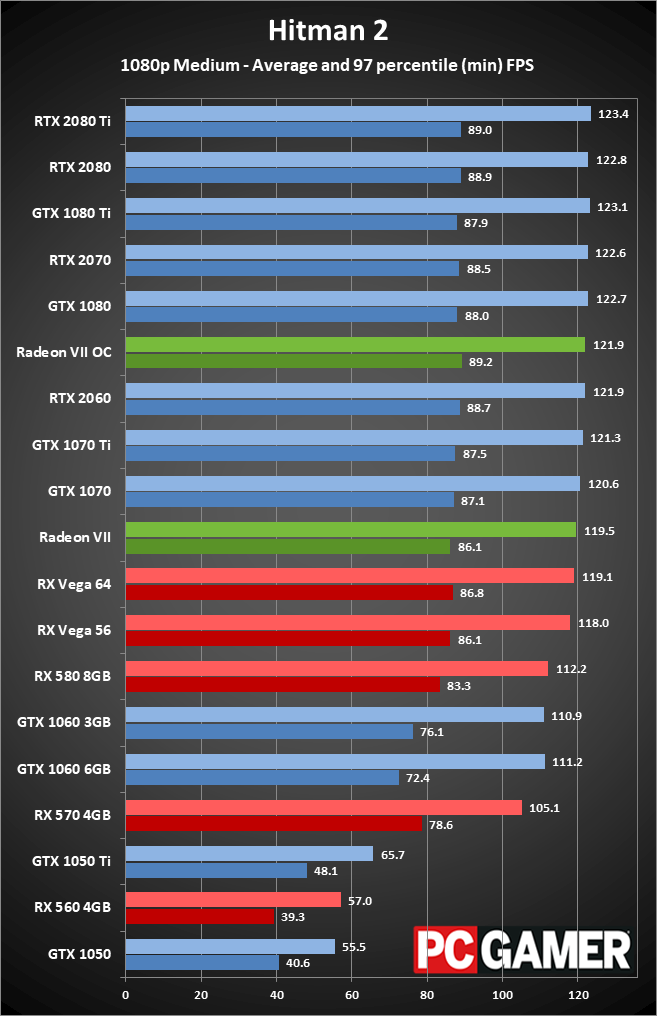

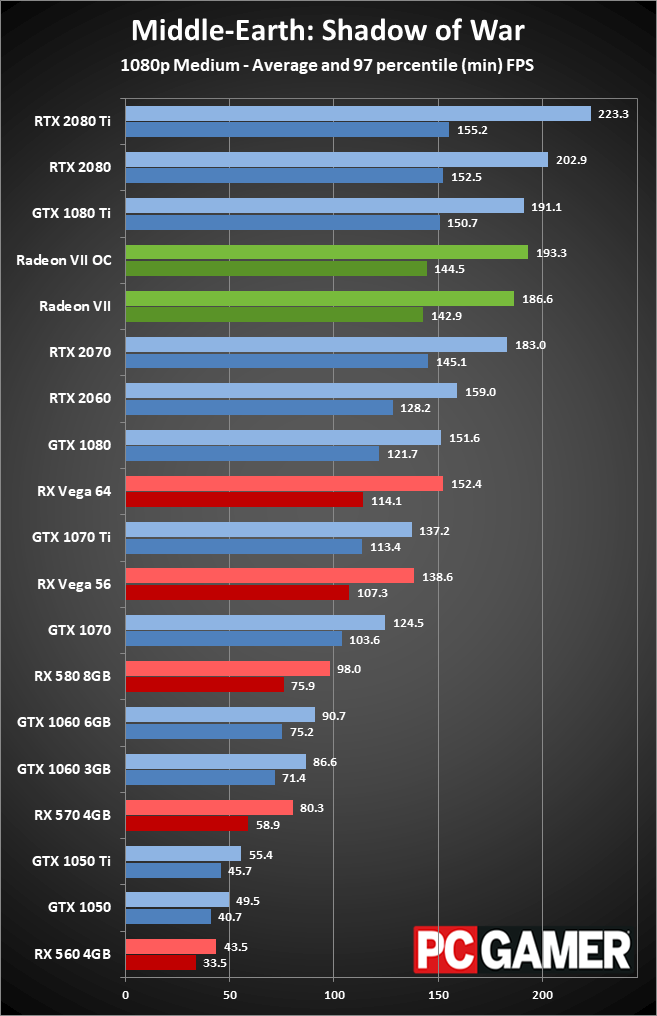

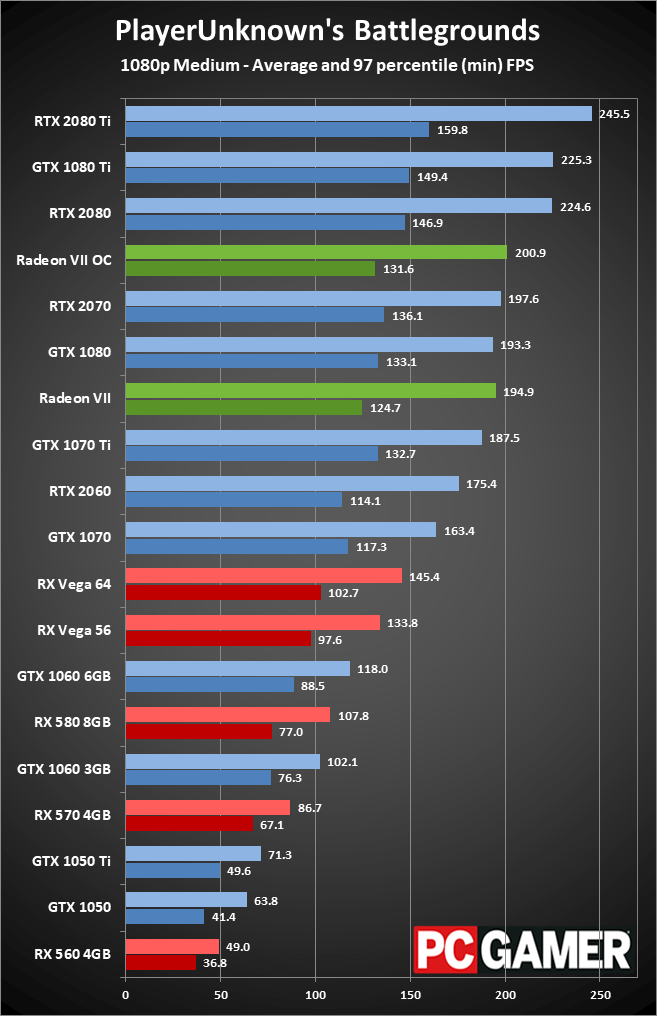

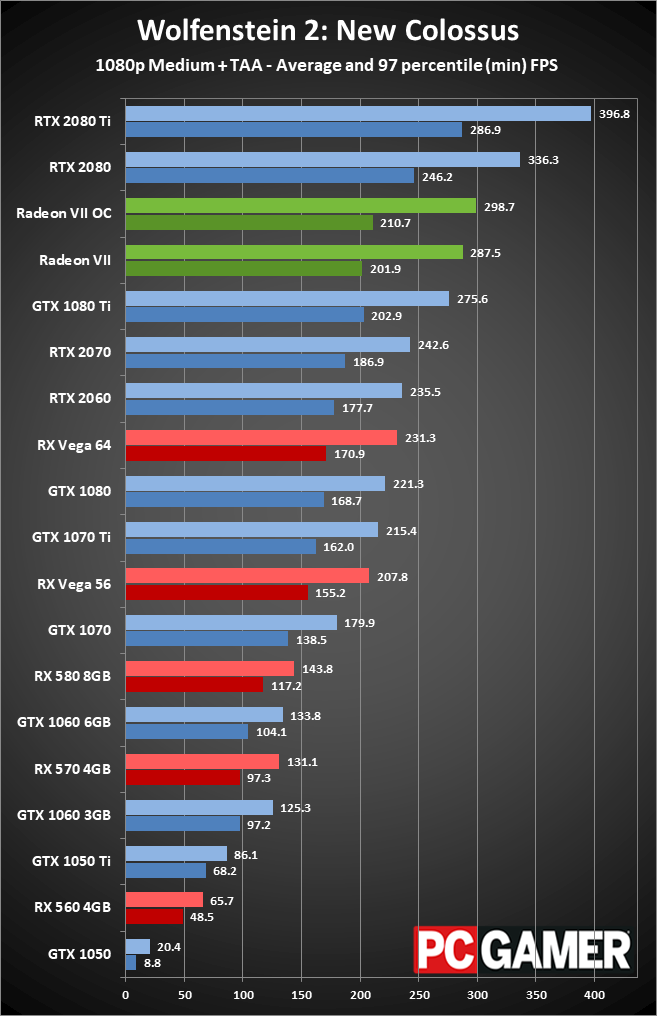

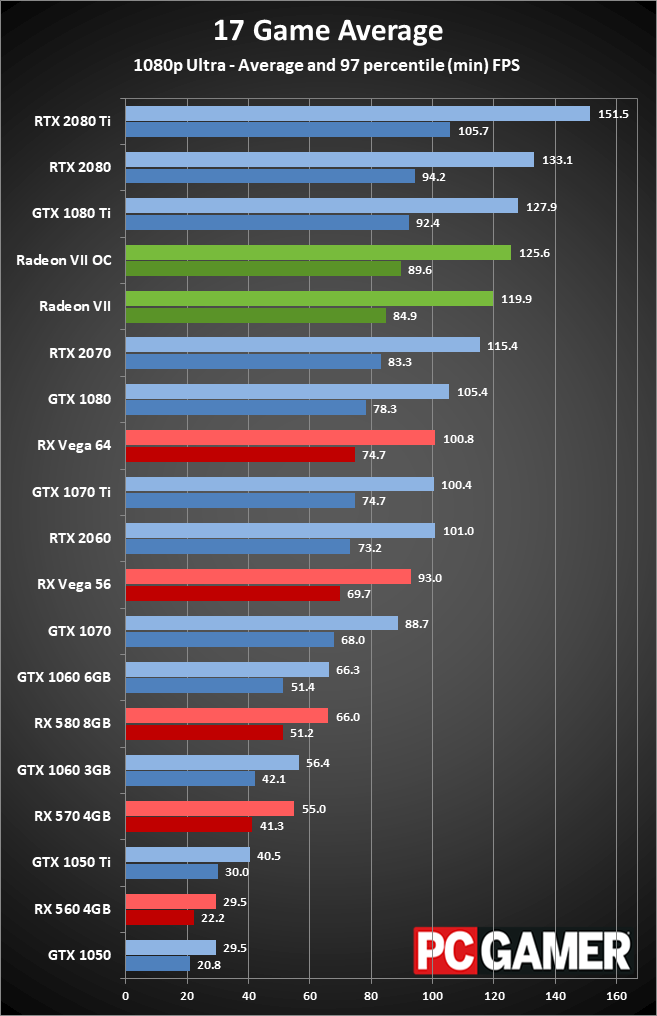

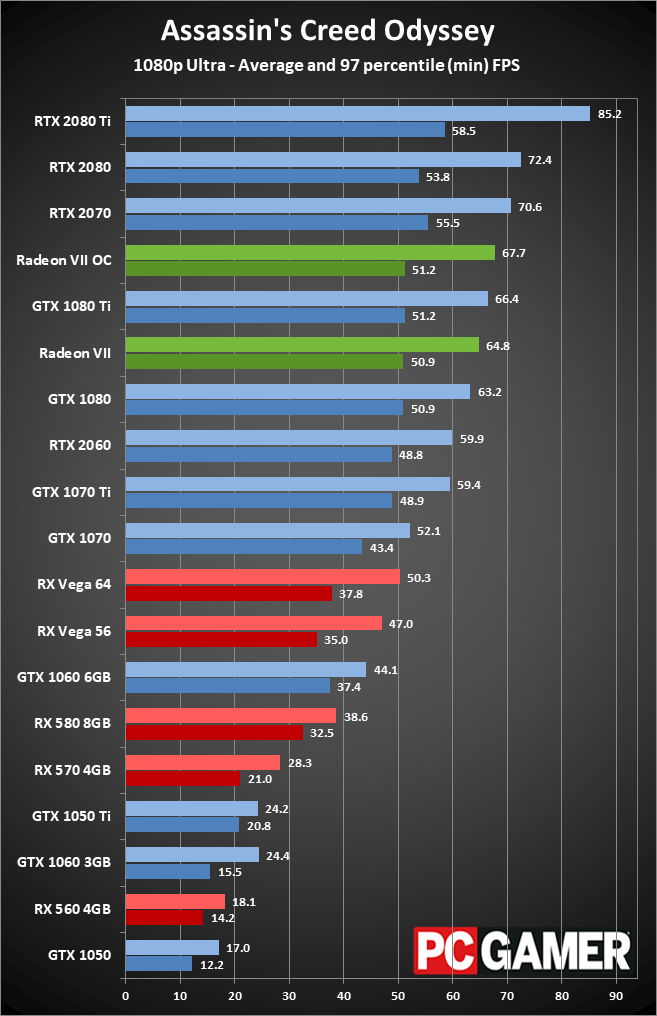

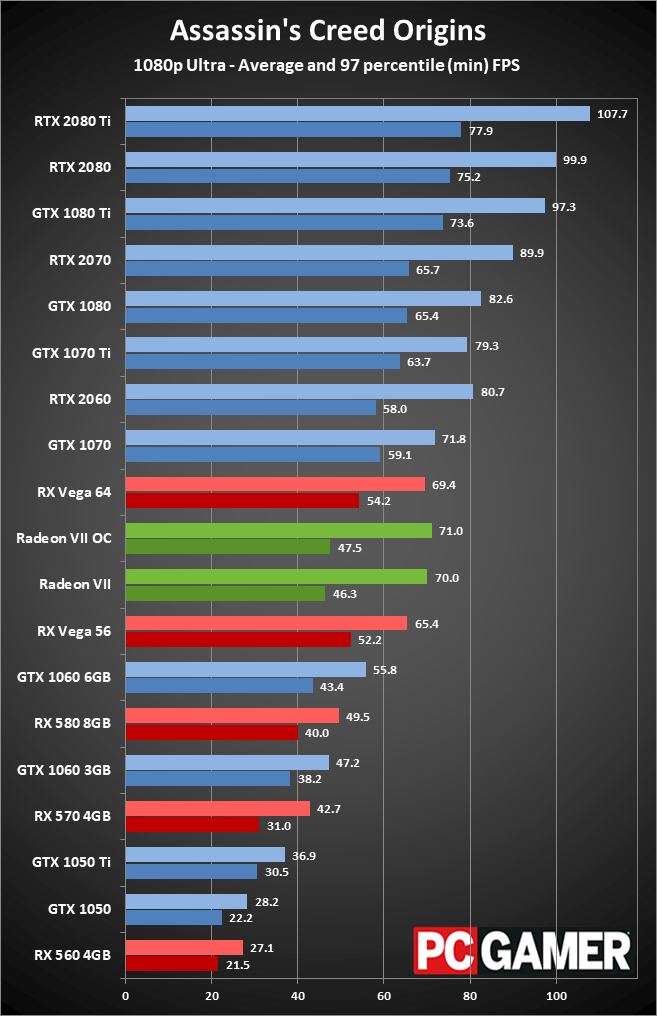

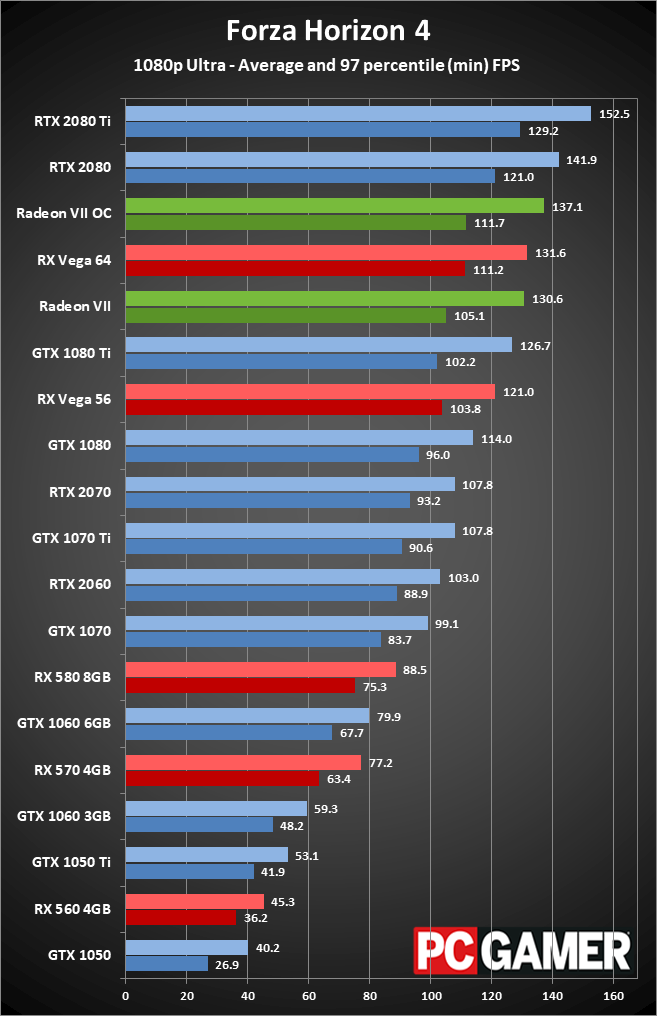

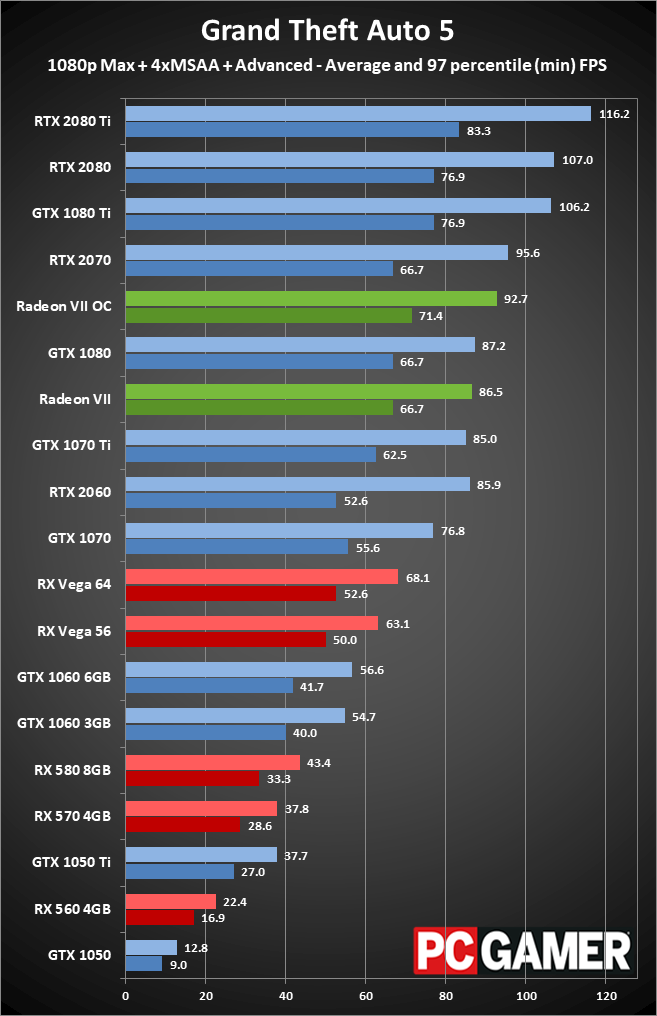

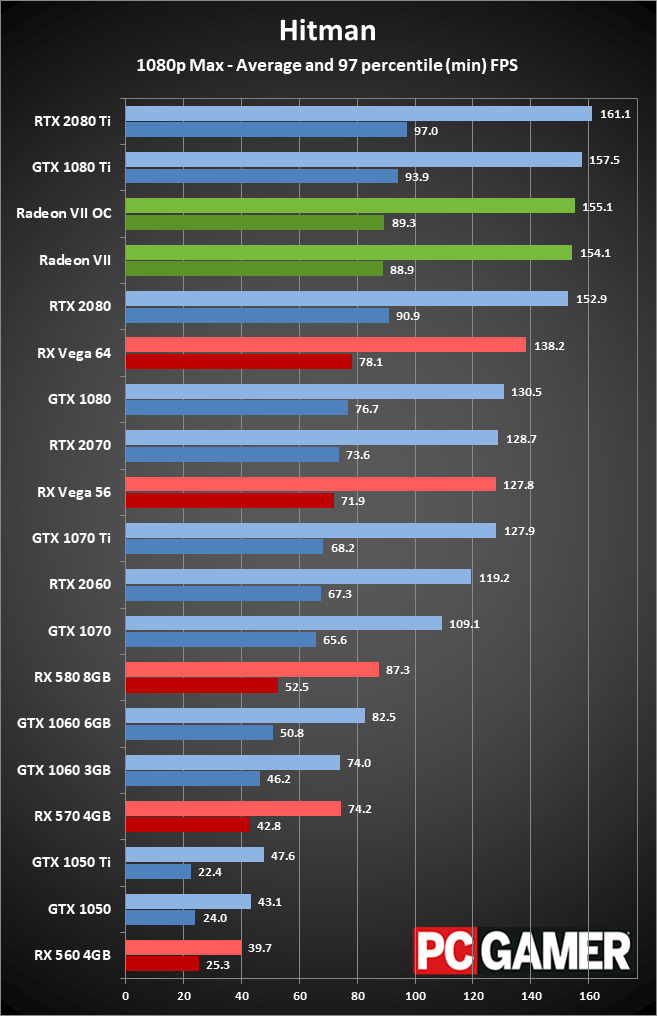

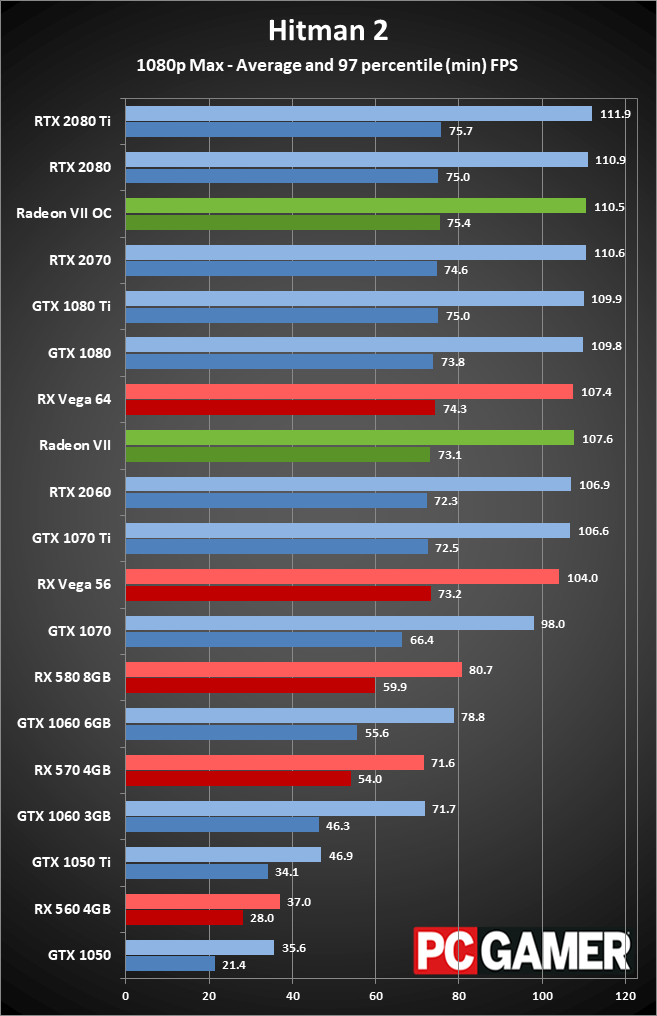

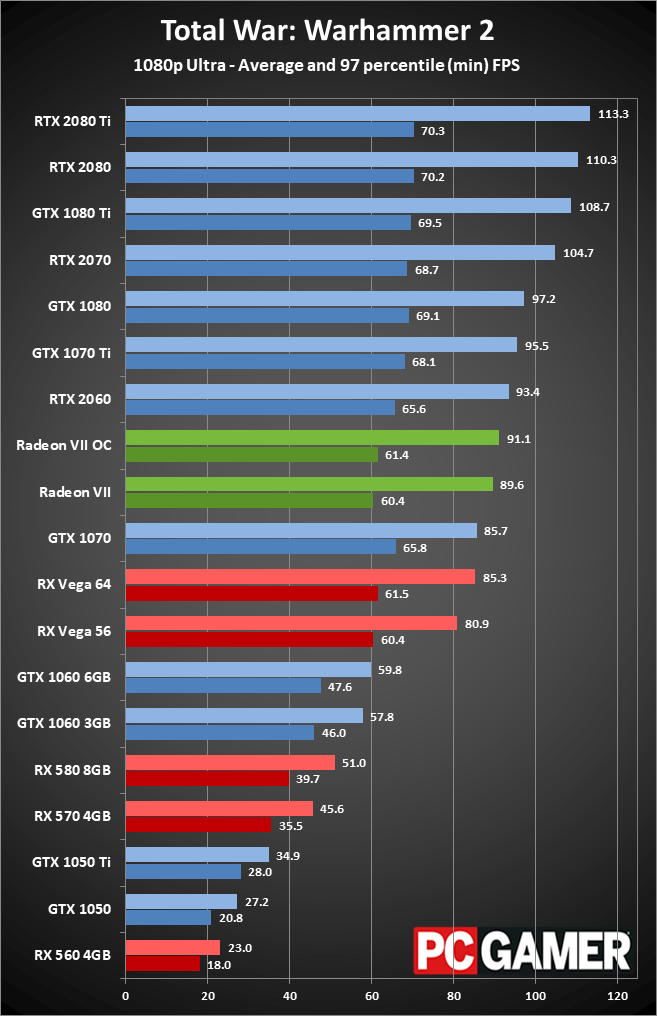

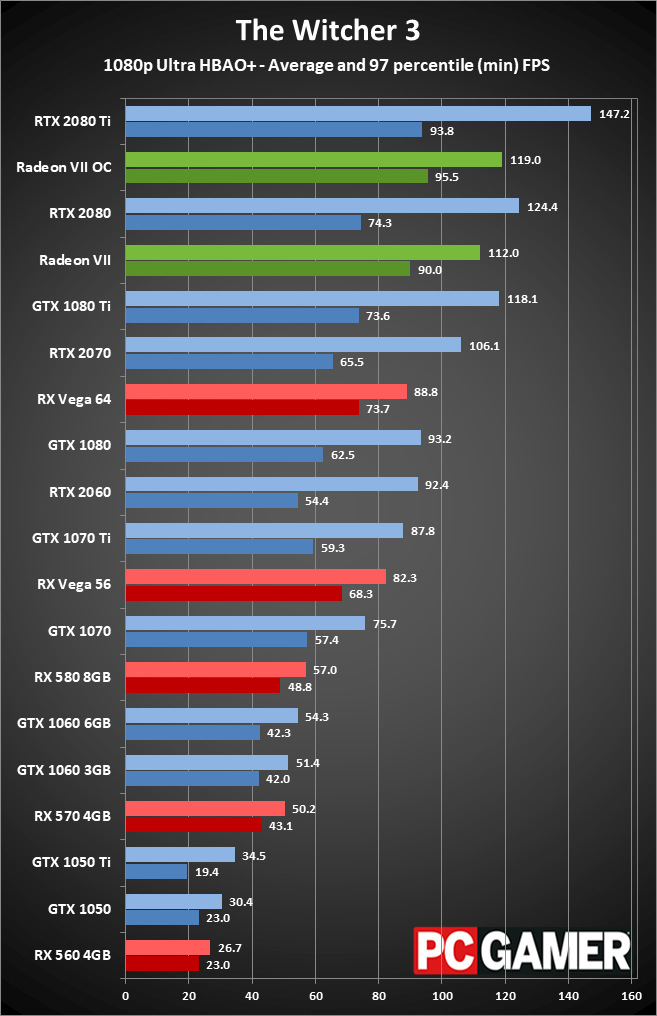

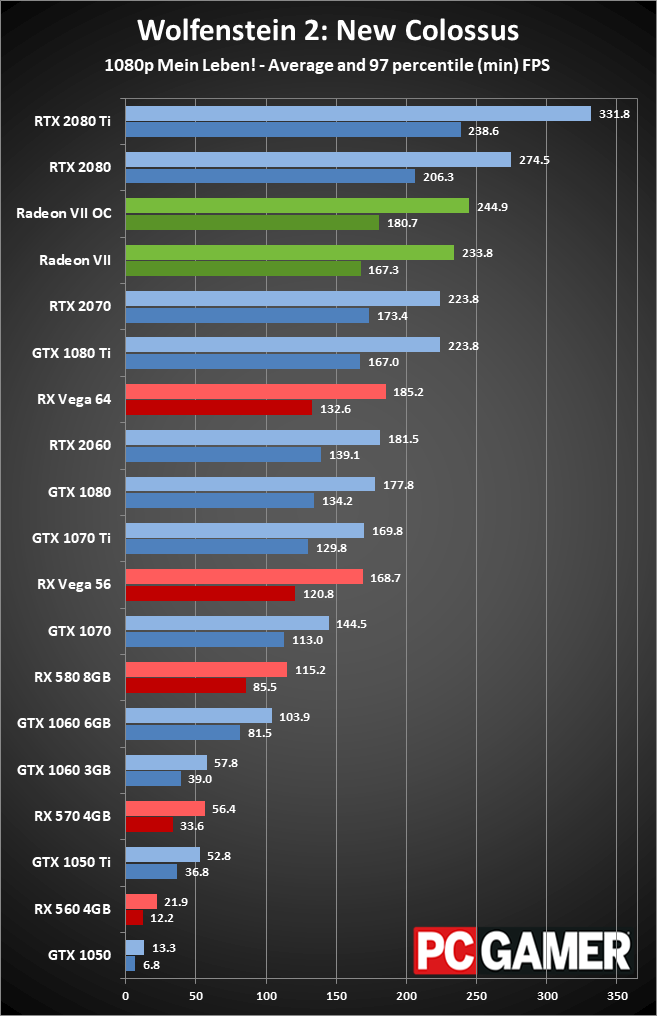

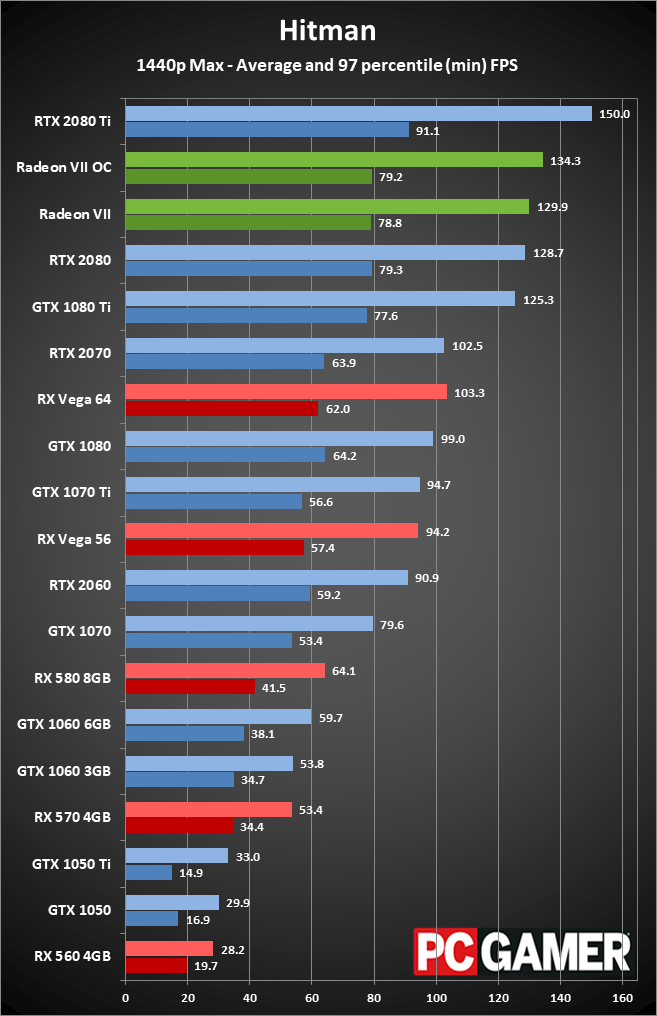

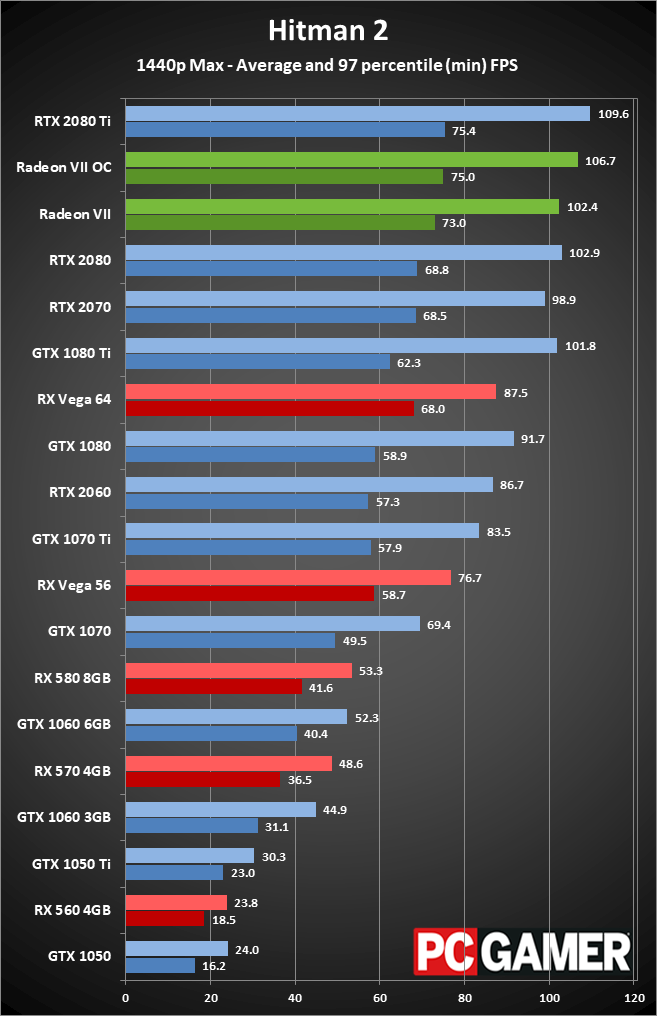

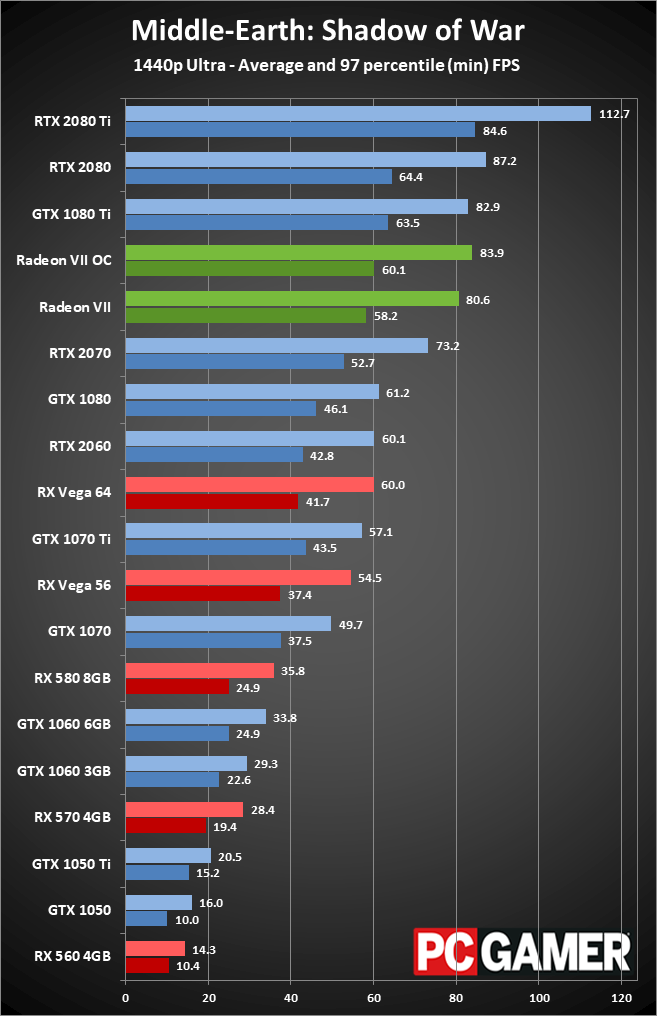

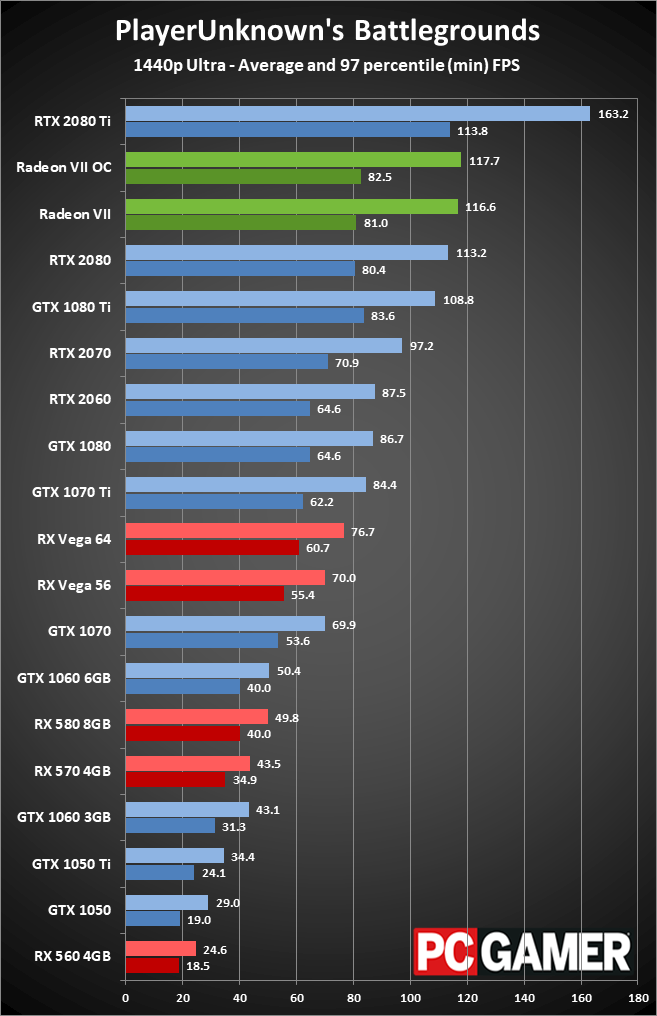

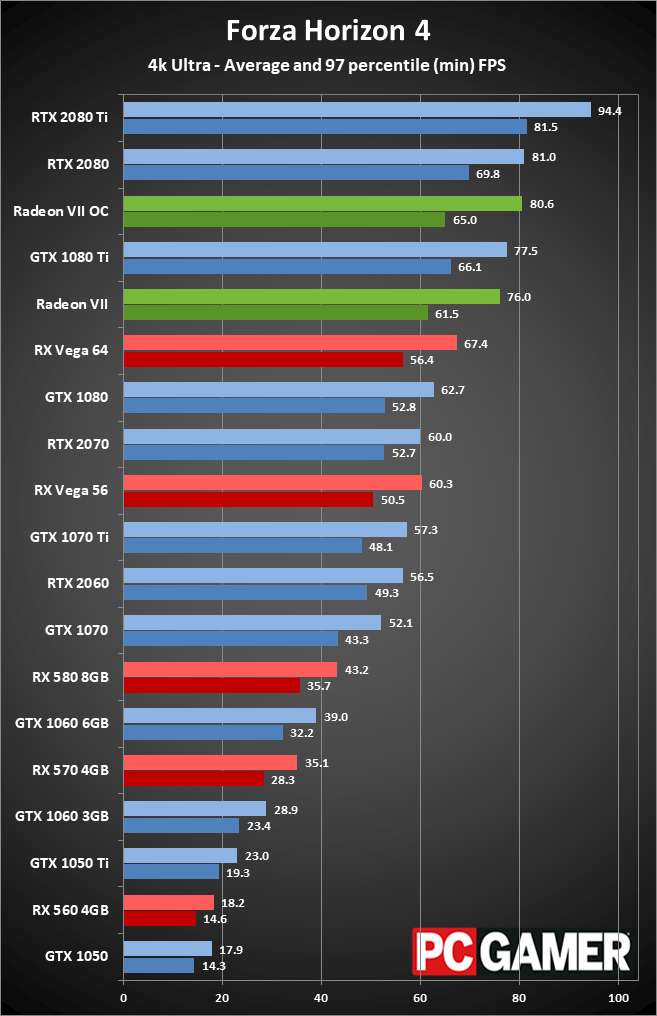

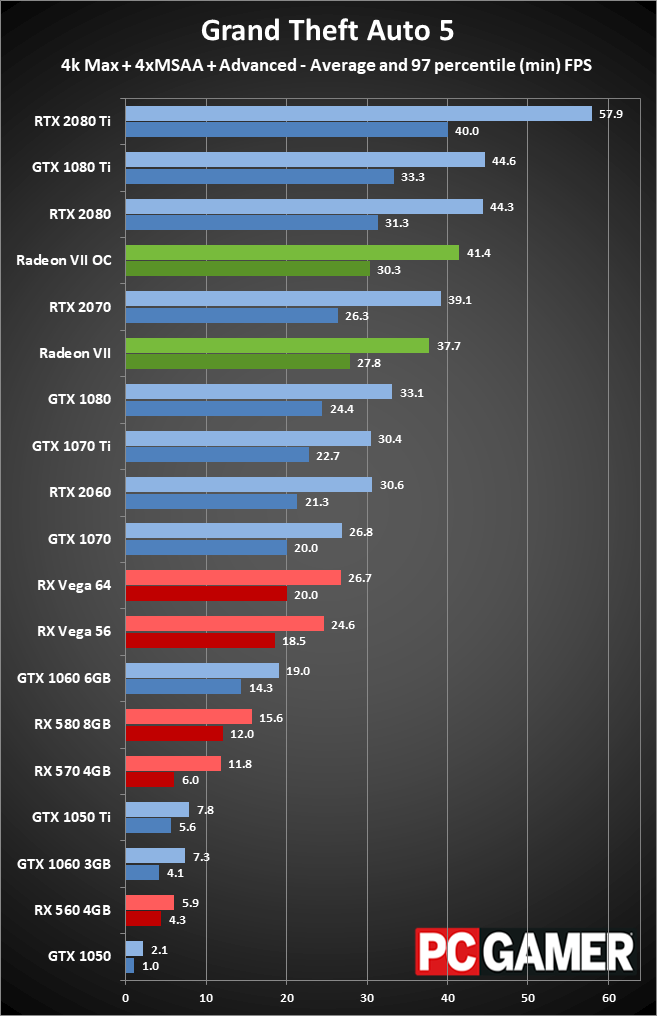

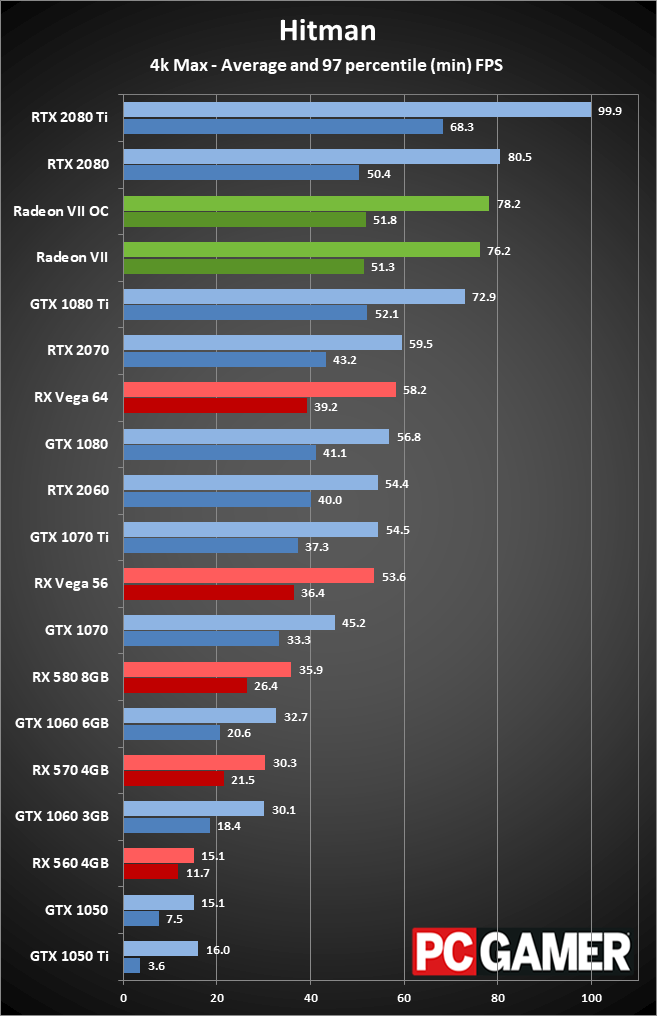

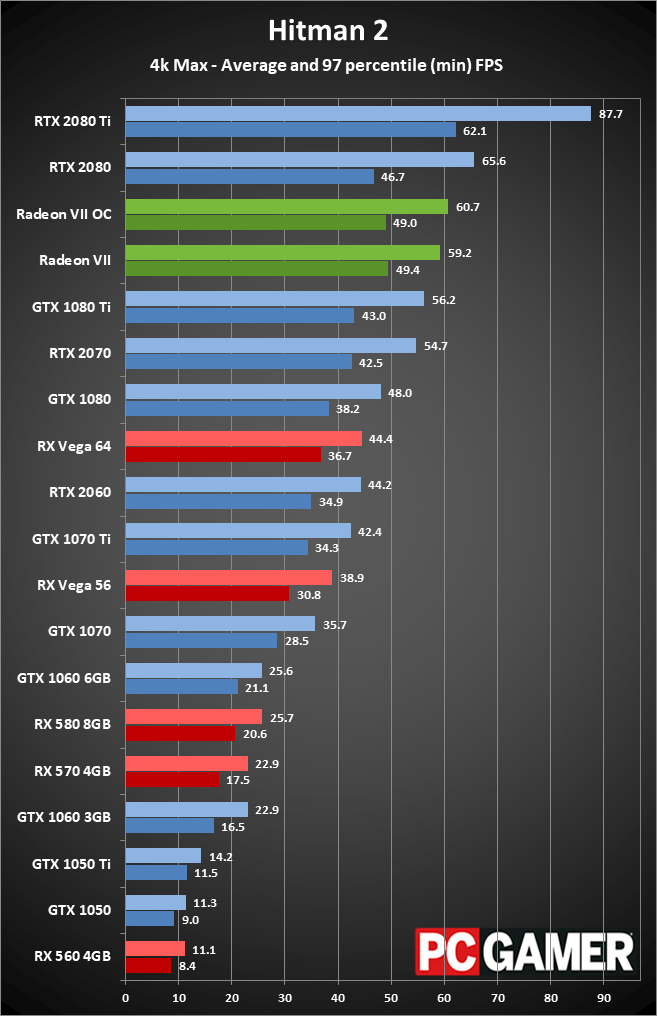

For this review, I'm trimming the fat and looking at 17 games tested on 19 graphics cards, at four setting/resolution combinations per game. (If you want to see results from older GPUs like the GTX 900 series and AMD's R9 cards, check out the GeForce RTX 2060 review.) Testing is conducted at 1080p 'medium' quality, along with 'ultra' quality at 1080p, 1440p, and 4k. In most games the ultra preset maxes out all the options, while games like Hitman and GTA5 don't include presets. For those, I've enabled all the extras (eg, GTA5's advanced graphics menu, other than superscaling). Some games punish cards with less than 6GB or even 8GB of VRAM at these settings, but in general the high-end cards like the Radeon VII should be fine.

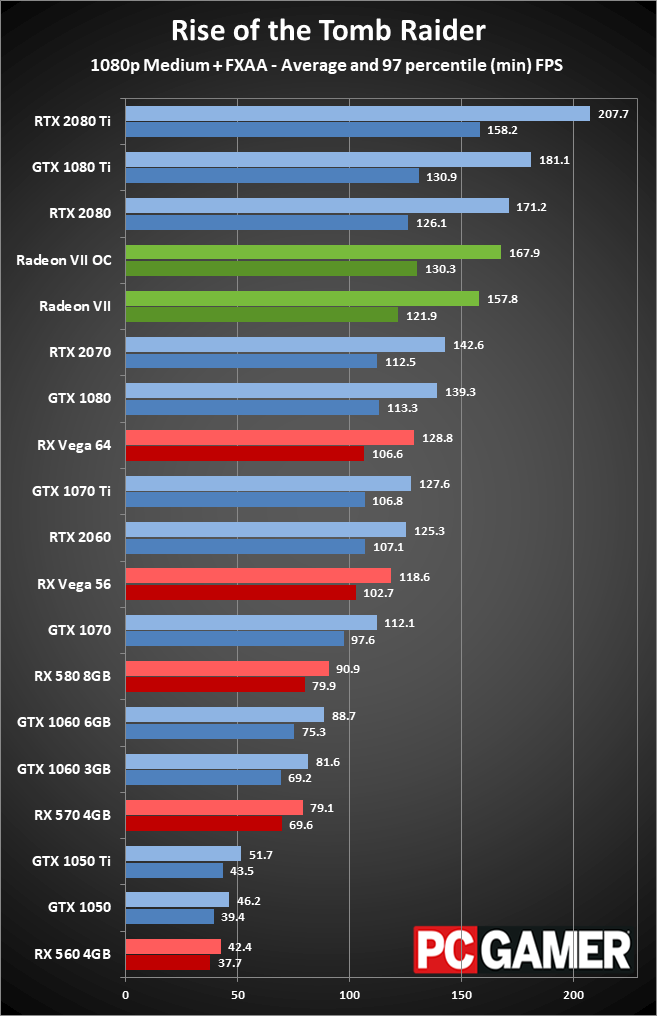

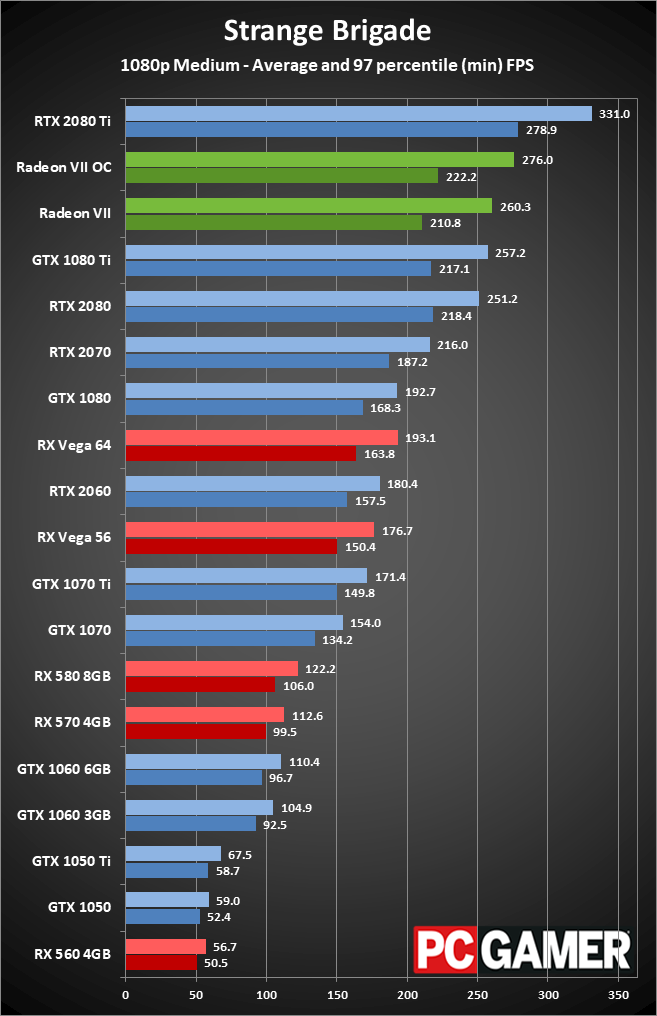

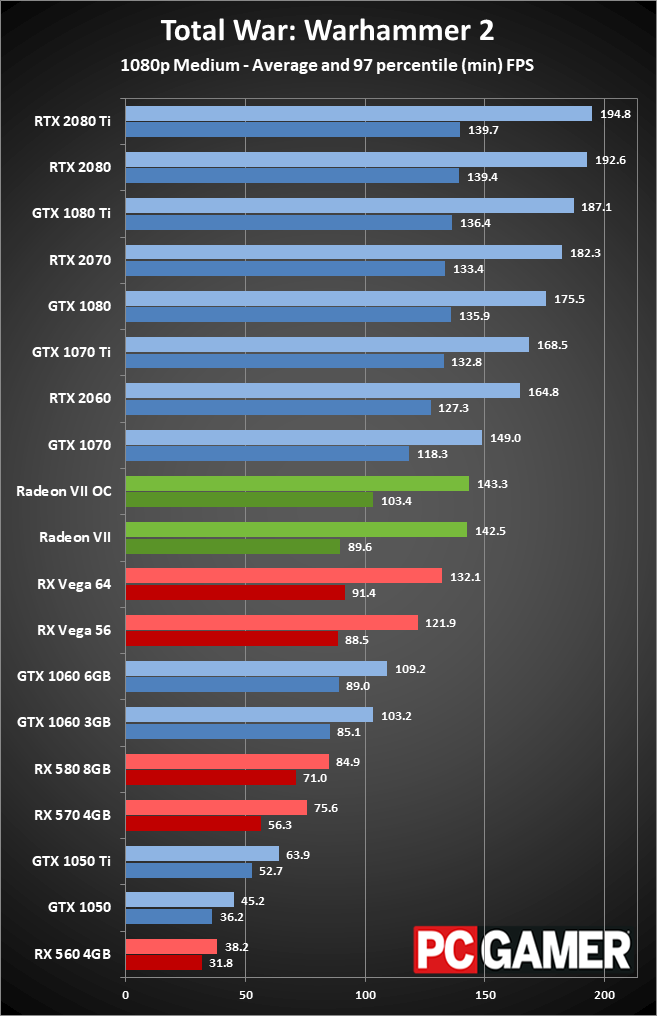

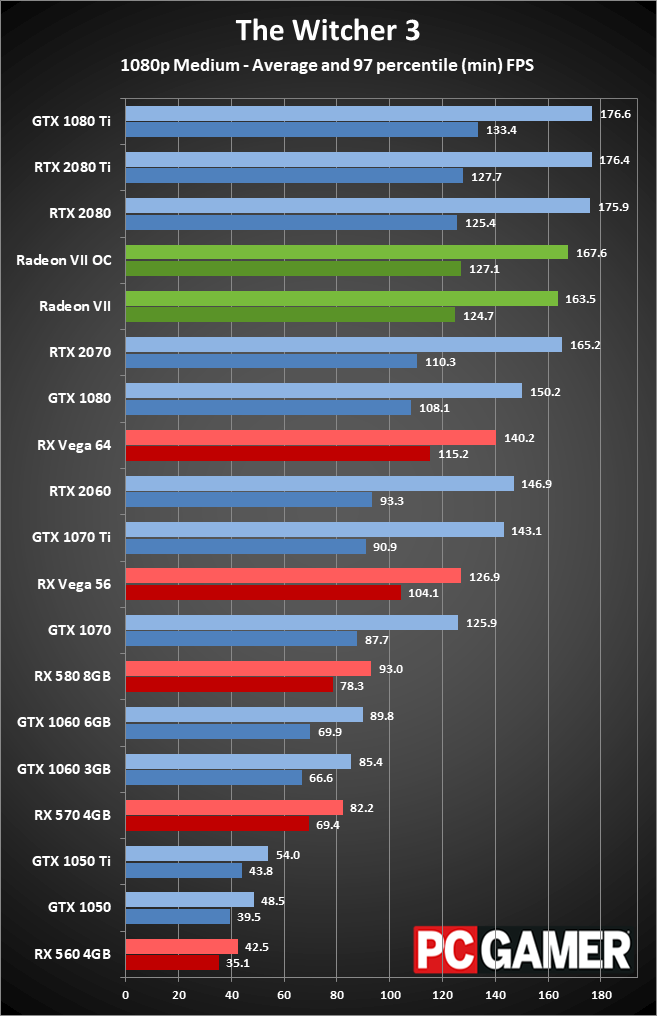

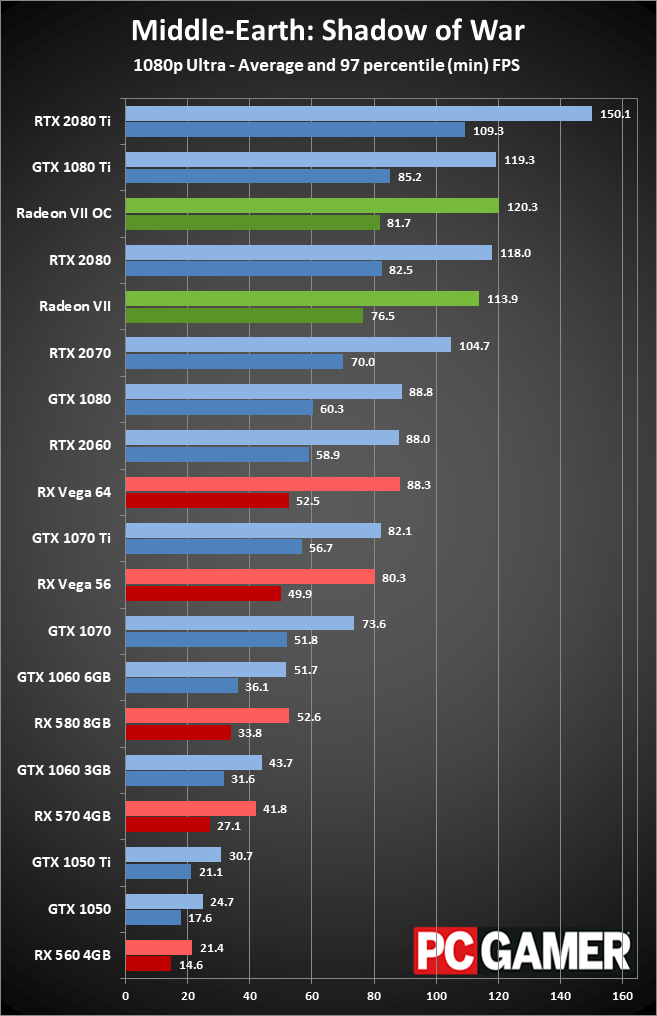

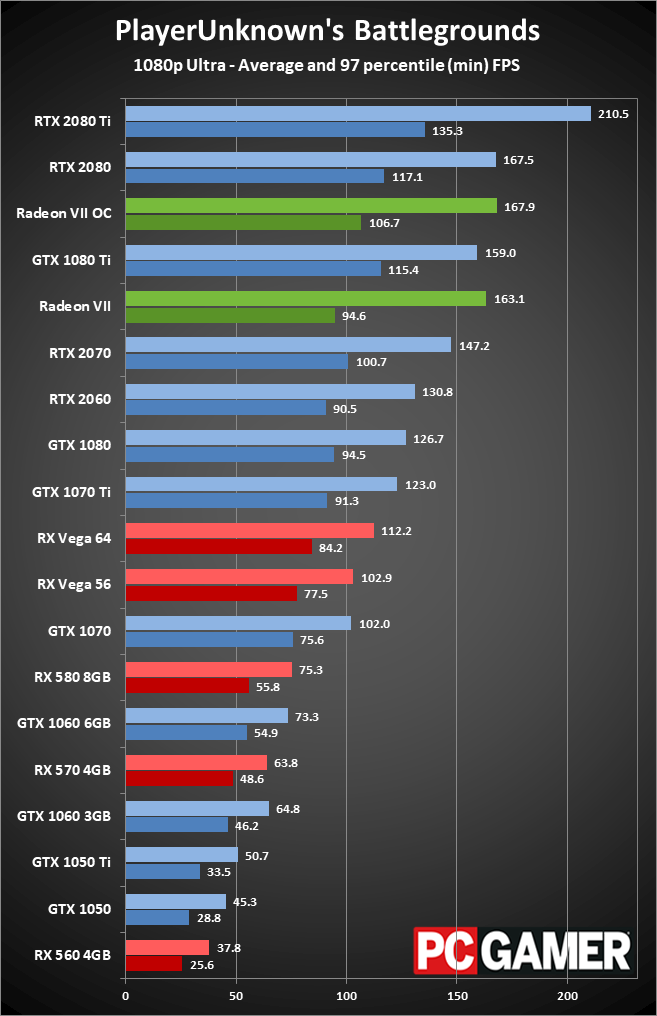

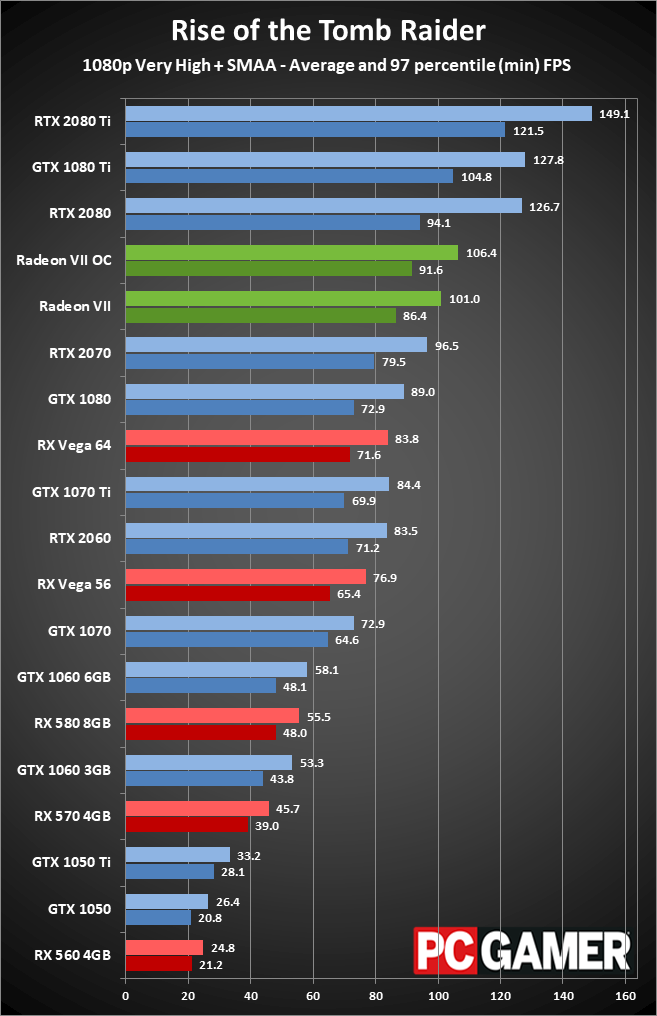

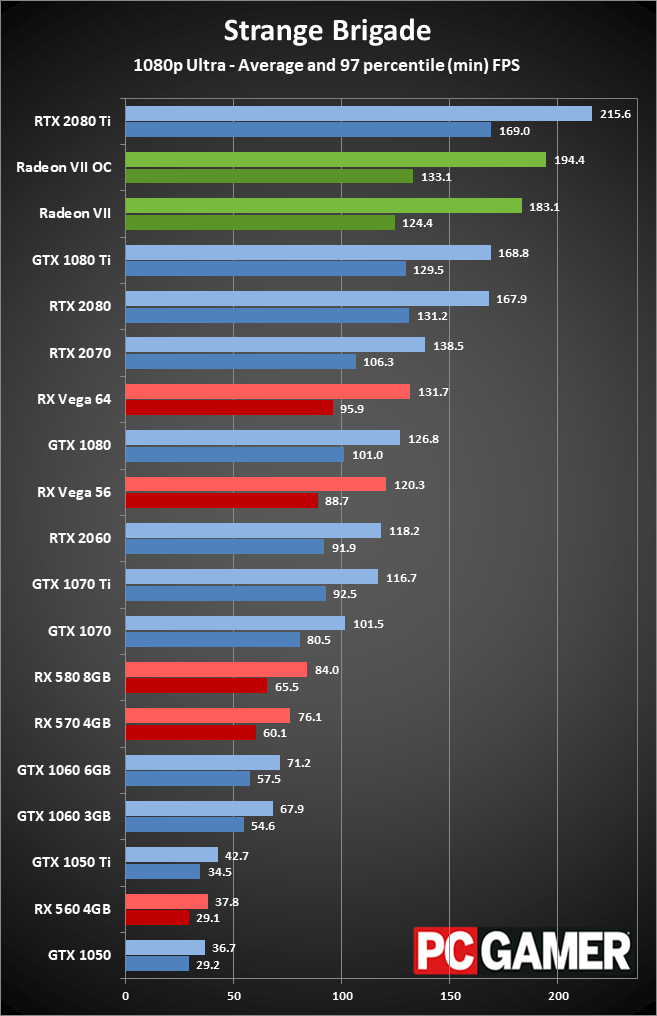

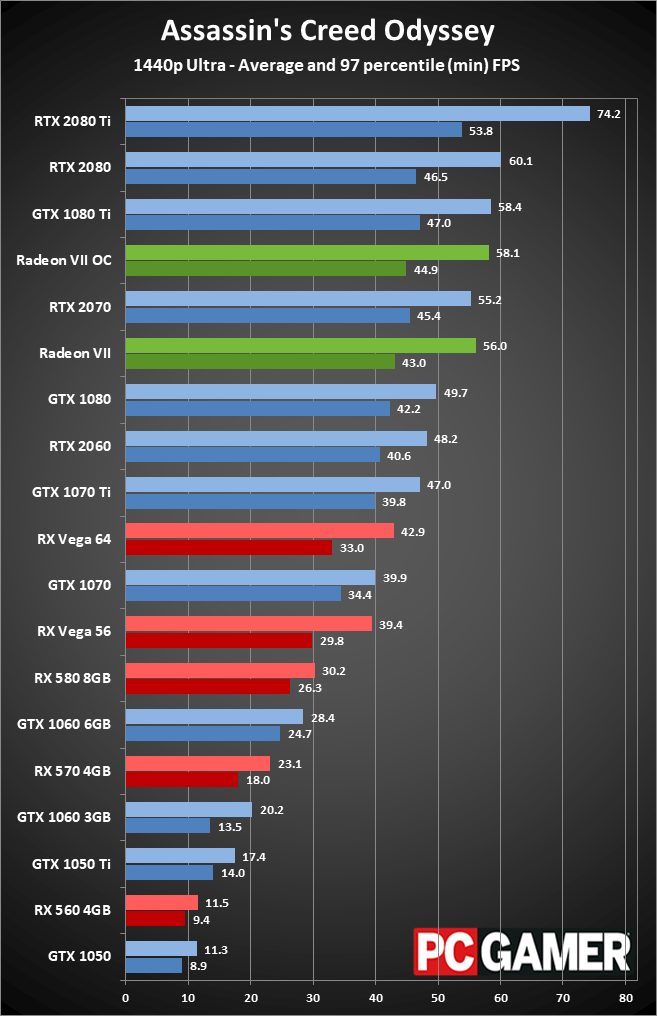

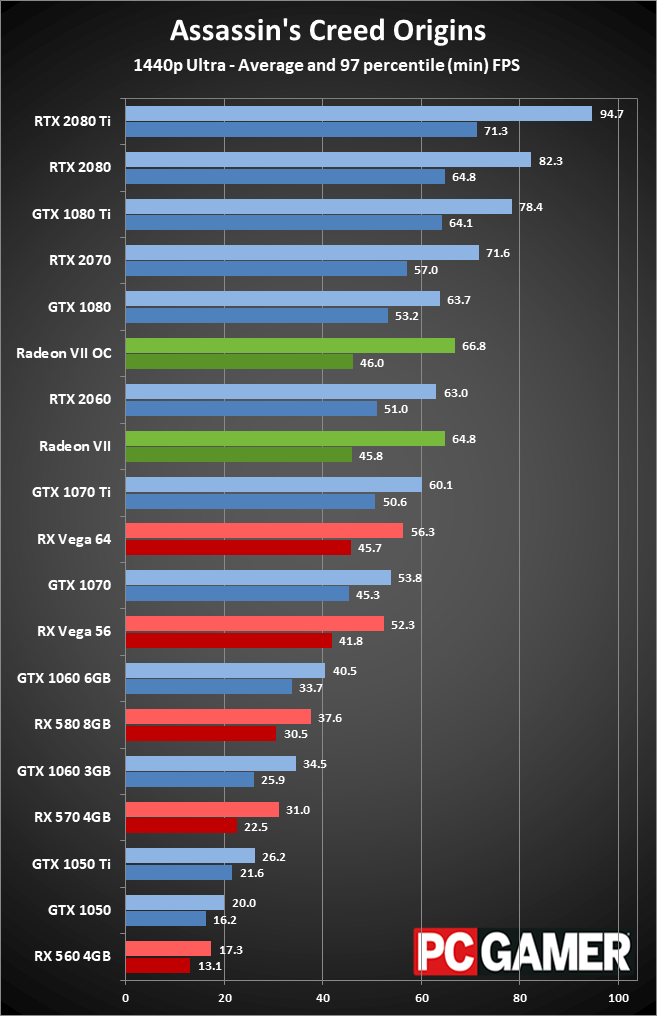

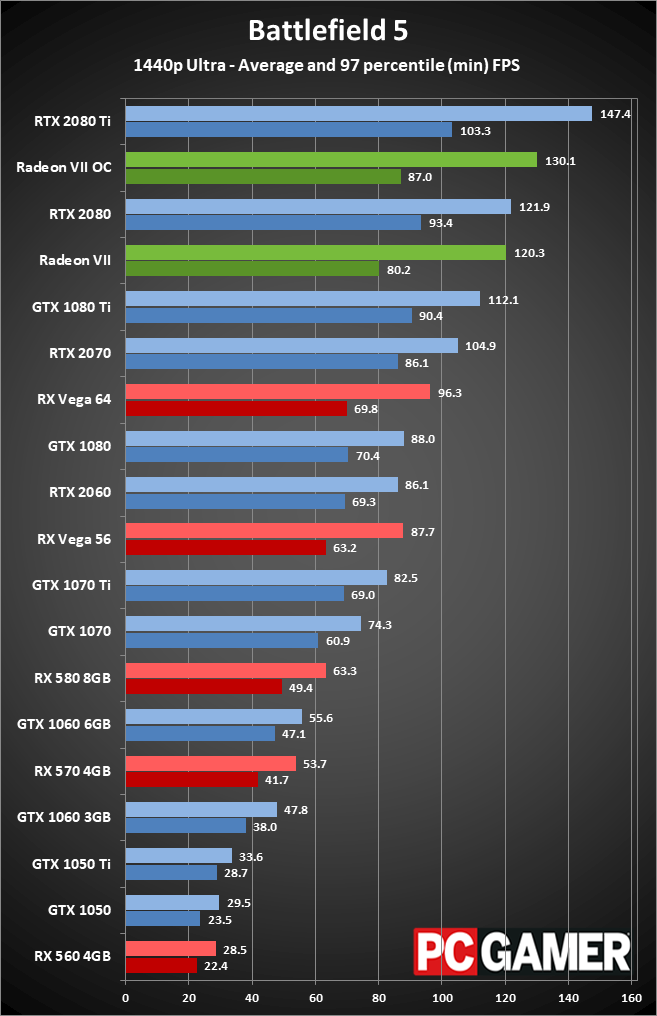

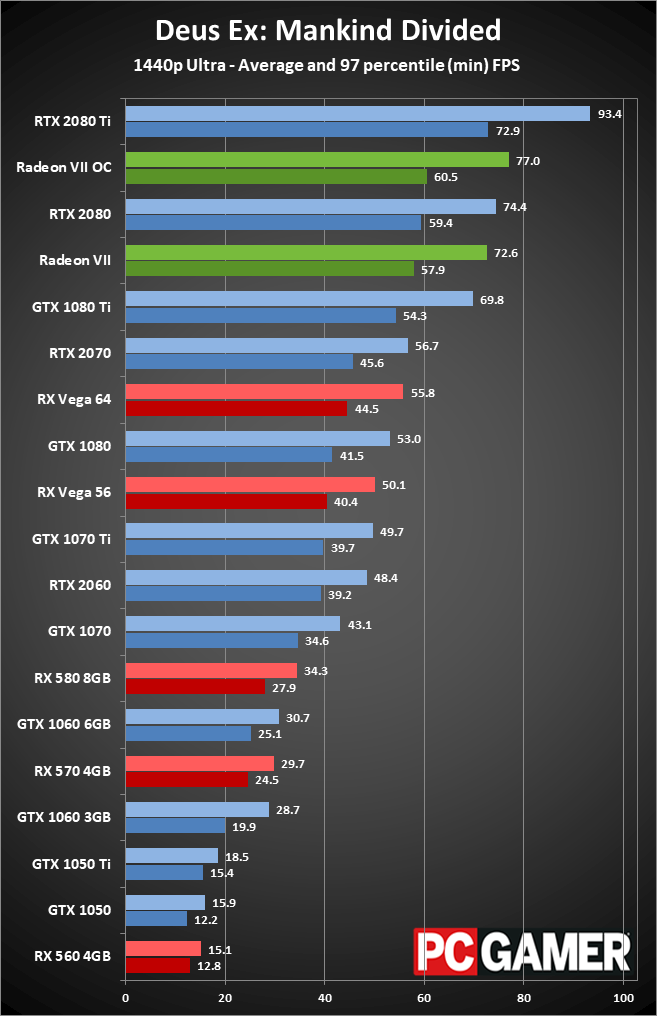

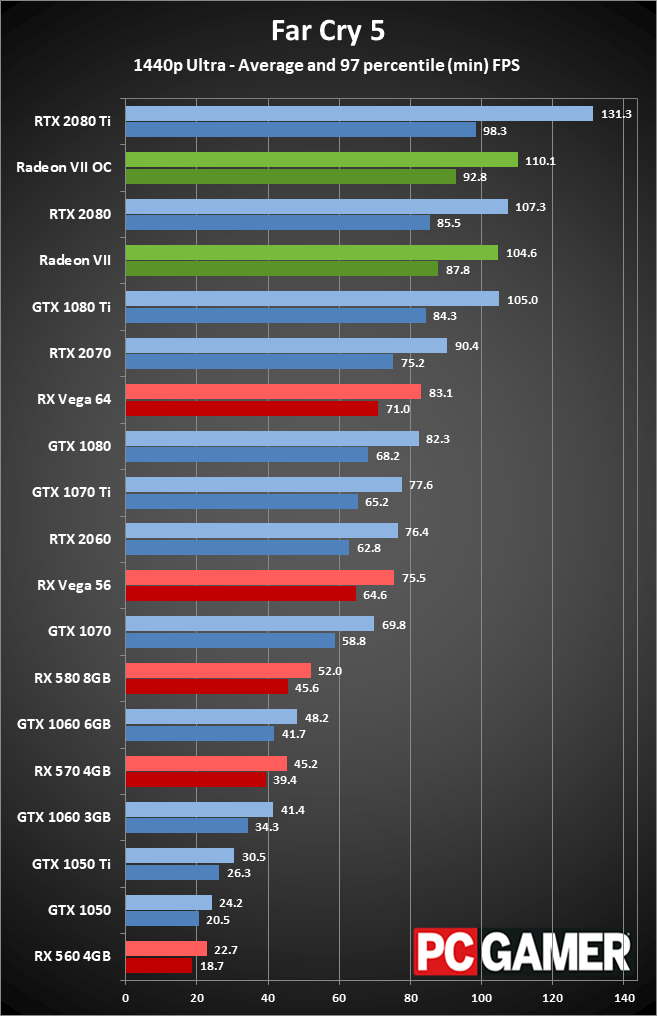

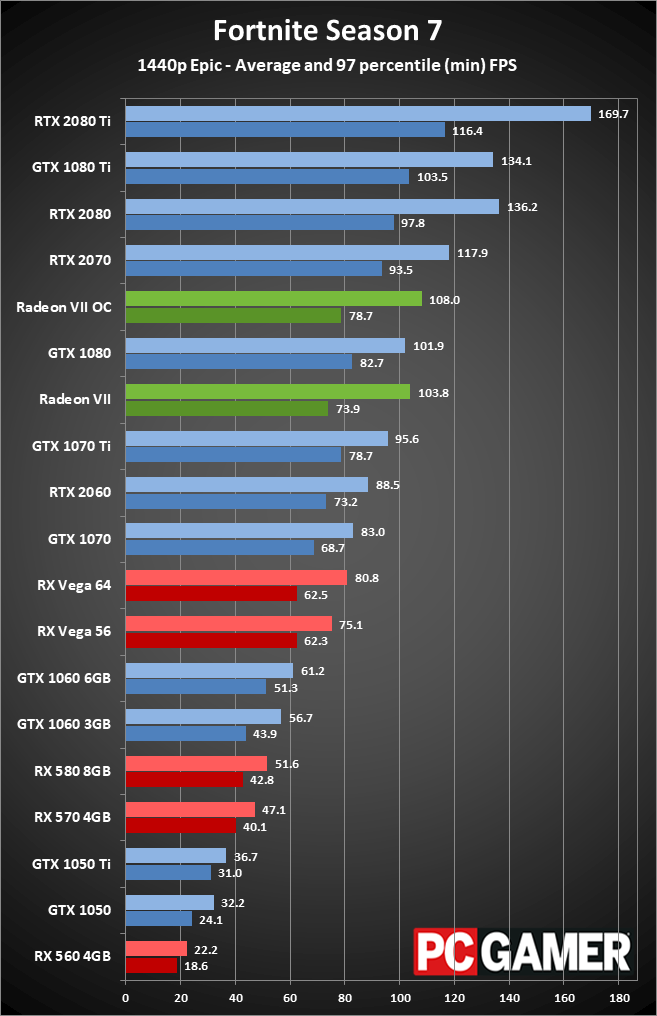

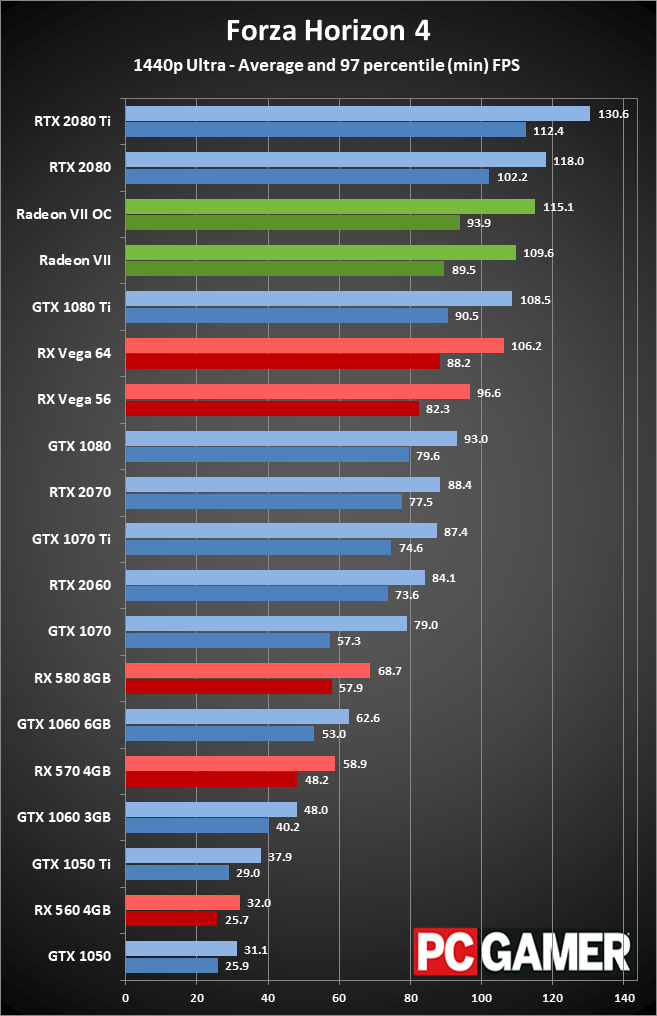

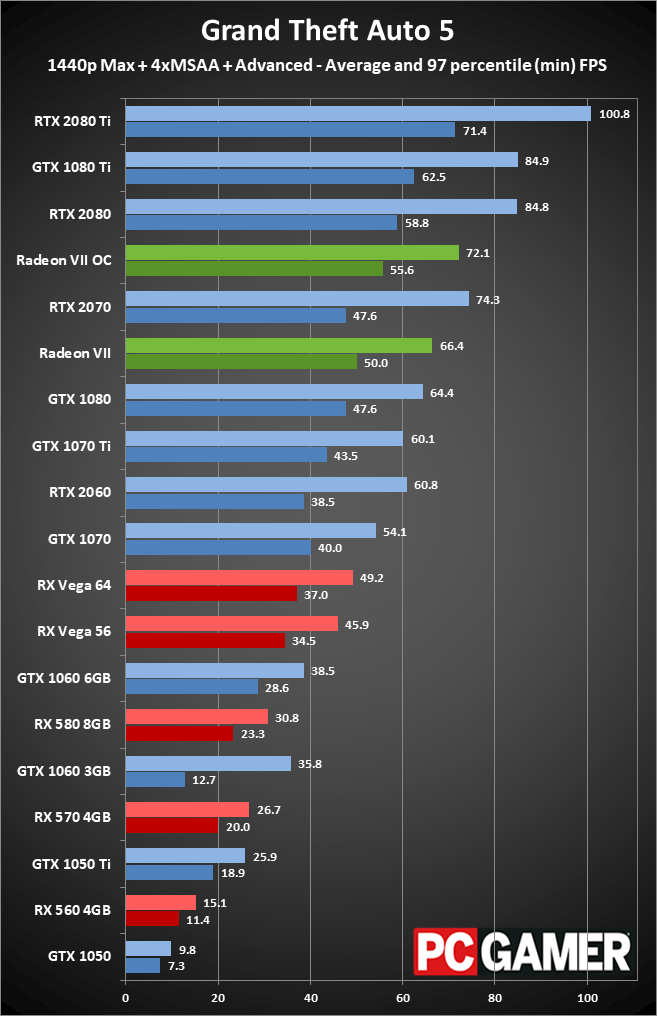

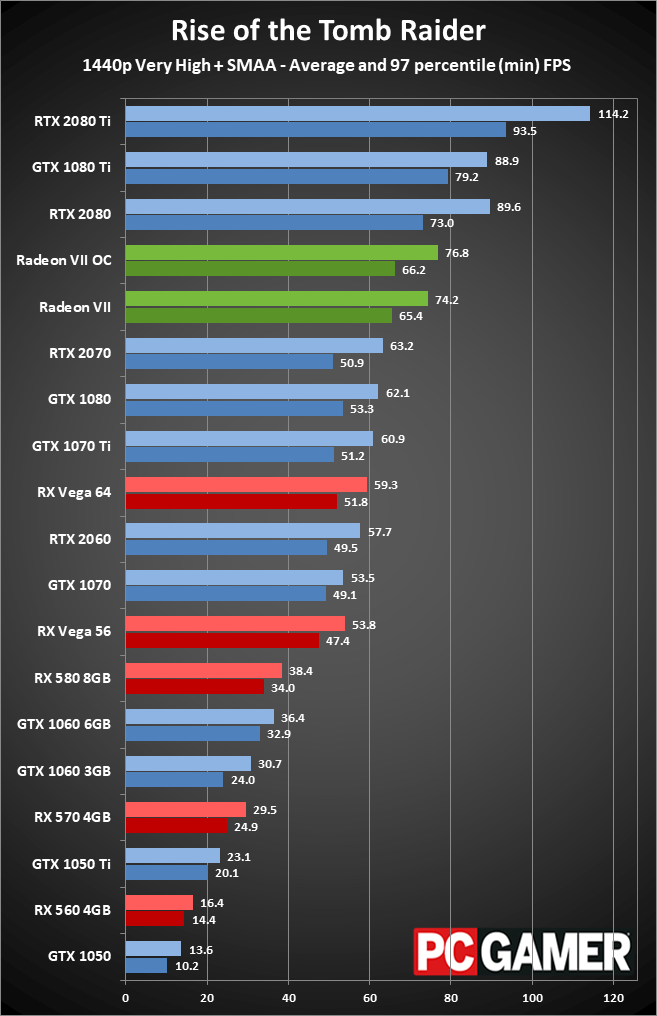

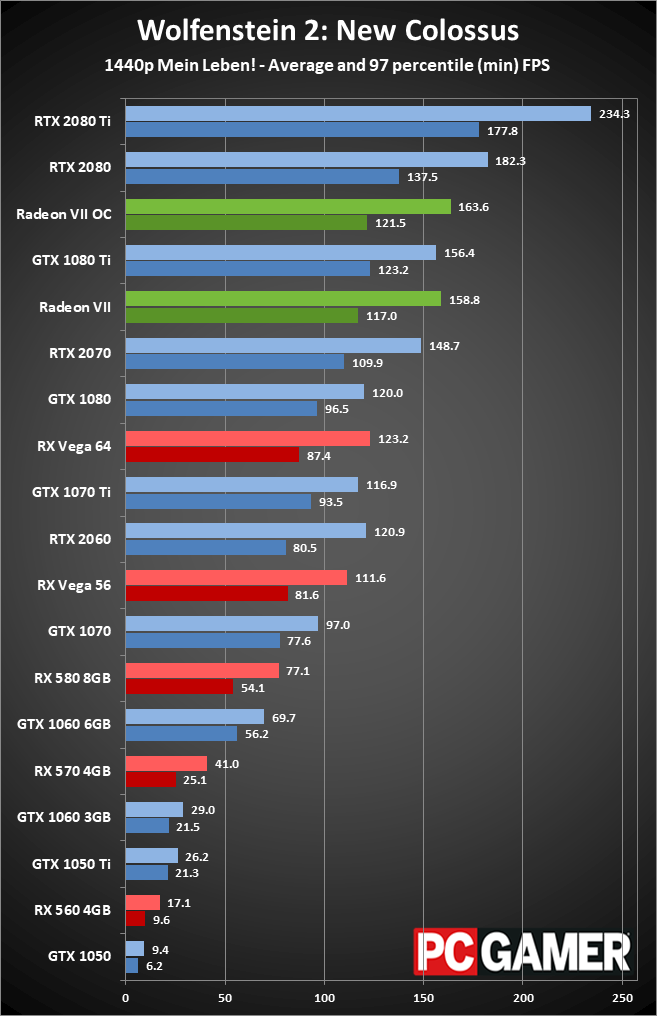

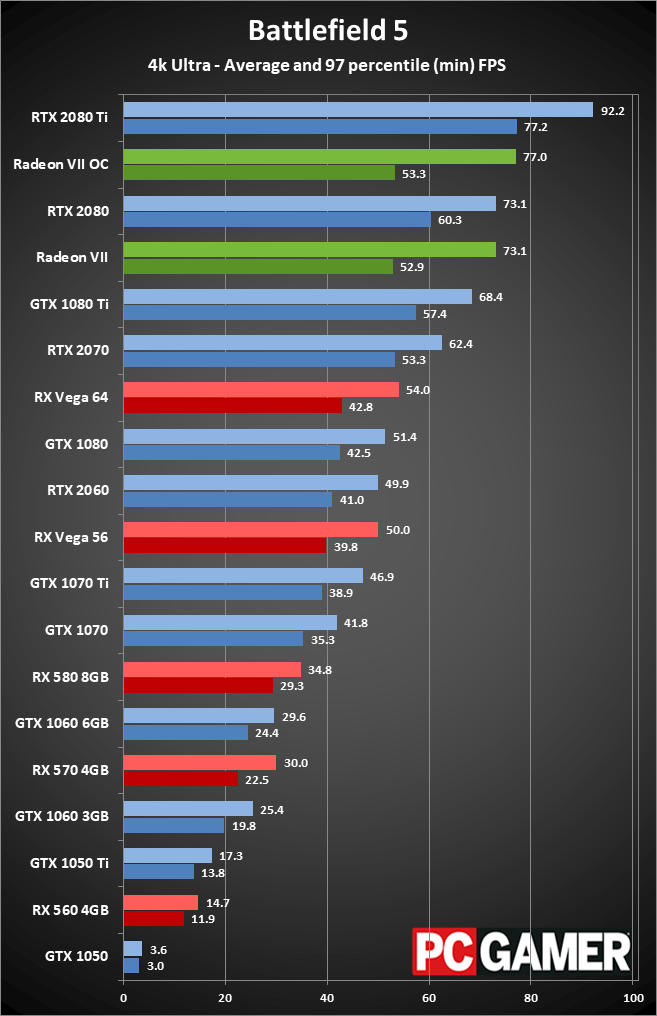

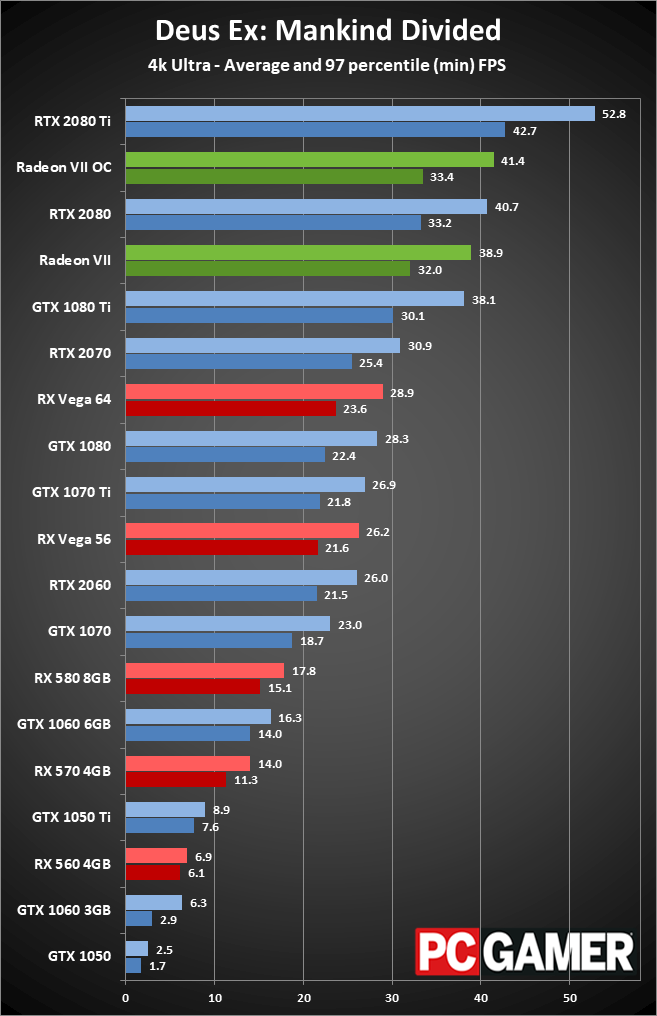

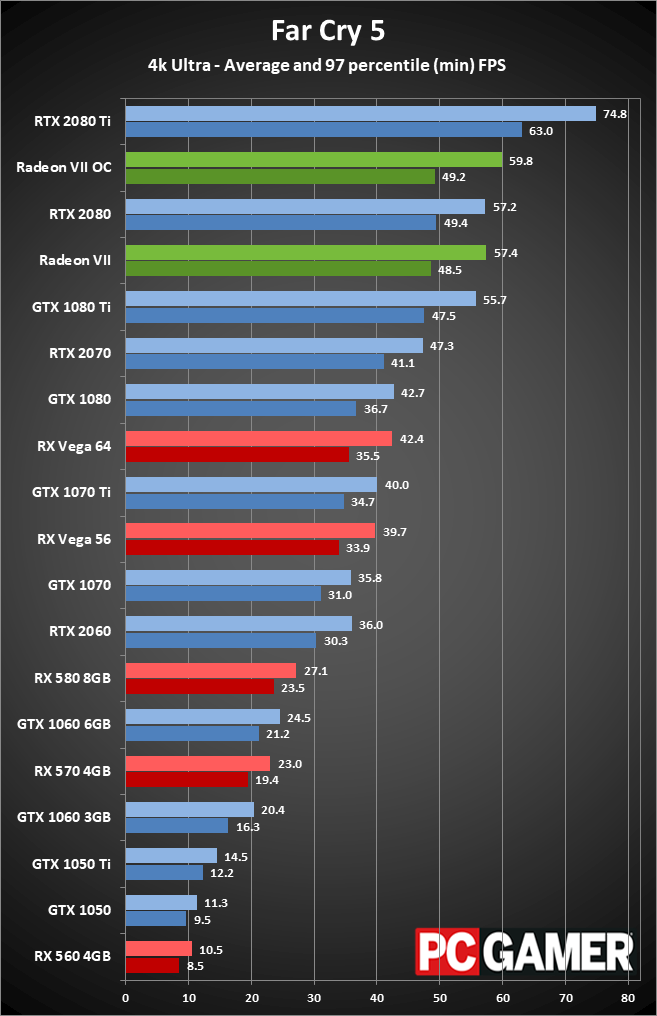

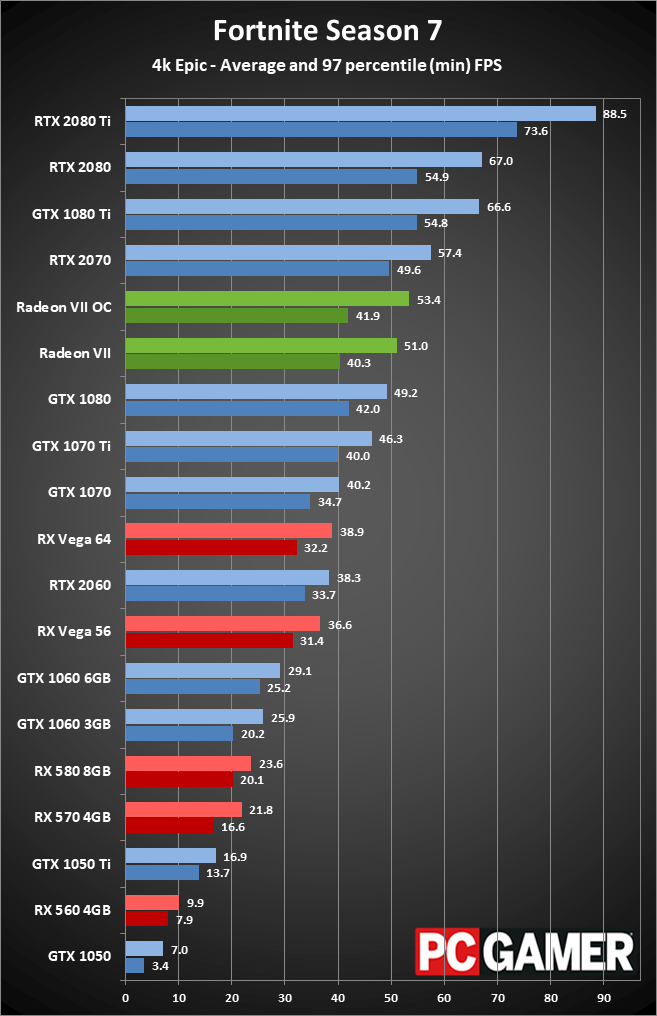

1080p definitely isn't the target resolution for the Radeon VII, so I'm including it more as a point of reference. Plus, some people might be thinking of gaming at 1080p and 144Hz or even 240Hz, which can help if a game can reach higher framerates … but many games don't get anywhere near that level of performance. Above are chart galleries for 1080p medium and ultra, and the CPU and other components generally bottleneck the fastest graphics cards at 1080p.

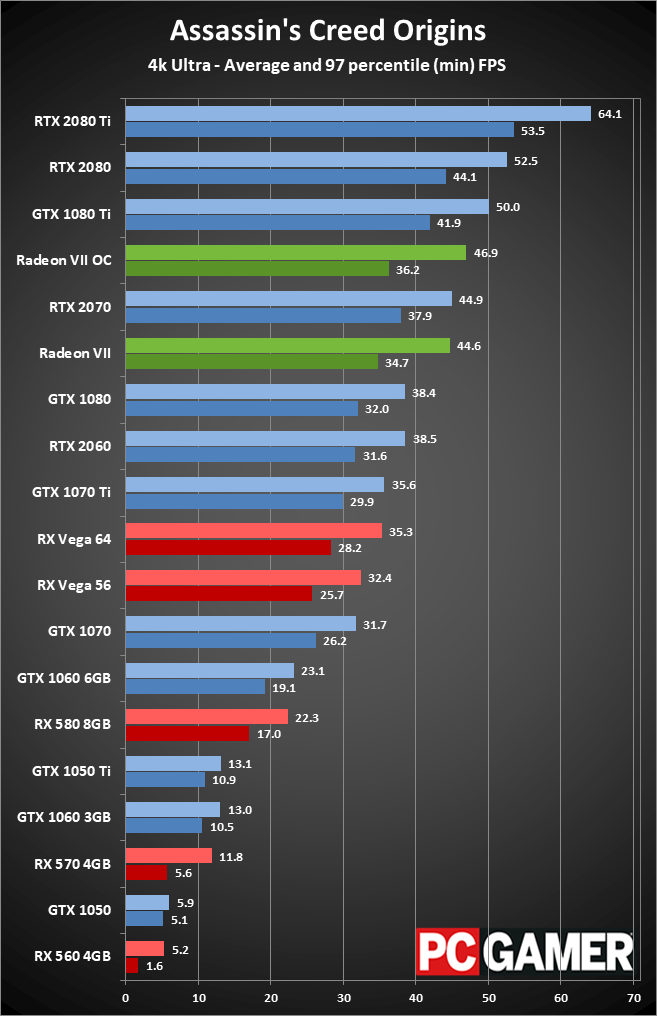

I don't want to spend too much time beating a dead horse, so let's just say that overall Nvidia's RTX 2080 is 13 percent faster at 1080p medium and 11 percent faster at 1080p ultra compared to the Radeon VII. If you look at the individual games, Strange Brigade is the only instance where the Radeon VII leads the 2080 (by 3-8 percent), while Assassin's Creed Origins is on the other end of the spectrum and the 2080 leads by 40-50 percent. The Radeon VII is also 11 percent faster than the Vega 64 at 1080p medium, and 19 percent faster at 1080p ultra, but the GTX 1080 Ti still beats it by almost 10 percent.

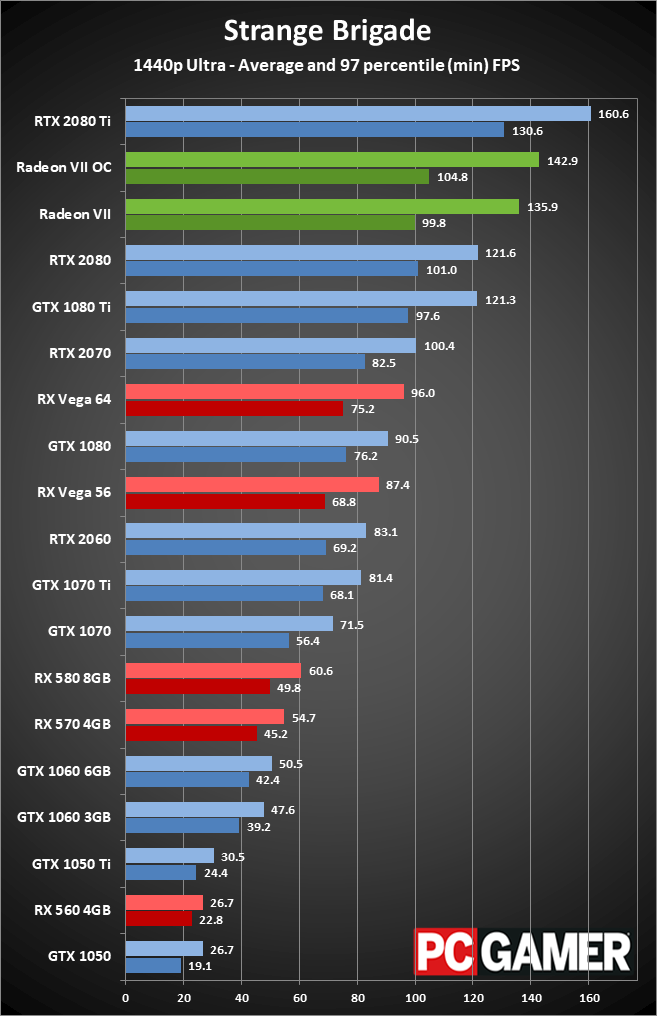

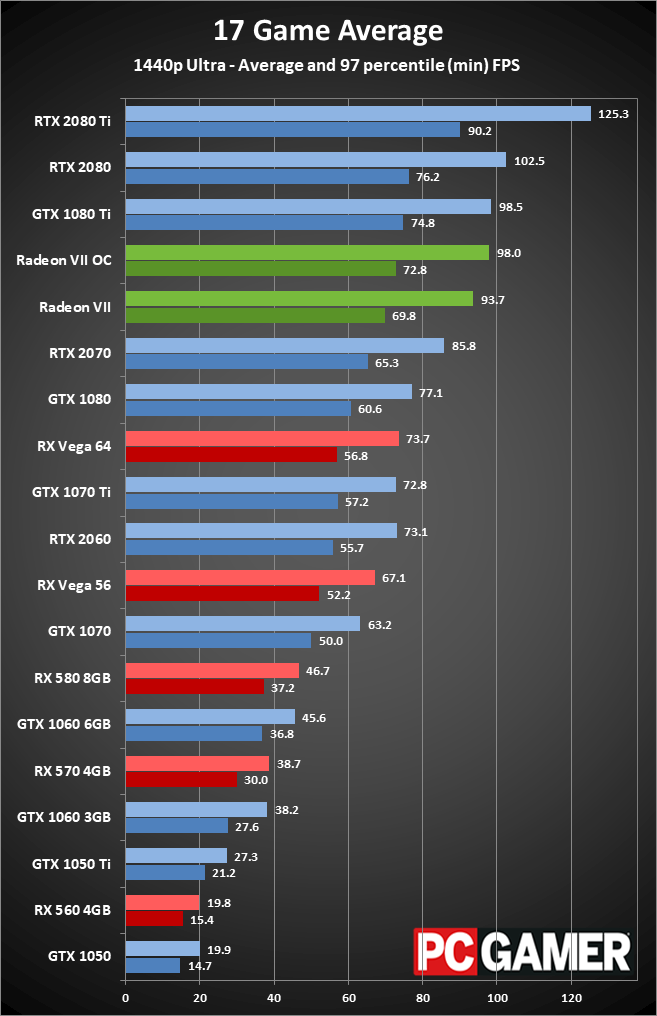

1440p ultra is arguably the best resolution for the Radeon VII, because 4k ultra at 60fps is still a bit of a stretch. Every game except Odyssey runs at more than 60fps, with the average sitting at nearly 100fps. Tweak a few settings and smooth 60-144 fps gaming on a 144Hz 1440p FreeSync display is definitely within reach. In something of an ironic twist, AMD's talking point of Radeon plus FreeSync being a better value than GeForce plus G-Sync is no longer as much of a factor, since Nvidia finally capitulated and has started to support adaptive refresh on FreeSync displays. You'll want a 144Hz display for best results from what we've seen, but that's okay since 1440p 144Hz (or 165Hz) displays top our list of the best gaming monitors.

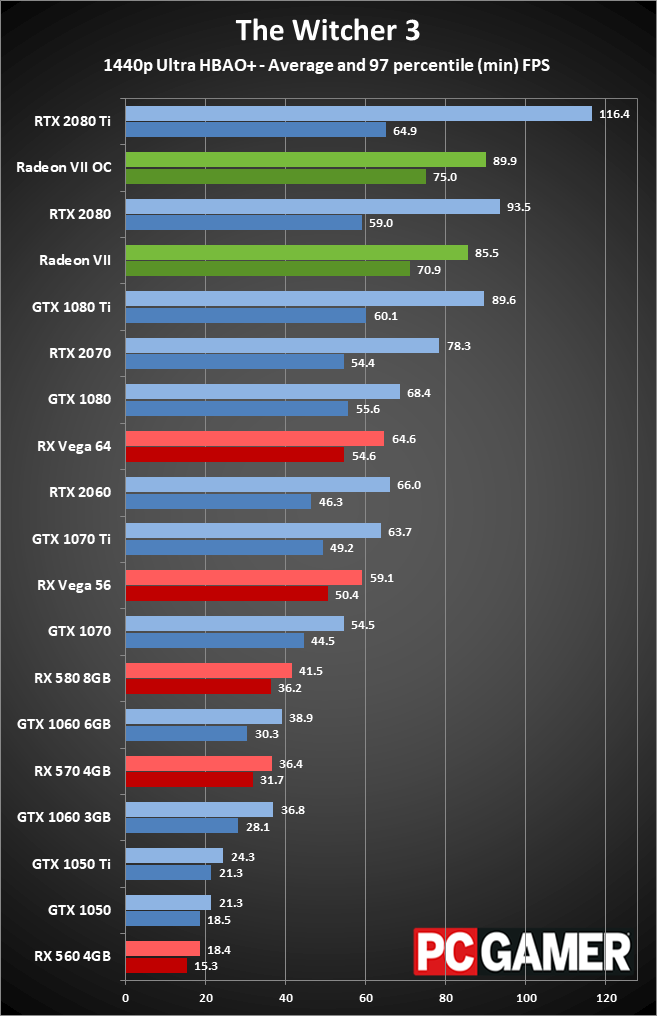

Looking at performance, things improve a bit from 1080p, with the Radeon VII now beating the Vega 64 by 27 percent on average, while the GTX 1080 Ti hangs on to a 5 percent lead. The RTX 2080 still maintains a 10 percent lead, however, and considering the added features and lower power requirements, it's a much easier recommendation at the $700 price point. You could argue the 16GB HBM2 might prove beneficial, but in current games it's only edge cases where having more than 8GB really matters. It doesn't really show up in any of the testing I've done (though Witcher 3 minimum fps is certainly improved). But overall, even minimum fps is about the same margin of victory/loss as average frame rates.

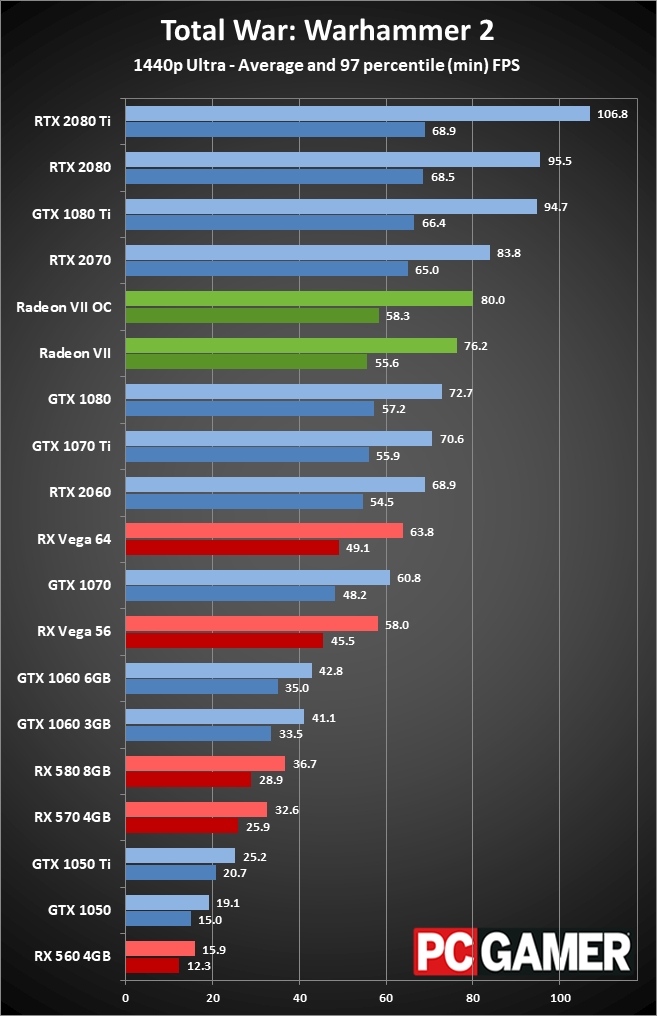

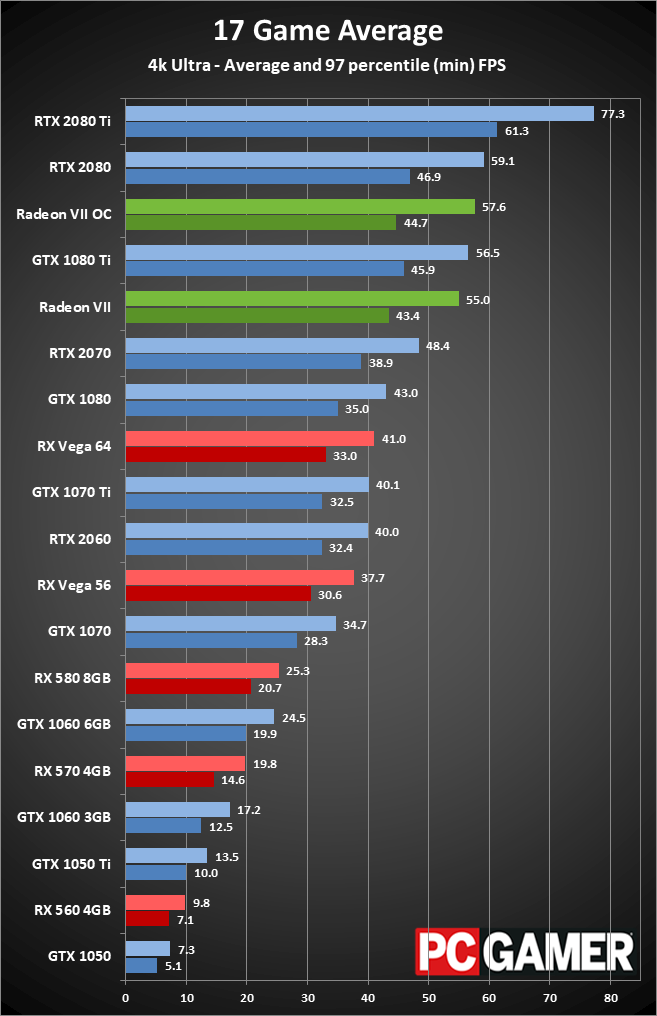

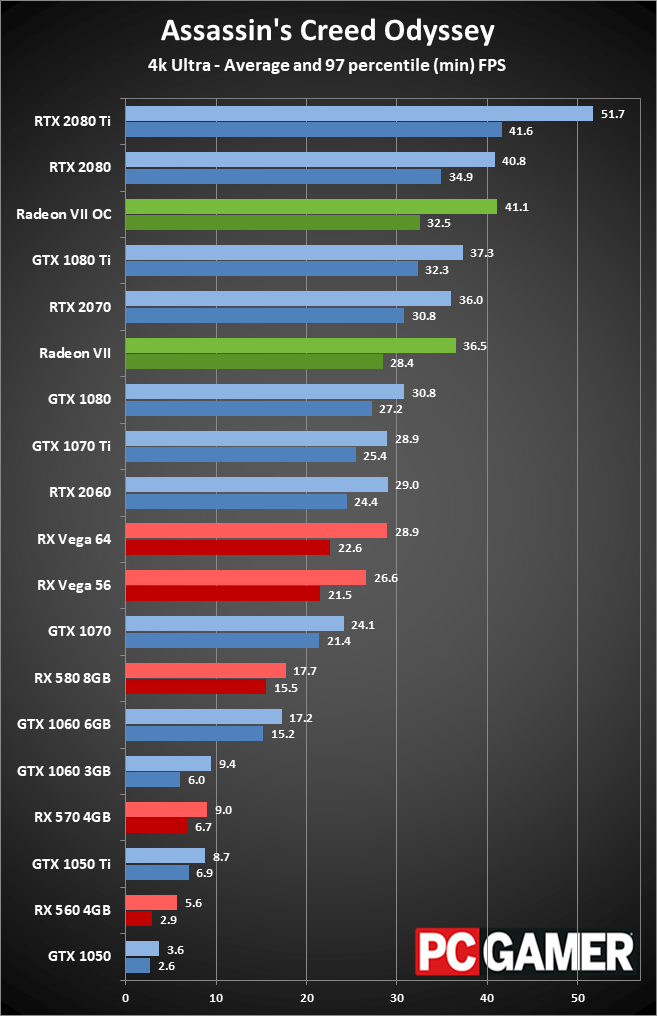

4k is ostensibly the target for the Radeon VII, given the massive amount of VRAM and bandwidth, but while it does better relative to other GPUs here—it's now 34 percent faster than the Vega 64, the 1080 Ti lead shrinks to just 3 percent (basically tied), and the RTX 2080 is now only 8 percent faster—that comes at the price of framerates that are no longer quite so silky smooth.

Only six of the 17 games average 60fps or more, and only three games keep minimum fps above 60. You can of course drop some of the settings down a notch or two to boost framerates, but that also reduces the demand for memory bandwidth and other resources. 4k at medium quality often ends up being similar in performance to 1440p at ultra quality—just with a higher pixel count.

I could see 8k further narrowing the margin of victory, perhaps even giving the Radeon VII a lead over the RTX 2080, but not many people play games at 4k, and 8k is so bleeding edge that it's not a consideration.

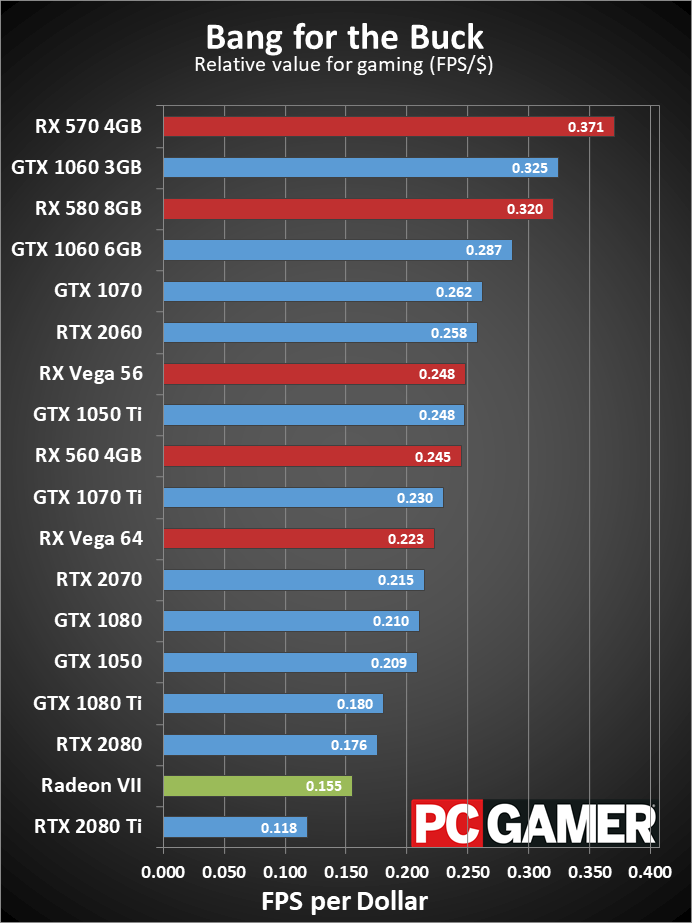

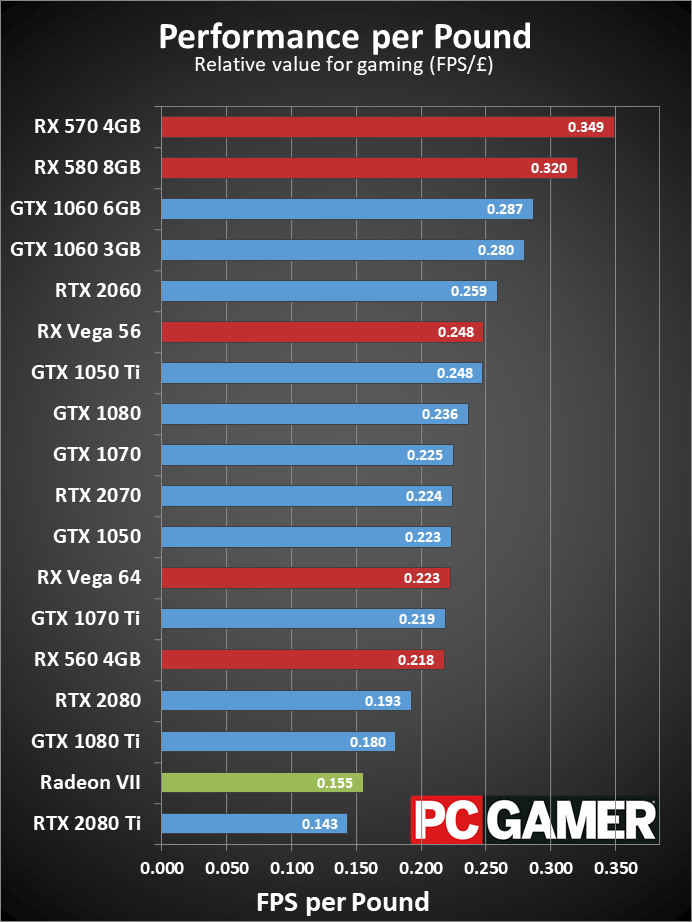

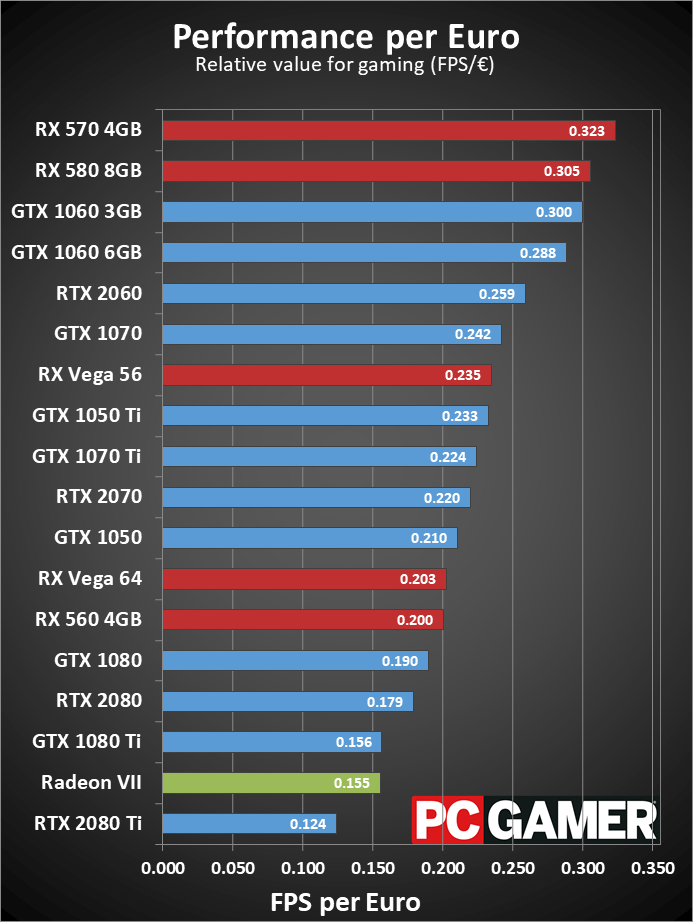

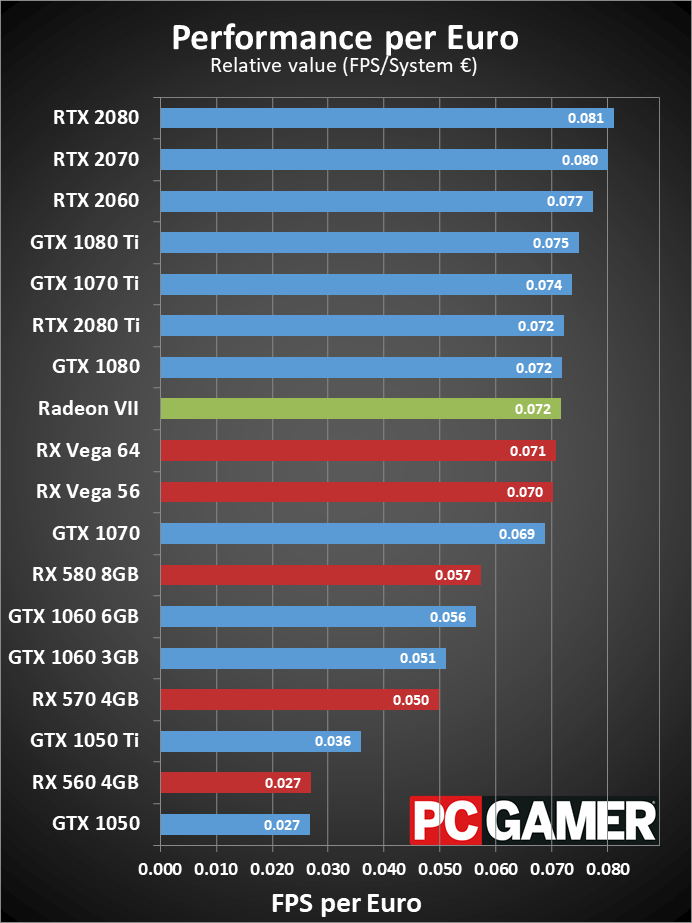

Wrapping things up, let's quickly talk value and where the Radeon VII sits in terms of the market. This is the most expensive single-GPU solution AMD has ever released for the consumer market (so, not counting things like the R9 295X2 or HD 7990)—it matches the price of the outgoing RX Vega 64 Liquid. Being more expensive doesn't inherently mean it's a worse value, but the Radeon VII is in a bit of a tough spot. It's up to 35 percent faster than the RX Vega 64, and it's the fastest AMD GPU currently available, but it also costs 75 percent more than a Vega 64. In that sense, it's like the RX 590 launch: faster and better than the preceding cards as far as performance goes, but of worse value once you factor in price.
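
The value argument above boils down to dividing the performance ratio by the price ratio. Here's a minimal sketch using the best-case figures from the paragraph; the variable names are purely illustrative.

```python
# Best-case figures from the text: up to 35 percent faster, 75 percent more expensive.
perf_ratio = 1.35
price_ratio = 1.75

# Performance per dollar relative to the Vega 64 (1.0 would mean equal value).
relative_value = perf_ratio / price_ratio
value_loss_pct = (1 - relative_value) * 100

print(f"Relative performance per dollar: {relative_value:.2f}")
print(f"That's roughly {value_loss_pct:.0f}% less performance per dollar than a Vega 64")
```

Since 35 percent is the upper bound, the typical result would be somewhat worse than this.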

What that means in overall value is that if you're buying a graphics card today, the only consumer GPU that's a worse buy would be the GeForce RTX 2080 Ti—or the Titan RTX if you want to include that. But GPUs don't exist in a vacuum, and if you're wanting to stick with an AMD GPU and you want something faster than a Vega 64, you don't really have any other good options. (Please don't say CrossFire Vega 56—multi-GPU is too much of a headache these days to warrant recommending it.)

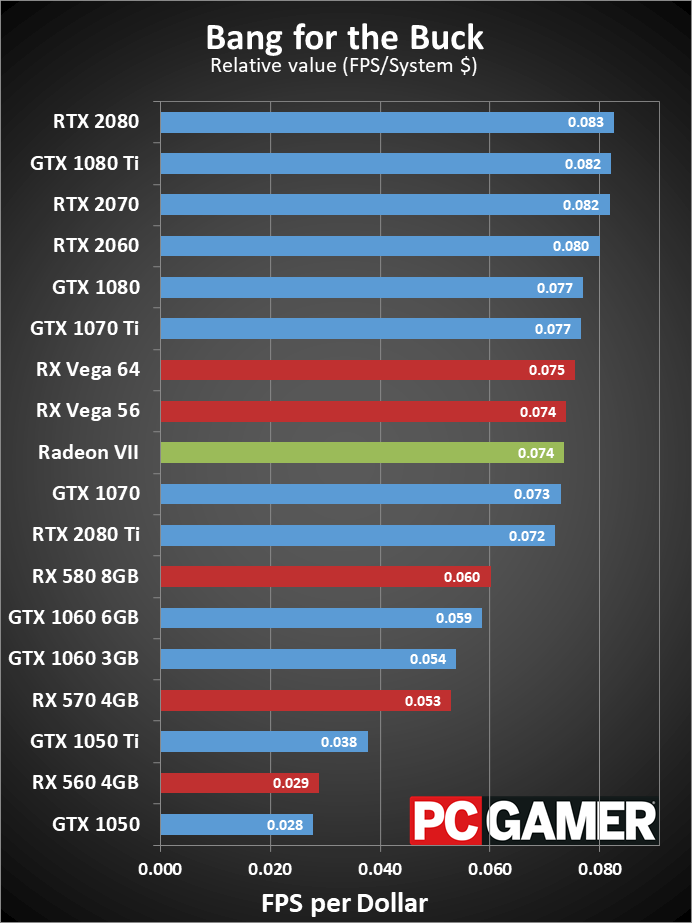

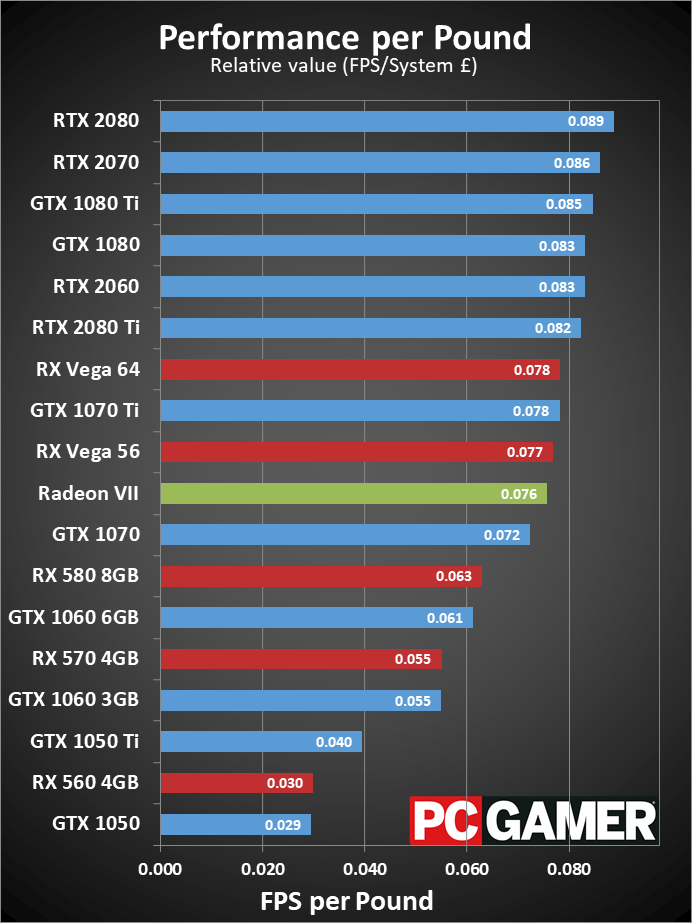

If you're looking at a complete PC upgrade, things aren't quite as bleak. Using a high-end build as the base price (around $800, not including the GPU), the Radeon VII is basically tied with the Vega cards. It will cost more, but taking the total system price from $1,200 to $1,500 means gaming performance scales almost directly with cost.
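
The whole-system view can be sketched the same way. The $400 Vega 64 price here is an assumption inferred from the $1,200 system total, and the performance ratios are the 1440p and 4k averages quoted earlier in this review.

```python
BASE_SYSTEM = 800  # rest of the build, not including the GPU (from the text)
# Vega 64 price is inferred from the $1,200 total; Radeon VII is the $700 launch price.
GPU_PRICE = {"Vega 64": 400, "Radeon VII": 700}

def total_cost(gpu: str) -> int:
    """Total system price with the given GPU installed."""
    return BASE_SYSTEM + GPU_PRICE[gpu]

cost_ratio = total_cost("Radeon VII") / total_cost("Vega 64")

# Radeon VII performance relative to Vega 64, per the averages reported above.
perf_ratios = {"1440p ultra": 1.27, "4k ultra": 1.34}
system_value = {label: perf / cost_ratio for label, perf in perf_ratios.items()}

print(f"System cost ratio: {cost_ratio:.2f}")
for label, value in system_value.items():
    print(f"{label}: {value:.2f}x performance per system dollar vs. Vega 64")
```

Values close to 1.0 are what "basically tied" looks like: the extra 25 percent in system cost buys roughly that much more performance.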

AMD Radeon VII: a decent card that's both more and less than its competition

Those hoping for a GeForce killer will unfortunately have to keep waiting: the Radeon VII isn't it. There may be a few specific areas where it's the better choice, mostly for content creation, but for gaming at least it routinely falls behind the RTX 2080—and that's without factoring in ray tracing or DLSS. It's better to think of the Radeon VII as an alternative to the GTX 1080 Ti, since it comes with a similar feature set (ie, no hardware DXR support), but that just emphasizes the point that AMD's GPU division is currently about two years behind Nvidia, even with a big process node advantage. What will happen when Nvidia inevitably releases a 7nm GPU?

The more interesting question is where AMD goes from here. Stuffing 16GB of HBM2 onto a consumer graphics card is impressive, as Nvidia reserves such things for ultra-expensive Tesla and Quadro cards, but you can certainly end up with too much of a good thing. The Radeon VII has twice the memory and over twice the memory bandwidth of the RTX 2080, but I'm not sure it needs it. Just like there's not much point in slapping 8GB of VRAM on a budget graphics card, there's not a pressing need for 16GB of VRAM on a high-end gaming card. Content creation is a different story, where the memory could make more sense.

Those who want to boycott Nvidia while still building a high-end gaming setup may find the Radeon VII a worthwhile alternative, but if you're in that camp thinking about splurging on a $700 graphics card, you probably already own a Vega GPU. I think the better hope is to wait for Navi—currently rumored to ship at the end of the year. Then again, we've heard that before: "Wait for the Fury X! Wait for Polaris! Wait for Vega! Wait for …" Waiting isn't much fun, though it can save you money at least. Or you can think of the Radeon VII as a $550 card, and you just happen to be buying $150 in games with it.

Despite taking different paths, it feels like AMD has ended up in the same place as Nvidia. The fervor of the crypto miners may have died down, but they've left a legacy of painfully high GPU prices. A price drop would go a long way toward rectifying things, but whether or when that might happen is anyone's guess. With 16GB HBM2, this certainly isn't a cheap card to manufacture. Unless you simply must have the fastest AMD graphics card currently available, this looks like a good generation of GPUs to skip. It's not that the Radeon VII is bad, but it's not enough of a jump in features or performance to warrant the cost of an upgrade.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.