The most demanding PC games right now

These PC games will push your hardware to the limit, and sometimes beyond.

For as long as I can remember, games have pushed computer hardware along a steady climb of improved performance and higher system requirements. Today, few things can push your PC to its breaking point (and sometimes beyond!) like games. We've previously looked at what optimization in games means, which is a great primer for this discussion.

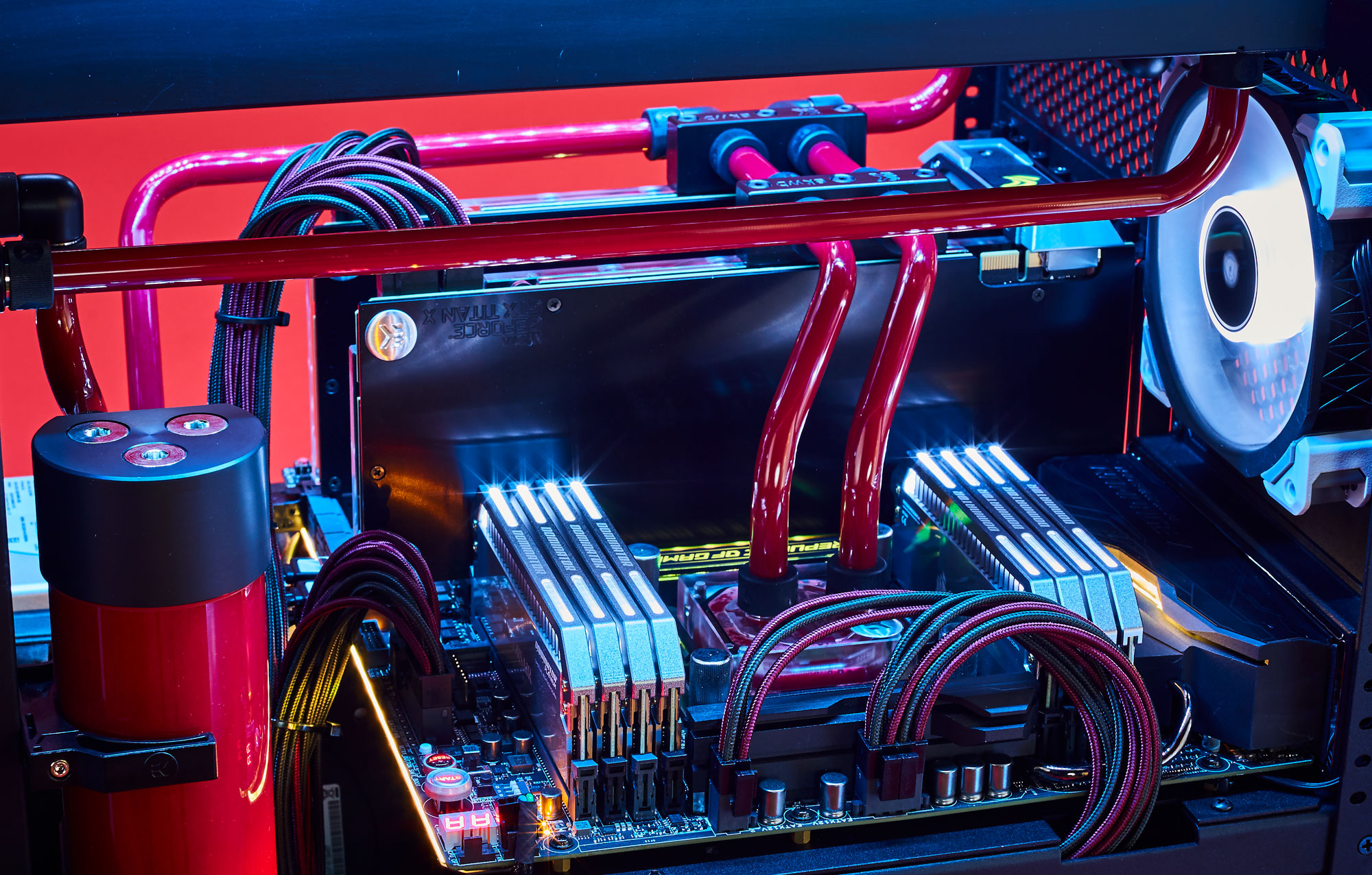

It really doesn't matter how much money or hardware you throw at the problem, because a year later, there will always be newer, faster technology that unlocks additional features. Our Large Pixel Collider and Maximum PC's Dream Machine both represent the best gaming hardware available. With prices well north of $10,000, you'd think they could handle any game we might care to run, at 4K and with all the settings maxed out. They get there most of the time, but there are inevitably a few games where they come up short. The hardware and software necessary for max quality simply don't exist yet.

Our test PC is very similar to our current high-end gaming PC build. We're using a GTX 1080, which is the main element, but we've upgraded the CPU to an overclocked Core i7-6800K with an MSI X99A Gaming Pro Carbon motherboard. This is a $2,500 / £2,200 system built to handle just about anything, with room for a second GTX 1080 if you're thinking about 4K, or if you want that 1440p 144Hz display to be put to good use.

So what are these games that can bring even the mightiest PC to its knees, and what are they doing that requires more processing power than a third-world country? Here's our list of the most demanding PC games currently available. I've tested each game in the list, running at 2560x1440 and (* mostly) maximum quality on a GTX 1080 paired with a 4.2GHz i7-6800K. This is a step down from the absolute maximum hardware and settings you might run, but running at 4K will basically cut the performance in half, and as you'll see shortly, that means nearly all of these games fail to achieve 60 fps. For each game, I'll report the average and 97th percentile minimum fps, as well as the settings used.
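As a rough illustration of the two numbers reported for each game, here is a minimal Python sketch that derives an average fps and a percentile-based minimum fps from a list of frame times. The function name `fps_summary`, the sample data, and the nearest-rank percentile method are all assumptions made for illustration; the article does not say exactly how its 97th percentile minimum is calculated, so treat this as one plausible reading rather than the method used for the results here.

```python
import math


def fps_summary(frame_times_ms, percentile=97.0):
    """Return (average fps, percentile-minimum fps) for frame times in ms.

    Assumption: the "minimum" is the fps equivalent of the frame time at
    the given percentile (nearest-rank method), so the slowest few
    percent of frames are ignored as outliers.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    # Average fps is total frames divided by total elapsed time.
    total_ms = sum(frame_times_ms)
    average_fps = 1000.0 * len(frame_times_ms) / total_ms

    # Nearest-rank percentile on the sorted frame times (slowest last).
    ordered = sorted(frame_times_ms)
    rank = max(1, math.ceil(percentile * len(ordered) / 100.0))
    slow_frame_ms = ordered[rank - 1]

    return average_fps, 1000.0 / slow_frame_ms


if __name__ == "__main__":
    # Hypothetical capture: 90 frames at 10 ms and 10 frames at 20 ms.
    sample = [10.0] * 90 + [20.0] * 10
    avg, low = fps_summary(sample)
    print(f"{avg:.0f}/{low:.0f} fps avg/min")
```

A percentile-based minimum is less sensitive to a single hitch than the absolute lowest frame rate, which is presumably why it is reported alongside the average.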

* Some features, like SSAA and even MSAA in certain games, are simply too demanding to warrant enabling. SSAA, for example, is equivalent to doubling or quadrupling the number of pixels rendered.
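The pixel arithmetic behind the footnote, and behind the rule of thumb that 4K roughly halves performance, is easy to check. The Python sketch below uses a hypothetical helper, `pixel_count`, and treats the SSAA factor as a simple multiplier on rendered pixels. That is an assumption: pixel count is only a proxy for rendering cost, and real performance rarely scales perfectly with it.

```python
def pixel_count(width, height, ssaa_factor=1):
    """Pixels rendered per frame, with SSAA treated as a plain multiplier."""
    return width * height * ssaa_factor


if __name__ == "__main__":
    baseline = pixel_count(2560, 1440)  # the 1440p test resolution

    cases = [
        ("1440p", pixel_count(2560, 1440)),
        ("1440p + 2x SSAA", pixel_count(2560, 1440, ssaa_factor=2)),
        ("4K", pixel_count(3840, 2160)),
        ("1440p + 4x SSAA", pixel_count(2560, 1440, ssaa_factor=4)),
    ]
    for label, pixels in cases:
        ratio = pixels / baseline
        print(f"{label:<16} {pixels:>12,} pixels ({ratio:.2f}x 1440p)")
```

By this count, 4K has 2.25 times the pixels of 1440p, and 4x SSAA at 1440p renders more pixels than native 4K.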

Crysis (2007)

Engine: CryEngine (1)

2560x1440 very high 4xAA: 72/33 fps avg/min

"Can it run Crysis?"

It's not the first game to punish high-end systems with extreme system requirements, but it's the game that kicked off a meme that continues today. That $20 billion supercomputer is fast … but can it run Crysis? LPC is fast, but can it run Crysis? And so it goes. I just pulled this game out of retirement to see how it runs on a modern system, expecting to see some good performance, but whether it's the drivers, Windows 10, or the game itself, something just doesn't seem to care that nine years have passed. Crysis continues to be a hardware glutton, and even now it tends to run poorly at its very high preset, averaging above 60 fps on the test system but also routinely dropping well below that mark.

Back in 2007, running Crysis at maximum quality wasn't just demanding, it was impossible—there wasn't a system around that could come close to 60+ fps without dropping many of the settings. What was the problem? Crysis pioneered the use of several advanced rendering techniques, and it was one of the first DirectX 10 games to hit the market. The combination of new algorithms and a new API meant that it pushed the technology boundaries in ways that the hardware wasn't really equipped to handle. And yes, the implementation probably wasn't optimal. Crysis Warhead and Crysis 2 stepped back the hardware requirements (relative to what was available) without a major drop in quality, and while Crysis 3 (2013) continues to tax modern systems (64/47 fps on my test system), none of those sequels have pushed hardware at the time of release quite as hard as the original Crysis.

Hitman (2016)

Engine: Glacier 2

2560x1440 max (no SSAA): 81/57 fps

Hitman won't punish your system like some other games on this list, but crank up the settings to maximum and it can be pretty choppy—and that's without supersample antialiasing (SSAA). It's not just about the graphics card either, at least not if you want to push high frame rates. You'll need a good CPU, preferably with multiple cores, and then you'll want to run the game in DX12 mode to fully utilize the CPU cores.

Since Hitman isn't quite as fast-paced as other games, slight dips in framerate aren't as painful, and lower settings can provide a big boost to performance. But the bare minimum GPU you'll need to maintain 60 fps at 1080p 'ultra' is a GTX 980, GTX 1060 6GB, or RX 470, while at 1440p the GTX 1080 provides (mostly) smooth sailing.

Grand Theft Auto V (2015)

Engine: Rage

2560x1440 max + advanced: 64/43 fps

Grand Theft Auto wasn't always known as a series that could bring your system to its knees, and to its credit, GTA5 can scale way back on image quality and get much higher frame rates. But if you want to max out the settings, Rockstar has a lot of extra features that put the hurt on your GPU. Along the way to maxing out every graphics option, I enabled Nvidia PCSS (that's 'percentage closer soft shadows' if you're wondering), 4xMSAA, and all the extras in the advanced graphics settings. That dropped performance down to just over 60 fps with periodic dips below that, and it required more than 4GB VRAM, which is pretty impressive for a game that can run at close to 200 fps at 'normal' settings on the same hardware. Multi-GPU scaling is good, however, so high-end SLI systems can generally handle 4K at max (or close to it) settings.

Battlefield 1 (2016)

Engine: Frostbite 3

2560x1440 ultra: 115/89 fps

Battlefield 1 looks quite nice and can run well on a large variety of graphics cards, but while the single-player campaign isn't too demanding, multiplayer can really tax your CPU. In most games, a Core i5 processor will be the least of your worries, but in BF1 with 64 players running around, we saw framerates drop nearly 30 percent going from an overclocked 6-core 4.2GHz i7-5930K to a 4-core 3.9GHz i5-4690. That puts performance just over 80 fps average still, but expect plenty of dips below 60 fps during intense battles.

Games that make me say you should seriously consider opting for a Core i7 over a Core i5 are rare, but Battlefield 1 definitely belongs on the list. Note also that Battlefield 4 and Star Wars: Battlefront use the same engine, though they don't seem to be quite as demanding as the newcomer.

Far Cry Primal (2016)

Engine: Dunia

2560x1440 ultra + HD textures: 76/56 fps

Despite coming from the same publisher, Ubisoft, Far Cry Primal uses a different engine than The Division (see below). It also omits some of the Nvidia-specific technologies, and the result is a slightly less taxing game. That doesn't mean it runs super well on every card, however, and with the HD texture pack installed our GTX 1080 manages to just about maintain a constant 60+ fps. If you've got a G-Sync or FreeSync display, preferably of the 1440p 144Hz variety, that's not a problem, but AMD's Fury X can't even hit 60 fps at the same settings. And at 4K, only dual-GPUs will get you there.

The Division (2016)

Engine: Snowdrop

2560x1440 max HBAO+ HFTS SMAA: 54/36 fps

The Division includes dynamic lighting, reflections, parallax mapping, and contact shadows, along with some Nvidia GameWorks technologies like HBAO+ and HFTS (Hybrid Frustum Traced Shadows, an enhancement of PCSS). I normally test using the 'ultra' preset, but there are quite a few features that can be set to even higher levels. For this test, I maxed out everything, including object rendering distance, SMAA, and of course HFTS shadows and HBAO+ ambient occlusion. The GTX 1080 scores 69/46 at the ultra preset, but adding in these other features drops performance by over 20 percent. At maximum quality settings, that makes The Division one of the most demanding games currently available, though you'll need an Nvidia GPU if you want to enable certain features like HFTS.
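The "over 20 percent" figure follows directly from the two results quoted for The Division. Here is a minimal Python check, using a hypothetical helper named `percent_drop` and only the fps values given in the text:

```python
def percent_drop(before_fps, after_fps):
    """Percentage of performance lost going from before_fps to after_fps."""
    return (before_fps - after_fps) / before_fps * 100.0


if __name__ == "__main__":
    ultra_avg, ultra_min = 69, 46   # GTX 1080 at the ultra preset
    maxed_avg, maxed_min = 54, 36   # everything maxed, HBAO+ and HFTS on

    print(f"average fps drop: {percent_drop(ultra_avg, maxed_avg):.1f}%")
    print(f"minimum fps drop: {percent_drop(ultra_min, maxed_min):.1f}%")
```

Both the average and the minimum fall by a little under 22 percent, so the extra settings cost about the same across the run rather than only hurting the worst moments.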

The Witcher 3 (2015)

Engine: REDengine 3

2560x1440 ultra w/ HBAO+: 53/40 fps

Some people complained that the graphics quality of The Witcher 3 was reduced between the preview 'bullshots' and what we eventually received, but that was probably done in the name of balancing performance against image fidelity. Even so, the game is still super demanding, especially if you enable HairWorks and all the other extras, which I did for this test. That drops performance by 25 percent compared to my normal testing (without HairWorks or HBAO+), and the GTX 1080 ends up dropping well below 60 fps.

One interesting fact to point out is that if you have an SLI setup, the in-game anti-aliasing (FXAA) causes serious issues with multi-GPU scaling, so you're better off disabling that feature in the game and using Nvidia's drivers to force FXAA on. Once you do that, a second GPU can improve performance by around 75 percent, which means 1080 SLI is just about enough to run 4K at max settings and still get 60 fps.

Ark Survival Evolved (2015 Early Access)

Engine: Unreal Engine 4

2560x1440 epic: 30/20 fps

Okay, Ark is still in Early Access, though it's supposed to launch before the end of the year—maybe. It's been around long enough now that I had hoped performance would improve, but much like players in Ark, it punches and stabs your hardware in the face, then stomps all over it with a giant dinosaur. Using the 'epic' preset, I could barely hit 30 fps, let alone 60—and it doesn't really matter whether you're staring at the face of a rock wall or looking out over a vast expanse of the island, Ark will run like a three-toed sloth on its way to a nap. You might think dropping the settings would help a lot, and the low setting certainly does, but even at 1440p medium framerates hover around the 60 fps mark.

There was talk last year of Ark getting a DirectX 12 patch to improve performance, and maybe that will still happen. But unless I'm mistaken, the game needs far more in the way of optimizations (see above note about staring at a rock wall—culling all the content behind the face of the rock should significantly boost framerates). And frankly, it's not the prettiest game around to begin with. Unless you have a pair of high-end GPUs (1080/1070 or Nano/Fury X), or a patch comes along that makes a huge difference in performance, plan on shooting for 30+ fps in Ark using the medium or high preset.

Rise of the Tomb Raider (2015)

Engine: Foundation

2560x1440 max + VXAO + SMAA: 54/44 fps

If you've noticed that many of these games use Nvidia's GameWorks libraries, you might be tempted to think that GameWorks is equivalent to a game being poorly optimized. That's not actually the case (or at least you can't prove it one way or the other), however, as GameWorks is simply a library of graphics effects that Nvidia has created, many of them designed to showcase stuff that you might not otherwise see. Case in point is Rise of the Tomb Raider's VXAO, voxel ambient occlusion, which is the next step in indirect (ambient) shadow quality beyond HBAO+.

The problem with many of these effects is that changes in the way things render aren't always clearly better. Check out this image comparison from Nvidia between VXAO and HBAO+, where VXAO looks like a brightening filter combined with bloom. Does it look better or worse than the HBAO+ image? I'm not sure I could say. What I can say is that Rise of the Tomb Raider is already a demanding game, and turning on VXAO causes a substantial 20 percent drop in performance, putting the GTX 1080 well below the 60 fps mark yet again. Note that VXAO is currently only supported on Maxwell 2.0 and Pascal cards, and it's only available in DX11 mode.

Ashes of the Singularity (2016)

Engine: Nitrous

2560x1440 max: 37/24 fps

Originally conceived as the tech demo Star Swarm to show the benefits of low-level APIs (AMD's Mantle at the time), the Nitrous engine, as it's now called, eventually got ported to DirectX 12 and became a real-time strategy game that can show hundreds of units on the screen at once. Under DX11, the number of draw calls will cause severe problems at maximum quality, and while lower settings help, even the 1080p 'standard' setting can punish systems.

I decided to take things to the next level, starting at the 'crazy' preset and then maxing out temporal AA quality, terrain object quality, shadow quality, and texture quality. This gave me the second-lowest fps on a GTX 1080 of the games I've tested (behind Ark), but because it's a real-time strategy game, it feels a bit more forgiving than your typical first-person shooter. What's interesting here is that, even with an overclocked 6-core processor, the CPU appears to be a significant bottleneck. Just plan on running lower quality settings on most systems and you'll be okay… maybe. A GTX 1050 at 1080p standard only averages 36 fps.

Arma 3 (2013)

Engine: Real Virtuality 4

2560x1440 max 4xMSAA: 42/22 fps

Developer Bohemia Interactive has a reputation for creating realistic warfare simulations, and all that number crunching combined with the large maps means you'll want a powerful system. Arma 3 is known for being a system crusher, and it can make use of faster CPUs like the Core i7 if you have them... sort of. The single player game isn't too bad, but like Battlefield 1, multiplayer takes things to a whole new level. I measured performance of 80/62 fps in single player, while on a server with 80 people on the island of Stratis, staying above 40 fps was a challenge, and dips into the low 20s were a common occurrence. Turning settings down helps a bit, but Arma 3 multiplayer doesn't seem like it's intended to run at higher frame rates, as even dropping to the low defaults still left my system below 60 fps in most areas, suggesting the bottleneck lies elsewhere.

Deus Ex: Mankind Divided (2016)

Engine: Dawn

2560x1440 ultra (no AA): 50/40 fps *

Deus Ex: Mankind Divided is the undisputed king of demanding games right now. Select the 'ultra' preset and DXMD will consume your graphics card and leave it spitting out broken and flickering pixels. Okay, not really. But the game can take even the fastest GPU and still fail to come anywhere near 60 fps at 1440p ultra, and that's without enabling 4xMSAA. * Enabling 4xMSAA cuts performance in half, which means a single GTX 1080 pokes along at just 25/20 fps at 1440p. You can forget about 4K ultra for now, as that would halve framerates yet again. A pair of Titan X cards in SLI might get above 30 fps at 4K ultra with 4xMSAA, though. And unlike Ark, the game actually looks really great: you can at least appreciate the level of detail and complexity being rendered on your system and think, "okay, I see why my system is struggling."

The secret to DXMD's GPU-killing performance comes via every modern graphical bell and whistle you care to name. Dynamic lighting, screenspace reflections, tessellation, volumetric lighting, subsurface scattering, cloth physics, parallax occlusion mapping, and hyperbolic refractions. (I made that last one up.) The good news is you can disable a lot of these features to improve performance, and not all of them make a huge difference in the way the game looks. Still, even using the low (minimum quality) preset on a GTX 1080, DXMD only manages to average 128/97 fps at 1080p. The GTX 1080 is about three times as fast as the GTX 1050, which means budget cards like the 1050 at minimum quality will only run at ~40 fps. This is the Crysis of 2016, using technology and hardware in ways that will tax even the fastest current system. Perhaps five years from now, we'll have hardware that will finally be up to the task.
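The ~40 fps estimate for the GTX 1050 is a straight division of the GTX 1080 result by the quoted speed ratio. The Python sketch below reproduces it with a hypothetical helper, `estimate_fps`. It assumes frame rate scales linearly with relative GPU speed, which is the same simplification the estimate above relies on; real results can differ with VRAM limits, CPU bottlenecks, and drivers.

```python
def estimate_fps(reference_fps, speed_ratio):
    """Estimate fps on a slower card, assuming purely linear GPU scaling.

    speed_ratio is how many times faster the reference card is.
    """
    if speed_ratio <= 0:
        raise ValueError("speed_ratio must be positive")
    return reference_fps / speed_ratio


if __name__ == "__main__":
    gtx1080_avg, gtx1080_min = 128, 97   # DXMD, 1080p low preset
    ratio_1080_to_1050 = 3.0             # "about three times as fast"

    avg = estimate_fps(gtx1080_avg, ratio_1080_to_1050)
    low = estimate_fps(gtx1080_min, ratio_1080_to_1050)
    print(f"estimated GTX 1050: {avg:.0f}/{low:.0f} fps avg/min")
```

Applying the same ratio to the minimum suggests dips into the low 30s, though that figure is an extrapolation and not a measured result.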

For more details on what makes a game demanding, be sure to check out what optimization in games really means.

Having a game push my PC to the limit and force me to upgrade is nothing new. For me, it all started back with Wing Commander on a 286 12MHz. My first PC (that I bought) was less than six months old when the game came out, and I suddenly found out that my 16-bit CPU couldn't do things that the 32-bit 386 would allow—EMS / Expanded Memory being the specific issue in this case. I sold off my still-new 286 and eventually dropped another $500 to upgrade to the fastest 386 I could find (33MHz), doubling my RAM in the process. The 486 was technically available as well, but to a 17-year-old earning minimum wage, that was like going out and buying the $1650 Core i7-6950X today.

What's truly crazy to think about, however, is that more than 25 years later, my current 6-core i7 processor is nearly 100,000 times faster than that old 12MHz 286. Moore's Law may be dead, but ten years from now we're still going to have significantly faster systems than we do today, which will enable the next set of ultra-demanding games.

What games have you played that punished your hardware so badly that you eventually upgraded just to get a proper experience from the game? Let me know in the comments, and I'll see about updating this list as needed!

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.