Nvidia is reportedly looking to cut gaming GPU production by up to 40% in 2026 due to VRAM supply issues, but it's not as bad as you might think. Not yet, at least.

There's already an excess of RTX 50-series cards, for starters.

It might feel like tech news is a stuck record at the moment, with every other headline reporting on the grim effects of the current DRAM supply crisis. And at first glance, a report that Nvidia is planning on cutting discrete GPU production by up to 40% just seems like a case in point. However, while it is all RAM-related, it's not necessarily bad tidings.

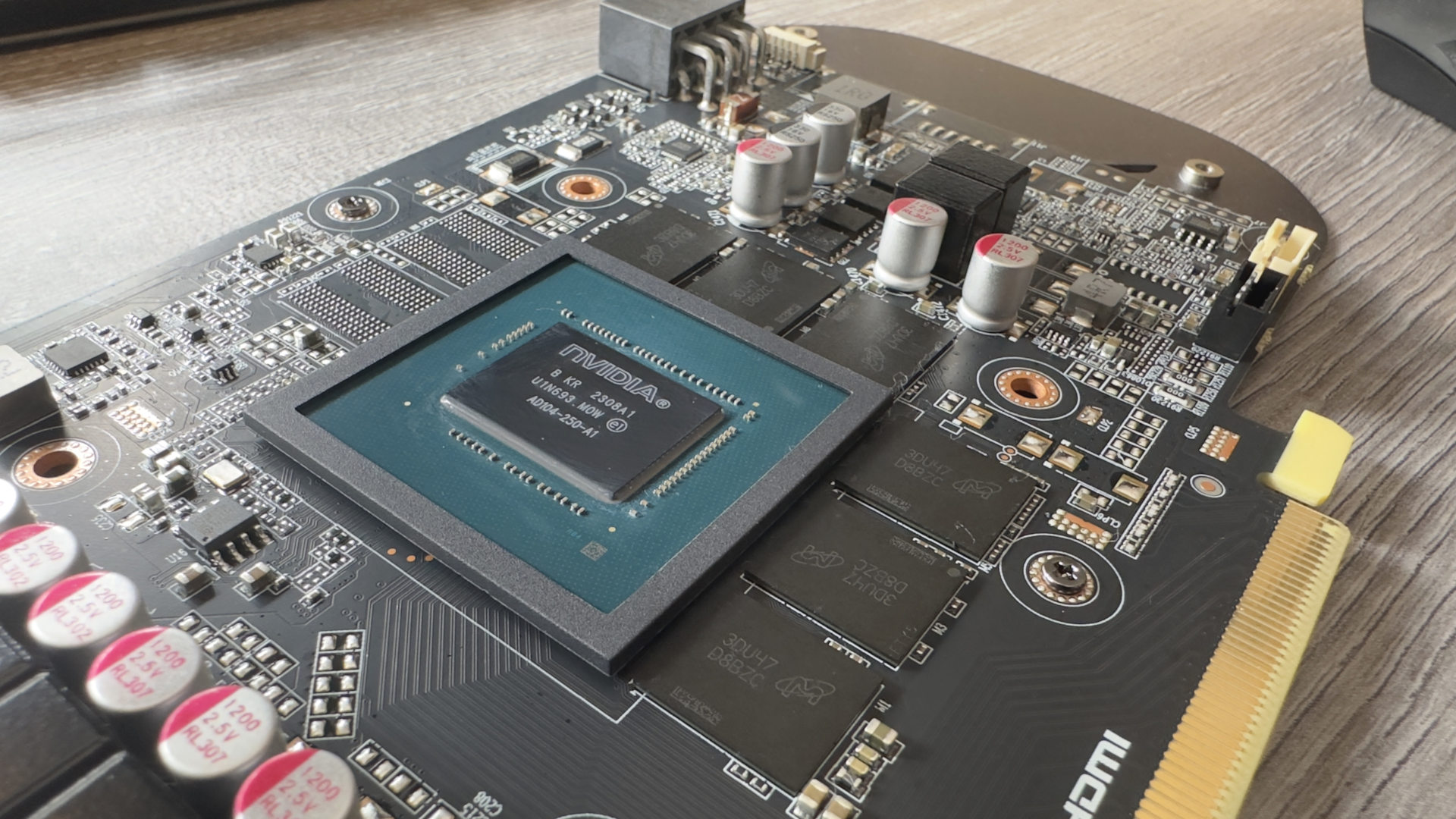

The report in question was posted on the Chinese Board Channels forum (via Benchlife and Overclock3D) and claims that Nvidia is set to reduce the supply of its gaming GPUs by 30% to 40% in the first half of 2026, compared with its output over the same period in 2025.

That's not surprising, given that the DRAM supply problems affecting the entire computing industry are expected to result in fewer desktop and laptop PCs being shipped next year. Since so many of those machines sport an Nvidia discrete GPU, either soldered onto the motherboard or in the form of a third-party graphics card, demand for RTX Blackwell chips is bound to fall sharply.

However, there's not exactly a huge shortage of GeForce RTX 50-series cards at the moment, with the exception of sensibly priced RTX 5080 and 5090 models. Browse through any retailer's listings, and you'll see endless supplies of RTX 5050, 5060, and 5070 graphics cards. This certainly wasn't the case 10 months ago, but Nvidia and its partners have ramped up production considerably since Blackwell launched.

So you're unlikely to see RTX 50-series cards suddenly disappearing from shelves, though the report also suggests that some add-in board vendors plan to cut supplies of 16 GB models first, namely the 16 GB RTX 5060 Ti and the RTX 5070 Ti. Again, that makes sense: the 16 GB RTX 5060 Ti uses twice as many GDDR7 modules as the 8 GB version, and the RTX 5070 Ti uses 33% more memory chips than the RTX 5070.
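To see where those figures come from, here's a rough sketch of the module arithmetic. It assumes every card uses 2 GB GDDR7 chips, one per 32-bit memory channel, and that the 16 GB RTX 5060 Ti pairs two chips on each channel ("clamshell" mode), which is the only way to reach 16 GB on a 128-bit bus with 2 GB chips. The `Card` class and helper function are purely illustrative.

```python
from dataclasses import dataclass

CHANNEL_WIDTH_BITS = 32  # each GDDR7 module sits on one 32-bit channel


@dataclass(frozen=True)
class Card:
    name: str
    bus_width_bits: int
    module_gb: int
    clamshell: bool = False  # assumption: two modules share each channel

    @property
    def modules(self) -> int:
        channels = self.bus_width_bits // CHANNEL_WIDTH_BITS
        return channels * (2 if self.clamshell else 1)

    @property
    def vram_gb(self) -> int:
        return self.modules * self.module_gb


cards = {
    c.name: c
    for c in [
        Card("RTX 5060 Ti 8 GB", 128, 2),
        Card("RTX 5060 Ti 16 GB", 128, 2, clamshell=True),
        Card("RTX 5070", 192, 2),
        Card("RTX 5070 Ti", 256, 2),
    ]
}


def extra_modules_pct(a: Card, b: Card) -> float:
    """How many more modules (in %) card a needs than card b."""
    return (a.modules / b.modules - 1) * 100


for card in cards.values():
    print(f"{card.name}: {card.modules} modules, {card.vram_gb} GB")

print(round(extra_modules_pct(cards["RTX 5060 Ti 16 GB"], cards["RTX 5060 Ti 8 GB"])))  # 100
print(round(extra_modules_pct(cards["RTX 5070 Ti"], cards["RTX 5070"])))  # 33
```

Eight chips versus four is where "twice as many" comes from, and eight versus six gives the 33% figure.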

There's another factor to consider in all of this, which isn't covered in the report: Nvidia's expected RTX 50 Super variants. The general assumption, based on the numerous leaks and rumours that have cropped up throughout 2025, is that these cards will use 3 GB GDDR7 modules instead of the standard 2 GB ones.

RTX 5090 laptops already use these, which is why they sport 24 GB of VRAM, even though the GPU inside only has a 256-bit memory bus. That bus is made up of eight 32-bit channels, each connected to one memory module, so 8 × 3 GB = 24 GB. Should an RTX 5080 Super, RTX 5070 Super, and possibly even an RTX 5060 Super all use these higher-capacity VRAM chips, then Nvidia will have to contend with the fact that while it has enough GPUs to meet the demand for these cards, it can't guarantee the same for the VRAM.
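The same arithmetic shows why the Super rumours matter for memory supply. Below is a quick sketch that assumes one module per 32-bit channel (no clamshell) and, purely as a hypothesis, that each Super card keeps the bus width of its non-Super counterpart (256-bit for the RTX 5080, 192-bit for the RTX 5070, 128-bit for the RTX 5060). The `vram_gb` function is an illustrative helper, not anything Nvidia publishes.

```python
CHANNEL_WIDTH_BITS = 32  # one GDDR7 module per 32-bit channel (no clamshell)


def vram_gb(bus_width_bits: int, module_gb: int) -> int:
    """Total VRAM for a GPU with one memory module on each 32-bit channel."""
    if bus_width_bits % CHANNEL_WIDTH_BITS:
        raise ValueError("bus width must be a multiple of 32 bits")
    return (bus_width_bits // CHANNEL_WIDTH_BITS) * module_gb


# The RTX 5090 laptop GPU case: 256-bit bus with 3 GB modules.
print(vram_gb(256, 3))  # 24

# Hypothetical Super cards, ASSUMING they keep their non-Super bus widths.
for name, bus in [("RTX 5080 Super", 256), ("RTX 5070 Super", 192), ("RTX 5060 Super", 128)]:
    old, new = vram_gb(bus, 2), vram_gb(bus, 3)
    print(f"{name}: {old} GB -> {new} GB (+{(new / old - 1) * 100:.0f}% DRAM per card)")
```

The module count per card stays the same, but each one carries 50% more DRAM, so every Super card shipped would soak up noticeably more of the memory makers' output than the card it replaces.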


The solution? Cut the supply of 'normal' GDDR7-equipped chips so that the VRAM suppliers are able to produce sufficient memory chips for the Super models. As far as I can tell, only Samsung is producing 3 GB GDDR7 modules in any real quantity, and if Nvidia is already relying on the company for 2 GB chips, switching production over to the higher-density modules means less VRAM will be available for its regular Blackwell chips.

That said, there has also been a rumour that Nvidia is planning to stop bundling VRAM modules with its GPUs (as things currently stand, board partners such as Asus and MSI buy both from Nvidia), so if that comes to pass, then the news may be grim reading after all.

Since RTX Blackwell isn't even a year old yet, the next generation of GeForce GPUs isn't going to appear any time soon, so Nvidia will want to have something new for the graphics card market in 2026. That can only be RTX 50 Supers, and if those fresh models have already been planned and lined up for launch, Nvidia will need to do everything it can to ensure that they're actually available to buy.

Mind you, there wasn't a RAM supply crisis when Blackwell launched earlier this year, and yet supplies were so bad that most GeForce RTX 50 cards were either incredibly hard to find or priced hundreds of dollars over MSRP. I don't drink alcohol, but I'm still going down to the Winchester to have a pint of something fizzy and wait until this all blows over.


Nick, gaming, and computers all first met in the early 1980s. After leaving university, he became a physics and IT teacher and started writing about tech in the late 1990s. That resulted in him working with MadOnion to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its PC gaming section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but he missed writing. Cue four years at TechSpot.com covering everything and anything to do with tech and PCs. He freely admits to being far too obsessed with GPUs and open-world grindy RPGs, but who isn't these days?
