AMD's Linux devs jamming nearly 24,000 lines of RDNA 4 support code into a mainstream driver suggests a next-gen launch may be close at hand

Mesa mega merge means a magnificent moment may manifest…err…soon.


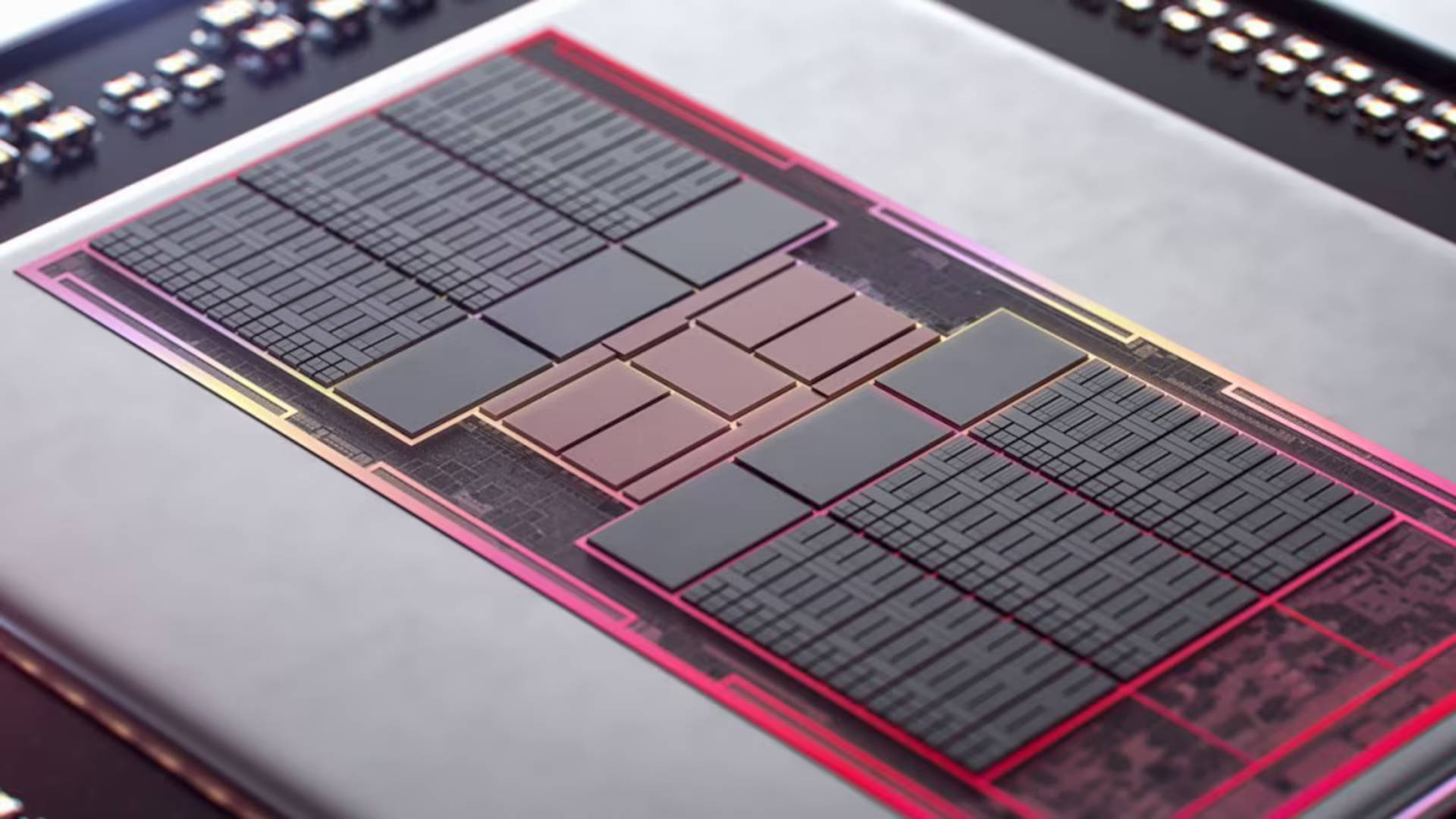

AMD's software engineers have been very busy of late, updating the company's Linux GPU kernel driver, shader compiler, and other code to support its next generation of graphics architecture. Now they've done the same for the RadeonSI OpenGL driver, adding almost 24,000 lines of code for GFX12, aka RDNA 4, to the Mesa open-source graphics library.

The big code merge was spotted by Phoronix, and there's really only one reason engineers would be this busy updating their code base for an architecture that isn't on the market yet: it soon will be.

RDNA 3, the current GPU chip design, is identified internally by AMD as GFX11, so anything referring to GFX12 is clearly about its successor. I'm not talking about RDNA 3.5, which is listed as GFX11.5 and will only bring minor changes when it appears in AMD's Strix Point and Strix Halo laptop APUs. All of the recent code merges are for RDNA 4, although the files themselves don't tell us much about what we can expect from the forthcoming architecture.

Radeon RX 7000-series GPUs were first introduced in November 2022, with the RX 7900 XTX and XT being the first consumer-level graphics cards to use chiplets. Despite their strong performance, RDNA 3 cards haven't sold as well for AMD as RDNA 2 ones, as the latter have generally offered better bang for buck.

Thanks to dwindling stocks of certain RX 6000 models and various price cuts for the likes of the RX 7900 XT and 7800 XT, things are a little rosier, but overall AMD hasn't managed to capture a significantly larger share of the discrete GPU market. So what can we expect, or hope, to see with RDNA 4? Put another way, what needs to improve in AMD's next GPU architecture for sales to pick up?

As there's nothing wrong with the fundamental rendering performance (aka rasterization) of AMD's design, it comes down to the areas where RDNA 3 trails Nvidia's Ada Lovelace-powered RTX 40-series: ray tracing, using machine learning to boost performance, and power efficiency. Ray tracing is where the rumours suggest RDNA 4 will differ most from the current generation, with a new approach to how it's handled.

RDNA 3 GPUs have dedicated hardware for accelerating ray-triangle intersections, but nothing for traversing the BVH (bounding volume hierarchy), the tree of boxes that narrows down which triangles a ray might hit. That's all done by the same units that run all the usual shaders. It's a similar situation with the calculations for deep learning neural networks. Where Intel and Nvidia have large, dedicated matrix units, AMD uses a combination of 'AI accelerators' and shader units to do the same job.
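To picture that split, here's a minimal Python sketch of a stack-based BVH traversal. It's a generic textbook illustration, not AMD's driver or hardware code, and every name in it is hypothetical. The `ray_box` (slab test) and `ray_triangle` (Möller–Trumbore algorithm, our choice) functions stand in for the intersection tests that ray tracing hardware speeds up, while the `traverse` loop, which pops nodes off a stack and decides where to look next, is the kind of bookkeeping that on RDNA 3 falls to the general-purpose shader units.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Tri = Tuple[Vec3, Vec3, Vec3]

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

def ray_triangle(orig: Vec3, d: Vec3, tri: Tri, eps: float = 1e-9) -> Optional[float]:
    """Moller-Trumbore ray-triangle test: hit distance t, or None on a miss.
    Stands in for the fixed-function intersection work (illustrative only)."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(d, e2)
    det = dot(e1, p)
    if abs(det) < eps:          # ray parallel to the triangle's plane
        return None
    inv = 1.0 / det
    s = sub(orig, v0)
    u = dot(s, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(d, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None  # ignore hits behind the ray origin

def ray_box(orig: Vec3, d: Vec3, lo: Vec3, hi: Vec3) -> bool:
    """Slab test: does the ray pass through the axis-aligned box [lo, hi]?"""
    tmin, tmax = 0.0, float("inf")
    for i in range(3):
        if abs(d[i]) < 1e-12:   # ray parallel to this pair of slabs
            if orig[i] < lo[i] or orig[i] > hi[i]:
                return False
        else:
            t1 = (lo[i] - orig[i]) / d[i]
            t2 = (hi[i] - orig[i]) / d[i]
            tmin = max(tmin, min(t1, t2))
            tmax = min(tmax, max(t1, t2))
    return tmin <= tmax

@dataclass
class Node:
    """A BVH node: a bounding box plus child nodes and/or leaf triangles."""
    lo: Vec3
    hi: Vec3
    children: List["Node"] = field(default_factory=list)
    tris: List[Tri] = field(default_factory=list)

def traverse(root: Node, orig: Vec3, d: Vec3) -> Optional[float]:
    """Stack-based traversal loop: the part the article says RDNA 3
    runs on its ordinary shader units. Returns the nearest hit distance."""
    closest: Optional[float] = None
    stack = [root]
    while stack:
        node = stack.pop()
        if not ray_box(orig, d, node.lo, node.hi):
            continue            # skip this whole subtree
        for tri in node.tris:
            t = ray_triangle(orig, d, tri)
            if t is not None and (closest is None or t < closest):
                closest = t
        stack.extend(node.children)
    return closest

if __name__ == "__main__":
    near = Node((-1, -1, 4.5), (1, 1, 5.5), tris=[((-1, -1, 5), (1, -1, 5), (0, 1, 5))])
    far = Node((-1, -1, 9.5), (1, 1, 10.5), tris=[((-1, -1, 10), (1, -1, 10), (0, 1, 10))])
    root = Node((-1, -1, 4.5), (1, 1, 10.5), children=[near, far])
    print(traverse(root, (0, 0, 0), (0, 0, 1)))
```

Firing a ray from the origin straight along the z axis at triangles sitting at z = 5 and z = 10 returns 5.0, the nearer hit. Real GPUs use wider trees and far more elaborate scheduling, but the division of labour is the same idea: cheap box and triangle tests, wrapped in a traversal loop that has to run somewhere.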


And while the likes of the Radeon RX 7900 XTX have roughly the same average power consumption as an equivalent Nvidia card, their peak power demand is typically a lot higher, as is their idle consumption. Multiple chiplets and the complex Infinity Link system are great for wafer yields, but this first implementation needs fine-tuning to make it all more power efficient.


However, it's looking increasingly likely that we won't see a very high-end RDNA 4 graphics card, at least not at launch. There's been much talk of AMD skipping the halo sector for its next GPU release and focusing on the mid-range and mainstream markets, which points to AMD sticking with a monolithic design or one with just a few chiplets.

Whatever changes AMD has in store for us with RDNA 4, it at least looks well prepared on the driver front: if support is already landing for the relatively small Linux market, it's almost certainly in hand for the dominant Windows and DirectX market too.

And best of all, it looks like we don't have much longer to wait to find out how good it is.

Nick, gaming, and computers all first met in the early 1980s. After leaving university, he became a physics and IT teacher and started writing about tech in the late 1990s. That resulted in him working with MadOnion to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its PC gaming section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but he missed writing. Cue four years at TechSpot.com covering everything and anything to do with tech and PCs. He freely admits to being far too obsessed with GPUs and open-world grindy RPGs, but who isn't these days?