GeForce RTX 2080 and RTX 2080 Ti unboxed

These beautiful graphics cards are just waiting to be punished with benchmarks.

The arrival of a new series of graphics cards is always an event, whether it comes from AMD, Nvidia, or even Intel. The last major GPU architecture launch from Nvidia was in May 2016, when Pascal arrived. Nearly two and a half years can feel like an eternity sometimes. We've provided an in-depth look at the technical details of the Nvidia Turing architecture, talked about ray tracing, and gathered everything you need to know about the GeForce RTX 2080. But now the cards are finally here, and it's time to unpack and start benchmarking.

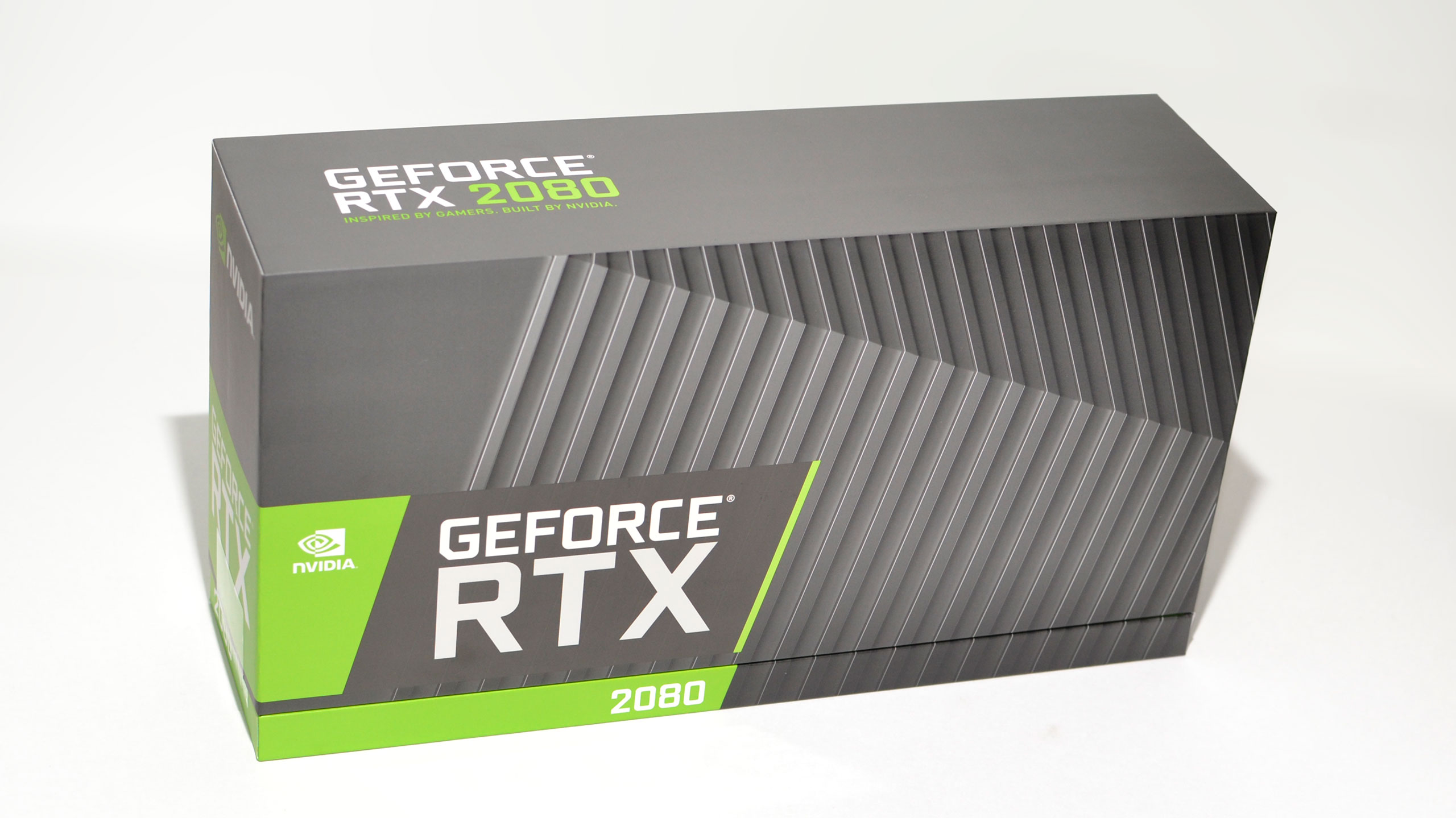

Nvidia's graphics card partners could learn a thing or two about packaging from the Founders Edition cards. There are no garish images or marketing claims, just a simple box with the name of the product. I get that Asus, EVGA, Gigabyte, MSI, et al are all trying to stake out mindshare and establish their brands, but the understated styling of Nvidia's box and cards deserves some praise.

Opening up the box is where I really appreciate Nvidia's attention to detail. There's a sturdy foam shell that conforms nicely to the GeForce RTX cards, rather than cheap cardboard or Styrofoam. You get the sense that FedEx or UPS could kick the box around and the card wouldn't be at risk. Such premium packaging might not be viable on a budget or midrange GPU, but with the Founders Edition RTX cards costing $799 for the 2080 and $1,199 for the 2080 Ti, there's no need to skimp.

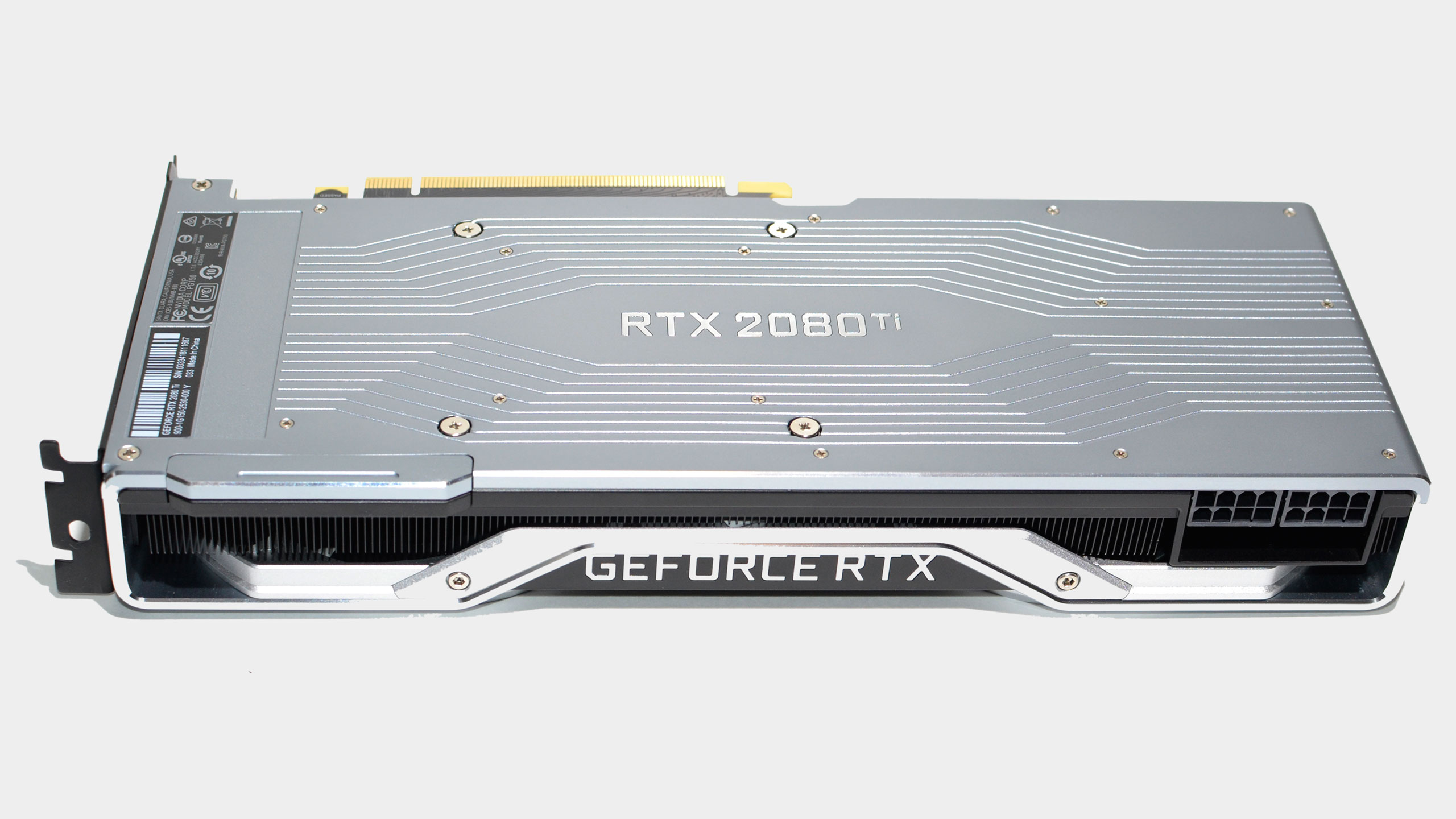

Other than the power connectors and the labels, the RTX 2080 and 2080 Ti are basically twins. They have the same video outputs, the cooling looks identical, and even the weight is nearly the same. Speaking of which, the graphics cards themselves have a heft that inspires confidence in the cooling capabilities, and Nvidia has made some bold claims about improved thermals, power, and overclocking that I'm eager to test.

The RTX 2080 has one 8-pin and one 6-pin PCIe power connection, while the RTX 2080 Ti has dual 8-pin connections. For 225W and 260W official TDPs (10W higher than the reference, thanks to the factory overclock), there's still plenty of extra power on tap, even considering the 35W that VirtualLink can draw when it's in use. Between the motherboard PCIe x16 slot (75W) and the PEG connections (75W per 6-pin, 150W per 8-pin), the 2080 has 300W available and the 2080 Ti has 375W. I suspect we won't come close to maxing out the power delivery without resorting to some extreme cooling.
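If you want to check the math on that power budget, here's a quick sketch in Python. The 75W slot, 75W 6-pin, and 150W 8-pin limits are the standard PCIe figures, and the TDPs and the 35W VirtualLink number are the ones quoted above. The function and dictionary names are my own, and treating VirtualLink draw as coming on top of the TDP is an assumption for the sake of a worst-case estimate, not something Nvidia has spelled out here.

```python
# Standard PCIe power limits, in watts.
SLOT_W = 75                                  # PCIe x16 slot
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}    # PEG connectors

# Figure quoted in the article; assumed here to be drawn on top of TDP.
VIRTUALLINK_W = 35

# Founders Edition cards: connectors and official TDPs from the article.
CARDS = {
    "RTX 2080 FE": {"connectors": ["8-pin", "6-pin"], "tdp": 225},
    "RTX 2080 Ti FE": {"connectors": ["8-pin", "8-pin"], "tdp": 260},
}


def available_power(connectors):
    """Total in-spec power from the slot plus the listed PEG connectors."""
    return SLOT_W + sum(CONNECTOR_W[c] for c in connectors)


def headroom(name):
    """Power left over after TDP and worst-case VirtualLink draw."""
    card = CARDS[name]
    return available_power(card["connectors"]) - card["tdp"] - VIRTUALLINK_W


if __name__ == "__main__":
    for name, card in CARDS.items():
        total = available_power(card["connectors"])
        print(f"{name}: {total}W available, {headroom(name)}W headroom")
```

By that rough accounting, the 2080 has about 40W to spare and the 2080 Ti about 80W, which is the margin overclocking would have to eat through before the connectors become the limit.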

I'm not quite as convinced about the shift from blower fans to dual axial fans. The dual fans will certainly be able to cool the GPU, but for smaller cases I really appreciated having a blower that would vent the heat away from other components. The pursuit of lower noise levels favors the dual fan approach, so I understand the reasoning, but I've used quite a few GPUs over the years and my experience is that blower fans are far less likely to fail. I've had two blowers where a fan failed (HD 4870 X2 and an HD 5870, if you're wondering), compared to dozens of failed fans for open air coolers. The Nvidia fans on the RTX cards do look pretty robust, but only time will tell if they hold up as well as fans on the older reference cards.

The good news is that with the graphics cards—and more importantly, the latest Nvidia drivers—now in hand, I can get to benchmarking. We'll have full reviews of both the 2080 and 2080 Ti Founders Editions up by the time the cards are for sale, next Friday. Stay tuned!

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.