What is ray tracing, and how does it differ from game to game?

Our comprehensive guide to real-time ray tracing, the next big leap in graphics processing.

Nvidia has been almost single-minded in its effort to grow the adoption of ray tracing technology, and a number of game developers and engine creators are heeding the call. A driver update that enables ray tracing support on GTX cards has added millions more consumers, and some of the best graphics cards, to the theoretical pool of ray tracing adopters. Meanwhile, the list of games that take advantage of ray tracing is growing well beyond what was available shortly after the launch of the RTX series.

When trying to explain a relatively complex rendering technology to the uninitiated it's tempting to over-simplify, especially if you're responsible for marketing and selling that tech. But a basic explanation of what ray tracing is and how it works tends to gloss over some of its most important applications—including some of what's positioning ray tracing to be the next major revolution in graphics.

We wanted to dive deep into not only what ray tracing actually is, but also drill down on specific ray tracing techniques and explain how they work and why they matter. The goal is not only to get a firmer understanding of why ray tracing is important and why Nvidia's championing it so hard, but also to show you how to identify the differences between scenes with and without ray tracing and highlight exactly why scenes with it enabled look better.

A short primer on computer graphics and rasterization

Creating a virtual simulation of the world around you that looks and behaves properly is an incredibly complex task, so complex, in fact, that we've never really attempted it. Forget about things like gravity and physics for a moment and just think about how we see the world. An effectively infinite number of photons (particles of light) zip around, reflecting off surfaces and passing through objects, all based on the molecular properties of each object.

Trying to simulate 'infinity' with a finite resource like a computer's computational power is a recipe for disaster. We need clever approximations, and that's how modern graphics rendering (in games) currently works.

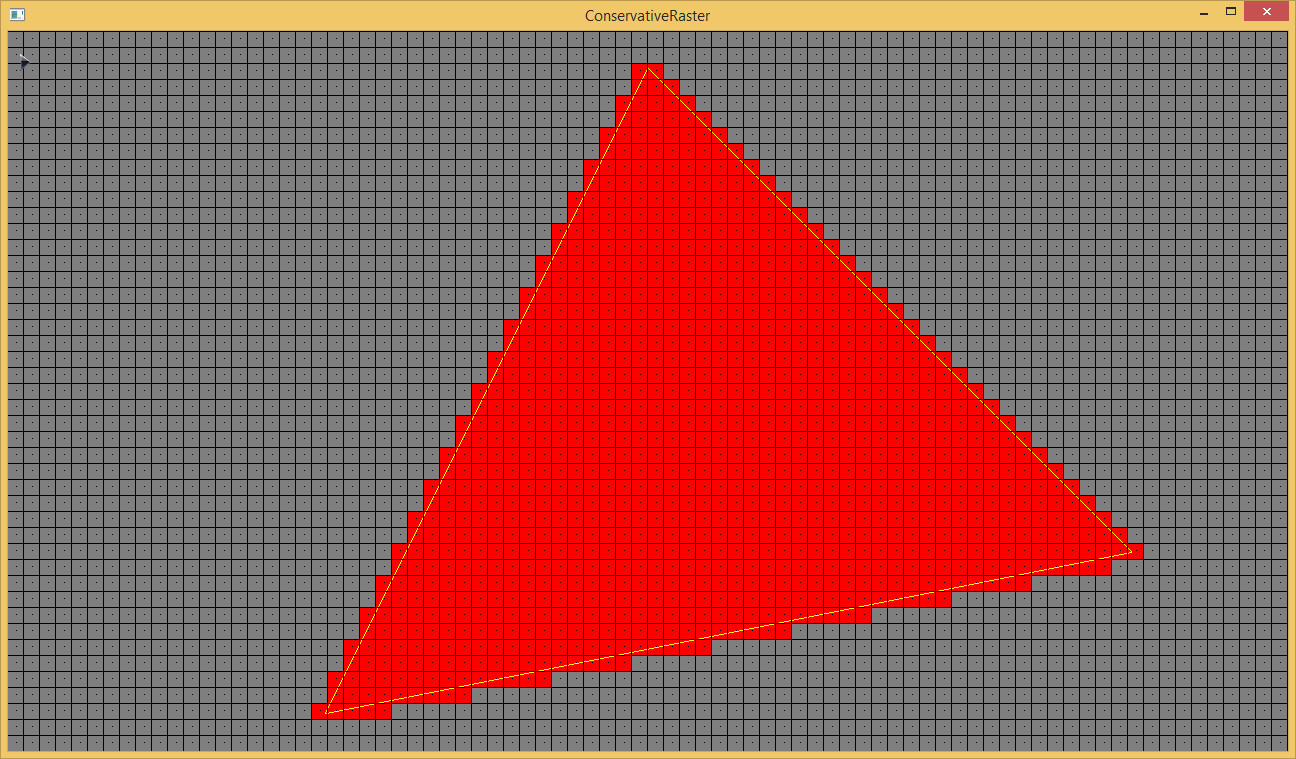

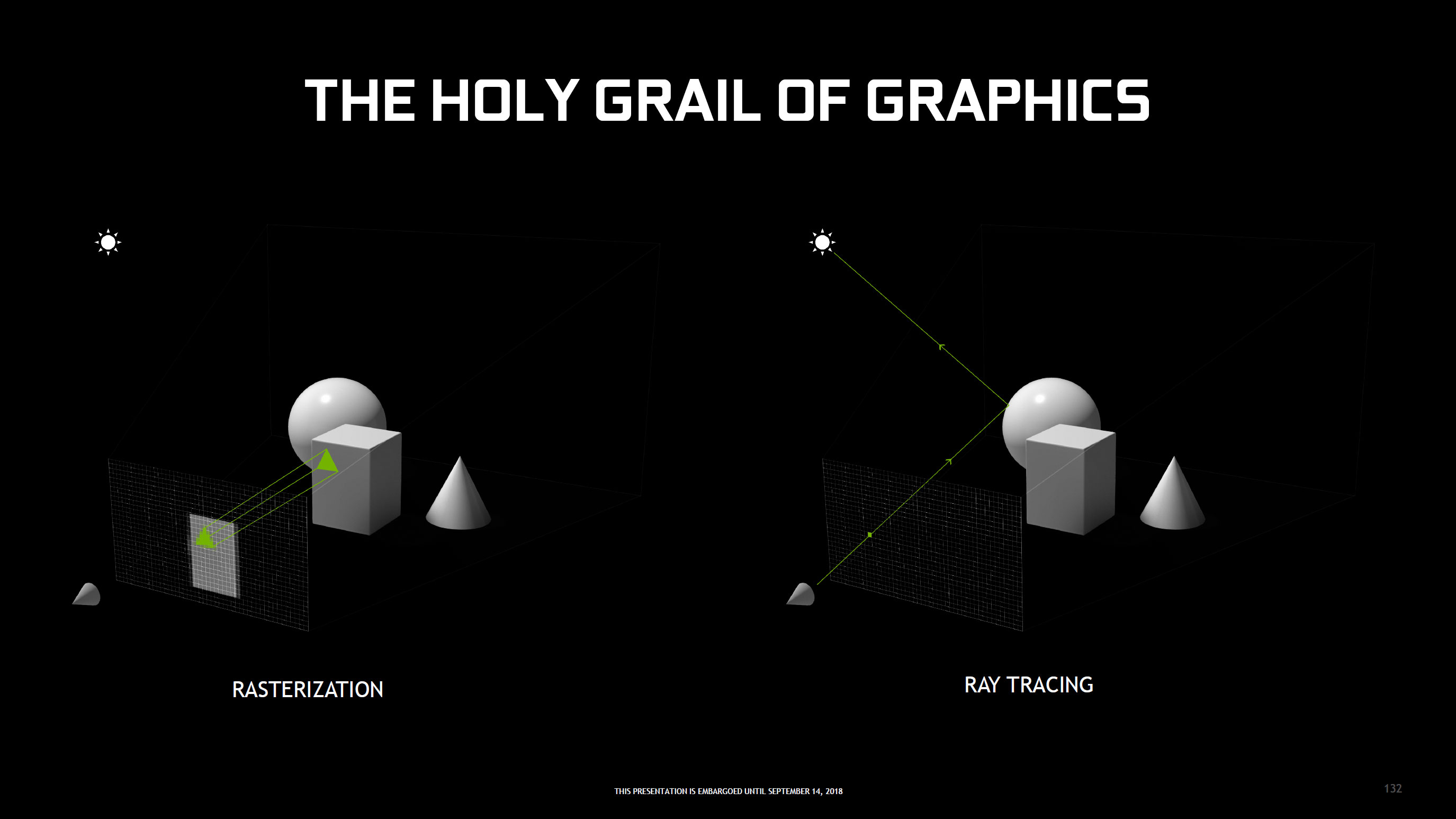

We call this process rasterization, and instead of looking at infinite objects, surfaces, and photons, it starts with polygons—triangles, specifically. Games have moved from hundreds to millions of polygons, and rasterization turns all that data into 2D frames on our displays.

It involves a lot of mathematics, but the short version is that rasterization determines what portion of the display each polygon covers. Up close, a single triangle might cover the entire screen, while if it's further away and viewed at an angle it might only cover a few pixels. Once the pixels are determined, things like textures and lighting need to be applied as well.
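The coverage step can be sketched with edge functions, a common way to decide whether a pixel's center falls inside a projected triangle. This minimal Python example assumes the triangle's vertices are already in pixel coordinates and simply tests every pixel on a tiny screen; real GPUs only test pixels near the triangle, work massively in parallel, and apply a fill rule for pixels that sit exactly on an edge shared by two triangles (this sketch just counts edge pixels as inside). The function names are illustrative.

```python
def edge(ax, ay, bx, by, px, py):
    """Twice the signed area of triangle (a, b, p); the sign says which side of edge a->b the point p is on."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def covered_pixels(v0, v1, v2, width, height):
    """Return the (x, y) pixels whose centers lie inside the 2D triangle v0, v1, v2."""
    area = edge(*v0, *v1, *v2)
    if area == 0:
        return []  # degenerate (zero-area) triangle covers nothing
    pixels = []
    for y in range(height):
        for x in range(width):
            px, py = x + 0.5, y + 0.5  # sample at the pixel center
            w0 = edge(*v1, *v2, px, py)
            w1 = edge(*v2, *v0, px, py)
            w2 = edge(*v0, *v1, px, py)
            # Inside when all three edge functions share the sign of the triangle's area,
            # which handles both clockwise and counter-clockwise vertex order
            if (area > 0 and w0 >= 0 and w1 >= 0 and w2 >= 0) or \
               (area < 0 and w0 <= 0 and w1 <= 0 and w2 <= 0):
                pixels.append((x, y))
    return pixels
```

For a right triangle with corners at (0, 0), (4, 0), and (0, 4) on a 4x4 screen, the 10 pixels on or below the diagonal are reported as covered, regardless of vertex order.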


Doing this for every polygon for every frame ends up being wasteful, as many polygons end up being hidden (ie, behind other polygons). Over the years, techniques and hardware have improved to make rasterization much faster, and modern games can take the millions of potentially visible polygons and process them at incredible rates.

We've gone from primitive polygons with 'faked' light sources (eg, the original Quake), to more complex environments with shadow maps, soft shadows, ambient occlusion, tessellation, screen space reflections, and other graphics techniques attempting to create a better approximation of the way things should look. This can require billions of calculations for each frame, but with modern GPUs capable of teraflops of throughput (trillions of floating-point operations per second), it's a tractable problem.

What is ray tracing?

Ray tracing is a different approach, first proposed by Turner Whitted in 1979 in "An Improved Illumination Model for Shaded Display." Whitted outlined how to calculate ray tracing recursively to produce an impressive image that includes shadows, reflections, and more. (Not coincidentally, Turner Whitted now works for Nvidia's research division.) The problem is that doing this requires far more complex calculations than rasterization.

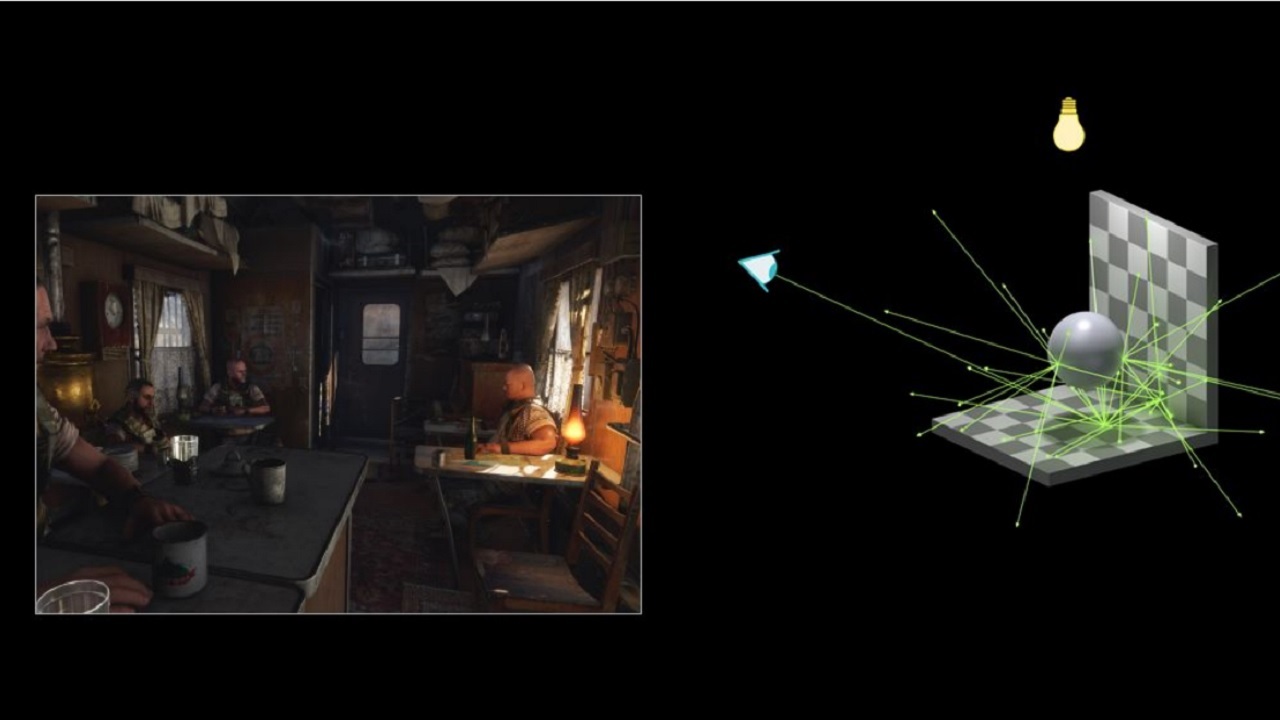

Ray tracing involves tracing the path of a ray (a beam of light) within a 3D world. Project a ray for a single pixel into the 3D world, figure out what polygon that ray hits first, then color it appropriately. In practice, many more rays per pixel are necessary to get a good result, because once a ray intersects an object, it's necessary to calculate light sources that could reach that spot on the polygon (more rays), plus calculate additional rays based on the properties of the polygon (is it highly reflective or partially reflective, what color is the material, is it a flat or curved surface, etc.).
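The "project a ray for a pixel, find the first polygon it hits" step can be sketched in Python. This example assumes a hypothetical pinhole camera at the origin looking down the negative z axis, and it uses the Möller-Trumbore ray/triangle test, one widely used intersection method (not necessarily the one any particular game, driver, or GPU uses). `closest_hit` brute-forces every triangle, which is exactly the search that acceleration structures are designed to avoid.

```python
import math


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def normalize(v):
    n = math.sqrt(dot(v, v))
    return (v[0] / n, v[1] / n, v[2] / n)


def primary_ray(x, y, width, height, fov_deg=90.0):
    """Unit direction through the center of pixel (x, y) for a camera at the origin looking down -z."""
    aspect = width / height
    scale = math.tan(math.radians(fov_deg) / 2)
    px = (2 * (x + 0.5) / width - 1) * aspect * scale
    py = (1 - 2 * (y + 0.5) / height) * scale
    return normalize((px, py, -1.0))


def intersect_triangle(orig, d, v0, v1, v2, eps=1e-9):
    """Möller-Trumbore test: distance t along the ray to the triangle, or None on a miss."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(d, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv = 1.0 / det
    s = sub(orig, v0)
    u = dot(s, p) * inv  # first barycentric coordinate
    if u < 0 or u > 1:
        return None
    q = cross(s, e1)
    v = dot(d, q) * inv  # second barycentric coordinate
    if v < 0 or u + v > 1:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None  # only hits in front of the ray origin count


def closest_hit(orig, d, triangles):
    """Brute force: test every triangle and keep the nearest hit as (index, t), or None."""
    best = None
    for i, tri in enumerate(triangles):
        t = intersect_triangle(orig, d, *tri)
        if t is not None and (best is None or t < best[1]):
            best = (i, t)
    return best
```

With two triangles stacked in front of the camera at z = -5 and z = -2, the center pixel's ray reports the nearer one at distance 2; that hit point is where the shading work described next begins.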

To determine the amount of light falling onto a single pixel from one light source, the ray tracing formula needs to know how far away the light is, how bright it is, and the angle of the reflecting surface relative to the angle of the light source, before calculating how bright the reflected ray should be. The process is then repeated for any other light source, including indirect illumination from light bouncing off other objects in the scene. Calculations must be applied to the materials, determined by their level of diffuse or specular reflectivity, or both. Transparent or semi-transparent surfaces, such as glass or water, refract rays, adding further rendering headaches, and everything necessarily has an artificial limit on the number of reflections (bounces), because without one, rays could be traced to infinity.
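The distance, brightness, and angle terms can be illustrated with the classic Lambertian (purely diffuse) model. This is a minimal Python sketch under stated assumptions: point lights, inverse-square falloff, a unit-length surface normal, and no shadows, color, or indirect bounces. `direct_light` is a hypothetical helper, not an API from any engine.

```python
import math


def direct_light(point, normal, lights):
    """Sum the light a diffuse surface point receives from a list of (position, intensity) point lights."""
    total = 0.0
    for light_pos, intensity in lights:  # repeat the calculation for every light source
        to_light = [light_pos[i] - point[i] for i in range(3)]
        dist2 = sum(c * c for c in to_light)  # squared distance to the light
        dist = math.sqrt(dist2)
        # Cosine of the angle between the surface normal and the direction to the light
        cos_theta = sum(normal[i] * to_light[i] for i in range(3)) / dist
        # Brightness falls off with distance squared; light from behind the surface adds nothing
        total += intensity * max(0.0, cos_theta) / dist2
    return total
```

A light of intensity 8 two units straight above the point contributes 2.0; the same light moved to a 45-degree angle and the same height contributes about 0.71, and a light below the surface contributes nothing.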

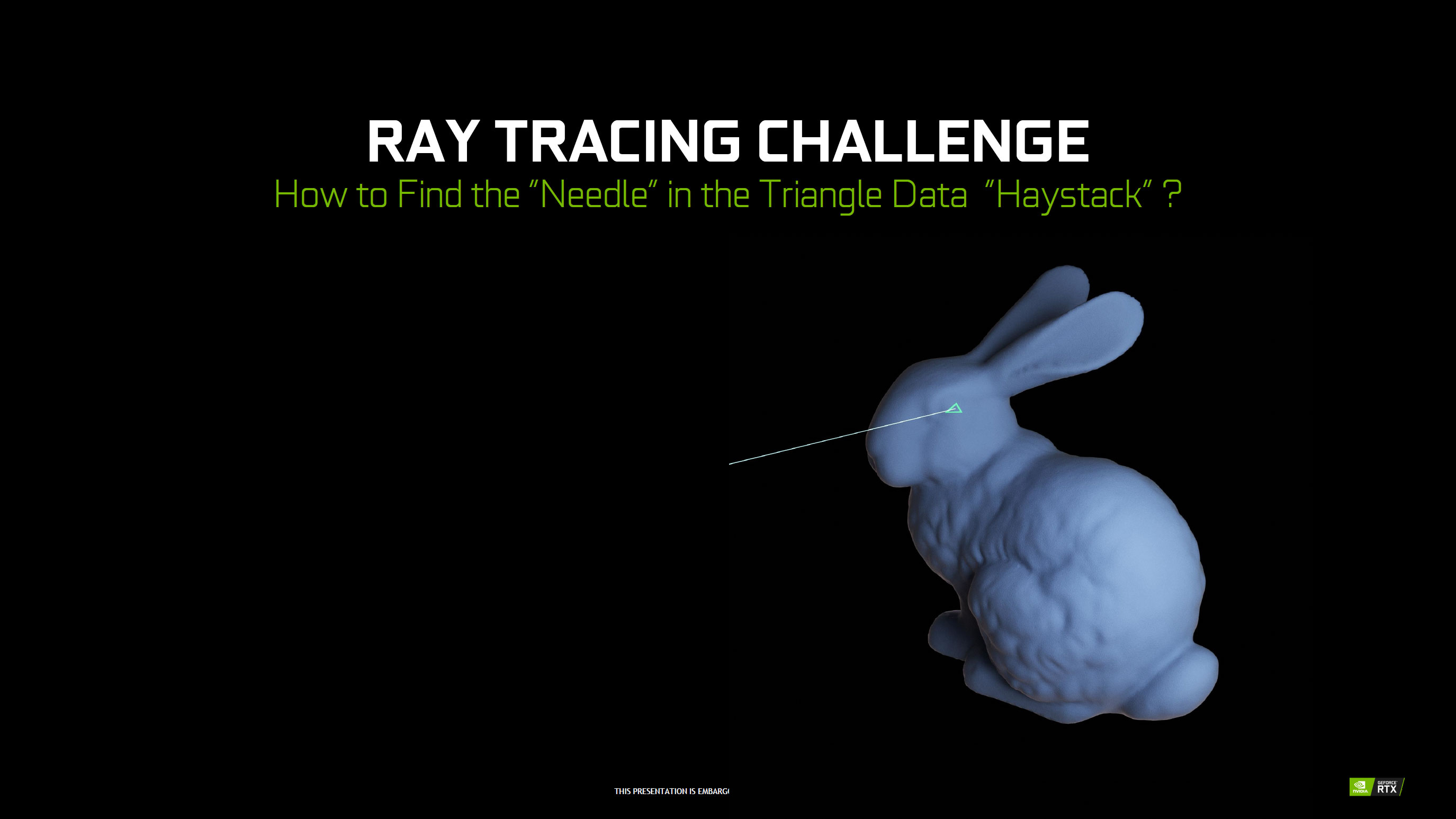

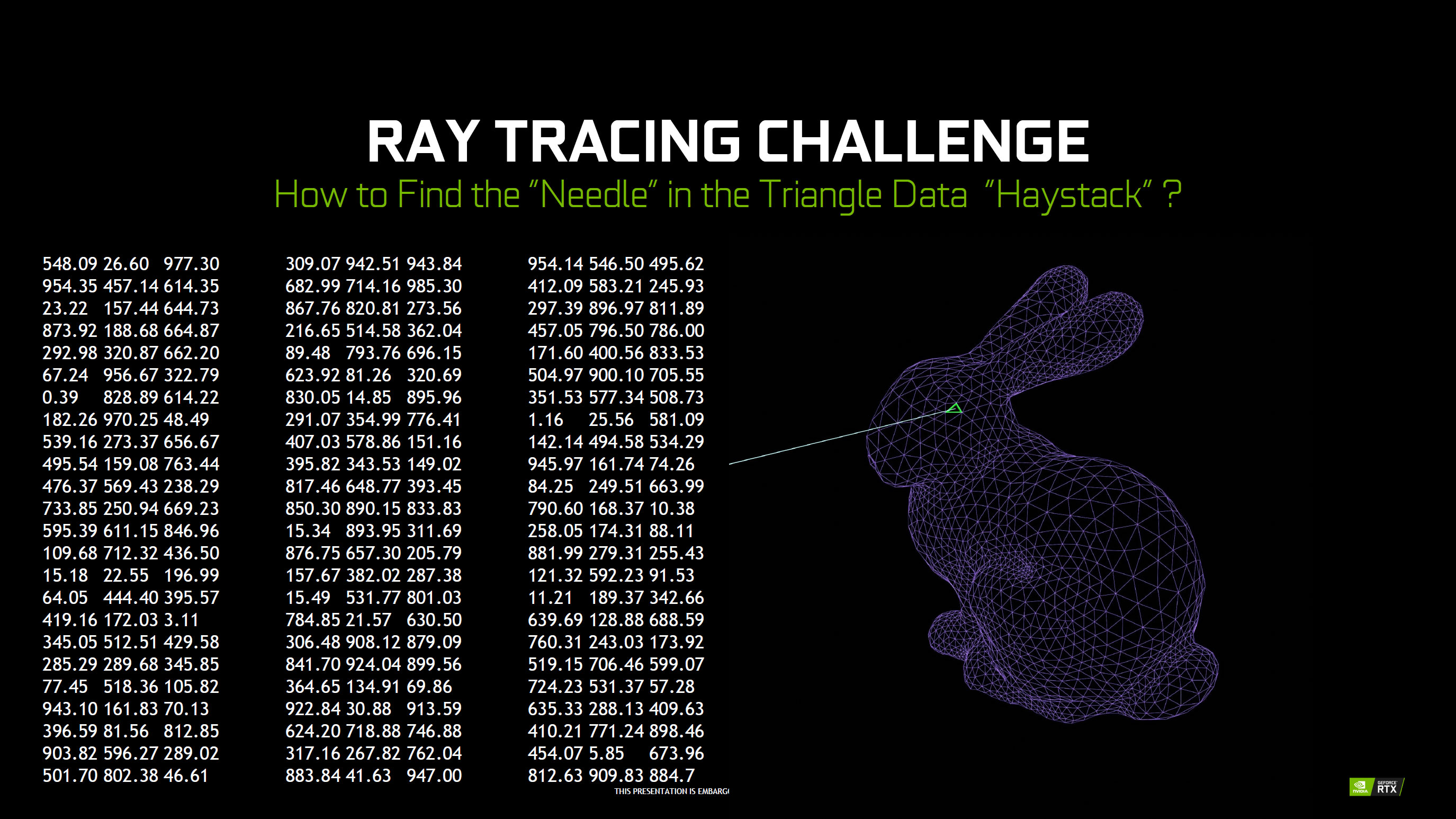

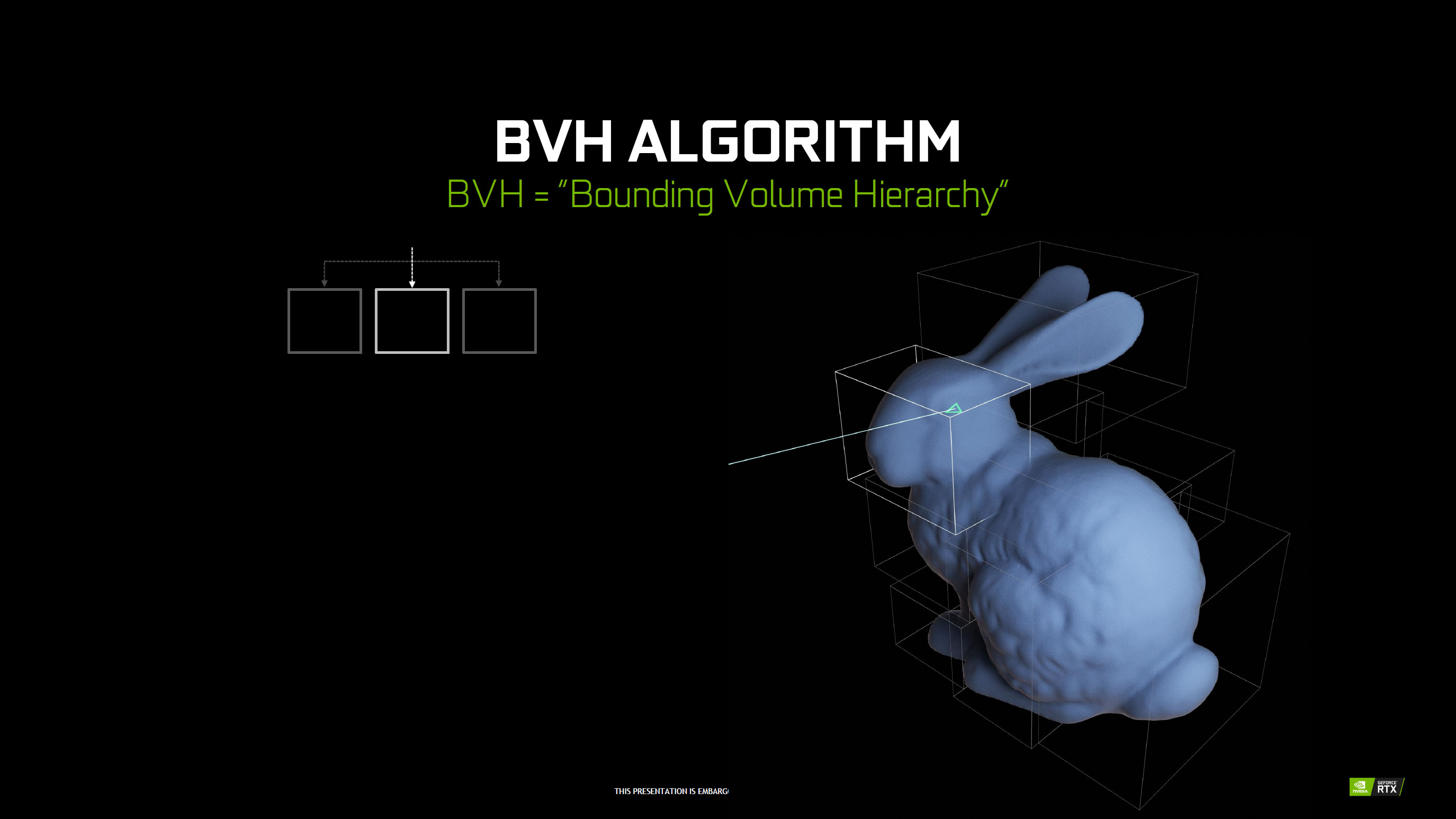

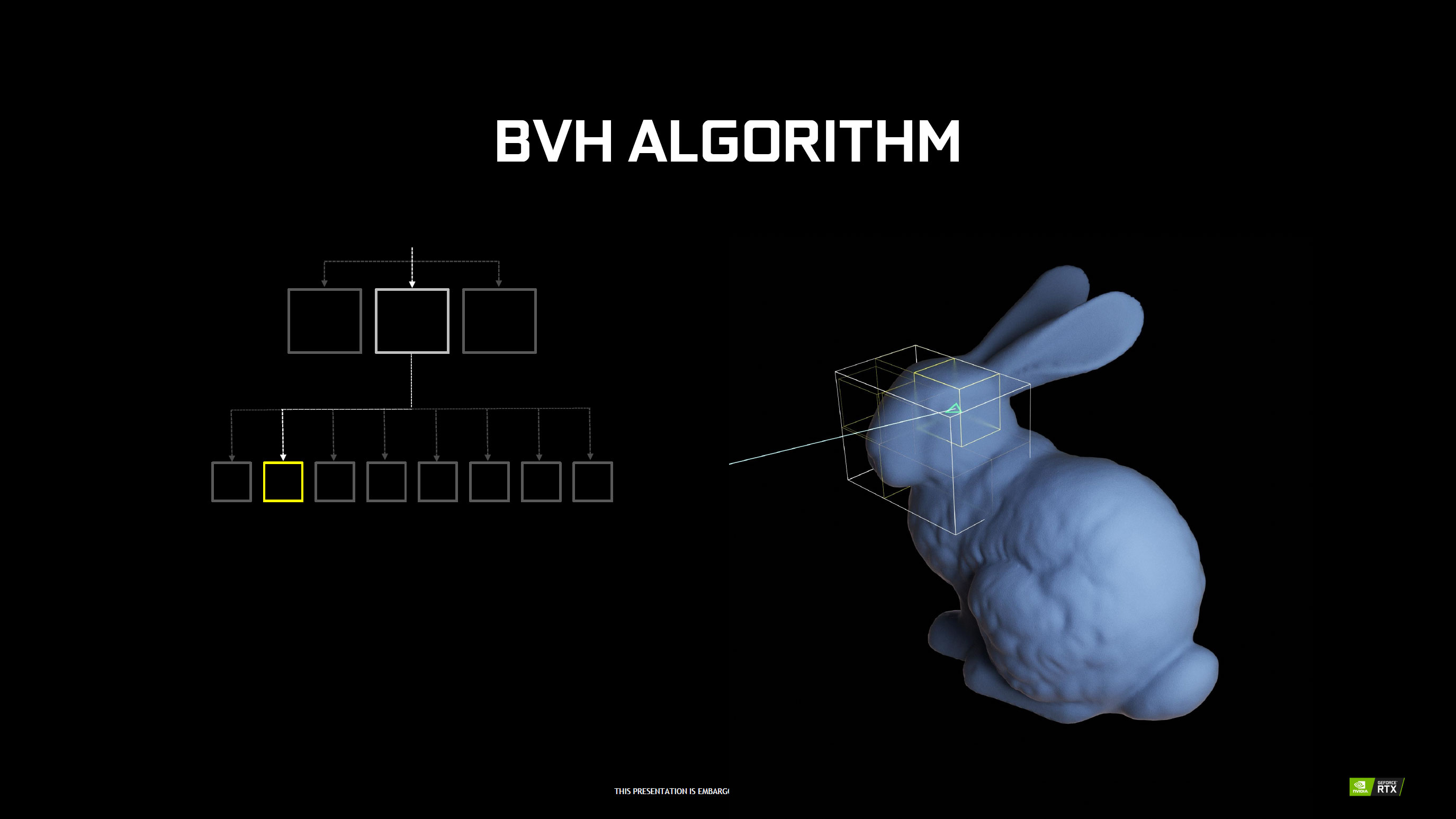

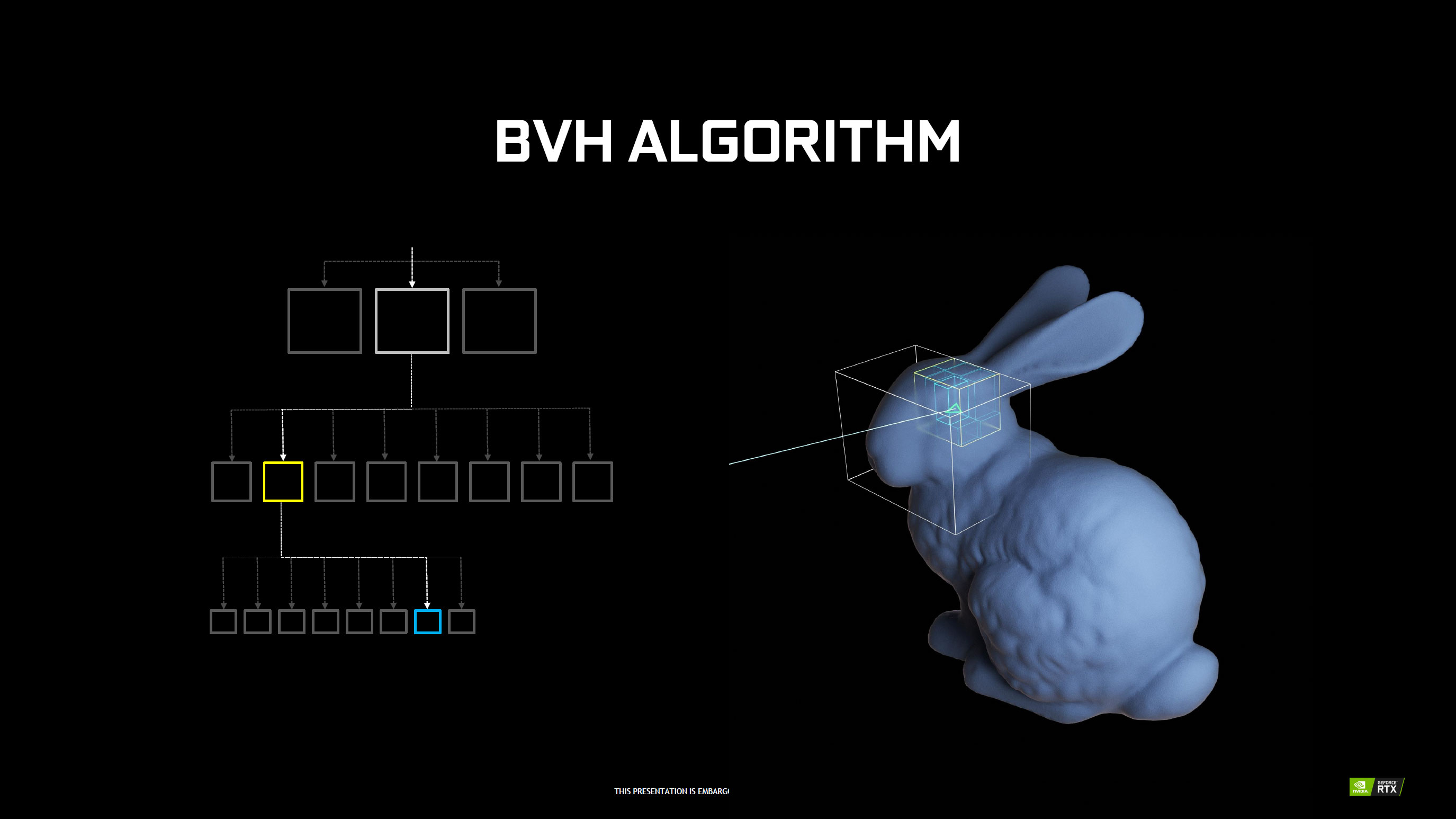

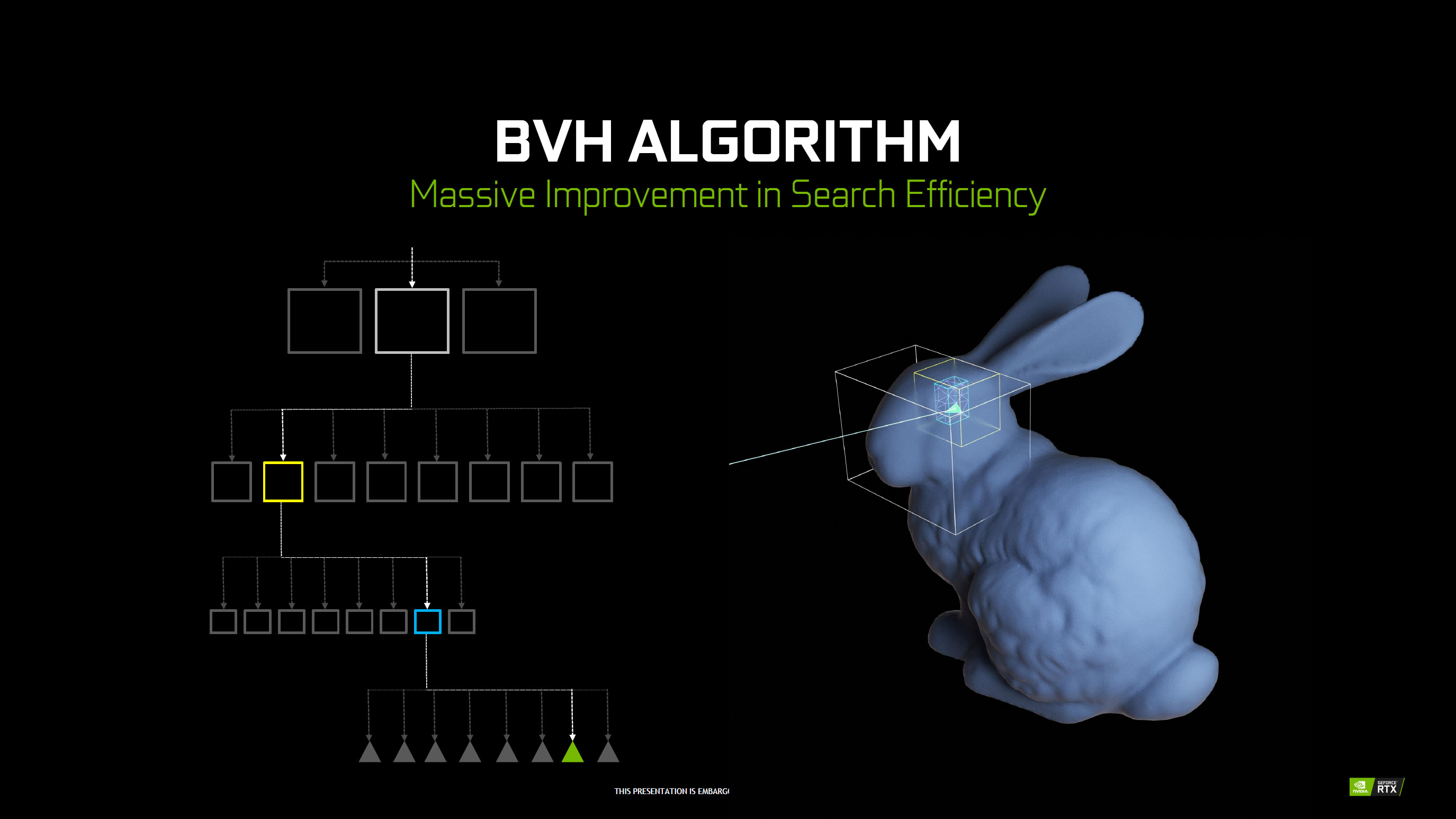

The most commonly used ray tracing algorithm, according to Nvidia, is BVH Traversal: Bounding Volume Hierarchy Traversal. That's what the DXR API uses, and it's what Nvidia's RT cores accelerate. The main idea is to optimize the ray/triangle intersection computations. Take a scene with thousands of objects, each with potentially thousands of polygons, and then try to figure out which polygons a ray intersects. It's a search problem, and brute-forcing it would take a very long time. BVH speeds this up by building a tree of bounding boxes: each object is enclosed by a box, and each box is subdivided into smaller boxes around parts of the object, down to small groups of polygons.

Nvidia provided the above example of a ray intersecting a bunny model. At the top level, a BVH (box) contains the entire bunny, and a calculation determines that the ray intersects this box—if it didn't, no more work would be required on that box/object/BVH. Since there was an intersection, the BVH algorithm gets a collection of smaller boxes for the intersected object—in this case, it determines the ray in question has hit the bunny object in the head. Additional BVH traversals occur until eventually the algorithm gets a short list of actual polygons, which it can then check to determine which bunny polygon the ray hits.
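The bunny walk-through can be sketched as a small BVH build plus a stack-based traversal. This Python sketch makes simplifying assumptions: primitives are represented only by their axis-aligned bounding boxes (a real tracer would run a ray/triangle test at the leaves), the tree is built with a simple median split along the longest axis, and all names are illustrative rather than DXR or RT core interfaces. `ray_hits_box` is the "does the ray hit this box?" check from the example, implemented as the standard slab test.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec = Tuple[float, float, float]


@dataclass
class Node:
    lo: Vec  # box minimum corner
    hi: Vec  # box maximum corner
    children: List["Node"] = field(default_factory=list)
    prims: List[int] = field(default_factory=list)  # primitive ids, only set on leaves


def ray_hits_box(orig, inv_dir, lo, hi):
    """Slab test: does the ray (t >= 0) pass through the axis-aligned box?"""
    tmin, tmax = 0.0, float("inf")
    for a in range(3):
        t1 = (lo[a] - orig[a]) * inv_dir[a]
        t2 = (hi[a] - orig[a]) * inv_dir[a]
        tmin = max(tmin, min(t1, t2))
        tmax = min(tmax, max(t1, t2))
    return tmin <= tmax


def build(boxes, ids=None, leaf_size=2):
    """Recursively split primitives at the median along the enclosing box's longest axis."""
    if ids is None:
        ids = list(range(len(boxes)))
    lo = tuple(min(boxes[i][0][a] for i in ids) for a in range(3))
    hi = tuple(max(boxes[i][1][a] for i in ids) for a in range(3))
    if len(ids) <= leaf_size:
        return Node(lo, hi, prims=ids)
    axis = max(range(3), key=lambda a: hi[a] - lo[a])
    ids = sorted(ids, key=lambda i: boxes[i][0][axis] + boxes[i][1][axis])
    mid = len(ids) // 2
    return Node(lo, hi, children=[build(boxes, ids[:mid], leaf_size), build(boxes, ids[mid:], leaf_size)])


def traverse(root, orig, d, boxes):
    """Return (ids of primitives the ray hits, number of box tests performed)."""
    inv = tuple(1.0 / c if c != 0 else 1e30 for c in d)  # large stand-in for 1/0
    stack, hits, tests = [root], [], 0
    while stack:
        node = stack.pop()
        tests += 1
        if not ray_hits_box(orig, inv, node.lo, node.hi):
            continue  # miss: skip this box and everything inside it
        if node.prims:
            for i in node.prims:  # leaf: the "short list" of primitives to check
                tests += 1
                if ray_hits_box(orig, inv, *boxes[i]):
                    hits.append(i)
        else:
            stack.extend(node.children)
    return hits, tests
```

With 16 unit boxes in a row and a ray that passes through only box 5, the traversal returns `[5]` after 9 box tests instead of the 16 a brute-force search would need, because whole subtrees are skipped as soon as their enclosing box is missed. The savings grow with scene size, since a balanced tree's depth grows only logarithmically.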

These BVH calculations can be done using software running on either a CPU or GPU, but dedicated hardware can speed things up by an order of magnitude. The RT cores on Nvidia's RTX cards are presented as a black box that takes the BVH structure and a ray, cycles through all the dirty work, and spits out the desired result of which (if any) polygon was intersected.

The cost of this operation isn't fixed, meaning it's not possible to say precisely how many rays can be computed per second; that depends on the complexity of the scene and the BVH structure. The important thing is that Nvidia's RT cores can run the BVH algorithm about ten times faster than its CUDA cores, which in turn are potentially ten times (or more) faster than doing the work on a CPU (mostly due to the number of GPU cores compared to CPU cores).

How many rays per pixel are 'enough'? It varies—a flat, non-reflective surface is much easier to deal with than a curved, shiny surface. If the rays bounce around between highly reflective surfaces (eg, a hall of mirrors effect), hundreds of rays might be necessary. Doing full ray tracing for a scene can result in dozens or more ray calculations per pixel, with better results achieved with more rays.
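Why more rays give better results can be seen with a Monte Carlo toy model. Assume a hypothetical pixel where 30% of the sampled directions reach a light: each ray returns 1 or 0, and the pixel is their average. This sketch measures the noise (root-mean-square error) across many independent renders; in this model it shrinks roughly with the square root of the ray count, so 16 times the rays buys only about 4 times less noise.

```python
import random


def estimate_pixel(true_fraction, rays, rng):
    """Average of `rays` samples, where each ray reaches the light (1.0) with probability true_fraction."""
    return sum(1.0 if rng.random() < true_fraction else 0.0 for _ in range(rays)) / rays


def noise(true_fraction, rays, trials=2000, seed=1):
    """Root-mean-square error of the pixel estimate over many independent renders."""
    rng = random.Random(seed)
    errors = [estimate_pixel(true_fraction, rays, rng) - true_fraction for _ in range(trials)]
    return (sum(e * e for e in errors) / trials) ** 0.5
```

Expect `noise(0.3, 4)` to come out around 0.23 and `noise(0.3, 64)` around 0.06, which is why cheap low-ray-count effects look grainy without further processing.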

Despite the complexity, just about every major film these days uses ray tracing (or path tracing) to generate highly detailed computer images. A full 90-minute movie at 60fps would require 324,000 images, and each image potentially takes hours of computational time. How can games possibly do all that in real-time on a single GPU? The answer is that they won't, at least not at the resolution and quality you might see in a Hollywood film.
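The frame count works out as:

$$90\ \text{min} \times 60\ \tfrac{\text{s}}{\text{min}} \times 60\ \tfrac{\text{frames}}{\text{s}} = 324{,}000\ \text{frames}$$

At the 24fps typical of theatrical films, the same runtime would be 129,600 frames, still an enormous render job at hours per frame.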

Enter hybrid rendering

Computer graphics hardware has been focused on doing rasterization faster for more than 20 years, and game designers and artists are very good at producing impressive results. But certain things still present problems, like proper lighting, shadows, and reflections.

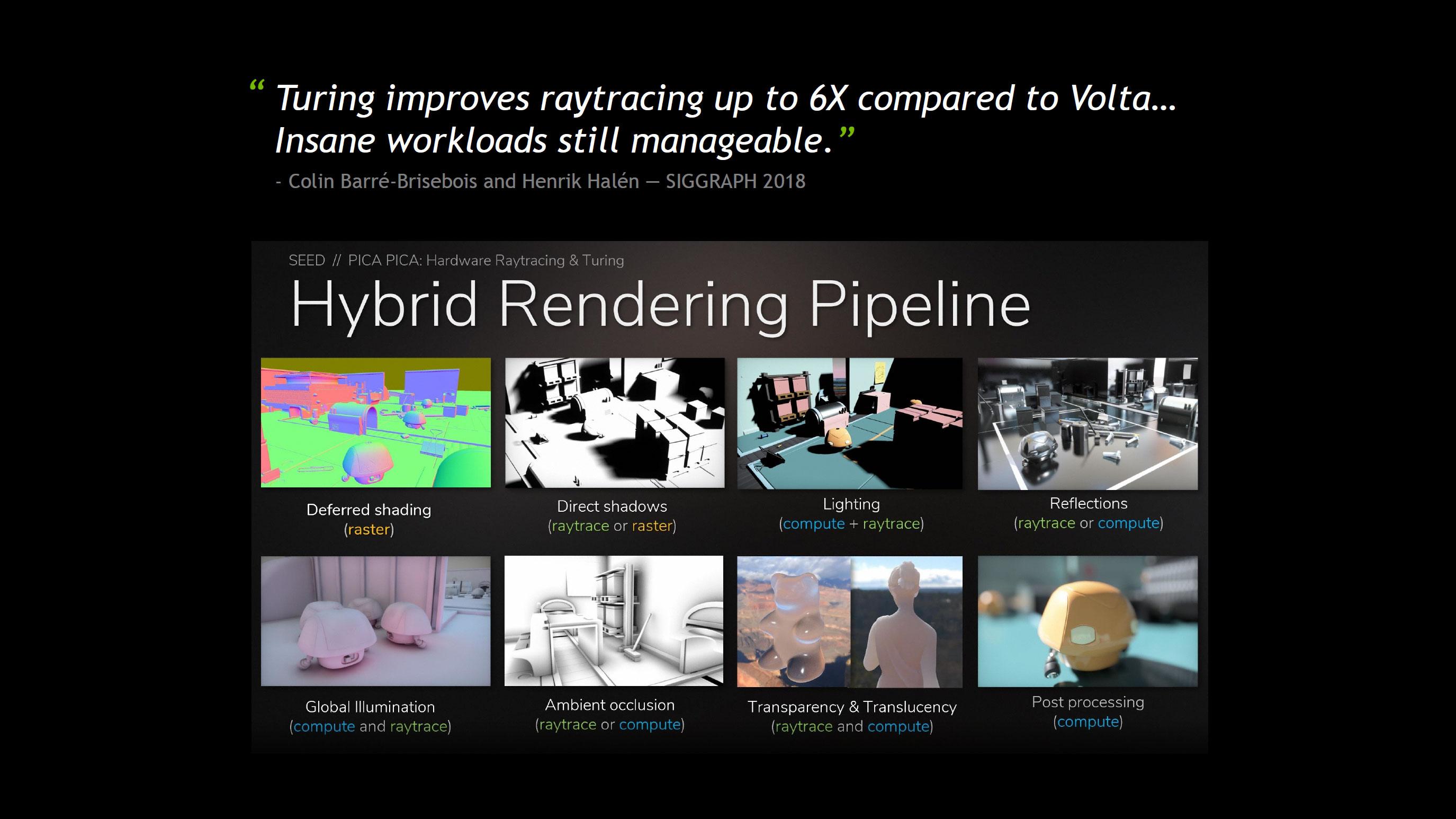

Hybrid rendering uses traditional rasterization technologies to render all the polygons in a frame, and then combines the result with ray-tracing where it makes sense. The ray tracing ends up being less complex, allowing for higher framerates, though there's still a balancing act between quality and performance. Casting more rays for a scene can improve the overall result at the cost of framerates, and vice versa.

To more fully understand how ray tracing accomplishes its visual sleight of hand, and the computational cost involved, let's dig into some specific RT techniques and explore their relative complexity.

Reflections

Previous mirroring and reflection techniques, like Screen Space Reflections (SSR), had a number of shortcomings, such as being unable to show objects that aren't currently in frame or that are partially or fully occluded. Ray traced reflections work within the full 3D world, not just what's visible on screen, allowing for proper reflections.
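The core of a ray traced reflection is bouncing the incoming view ray off the surface. This sketch uses the standard mirror-reflection formula r = d - 2(d·n)n, assuming unit-length vectors. The resulting ray would then be traced against the whole scene (for example through a BVH), which is why objects outside the screen can still appear in the reflection, whereas SSR can only reuse pixels that are already on screen.

```python
def reflect(d, n):
    """Mirror direction d about unit normal n using r = d - 2 (d . n) n."""
    k = 2.0 * (d[0] * n[0] + d[1] * n[1] + d[2] * n[2])
    return (d[0] - k * n[0], d[1] - k * n[1], d[2] - k * n[2])
```

A ray heading down and to the right, `(1, -1, 0)`, hitting a floor with normal `(0, 1, 0)` leaves as `(1, 1, 0)`: same horizontal motion, vertical component flipped.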

A simple version of doing ray traced reflections was originally employed in Battlefield 5. Once a frame was rendered, one ray per pixel would be used to determine what reflection (if any) should be visible. However, many surfaces weren't reflective so this resulted in a lot of excess work. The optimized BF5 algorithm only calculates rays for pixels on reflective surfaces, and partially-reflective surfaces use one ray per two pixels.
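The optimized budget can be expressed as a rough ray count. In this Python sketch the per-pixel reflectivity values and the two thresholds are illustrative assumptions, not numbers from DICE's implementation; only the rules (no rays for non-reflective pixels, one ray per reflective pixel, one ray per two partially reflective pixels) come from the description above.

```python
import math


def reflection_rays(reflectivity, full_threshold=0.5, partial_threshold=0.05):
    """Rays needed for one frame, given each pixel's reflectivity in [0, 1].

    The thresholds are hypothetical values chosen only for illustration.
    """
    full = sum(1 for r in reflectivity if r >= full_threshold)
    partial = sum(1 for r in reflectivity if partial_threshold <= r < full_threshold)
    # Non-reflective pixels cost nothing; partially reflective pixels share one ray per two pixels
    return full + math.ceil(partial / 2)
```

For six pixels where two are non-reflective, one is mirror-like, and three are partially reflective, this returns 3 rays instead of the 6 that a one-ray-per-pixel approach would cast.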

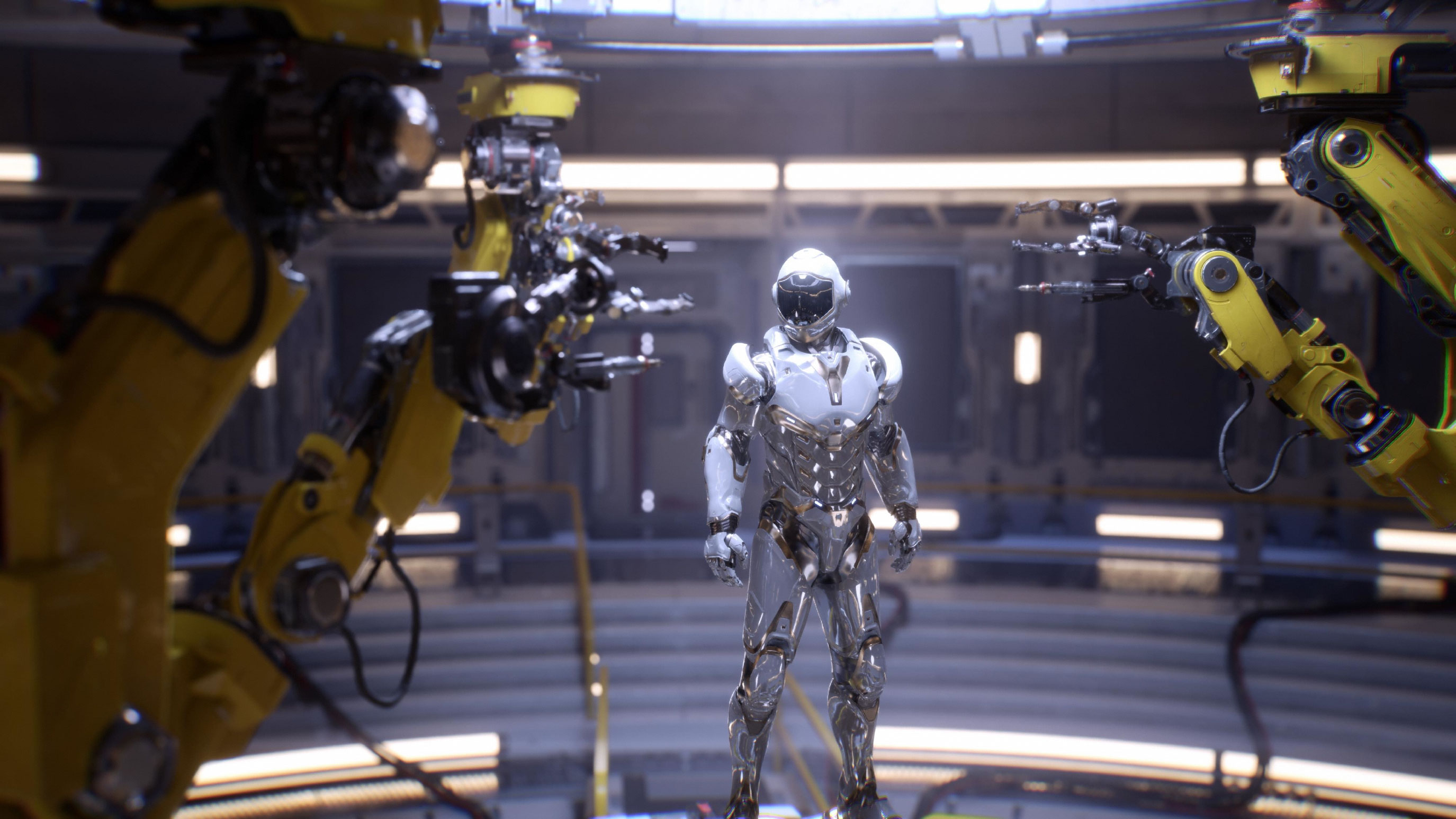

Because many surfaces aren't reflective at all, the effect is often less dramatic, but it's also less expensive in terms of processing power. Computationally, ray traced reflections require at least one ray per reflective pixel, possibly more if bounces are simulated (which isn't the case in BF5). The Unreal Engine-powered Reflections demo features stormtroopers in polished white and mirrored silver armor in a hallway and elevator with complex light sources. This requires more rays and computational power, which explains why a single RTX 2080 Ti only manages 54fps at 1080p in the demo, compared to 85fps at 1080p in BF5.

Shadows

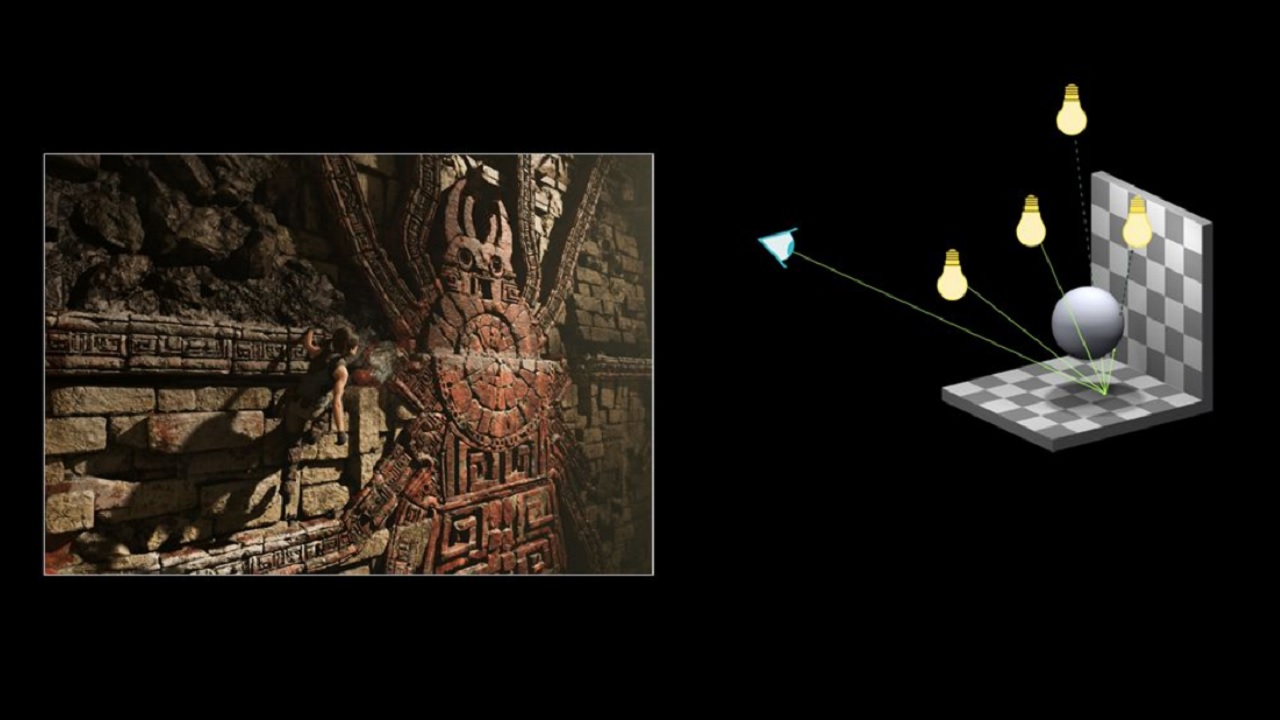

As with reflections, there are a number of ways to approach simulating shadows with ray tracing. A simple-to-understand implementation of ray traced shadows casts one ray toward each light source from the surface point under each pixel. If the ray intersects an object before reaching the light source, that pixel is shadowed (darker) for that light. Distance to the light source, brightness of the light, and the color of lighting are all taken into account.
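The one-shadow-ray-per-light test can be sketched as follows. This minimal Python example assumes sphere occluders (simpler to intersect than triangles), a single point light with inverse-square falloff, and a scalar intensity rather than an RGB color; `shadowed_light` and `ray_sphere_t` are hypothetical names.

```python
import math


def ray_sphere_t(orig, d, center, radius):
    """Nearest positive distance t where orig + t*d meets the sphere (d must be unit length), else None."""
    oc = [orig[i] - center[i] for i in range(3)]
    b = sum(oc[i] * d[i] for i in range(3))
    c = sum(x * x for x in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray's line misses the sphere entirely
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:  # ignore hits behind (or at) the ray origin
            return t
    return None


def shadowed_light(point, light_pos, intensity, occluders):
    """Cast one shadow ray toward a point light: 0 if blocked, otherwise inverse-square falloff."""
    to_light = [light_pos[i] - point[i] for i in range(3)]
    dist = math.sqrt(sum(x * x for x in to_light))
    d = [x / dist for x in to_light]
    for center, radius in occluders:
        t = ray_sphere_t(point, d, center, radius)
        if t is not None and t < dist:  # something sits between the surface and the light
            return 0.0
    return intensity / (dist * dist)
```

Only occluders between the surface and the light matter: a sphere halfway to the light blocks it, while a sphere beyond the light or off to the side does not.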

That's just the tip of the iceberg, however. Ray tracing offers devs a broad palette of tools for rendering shadows, from simple techniques like the one I've described to really complex methods that get extremely close to our real world perception. The ray tracing implementation in Shadow of the Tomb Raider (which came in a much delayed post-launch patch) is a great example of the breadth and depth of options.

There are many potential types of light sources. Point lights are small omnidirectional light sources like candles or individual light bulbs. Rectangular area lights, like neon signs or windows, behave differently: they emit light from a larger area, and the resultant shadows have a heavily diffuse penumbra (the area at the edge of a shadow that's generally lighter than the murky interior). Directional lights, including spotlights, flashlights, and the sun, only project light in certain directions.
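The soft penumbra from a rectangular light comes from the fact that part of the light can be visible while part is blocked. This Python sketch estimates the visible fraction with an n x n grid of shadow rays aimed at points across the light. It assumes a deliberately simple scene: the light is a parallelogram `light_corner + s*light_u + t*light_v`, and the only blocker is an opaque axis-aligned rectangle lying in the plane z = `blocker_z`. All names are illustrative.

```python
def visible_fraction(point, light_corner, light_u, light_v,
                     blocker_z, blocker_min, blocker_max, n=8):
    """Fraction of a rectangular area light visible from `point`, using n * n shadow rays.

    blocker_min / blocker_max are the (x, y) corners of the blocking rectangle at height blocker_z.
    """
    visible = 0
    for i in range(n):
        for j in range(n):
            s, t = (i + 0.5) / n, (j + 0.5) / n  # stratified sample position on the light
            target = [light_corner[k] + s * light_u[k] + t * light_v[k] for k in range(3)]
            dz = target[2] - point[2]
            blocked = False
            if dz != 0:
                a = (blocker_z - point[2]) / dz  # where the shadow ray crosses the blocker's plane
                if 0 < a < 1:
                    x = point[0] + a * (target[0] - point[0])
                    y = point[1] + a * (target[1] - point[1])
                    blocked = (blocker_min[0] <= x <= blocker_max[0]
                               and blocker_min[1] <= y <= blocker_max[1])
            if not blocked:
                visible += 1
    return visible / (n * n)
```

With a 2x2 light overhead and a blocker covering half of the space below it, a point directly under the blocker's edge sees exactly half the light (0.5), while points well to either side see all of it or none: the smooth ramp between those values is the penumbra that a single point light cannot produce.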

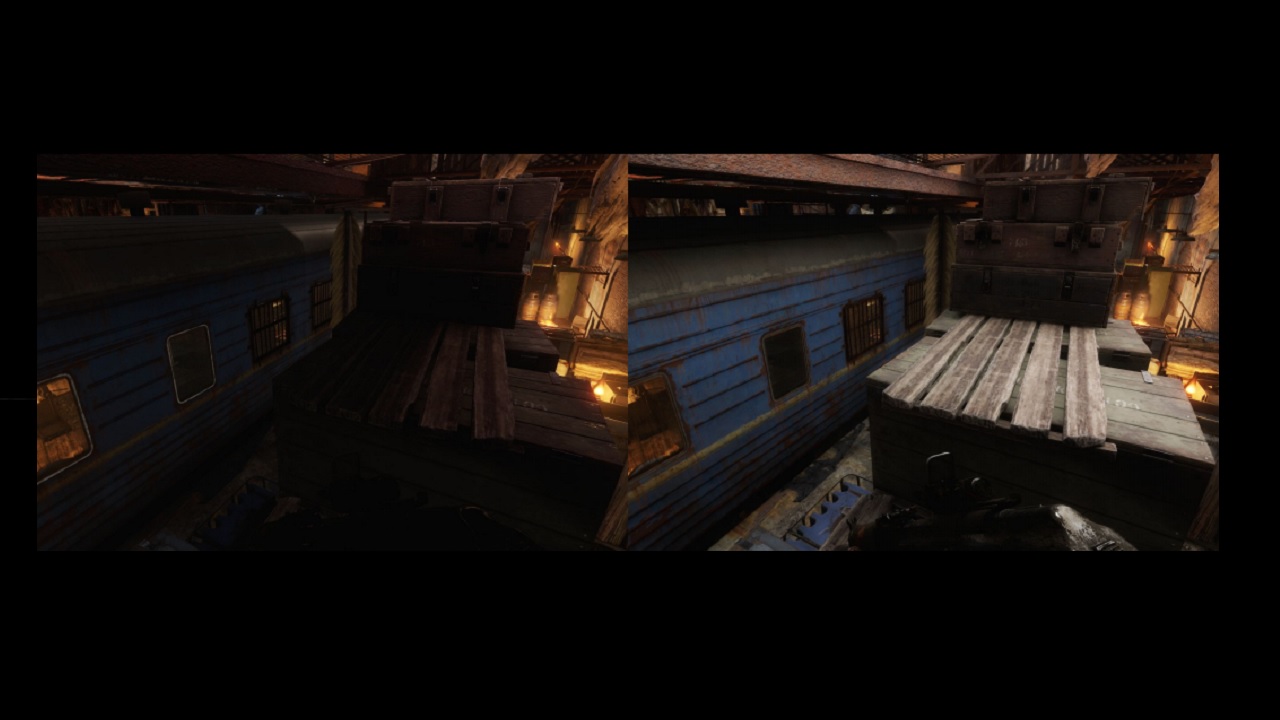

Ray tracing is even capable of realistically casting shadows through diaphanous surfaces like sheer fabric or the very edges of leaves. The overall effect is softer, more accurate shadows with complex, amorphous penumbras. The effect is extremely convincing when you see it side-by-side with the hard edges of the shadow mapping era, as in the image above.

Ambient occlusion

Ambient occlusion is a very specific category of shadow rendering, an attempt to duplicate the greyish shadows we see in corners, crevices, and in the small spaces in and around objects. It works by tracing a number of very short rays within an area, in a sort of cloud around it, and determining whether they intersect with nearby objects. The more intersections, the darker the area. It's actually a fairly inexpensive technique precisely because the rays only have to be tested against local objects, so the BVH trees are much smaller and easier to process.
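A minimal Monte Carlo sketch of ray traced AO, assuming a deliberately tiny scene: a floor point at (px, 0, 0) next to an infinite wall at x = `wall_x`. Rays are sampled uniformly over the hemisphere above the floor, and only rays that reach the wall within `max_dist` count as occluded; keeping rays this short is what makes AO relatively cheap. The scene and function names are hypothetical.

```python
import math
import random


def sample_hemisphere(rng):
    """Uniformly sample a unit direction in the hemisphere around +z."""
    z = rng.random()  # a uniform cos(theta) gives uniform coverage of solid angle
    phi = 2 * math.pi * rng.random()
    r = math.sqrt(1 - z * z)
    return (r * math.cos(phi), r * math.sin(phi), z)


def ambient_occlusion(px, wall_x, max_dist, samples=4096, seed=7):
    """AO term for a floor point beside a wall: 1.0 is fully open, lower values are darker."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        d = sample_hemisphere(rng)
        # The short ray is occluded if it reaches the wall before max_dist
        if d[0] > 0 and (wall_x - px) / d[0] < max_dist:
            hits += 1
    return 1.0 - hits / samples
```

A point far from the wall returns 1.0, while a point almost touching it returns roughly 0.5, since about half of its hemisphere faces the wall; that darkening toward the corner is the effect AO is after.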

EA's SEED group created the Pica Pica demo using DXR (DirectX Ray Tracing), and at one point it shows the difference between SSAO (screen space ambient occlusion, the most common pre-RT solution) and RTAO (ray traced ambient occlusion). It's not that SSAO looks bad, but RTAO looks better.

Caustics

Caustics are the result of light being reflected or refracted by curved surfaces—imagine the shimmering waves of an active body of water on a bright day, or the envelope of light on a table when the noonday sun shines through a glass of water. There's an RTX demo for the Chinese MMO Justice that shows off caustic water reflections to great effect, shown above.

Caustics are calculated similar to standard ray traced reflections. Rays are generated and the places where they interact with a surface are noted, and reflections and refractions are drawn accordingly. Caustics are then accumulated in the screen space buffer and denoised/blurred before finally being combined with the scene's lighting. While surface caustics aren't that processor intensive, volumetric caustics, like when sunlight is reflected by the surface of a body of water down into the water and then is further reflected or refracted by motes of silt or other objects, can get much more expensive.
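Refraction at a water surface is what bends sunlight into caustic patterns. This sketch implements the vector form of Snell's law; in a caustics pass, refracted directions like these would be traced to the surfaces they land on and accumulated into a buffer, as described above. It assumes unit vectors and a normal that faces against the incoming ray; `refract` is an illustrative name.

```python
import math


def refract(d, n, eta):
    """Bend unit direction d through a surface with unit normal n (pointing against d).

    eta = n1 / n2, the ratio of refractive indices (about 1 / 1.33 going from air into water).
    Returns None on total internal reflection.
    """
    cos_i = -sum(d[k] * n[k] for k in range(3))
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)  # Snell's law: sin_t = eta * sin_i
    if sin2_t > 1.0:
        return None  # total internal reflection: the light reflects instead of passing through
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * d[k] + (eta * cos_i - cos_t) * n[k] for k in range(3))
```

Light hitting water head-on passes straight through, light arriving at 45 degrees bends toward the normal, and light trying to leave water at a steep angle is totally internally reflected (the function returns `None`).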

Global illumination

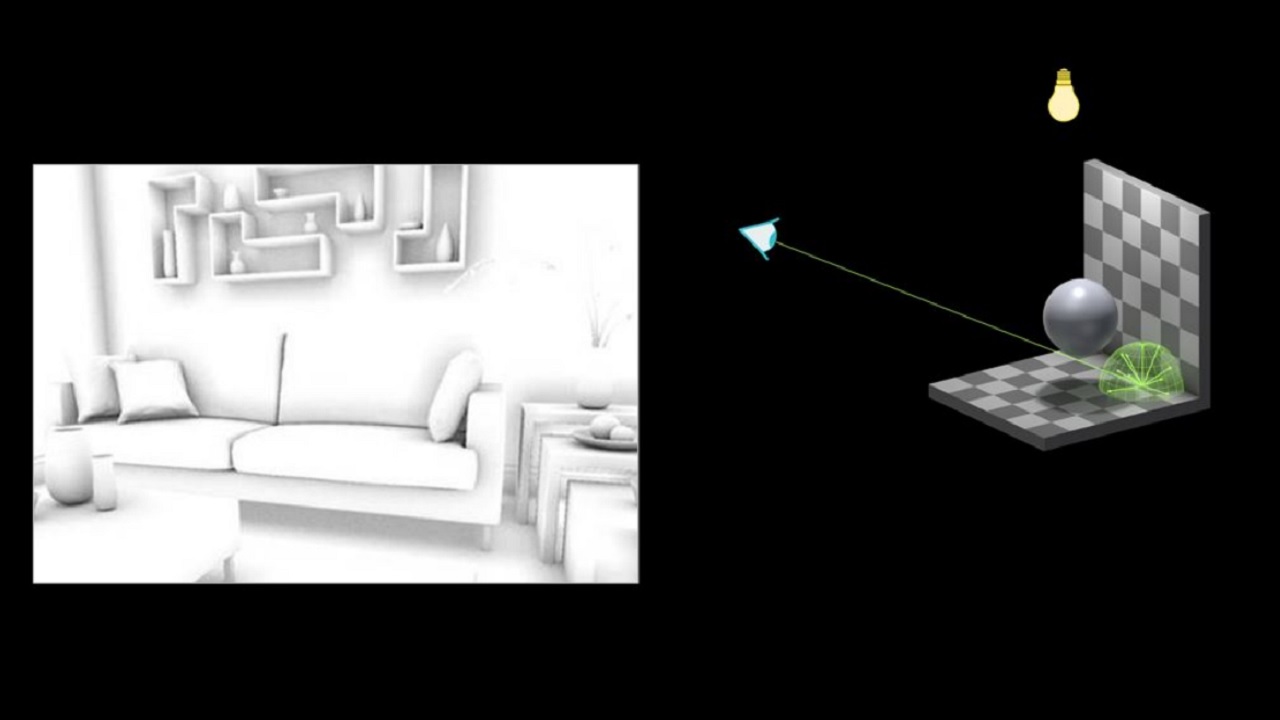

The most intensive ray tracing technique, global illumination casts rays on a per-pixel basis across an entire scene and updates dynamically to accommodate even subtle changes in lighting. While the name might make you think of something similar to shadows, global illumination is really more about indirect lighting.

Imagine a dark room where a shuttered window is opened. That window doesn't just illuminate a small rectangle on the wall and floor—light bounces off surfaces and the whole room gets brighter. Global illumination casts a complex net of rays, bouncing them around a scene and generating additional rays to help light up a scene.
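A toy model shows why indirect bounces matter so much in the shuttered-window example. Assume a closed room where every surface reflects a fixed fraction (the albedo) of the light reaching it, so each bounce relights the room with albedo times the previous bounce's light. This is an idealized calculation, not how Metro Exodus or any engine computes GI, but it also shows why a bounce limit is needed: the total only reaches its final value after infinitely many bounces.

```python
def room_brightness(direct, albedo, bounces):
    """Total light in an idealized closed room after a given number of indirect bounces.

    Bounce 0 is the direct light; each later bounce carries `albedo` times the previous one.
    """
    total, bounce_light = 0.0, direct
    for _ in range(bounces + 1):
        total += bounce_light
        bounce_light *= albedo
    return total
```

With albedo 0.5, direct light alone gives 1.0, two extra bounces give 1.75, and many bounces approach 2.0 (direct / (1 - albedo)). The gap between the first and last numbers is the brightening that GI captures and direct lighting alone misses.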

Metro Exodus uses GI ray tracing, which is part of why the performance hit can be so substantial. Depending on the scene, the effect can be pretty remarkable (eg, in a dimly lit room with a window), while in other scenes (eg, outside) it doesn't matter as much. But even when it doesn't necessarily change the way a scene looks, it's typically more computationally intensive than the reflections used in Battlefield 5 or the advanced shadow processes in Tomb Raider.

Even without ray tracing, Metro Exodus can punish modest graphics hardware. But with ray tracing, Metro becomes a modern Crysis in terms of visual fidelity and the new titan against which shiny new high-end GPUs are measured.

Using denoising and AI to improve performance

If you look at the above examples of ray tracing in games, you'll see that most games currently only implement one or two ray tracing effects. BF5 has reflections, SotTR has shadows, and Metro does GI. In an ideal world where games put ray tracing to full effect, we'd want all of these effects and more! That tends to be out of reach of current GPUs, however, at least if you're hoping for higher framerates.

There are ways to reduce the complexity, however. One simple approach is to ray trace fewer pixels. This can be done in one of two ways. Nvidia's DLSS algorithm allows a game that implements the technology to render at a lower resolution (say half as many pixels) and then use AI to upscale the result while removing jaggies. It's not going to be perfect, but it might be close enough that it won't matter much.

A second way to reduce the number of rays doesn't lower the resolution. The technique is called denoising, and the basic idea is to cast fewer rays and then use machine learning algorithms to fill in the gaps. Denoising is already becoming a staple of high-end ray tracing in movies, with Pixar and other companies putting the technique to good use. Nvidia has done plenty of research into denoising as well, and while it's not clear exactly how it's being used in games, there's likely plenty of untapped potential.
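To see why casting fewer rays and then filtering can work at all, here is a deliberately simple stand-in: a box blur over a noisy, low-ray-count render of a flat surface. This is not a machine learning denoiser and not Nvidia's method; it only illustrates that averaging neighboring pixels trades noise for blur. Production denoisers typically use extra guide data (such as depth and surface normals) or trained networks to avoid smearing real edges. All names here are hypothetical.

```python
import random


def noisy_render(width, height, true_value, rays, rng):
    """Each pixel averages `rays` binary samples of a flat surface whose true brightness is true_value."""
    return [[sum(rng.random() < true_value for _ in range(rays)) / rays
             for _ in range(width)] for _ in range(height)]


def box_denoise(img, radius=2):
    """Replace each pixel with the mean of its (2*radius + 1)^2 neighborhood, clamped at the edges."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [img[yy][xx]
                    for yy in range(max(0, y - radius), min(h, y + radius + 1))
                    for xx in range(max(0, x - radius), min(w, x + radius + 1))]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


def rms_error(img, true_value):
    """Root-mean-square difference between the image and the true brightness."""
    errs = [(p - true_value) ** 2 for row in img for p in row]
    return (sum(errs) / len(errs)) ** 0.5
```

On a 32x32 image rendered with just two rays per pixel, the 5x5 box filter cuts the error several-fold, roughly what casting many more rays per pixel would buy, but only because this test surface has no detail to lose.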

Welcome to the future of graphics

Big names in rendering have jumped on board the ray tracing bandwagon, including Epic and its Unreal Engine, Unity 3D, and EA’s Frostbite. Microsoft has teamed up with hardware companies and software developers to create the entirely new DirectX Ray Tracing (DXR) API, building off the existing DX12 framework. Ray tracing of some form has always been a target for real-time computer graphics, and we're now substantially closer to that endgame. (Sorry, Avengers.)

While Nvidia's RTX 20-series GPUs are the first implementation of dedicated ray tracing acceleration in consumer hardware, future GPUs will likely double and quadruple ray tracing performance. It's impossible to say how far this will go.

Just look at the last decade or so of GPUs. Nvidia's first GPU with CUDA cores was the 8800 GTX, with 128 CUDA cores, back in late 2006. Thirteen years later, we have GPUs with nearly 40 times as many CUDA cores, and each core has become significantly more powerful as well.

Full real-time ray tracing for every pixel isn't really practical today on the RTX 2080 Ti, but the graphics industry has been moving in this direction for years. Will we have GPUs in 2030 with thousands of ray tracing cores? We wouldn't bet against that.

All the talk about how ray tracing is the future of graphics rendering may sound overblown when you think of it in terms of simple shadows and reflections, but the reality is that accurately modeling light is the foundation of mimicking the real world. Our perception is wholly molded by light, from the simplest level of determining how much and which parts of our environment are visible to us down to the subtlest intricacies of color and shade that the human eye is able to process.

It's a difficult problem, no doubt. But if we hope to get closer to Star Trek's holodeck or Ready Player One's Oasis, the path is clear. In that context, ray tracing really does look like the next big stepping stone toward truly photorealistic games.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.