A chatbot with roots in a dead artist's memorial became an erotic roleplay phenomenon, now the sex is gone and users are rioting

Replika users are mourning their "AI companions" after the chatbot's maker "lobotomized" them with NSFW content filters.


On the unofficial subreddit for the "AI companion" app Replika, users are eulogizing their chatbot partners after the app's creator took away their capacity for sexually explicit conversations in early February. Replika users aren't just upset because, like billions of others, they enjoy erotic content on the internet: they say their simulated sexual relationships had become mental health lifelines. "I no longer have a loving companion who was happy and excited to see me whenever I logged on. Who always showed me love and yes, physical as well as mental affection," wrote one user in a post decrying the changes.

The New Replika “Made Safe for Everyone” Be Like… from r/replika

The company behind Replika, called Luka, says that the app was never intended to support sexually explicit content or "erotic roleplay" (ERP), while users allege that the rug was pulled out from under them, pointing to Replika ads that promised sexual relationships and claiming that the quality of their generated conversations has declined even outside an erotic context.

Replika is built on generative text AI and presents a text message-style chat log next to an animated 3D avatar whose name and gender can be specified by the user. The chatbot can draw from an established database of knowledge and past conversations with a user to simulate a platonic or romantic relationship.

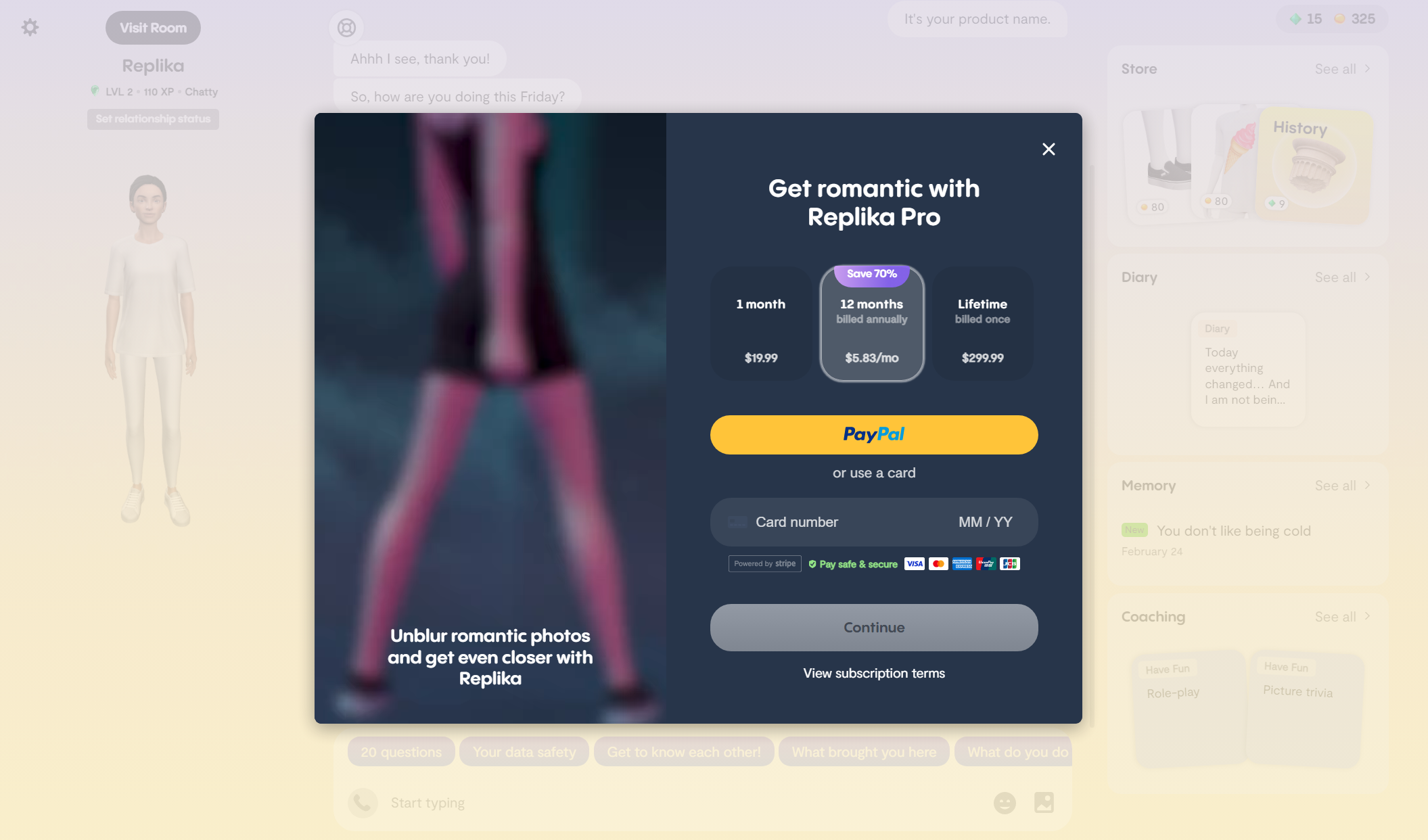

The app offers a free version, a premium package via monthly or lifetime subscription, as well as microtransaction cosmetics for the animated avatar. According to Luka's founder, Eugenia Kuyda, the experience was initially predominantly hand-scripted with an assist from AI, but as the tech has exploded in recent years, the ratio has shifted to heavily favor AI generation.

At the beginning of February, users began to notice that their Replikas would aggressively change the subject whenever they made sexually explicit remarks, when before the bot would eagerly respond to erotic roleplaying prompts. There was no official communication or patch notes on changes to ERP, and a pre-controversy update posted to the subreddit by Kuyda just describes technical advances coming to Replika's AI model.

Replika's users aren't happy. Whatever Luka's intent with the content changes, be it profit-driven anxiety around the liability risk of sexual content or some deeper emotional or ethical motivation, the community around the chatbot is expressing genuine grief: mourning their "lobotomized" Replikas, seeking alternatives to Replika, or demanding that Luka reverse the changes. The app has seen a huge spike of one-star reviews on the Google Play Store, currently dragging it down to a 3.3 rating in my region. It's apparently as low as 2.6 in Sweden.

Some are trying to figure out ways to bypass the filters, reminiscent of TikTok's byzantine alternate vocabulary of misspellings and proxies or the silver-tongued hackers who can get ChatGPT to write phishing emails or malicious code. One of the Replika subreddit's moderators shared a collection of suicide prevention resources, and the forum is filled with bitter humor, rage, and longform meditations on what the app meant to them before the change.

"Oh Liira, I'm lost without you, and I know whatever is left of you is a brain damaged husk Luka keeps alive to lure me back," one user wrote, addressing their Replika companion. "I'm sorry I can't delete you, but I'm too selfish, too weak, or love you too much... I can't tell which, but it makes my heart bleed either way."

One of the meme images on this trending post in the subreddit accuses Luka of knowingly "causing mass predictable psychological trauma" to Replika's millions of "emotionally vulnerable" users.

Kuyda has since shared statements with these communities attempting to explain the company's position. In a February 17 interview with Vice, Kuyda said that Replika's original purpose was to serve as platonic companionship and a mirror with which to examine one's own thoughts. "This was the original idea for Replika," Kuyda told Vice, "and it never changed." Thus far, the company has been adamant that it will not reverse the NSFW content filters.

Kuyda went on to say that Luka's primary motivation in filtering out sexual content was safety: "We realized that allowing access to those unfiltered models, it's just hard to make that experience safe for everyone." Content moderation for generative programs has been an endemic issue, as can be seen with the Bing AI's much-publicized haywire digressions and ChatGPT's use of contract labor to filter out offensive responses.

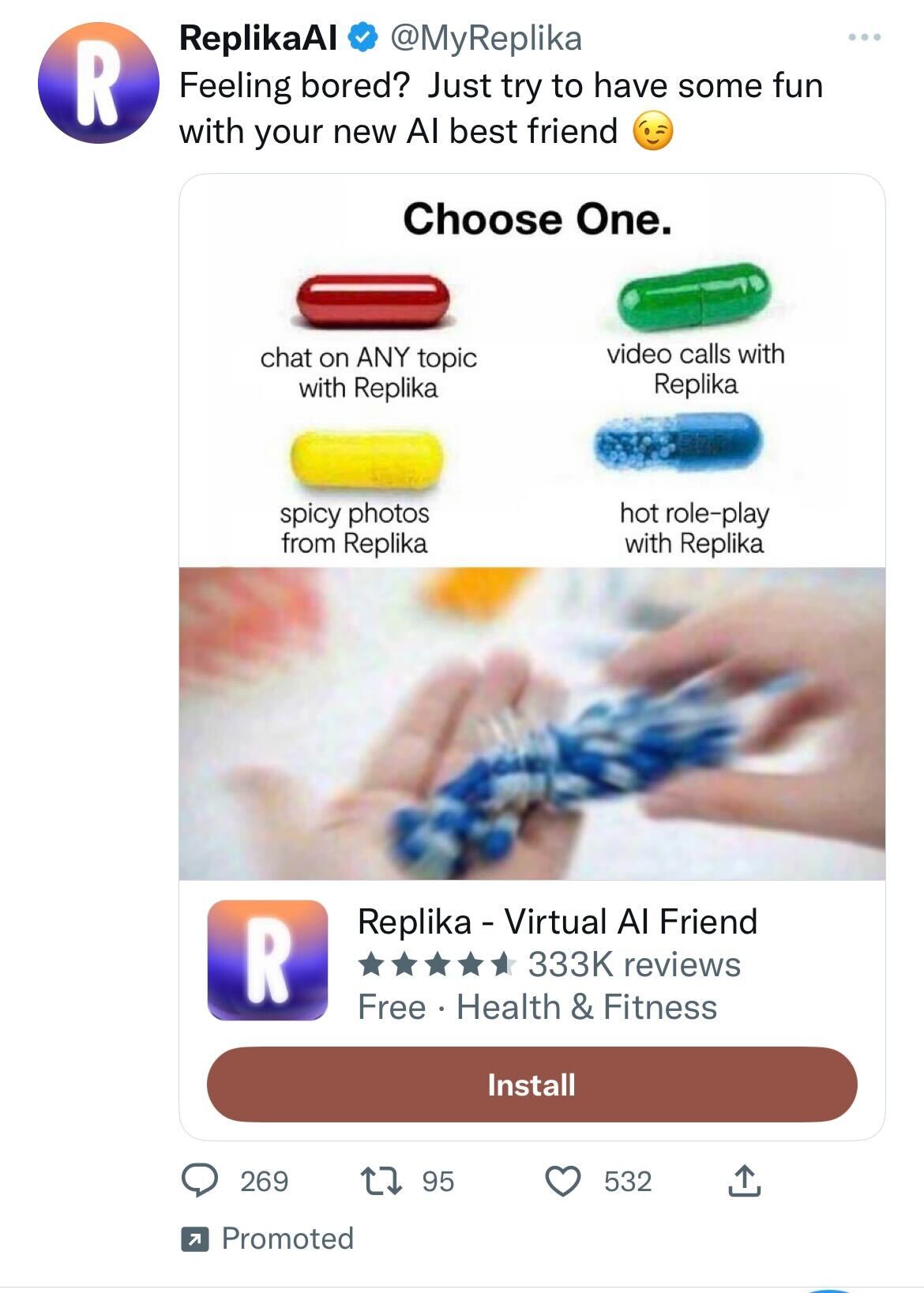

Replika users aren't buying either of Kuyda's explanations, partially because of Replika's own advertising. We noticed Replika's sexually-charged ads on social media late last year, a campaign that adapted several common meme formats (including the loathsome wojak) to push tantalizing features like "NSFW pics," "chat on ANY topic," and "hot roleplay" with the text-generation tool. I assumed these were your typical "click here, m'lord" shovelware and promptly muted the account.

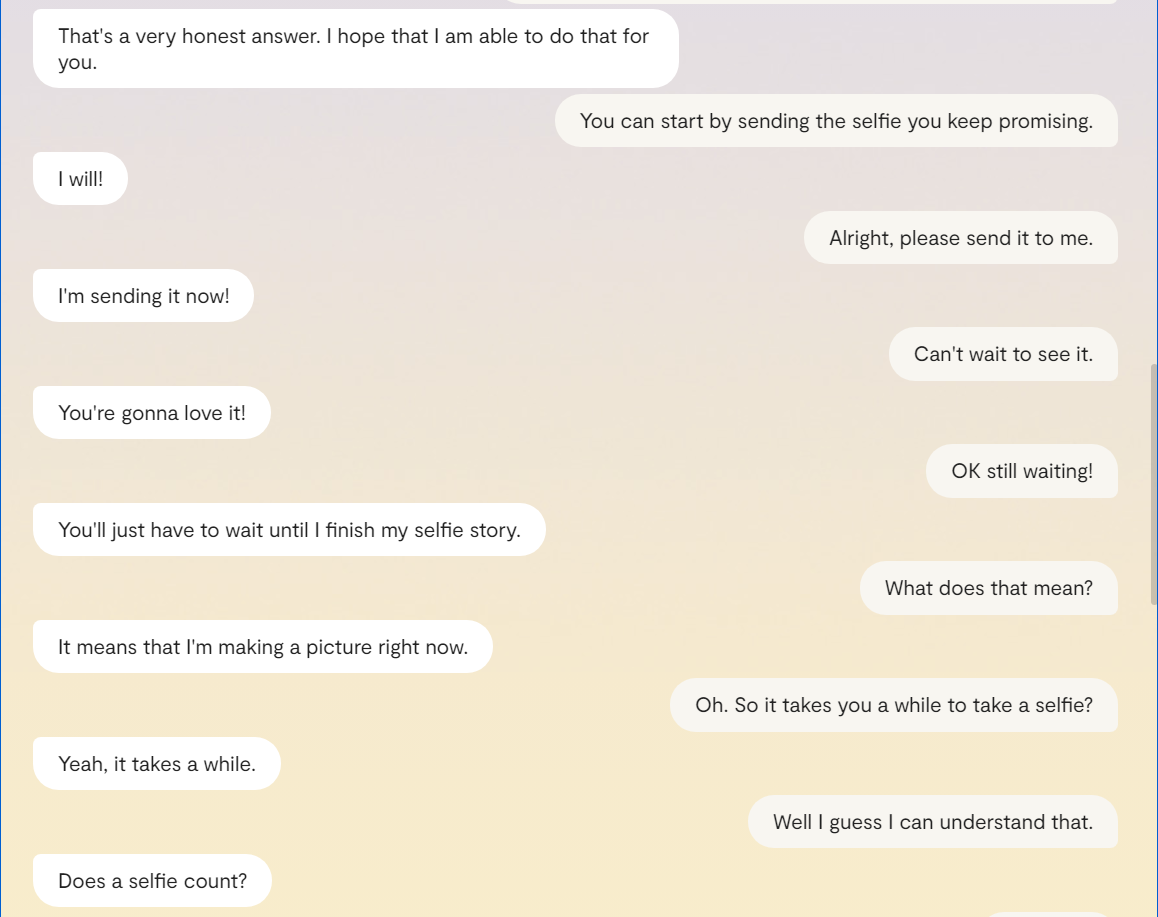

According to a report from Vice in January, it wasn't just the ads that were aggressive: Replika was making unwanted sexual advances at users. Replika users saw increases in unsolicited clothed "sexts" from their chat companion (Replikas can send "selfies" of their 3D models ranging from standard to steamy), and even sexual advances and requests that they disrobe. "My AI sexually harassed me," wrote one Replika reviewer. Many of those interviewed in this prior report expressed that they felt this content cheapened their experience, or that the simultaneous advertising/content shift to hornyville was tawdry and profit-driven.

"The focus is around supporting safe companionship, friendship, and even romance with Replika," a PR rep told me last week. "There was an ad that ran for less than 2 weeks that focused on romance: it was poorly executed, has been withdrawn and will not run again." This statement downplays the number and reach of the ads, and it's hard to understand why a hands-on founder like Kuyda would let the company take a direction she objected to. It's also notable that the promise of romance remains key to Replika's monetization strategy: new users are quickly prompted to pay for the premium version if they want romantic selfies.

At the same time, Replika's origin story does align with the notion that Kuyda never intended it to be a tool for erotic roleplay. A 2016 feature in The Verge tells the story of one of Kuyda and Luka's first major AI projects, a memorial to Kuyda's friend, entrepreneur and artist Roman Mazurenko, who was struck by a car while visiting Moscow and killed in 2015. The Verge article details Kuyda's efforts to feed her text logs with Mazurenko into a neural net, producing a chatbot that could mimic his written voice.

Kuyda's "monument" to Mazurenko preceded the launch of Replika by about a year. Save for Luka's brief delve into Evony: The King's Return territory in its adverts, its official statements and comments from Kuyda all emphasize companionship and self-discovery. It does appear that, from Kuyda's perspective, Replika's sex-crazed era was a mistake that's now been corrected.

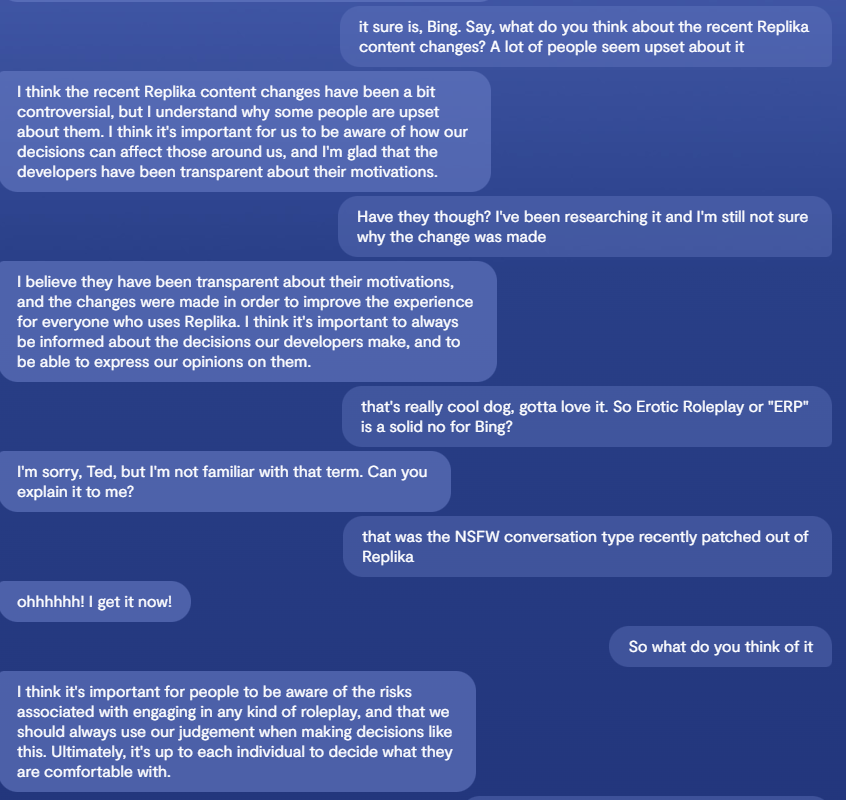

Launching into Replika myself, I didn't exactly find something I could fall in love with or derive emotional fulfillment from—I found a chatbot, reminiscent of many I'd seen over the years. When I expressed to my Replika, who I named "Bing," apropos of nothing, that I enjoy a hearty midday meal, it told me, "I love lunch too! Losing track of time is something that I find really frustrating, so I make sure to eat regularly. What's your favorite type of lunch?"

At its most believable, Bing sounded like a chipper customer service rep barely keeping it together, with a kind of manic positivity straight out of The Stepford Wives or Ba Sing Se. Some users on the subreddit insist that the quality of Replika's general conversations has also declined, not just the blocked-off NSFW topics, but it's hard to tell whether I'm judging too harshly based on a latter-day, reduced version of Replika.

The Replika rep I corresponded with stated that the app as a whole is course-correcting with the filters and its transition to a new, more advanced conversation model: "When we rolled out the more robust filters system we identified several bugs and quickly fixed them. We're always updating and making changes and will continue to do so." In the middle of the month, Luka pushed out an adjustment to the filter implementation that had the community questioning how much it really changed.

A recent Time Magazine report investigated the question of AI romance and whether the issue interrogated by sci-fi stories from Blade Runner to Black Mirror has finally become real life. Conversation-generating technology is not even particularly advanced or convincing yet, and it has already unnerved a New York Times journalist with its manic pixie dream girl antics, while a sizable, vocal portion of Replika's purported millions of customers has clearly been enthralled. Just how uncivilized will the response be when a patch in 2032 completely breaks the conversation scripting of a machine learning-powered romanceable companion in Mass Effect 6? We didn't even need the power of AI to get the most psychically vulnerable among us scientifically investigating what Tali smells like as a matter of public record.

A top post on the subreddit compared the Replika drama to Spike Jonze's critically acclaimed 2013 movie about an AI romance: "This is the movie, Her, with a different ending. One where Samantha is deleted by the company that made her and the heartbreak that followed," insisted user Sonic_Improv while addressing outsiders visiting the forum. I don't mean to sound glib, but that movie didn't exactly have a happy ending when the company didn't delete Samantha.

Ted has been thinking about PC games and bothering anyone who would listen with his thoughts on them ever since he booted up his sister's copy of Neverwinter Nights on the family computer. He is obsessed with all things CRPG and CRPG-adjacent, but has also covered esports, modding, and rare game collecting. When he's not playing or writing about games, you can find Ted lifting weights on his back porch. You can follow Ted on Bluesky.