Intel XeSS upscaling looks as good as DLSS in Tomb Raider and is almost as quick

Death Stranding: Director's Cut also gets some upscaling love from Intel.

Intel's entrance into the graphics card market has been a long time coming. It's taken so long, in fact, that you'd be forgiven for thinking it wasn't actually happening at all. Well, yesterday it announced the price and release date of the Arc A770. It also started the ball rolling on its XeSS upscaling tech.

Shadow of the Tomb Raider is the first major title to get support for the tech, and thanks to its GPU-agnostic approach, you can try XeSS for yourself right now. Death Stranding: Director's Cut also gets some XeSS love, although the tech doesn't seem to have been added to the standard game, which is a bit of a shame.

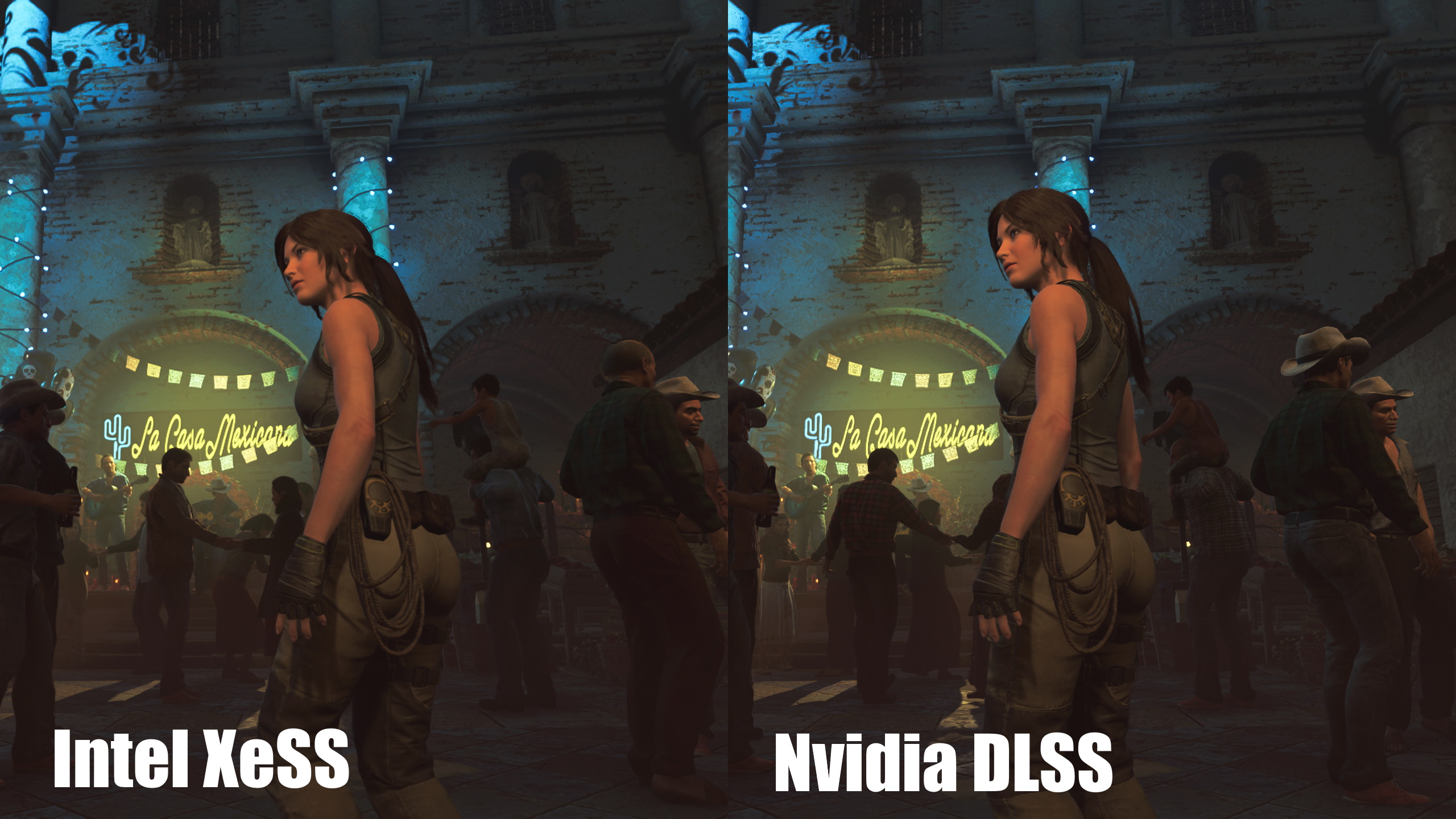

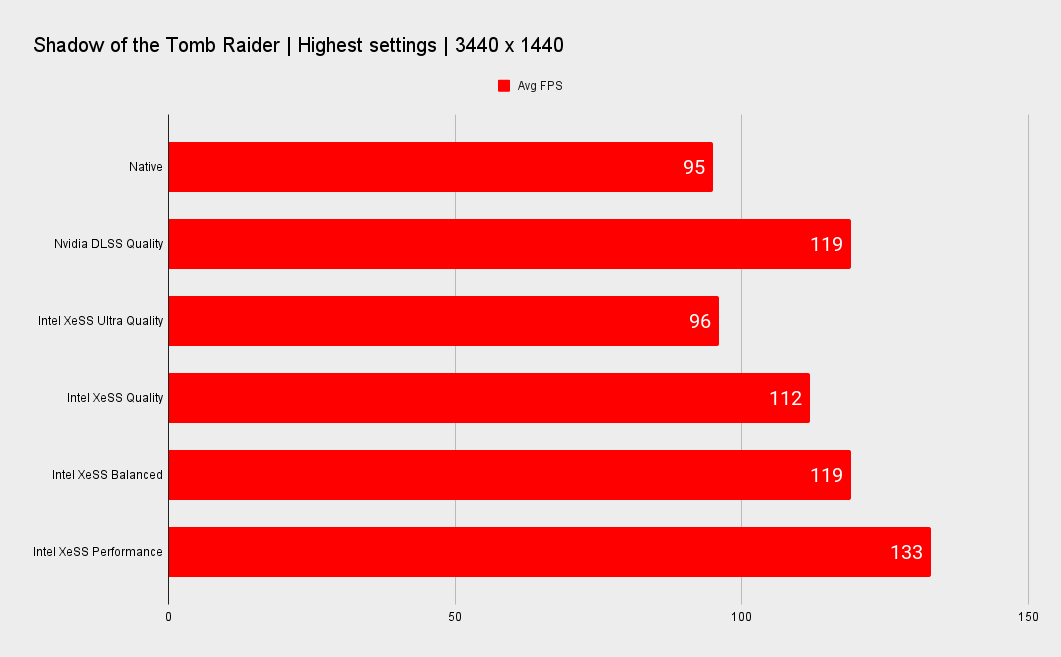

I've looked at XeSS running in Shadow of the Tomb Raider, and straight away it looks like a worthy alternative to Nvidia's DLSS 2.0. Performance is slightly lower than DLSS at the same visual settings, but not by much, and the fact that you can run XeSS on non-Intel GPUs is certainly a boon. As with AMD FSR and Nvidia DLSS, there's a range of quality settings on offer, with the option to sacrifice image quality to hit smoother frame rates if needed.

You will need a compatible graphics card to use XeSS, though: one that supports either the DP4A instruction or Intel's XMX matrix engines, as found in its Arc GPUs. That's the only requirement. It covers AMD's RDNA 2 GPUs and the last three Nvidia architectures, although if you have an Nvidia GPU, DLSS is probably still your best option anyway.

To see how it looks, I patched Lara's most recent outing to the "Sept. 27th Update" and tried it on an Nvidia RTX 2080 Ti. The good news is that it looks like a promising technology. The performance increase over native resolution is notable, and depending on your hardware, it could mean hitting playable frame rates at your monitor's native resolution where before you would have struggled.

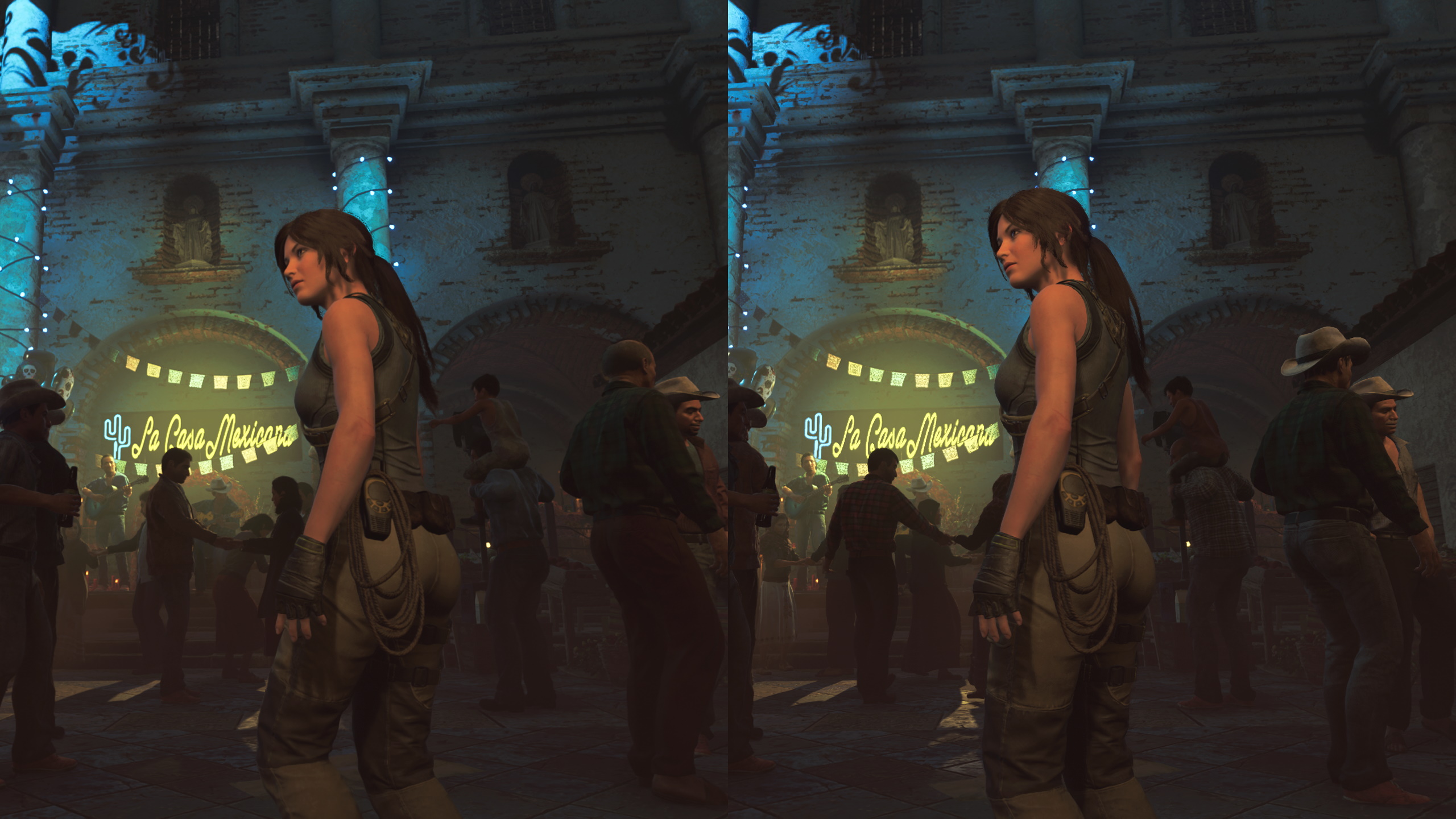

As far as quality is concerned, I saw no notable artifacts while benchmarking. The Quality setting produced a good final image and delivered a 17% performance bump over native at the highest graphics settings.

For comparison, the DLSS Quality setting ups performance by 25%, so DLSS is still the better choice if you have an Nvidia GPU, and it's tough to see any difference between the final images. XeSS also gives owners of AMD's RDNA 2 GPUs an option for higher performance.

Of course, Nvidia has just announced DLSS 3 will launch with its RTX 40-series graphics cards, and that promises big things for frame rates. DLSS 3 only works with the new Ada Lovelace GPUs though, which limits its appeal somewhat.

Intel has also made the SDK for XeSS available on GitHub.

Overall, this is an encouraging start for Intel's XeSS. And with plenty more games set to support it, it looks like Intel should be in a good place come October 12 and the launch of its Arc A770 GPUs.

Alan has been writing about PC tech since before 3D graphics cards existed, and still vividly recalls having to fight with MS-DOS just to get games to load. He fondly remembers the killer combo of a Matrox Millennium and 3dfx Voodoo, and seeing Lara Croft in 3D for the first time. He's very glad hardware has advanced as much as it has, though, and is particularly happy when putting the latest M.2 NVMe SSDs, AMD processors, and laptops through their paces. He has a long-lasting Magic: The Gathering obsession but limits this to MTG Arena these days.