Why Minimum FPS Can Be Misleading

What the numbers really mean

There are a few things in life that are constant: death, taxes, and hardware upgrades. Every time we experience a major shift in graphics fidelity, there's a requisite purchase of new hardware to keep up. Now that we've wrapped up coverage of the recent launches of Nvidia's GTX 980 Ti and AMD's Fury X, there's something we want to discuss in more detail because it will affect future GPU reviews, or at least our presentation of the data. That topic is minimum frame rates.

Unlike average frame rates, usually expressed as FPS (frames per second), minimum frame rates are prone to wild fluctuations between benchmark runs. Part of the problem is that built-in benchmarks and the frame rates they report aren't always reliable. Some games appear to sweep a few low results under the rug, others report the absolute minimum frame rate, and still others use a sort of "average minimum" value. All of these can be useful to varying degrees, but the inconsistency between benchmarks is a real concern. To understand why, we need to talk about why minimum FPS matters and then look at a few benchmarks as examples.

The short summary is that minimum frame rates matter because they can cause a game to stutter. Imagine, as a worst-case scenario, a game where one frame renders at 20fps and then the next three frames render at 180fps. While the average frame rate would be 60fps, on a typical 60Hz display with VSYNC disabled you would see the first frame for three screen updates followed by one update showing parts of the three fast frames. Or let's take an even more extreme case: imagine a game that renders at 60fps for 19 frames and then 10fps for a single frame. The game would feel smooth for those 19 frames and then there would be a big stutter on the last frame. The average frame rate is still a respectable 48fps, but the minimum frame rate indicates there's a serious problem somewhere.
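To check the arithmetic in both examples, here's a minimal Python sketch (our own illustration, not a tool from our test suite; the `average_fps` name is hypothetical). It assumes you know the instantaneous FPS of each frame. Since each frame lasts 1/fps seconds, the average frame rate is frames rendered divided by total time, which works out to the harmonic mean of the per-frame values rather than their simple average.

```python
# Hypothetical helper for illustration only.
def average_fps(frame_rates):
    """Average FPS for a run, given the instantaneous FPS of each frame.

    Each frame is on screen for 1/fps seconds, so the true average is
    frames rendered divided by total time (the harmonic mean of the
    per-frame values), not the plain arithmetic mean.
    """
    total_seconds = sum(1.0 / fps for fps in frame_rates)
    return len(frame_rates) / total_seconds


# Example 1: one frame at 20fps, then three at 180fps
example_one = [20] + [180] * 3
# Example 2: 19 frames at 60fps, then one at 10fps
example_two = [60] * 19 + [10]

print(round(average_fps(example_one), 1), min(example_one))  # 60.0 20
print(round(average_fps(example_two), 1), min(example_two))  # 48.0 10
```

Simply averaging the per-frame values in the second example would give 57.5fps, which overstates how smooth the game actually feels.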

The difficulty is that dips to the minimum frame rate usually don't come at regular intervals. The 19-to-1 ratio of the second example would be horrible if it happened, but it typically doesn't occur in normal gameplay. What's more likely is that a game runs for hundreds or even thousands of frames at higher FPS values, but occasionally a scene transition or the GPU running out of VRAM causes some stuttering. Ideally, that's what we want to capture, but many games reduce their benchmark results to just minimum and average FPS. So let's look at a few examples.

First, Tomb Raider (2013) has a decent built-in benchmark. The test shows Lara Croft overlooking a scene of crashed boats, airplanes, etc., as the camera orbits around her. As the entire sequence consists of a single scene in the game, the loading of assets is done ahead of the benchmark run and the results are very consistent. If you run the test ten times, you might see a fluctuation of a few percent at most on both the average and minimum frame rates. Maximum frame rates may show greater variability, but few people worry about maximum FPS, so that's not a problem. The Tomb Raider benchmark, at least, is an example of a trustworthy minimum FPS result.

At the other end of the spectrum, the Unigine Heaven 4.0 benchmark has extremely unreliable minimum frame rates. The test consists of 26 scenes, and frame rates are also captured during the transitions between scenes. For example, the first few scenes may have all their assets loaded into memory, but at some point a scene has to load new assets. When this happens, there might be a single instance where the frame rate drops to 30fps. If the 30fps result happened consistently, it might be meaningful, but if it only occurs during a camera or scene change, for one or two frames out of thousands, it has little bearing on normal gameplay.

In between these extremes, there are other games where the built-in benchmark may have erratic minimum FPS results during the first few seconds of a benchmark. Shadow of Mordor is like this, as the level assets are still loading for the first few seconds. If you were actually playing Shadow of Mordor rather than just benchmarking it, you might have stuttering frame rates right as a saved game loads, but then for minutes or even hours afterward the frame rates would be higher and generally consistent. Run the built-in benchmark once and the minimum frame rate might show as 25fps. Run it three times in a row and you'll typically find that the second and third runs show significantly higher "minimums." But even running the test multiple times doesn't fully account for variations between runs.


Enter the 97th Percentile

The good news is that the problem with looking at pure minimums is well known, and clever statisticians have had a solution for decades (or longer): percentiles. The concept is easy enough: given a large enough set of numbers, sort them, and the 97th percentile is the value that is larger than 97 percent of the results. For minimum frame rates, we go the other way and look at the value that's smaller than 97 percent of the results. You can certainly argue for a different percentile (the 99th and 95th are also commonly used), but that's largely a matter of semantics.
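Because each frame's rate is simply 1000 divided by its render time in milliseconds, the same figure can be found from frame times: take the 97th percentile frame time, and 97 percent of frames rendered at least that fast. The sketch below assumes a list of per-frame render times in milliseconds and uses the simple nearest-rank definition of a percentile. The function names are hypothetical, and tools that interpolate between samples can give slightly different results.

```python
import math


# Hypothetical helpers for illustration only.
def percentile_nearest_rank(values, p):
    """Return the p-th percentile (0 < p <= 100) using the nearest-rank method."""
    if not values:
        raise ValueError("need at least one value")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based position in sorted order
    return ordered[rank - 1]


def fps_at_percentile(frame_times_ms, p=97):
    """Frame rate that p percent of frames meet or beat.

    The p-th percentile frame time is the slowest frame among the fastest
    p percent, so converting it to FPS gives a "typical minimum".
    """
    return 1000.0 / percentile_nearest_rank(frame_times_ms, p)


# Made-up run: 97 frames at 10ms (100fps) and 3 frames at 50ms (20fps)
frame_times = [10.0] * 97 + [50.0] * 3
print(fps_at_percentile(frame_times))          # 100.0
print(min(1000.0 / t for t in frame_times))    # 20.0
```

In this made-up run, the single-frame minimum reports 20fps, while the 97th percentile shows that 97 of the 100 frames ran at 100fps.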

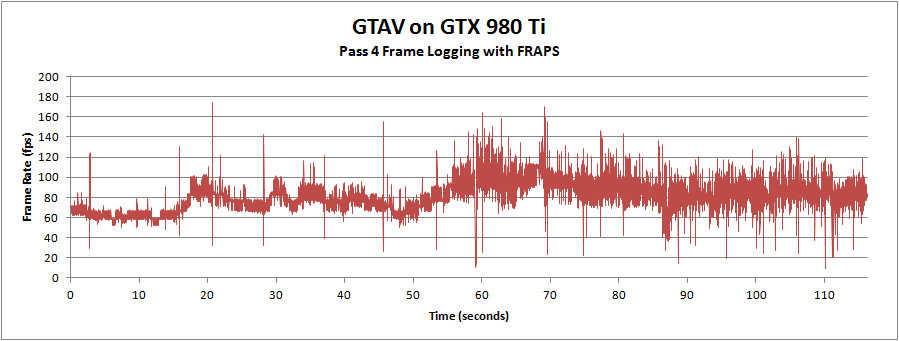

Some games even report percentiles already. GTAV's built-in benchmark, for example, reports the 90th through 99th percentiles for each of its five test scenes, along with the 50th, 75th, 80th, and 85th percentiles for good measure. GTAV goes one step further and also reports the number of frames under 60fps and 30fps for each test sequence. Let's quickly look at what this means for a specific GPU tested in GTAV: the GTX 980 Ti running at 1080p.

GTAV GTX 980 Ti 1080p Results – Pass 4

| Min (fps) | Avg (fps) | Frames > 60fps (%) | Frames > 30fps (%) | 97th percentile (fps) |
|---|---|---|---|---|
| 8.6 | 83.1 | 93.9 | 99.8 | 58.8 |

If we only look at the minimum frame rate, it might appear that GTAV stutters a lot in this test: the average is a rather high 83fps, but the minimum is only 8.6fps! Looking closer, though, that minimum is quite rare, likely occurring only when a bunch of data has to be loaded into memory. Only 0.2 percent of frames fell below 30fps, while 6.1 percent fell below 60fps. Finally, the 97th percentile tells us that 97 percent of frames rendered at 58.8fps or faster.
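For readers who want to build this kind of summary from their own frame-time logs, here's a rough sketch. It assumes a list of per-frame render times in milliseconds (the input format and the `frame_rate_summary` name are hypothetical, not GTAV's actual output), and it counts a frame toward the 60fps or 30fps figure only if it rendered faster than that rate.

```python
# Hypothetical helper for illustration only; not GTAV's reporting code.
def frame_rate_summary(frame_times_ms):
    """Min/avg FPS and the share of frames faster than 60fps and 30fps.

    Input is a list of per-frame render times in milliseconds.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame")
    n = len(frame_times_ms)
    per_frame_fps = [1000.0 / t for t in frame_times_ms]
    return {
        "min_fps": min(per_frame_fps),
        # Average = frames rendered / elapsed seconds
        "avg_fps": n / (sum(frame_times_ms) / 1000.0),
        # Percentage of frames that rendered faster than each threshold
        "pct_over_60": 100.0 * sum(fps > 60 for fps in per_frame_fps) / n,
        "pct_over_30": 100.0 * sum(fps > 30 for fps in per_frame_fps) / n,
    }


# Made-up data: 990 frames at 12ms, 8 at 20ms, 2 at 40ms
frame_times = [12.0] * 990 + [20.0] * 8 + [40.0] * 2
summary = frame_rate_summary(frame_times)
print(summary["min_fps"], summary["pct_over_60"], summary["pct_over_30"])  # 25.0 99.0 99.8
```

As with the GTAV numbers, the two 40ms frames set a 25fps minimum even though 99.8 percent of frames in this made-up run were faster than 30fps.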

We could, of course, just present you with a complete graph of frame rates for the test, as shown above. The problem with this approach is that it makes comparing products difficult, especially for people who don't eat, breathe, and sleep statistics. We would need one chart per GPU per game, or perhaps a few GPUs in each chart, but either way it quickly results in information overload. It also takes a lot more time to create all the charts, time that could be better spent elsewhere. Using a 97th percentile result lets us quickly get to the heart of the matter and provide a meaningful "typical minimum FPS" value.

That said, totally ignoring all frame rates below the 97th percentile doesn't necessarily make sense either. Those slow frames are still present, and if there are enough of them, and they're slow enough, they can dramatically impact the overall experience. Our solution is simple: instead of looking at just the 97th percentile frame rate, we can also find the average FPS of all frames that are slower than the 97th percentile. That way we don't miss the effect of a few very slow frames.
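Here's one way to compute that figure, as a sketch under a few stated assumptions: frame times are in milliseconds; the slowest frames are selected by sorting and taking everything past the nearest-rank 97th percentile position; and the result is those frames divided by the time they took (the same definition as an overall average) rather than a plain mean of their individual FPS values. The function name and the fallback to the single slowest frame for very short runs are our own hypothetical choices.

```python
import math


# Hypothetical helper for illustration only.
def avg_fps_below_percentile(frame_times_ms, p=97):
    """Average FPS of the frames slower than the p-th percentile frame time."""
    if not frame_times_ms:
        raise ValueError("need at least one frame")
    ordered = sorted(frame_times_ms)          # fastest (shortest) frames first
    rank = math.ceil(p * len(ordered) / 100)  # frames at or inside the percentile
    slow = ordered[rank:] or ordered[-1:]     # slowest frames; keep at least one
    # Same definition as the overall average: frames divided by time taken
    return len(slow) / (sum(slow) / 1000.0)


# Made-up run: 97 frames at 10ms, two at 40ms, one at 100ms
frame_times = [10.0] * 97 + [40.0] * 2 + [100.0]
print(round(avg_fps_below_percentile(frame_times), 1))  # 16.7
```

In this made-up run the 97th percentile alone would report 100fps, but the three slowest frames take 180ms in total, so their average of roughly 16.7fps flags the stutter that the percentile hides.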

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.