Visual novel Eliza explores the privacy risks of digital therapy

Zachtronics' Matthew Burns chats about the real-world apps that inspired Eliza.

Over recent years, mindfulness and mental health apps have skyrocketed in popularity. Self-care and wellbeing apps were named Apple's top trend of 2018 with an abundance of apps like Fabulous, Calm, Headspace, Shine, and Joyable packed with meditation techniques, healthier habits, breathing exercises, and audio pep-talks. According to Marketdata (via the Washington Post) the market for these kinds of services topped $1 billion in the US.

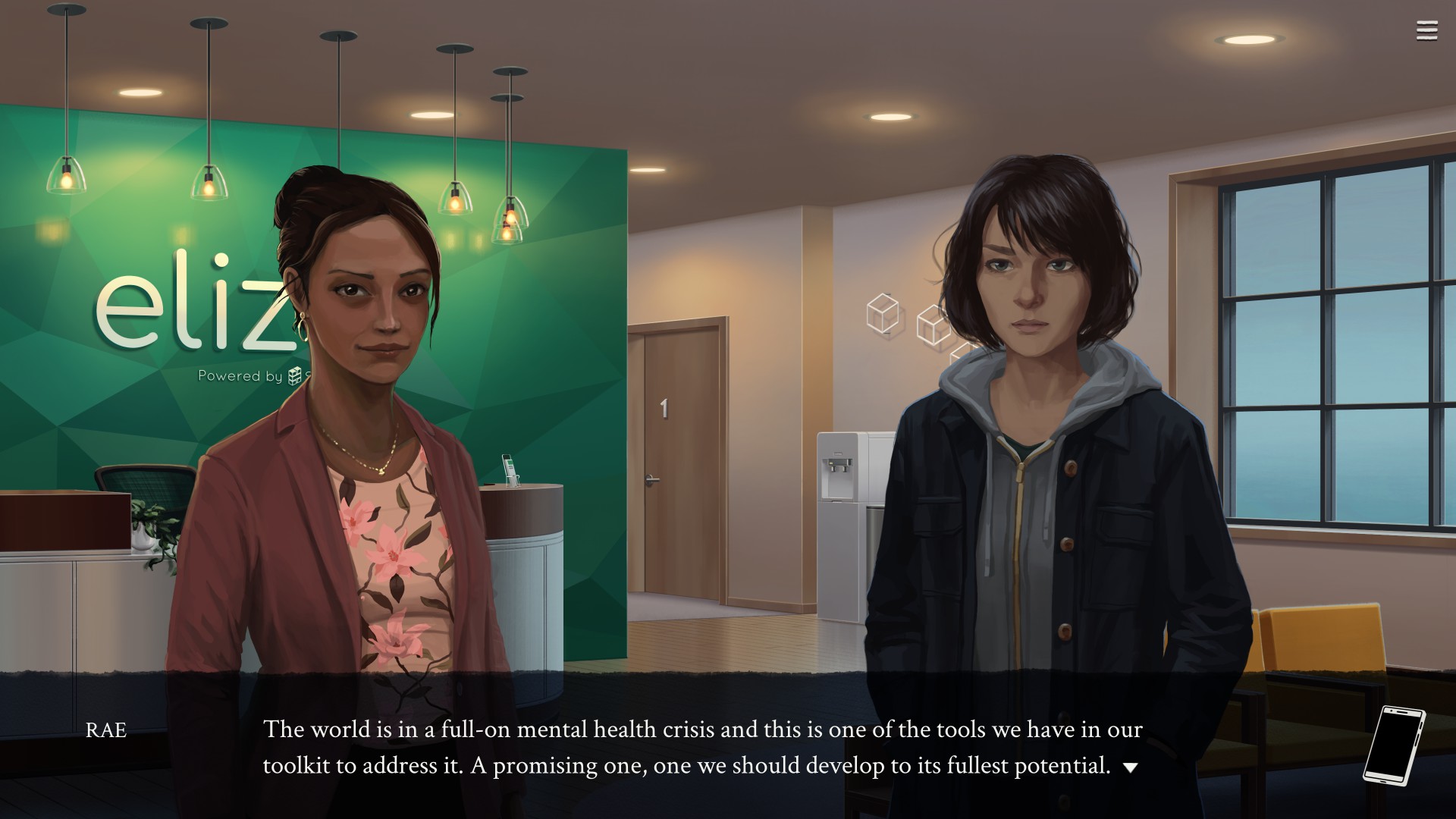

Self-care and mental health apps have become a viable market for business as more people are investing time in their mental wellbeing. Some apps are even taking it further by offering professional help to app users. But is e-counselling the way forward? Eliza is a visual novel that grapples with this complicated topic. Developed by Zachtronics, Eliza explores a future where digital therapy and e-counseling are an accessible and on-demand service available to everyone.

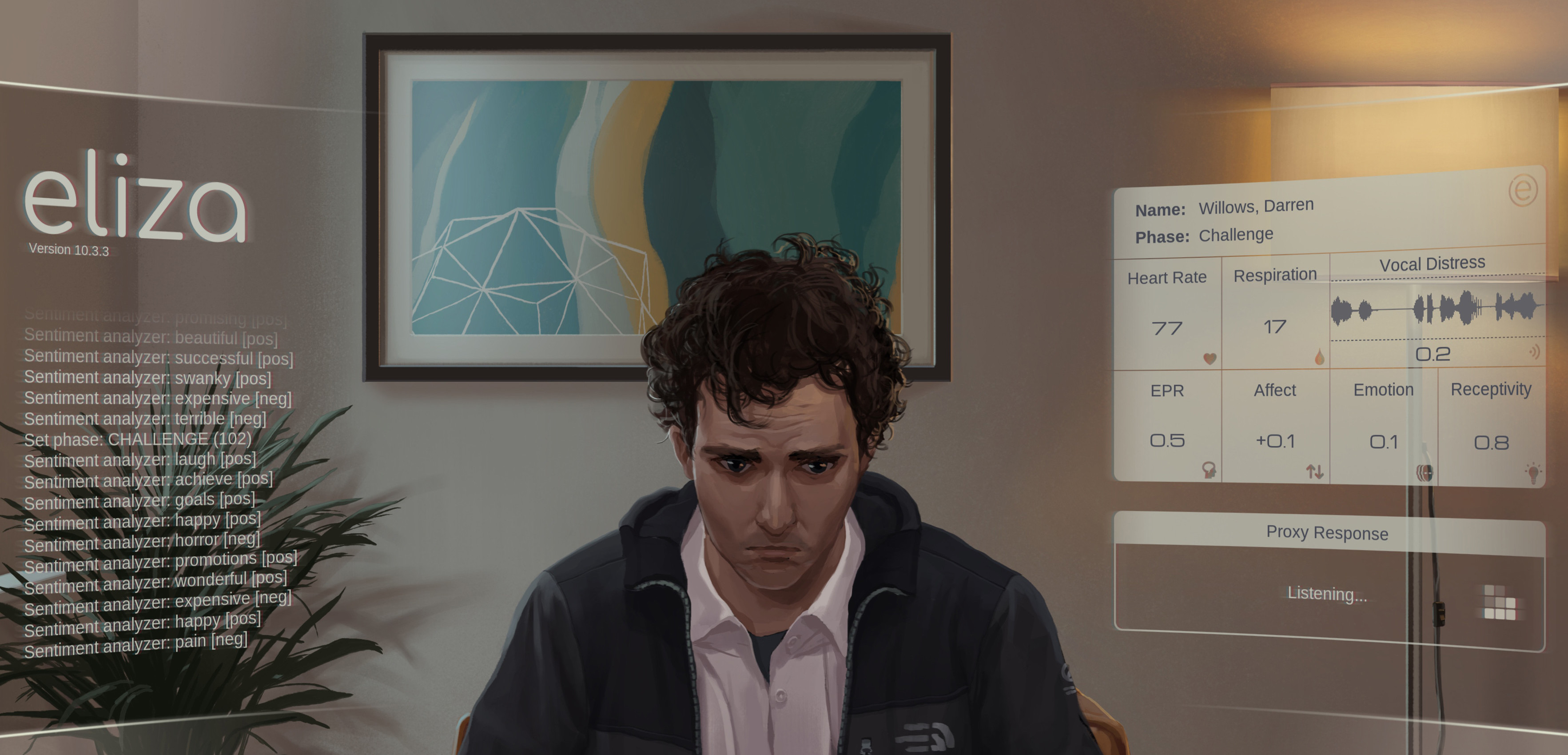

The story is about a one-to-one AI counseling service named Eliza and the woman who created it, Evelyn. Eliza is an AI therapist that listens to patients and replies through a human 'proxy'. The company behind the AI, Skandha, believes that having a human proxy makes the computer's clinical script sound warm and understanding. The AI listens to the user and then recommends breathing exercises, VR experiences, medication, and the use of a wellness app. The story follows Evelyn as she decides to reconnect with the group who helped her develop Eliza. This might seem like science fiction, but Eliza was inspired by real projects.

"I saw this demo of this research project, which was a virtual counselor," Eliza developer Matthew Burns says. "It had a camera trained on the person who was talking and it did all these metrics on their face to see if they were smiling, or laughing or sad and track their movement. It had a low poly Second Life-looking avatar that would talk to you.

"It was a research project that was funded by DARPA, which is the Department of Defense's research arm. They were thinking about how they could treat soldiers with PTSD. I remember sitting there seeing this demo and it brought about this dystopian feeling. Imagining being 20 years old and being sent to Afghanistan then coming back with PTSD and they won't even give you like a real person to talk to."

The DARPA-funded research project Burns saw was the SimSensei and MultiSense healthcare support project. Burns also decided to name the AI therapist after a computer program from the '60s of the same name that was designed to emulate a psychotherapist, a program that you can still chat with.

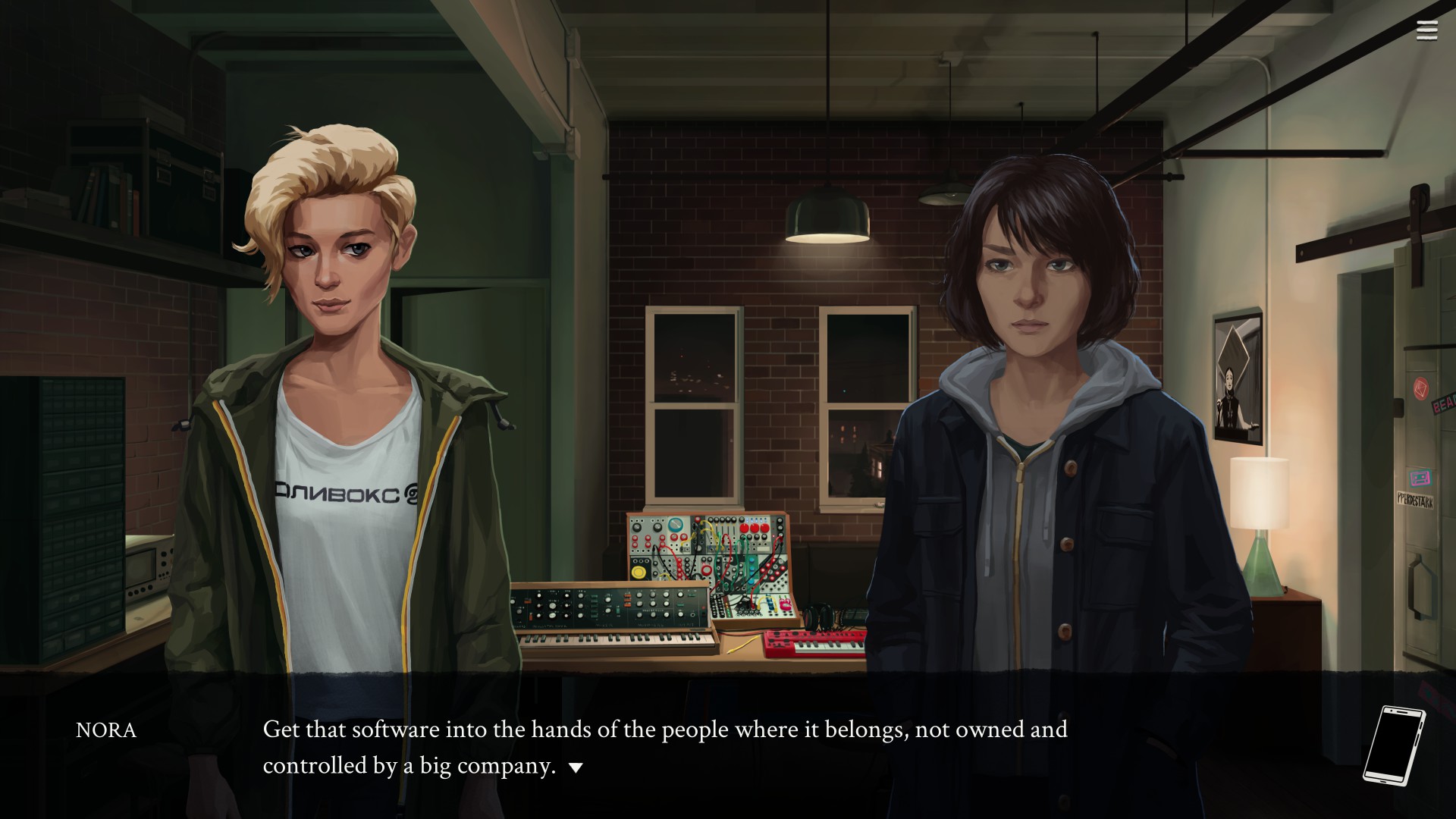

Burns also drew on research into a number of other wellness apps: BetterHelp, the online counselling app that lets you speak to a therapist; Woebot, the CBT and mindfulness chatbot; Wysa, the AI life coach; and Youper, an emotional health assistant. Eliza is an extension of these apps, and in the game it's a revolutionary program and a useful service for many people with mental health issues. But, as with many real-world mental health technologies, there is something else beneath the surface.

Emotionally draining

As the story continues, Eliza's corporate higher-ups decide to introduce a new 'transparency' option in Eliza that patients can willingly opt into. The transparency option promises better results as long as the program, and its human proxies, can look at patients' personal information. This information is not only seen by the human proxies but is also gathered into valuable data that Eliza's parent company can do with as it pleases. E-counselling and therapy apps can make a world of difference to their users, but Eliza asks the question: at what cost?

"Therapy should be like one of the most intimate things that you share with people," Burns says. "And yet, it also feels weirdly clinical and medical in a way. That's something I wanted to represent in the game. People are talking about some of the darkest, most intimate things about them and yet it's also being processed through this mass data centre.

"Regardless, I didn't want to just say that Eliza is 100% bad because sometimes being prompted to talk with someone who sounds like they're listening is good and so I wanted to reflect that complexity there."

It really is a complex topic. In Burns's world, Eliza is a revolutionary counselling device that genuinely helps people and changes their lives. At the same time, Eliza reflects the dangers of health-monitoring technology.

There have been plenty of apps that record data about a person's physical body (blood pressure, pregnancy, body weight, menstrual cycles), and now there is a movement towards mental health profiling and tracking. A 2018 study published on ScienceDirect found that, out of 61 prominent mental health apps, almost half did not have a privacy policy explaining to users how their personal information would be collected, used, and shared with third parties.

One of the most popular apps for depression on Apple's store, Moodpath, actively uses third-party tools to share a user's personal information with both Facebook and Google—it discloses this information under section seven in its privacy policy. It's no secret that Facebook actively buys and sells user data on a mass scale.

There are numerous other examples of people's personal data being shared. There's the controversy behind the previously mentioned counseling service app BetterHelp. It was also revealed that the pregnancy tracking app Flo was sharing its users' data with Facebook. These monitoring apps even have a future role in the workplace: Activision Blizzard is encouraging its employees to use health tracking and family planning apps.

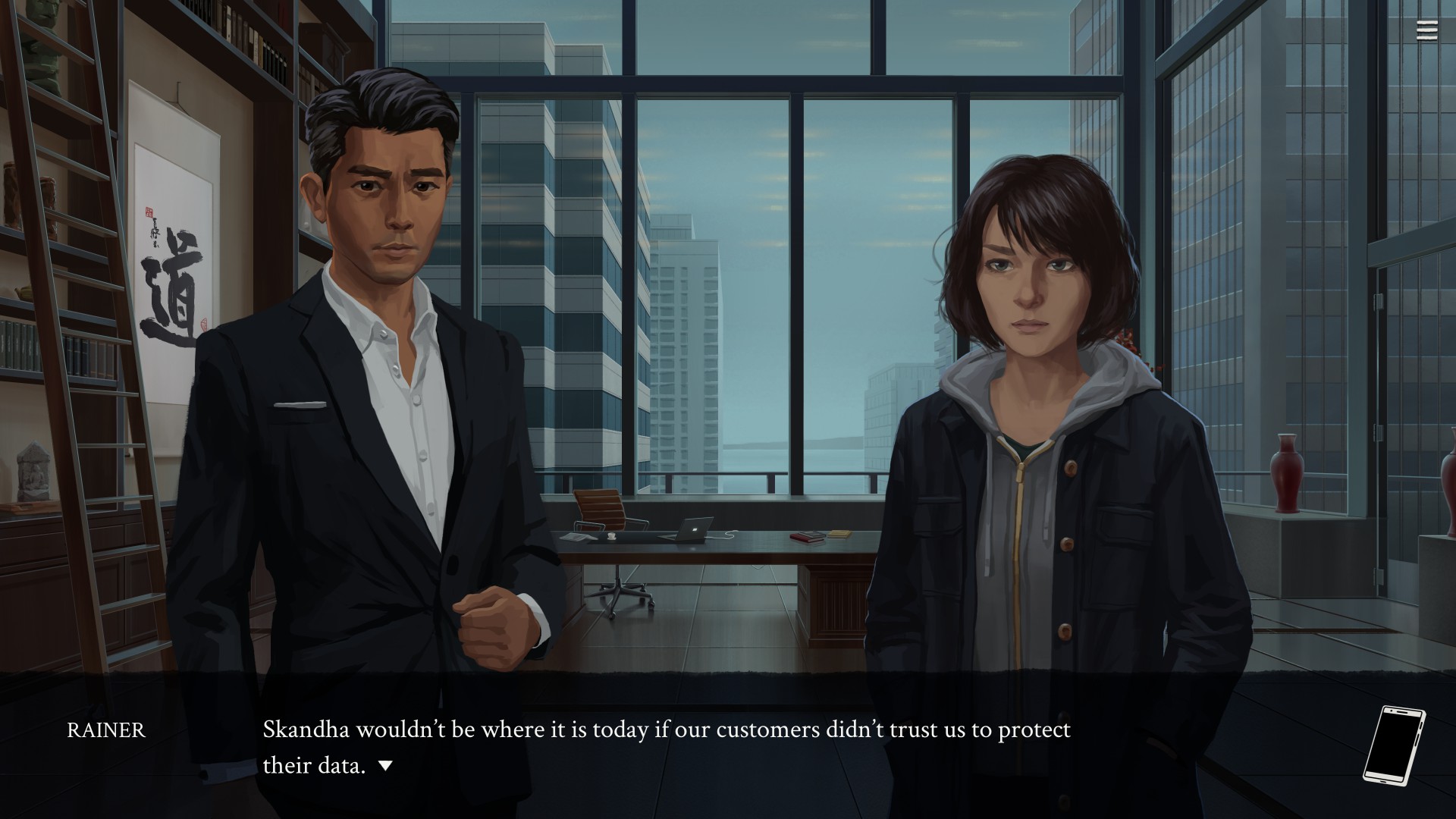

As you play the game, you discover that Eliza's parent company, Skandha, is collecting the private information that people reveal in their sessions.

"It was very important to me to make that feel as insidious as it is in real life," Burns explains. "It's very easy to create a science fiction story where there's a dystopia and the evil corporation very obviously does evil. It's all dark and with neon colours, and it's always night-time and raining right? But that's not what it looks like in real life. There's a moment at the very beginning of Eliza when a man talks about how he's severely depressed but at least things look nice."

Eliza is an important reflection on our lives and culture, examining a world where people's deeply personal experiences are being churned into data. Burns captures this sentiment perfectly via one of his characters: "Thousands of people reveal their most intimate secrets to their therapists every day, this is just a more efficient way to complete that information transfer."

"It's not some fantasy, like, this is a thing that's happening right now," Burns says. "I was talking to someone who worked at Amazon and I was telling him about the premise of Eliza and he just said, 'Oh yeah, we're working on that.' People are talking to their Amazon Alexa and that data is going to Amazon, so there's nothing even that fantastical about it."

Eliza helps us reflect on how our information is being used, by whom, and for what purpose. It's a warning of what can happen when invasive data collection techniques are used to gather information from vulnerable people.

Rachel had been bouncing around different gaming websites as a freelancer and staff writer for three years before settling at PC Gamer back in 2019. She mainly writes reviews, previews, and features, but on rare occasions will switch it up with news and guides. When she's not taking hundreds of screenshots of the latest indie darling, you can find her nurturing her parsnip empire in Stardew Valley and planning an axolotl uprising in Minecraft. She loves 'stop and smell the roses' games—her proudest gaming moment being the one time she kept her virtual potted plants alive for over a year.