Gaming without a dedicated graphics card on i7-7700K

What can Kaby Lake's HD Graphics 630 do for gaming?

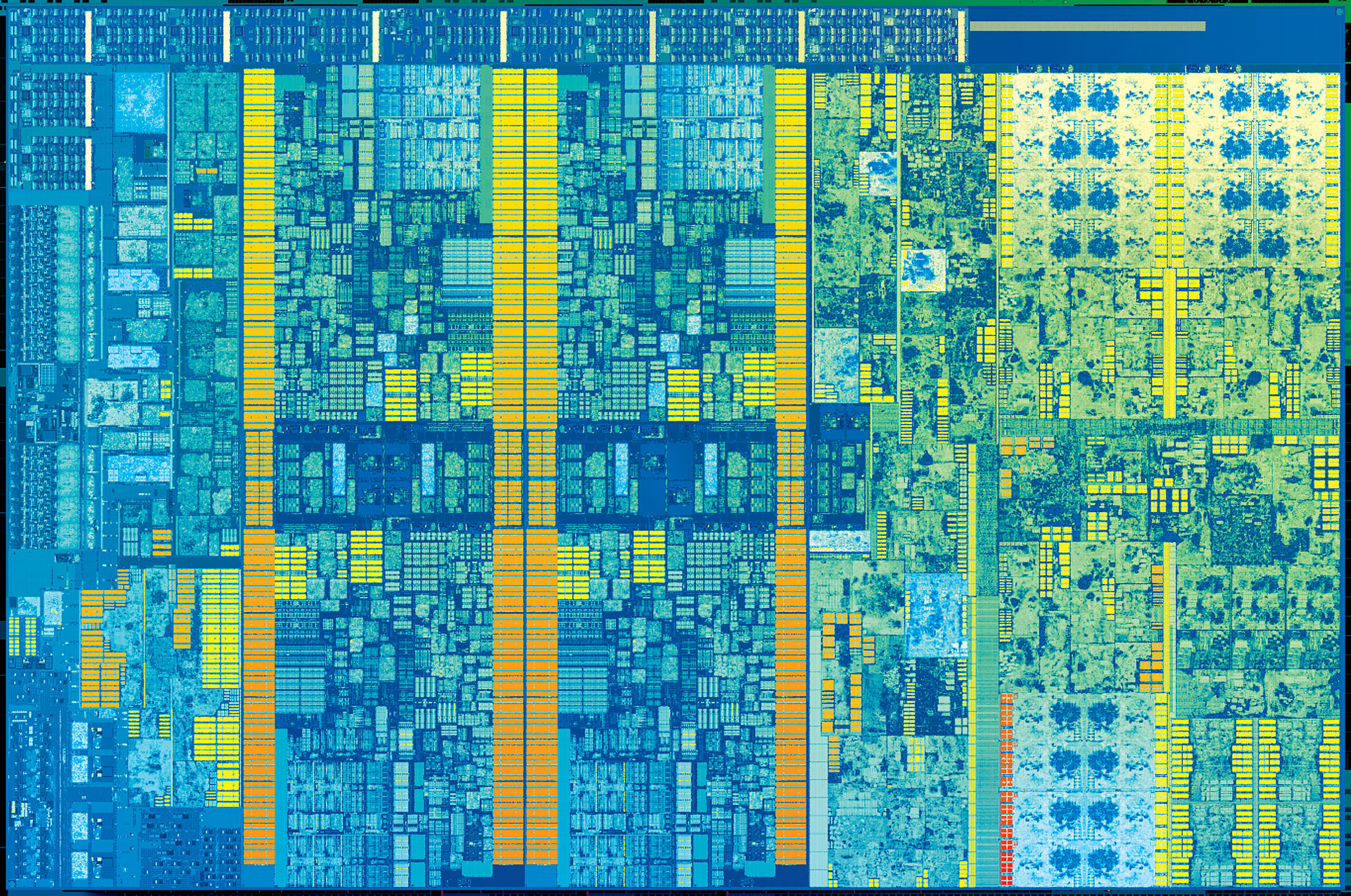

About one third of the die area in current desktop Kaby Lake CPUs is devoted to graphics and multimedia, and that's on the quad-core die. Take the dual-core i3-7350K and you're looking at roughly half of the CPU die. For desktop gamers, all of those transistors are basically wasted as soon as they plug in a dedicated graphics card: 4K Netflix, Quick Sync video transcoding, and of course all the graphical rendering capabilities are for naught. But let's suppose you want to get by on just the integrated graphics. How do they perform?

CPU: Core i7-7700K

Mobo: MSI Z270 Gaming M7

RAM: Corsair DDR4-3200

SSD: Samsung 960 Pro 512GB

SSD: Samsung 850 Pro 2TB

Case: Corsair Carbide Air 740

PSU: Corsair RM650x

Cooling: Corsair H115i

I decided to put HD Graphics 630 to the test, using Intel's top desktop CPU, the i7-7700K. Our test hardware is about as potent as it gets, so there's nothing bottlenecking the HD 630 besides itself. I'm using DDR4-3200 memory to give it every possible ounce of bandwidth, and a beefy AIO cooler as well (not that the cooler is really necessary). Frankly, this isn't a configuration I'd expect many people to use for gaming; you don't buy a $350 processor for games and skimp on the GPU. But here's the thing: integrated graphics aren't just included in high-end desktops. In fact, that's the last place they're needed. They're really there for other products, particularly mobile devices, so whether you're running a 2-in-1 device, an Ultrabook, a gaming notebook, or even a desktop, you get Intel's HD Graphics.

Things get a bit interesting (or confusing, if you prefer) when you look at the various HD Graphics models available. The desktop Kaby Lake parts mostly come with HD 630, though a few of the low-end Pentium and Celeron parts come with HD 610. The main difference is that HD 610 has fewer graphics cores, but exactly how many isn't clear. (Each subslice is typically 8 cores, but Intel has had the option of partially disabling subslices in the past, so there are likely two subslices of six cores each.) Mobile Kaby Lake meanwhile has a few more options, with HD 630 showing up in the 35/45W parts and HD 610 on 15W Celeron/Pentium U-series chips, but joining these are quite a few others. HD 615 and HD 620 are similar to the 630, while Iris Plus Graphics 640 and 650 double the number of GPU cores and add 64MB of eDRAM.

What really separates the various Intel graphics solutions from one another is their TDP (Thermal Design Power). The 615 through 630 all have 24 cores (Execution Units, or EUs, in Intel's nomenclature), while the Iris chips have 48 cores; so far there's no 72 EU variant announced. Most of the other differences amount to minor clock speed variations, with the fastest parts clocked at 1150MHz and the slowest at 1050MHz, but a chip with a 15W TDP often won't be able to sustain its maximum boost clock, while chips rated at 35W and above won't have such difficulties.

What this means is that the name of an Intel graphics solution often doesn't tell you everything you need to know. A desktop i7-7700K has 24 EUs running at up to 1150MHz, while a mobile i5-7260U has 48 EUs running at up to 950MHz, but which is faster? The 7700K has a 91W TDP while the 7260U has a 15W TDP, so I suspect the two will end up performing pretty similarly in many tasks. That makes benchmarking the desktop HD 630 potentially useful even if most desktop users won't bother. So let's get to it.

Intel's HD Graphics 630 performance

I tested the HD 630 in a collection of 15 games. Obviously, many of these are demanding games, so I tested at pretty much the minimum quality level and 1280x720 (or 1280x768 in a few games where 720p isn't supported). I had initially hoped to shoot for 1920x1080, but that's not happening in anything but the lightest of games. Titles like League of Legends, Counter-Strike: Global Offensive, and even Overwatch should run decently at 1080p, perhaps even at medium or high quality in some cases, but demanding PC games are out of the question: even doubling the number of EUs would still come up short of 30 fps in many games.

It's not just about playable vs. unplayable framerates, though. I remember testing earlier versions of HD Graphics, and even as recently as 4th Gen Core (Haswell), there would be quite a few games that either refused to run or ran but had severe rendering errors—even Broadwell had some issues in GTAV at launch! While the framerates aren't stellar on the HD 630, I do have to give Intel a lot of credit for getting their drivers to the point where most titles ran without any noticeable rendering errors. Even DX12 games worked for the most part. Battlefield 1 wouldn't render fullscreen, and Total War Warhammer wouldn't allow me to select the DX12 beta, but all the others worked—with lower performance than DX11, though.


This chart summarizes the performance of the games I tested. While there's no absolute requirement for a game to average 30+ fps to be 'playable,' I think it's safe to say anything below a 20 fps average is unplayable, and minimum fps in the low 20s will also be problematic. Rather than the absolute minimum fps, I use the average of the longest frametimes above the 97th percentile, so the reported minimums represent the slowest three percent of all frames rendered rather than a single outlier, which makes them even more impactful. Doom, for example, actually runs pretty well at times, hovering close to 30 fps on some levels, but later in the game it throws more pyrotechnics at the player and dips into the low 20s become more frequent. How tolerant you are of such things is a personal choice, but most of the results are for 'typical' areas of the games in question and do not represent the worst-case scenario.
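The benchmarking tool and exact formula behind the charts aren't spelled out, so here is a minimal sketch of the metric as described, assuming the "minimum" is the mean of the slowest 3 percent of frametimes converted back to fps. The function names and the sample capture are hypothetical, chosen only to show how a few slow frames pull the minimum far below the average.

```python
def average_fps(frametimes_ms):
    """Average fps over a run: frames rendered divided by total seconds elapsed."""
    return 1000.0 * len(frametimes_ms) / sum(frametimes_ms)


def percentile_minimum_fps(frametimes_ms, slowest_percent=3):
    """Average the slowest `slowest_percent` of frames and express the result as fps.

    With slowest_percent=3 this averages the frametimes above the 97th percentile,
    so a single stray hitch cannot dominate the reported "minimum".
    """
    if not frametimes_ms:
        raise ValueError("need at least one frametime")
    ordered = sorted(frametimes_ms, reverse=True)  # longest (slowest) frames first
    count = max(1, len(ordered) * slowest_percent // 100)  # integer math avoids float rounding
    worst = ordered[:count]
    return 1000.0 / (sum(worst) / count)


if __name__ == "__main__":
    # Hypothetical capture: 97 frames at 30 ms plus 3 slow frames at 50 ms.
    frames = [30.0] * 97 + [50.0] * 3
    print(f"average: {average_fps(frames):.1f} fps")  # average: 32.7 fps
    print(f"97th percentile minimum: {percentile_minimum_fps(frames):.1f} fps")  # 20.0 fps
```

In this made-up run the game averages over 30 fps, yet the slowest 3 percent of frames report 20 fps, which is exactly the kind of result the paragraph above calls problematic.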

What about overclocking? It is available, at least on the K-series CPUs I've tested, but it's not usually enough to take a game from unplayable to playable. The stock GPU clock on the i7-7700K is 1150MHz, and I was able to push that up to 1350MHz without too much difficulty. That's a roughly 17 percent overclock, which pushes a few games like Doom past the 30 fps mark, but minimum fps still routinely dips into uncomfortable territory in most of the games I tested.
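To put the 1150MHz to 1350MHz overclock in numbers, here is a small sketch. The "best case" helper assumes fps scales linearly with GPU clock, which is an optimistic upper bound rather than anything measured, and the stock fps values in the example are hypothetical.

```python
def overclock_percent(stock_mhz, oc_mhz):
    """Clock increase over stock, in percent."""
    return (oc_mhz / stock_mhz - 1.0) * 100.0


def best_case_fps(stock_fps, stock_mhz, oc_mhz):
    """Upper bound on overclocked fps, assuming fps scales linearly with GPU clock.

    Real gains are usually smaller, because shared system memory bandwidth and the
    CPU do not speed up along with the GPU.
    """
    return stock_fps * oc_mhz / stock_mhz


if __name__ == "__main__":
    print(f"overclock: {overclock_percent(1150, 1350):.1f}%")  # overclock: 17.4%
    # Hypothetical stock results: a 26 fps average and a 20 fps 97th percentile minimum.
    print(f"average: {best_case_fps(26, 1150, 1350):.1f} fps")  # 30.5 fps
    print(f"minimum: {best_case_fps(20, 1150, 1350):.1f} fps")  # 23.5 fps
```

Even under perfect scaling, a game sitting in the mid 20s only just crosses 30 fps, and a 20 fps minimum stays in the low 20s.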

The road ahead for integrated graphics

What I find perhaps most interesting is what Intel has been doing (and not doing) over the past eight or so years, ever since the original HD Graphics showed up in the Arrandale/Clarkdale first generation Core processors. If you want to compare performance across generations, I find it's best to focus on theoretical FP32 performance, so that's what I'll do here. I'm referencing the Core i5 desktop models as examples of each generation, with a view toward relative performance. (And yes, GFLOPS can be a skewed metric, but as a rough estimate it can still be useful, particularly when looking at products from the same company.)

Clarkdale (e.g., i5-655K) had 12 EUs with up to four FP32 instructions per clock each, running at 733MHz, or about 35 GFLOPS if you're willing to stretch the meaning of GFLOPS a bit. Sandy Bridge (i5-2500K) had 6 EUs doing four FP32 ops, but the clock speed was increased substantially to 1100MHz and Intel added FMA (Fused Multiply Add) support, giving 52 GFLOPS; mobile parts with up to 12 EUs and 124 GFLOPS were more common. These first two iterations were pretty tame, but Intel had to start somewhere.

Ivy Bridge (i5-3570K) represented a substantial change to the EUs, with twice the FP32 instructions per clock, so 16 EUs at 1150MHz had a potential 294 GFLOPS. Ivy Bridge also added support for the DX11 API, a first for Intel graphics. Haswell (i5-4670K) didn't really change much but added more EUs, with 20 EUs at 1200MHz yielding 384 GFLOPS. Haswell also saw the introduction of GT3 and GT3e configurations that doubled the EUs, with GT3e also adding embedded DRAM, for potentially up to 832 GFLOPS.

Broadwell (i5-5675C) was practically a non-event on desktop, though it included a GT3e configuration with potentially 844 GFLOPS. The more common mobile Broadwell solutions meanwhile were still closer to 384 GFLOPS (23 EUs at <1000MHz). Skylake (i5-6600K) and now Kaby Lake (i5-7600K) wrap up the list, adding DX12 feature level 12_1. Both feature 24 EUs clocked at 1150MHz, or around 442 GFLOPS. Skylake also had GT3/GT4 variants (up to 844/1244 GFLOPS), though Intel hasn't disclosed any GT3/GT4 Kaby Lake parts so far.
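The generational figures above all come from one formula: peak GFLOPS = EUs × FP32 FLOPs per EU per clock × clock in GHz. The sketch below reproduces the desktop i5 numbers. The EU counts and clocks are the ones quoted in the text; the per-EU FLOP counts (4, then 8 once FMA arrives, then 16 from Ivy Bridge on) are inferred from the quoted GFLOPS rather than taken from an Intel spec sheet, and the class name is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntegratedGpu:
    name: str
    eus: int
    flops_per_eu_clock: int  # FP32 FLOPs per EU per clock; an FMA counts as two
    clock_mhz: int

    def gflops(self) -> float:
        # peak GFLOPS = EUs x FLOPs per EU per clock x clock in GHz
        return self.eus * self.flops_per_eu_clock * self.clock_mhz / 1000.0


# EU counts and clocks from the text; per-EU FLOPs inferred from the quoted GFLOPS.
DESKTOP_I5 = [
    IntegratedGpu("Clarkdale (i5-655K)", 12, 4, 733),
    IntegratedGpu("Sandy Bridge (i5-2500K)", 6, 8, 1100),
    IntegratedGpu("Ivy Bridge (i5-3570K)", 16, 16, 1150),
    IntegratedGpu("Haswell (i5-4670K)", 20, 16, 1200),
    IntegratedGpu("Skylake (i5-6600K)", 24, 16, 1150),
    IntegratedGpu("Kaby Lake (i5-7600K)", 24, 16, 1150),
]

if __name__ == "__main__":
    baseline = DESKTOP_I5[0].gflops()
    for gpu in DESKTOP_I5:
        print(f"{gpu.name:<25} {gpu.gflops():6.1f} GFLOPS  ({gpu.gflops() / baseline:4.1f}x Clarkdale)")
```

Run as-is, Ivy Bridge lands at about 8.4x Clarkdale, while Kaby Lake is only 1.5x Ivy Bridge, which makes the slowdown in desktop progress easy to see.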

The reason I go through that list is to show that while Intel increased its mainstream graphics performance by nearly an order of magnitude going from 1st Gen to 3rd Gen Core, most of the desktop parts for the past several generations (Broadwell being the exception) haven't seen much in the way of improvements. More critically, desktop Skylake and Kaby Lake at 440 GFLOPS are way behind the 1733 (GTX 1050) to 2150 (RX 460) GFLOPS found on even the most basic of modern GPUs, and that's a big part of why everyone ends up needing to run a dedicated graphics card. There are many other factors as well: memory bandwidth, TMUs/ROPs/fillrate, and of course drivers, to name a few. As an example of the latter, a couple of DX12 games didn't run properly with Intel's current drivers, and Vulkan support also appears MIA. Oops.
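The discrete GPU figures follow the same peak-throughput logic, except each shader does one FMA (two FLOPs) per clock. This sketch assumes the GTX 1050 has 640 CUDA cores at its 1354MHz base clock and the RX 460 has 896 stream processors at 1200MHz; those specs are not stated in the text, and the helper name is hypothetical.

```python
def shader_gflops(shaders: int, clock_mhz: int, flops_per_clock: int = 2) -> float:
    """Peak FP32 GFLOPS for a discrete GPU: one FMA (two FLOPs) per shader per clock."""
    return shaders * flops_per_clock * clock_mhz / 1000.0


# HD 630: 24 EUs x 16 FP32 FLOPs per clock x 1.15GHz
HD_630 = 24 * 16 * 1150 / 1000.0
# Assumed specs: GTX 1050 with 640 CUDA cores at its 1354MHz base clock,
# RX 460 with 896 stream processors at 1200MHz.
GTX_1050 = shader_gflops(640, 1354)
RX_460 = shader_gflops(896, 1200)

if __name__ == "__main__":
    for name, gflops in (("GTX 1050", GTX_1050), ("RX 460", RX_460)):
        print(f"{name}: {gflops:.0f} GFLOPS, {gflops / HD_630:.1f}x the HD 630")
```

On paper, even these entry-level cards offer roughly four to five times the HD 630's peak FP32 throughput.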

I can't simply compare GFLOPS and declare a victor (that's why I ran some benchmarks), but there are fundamental limitations with processor-based graphics. Sharing system memory bandwidth is a big hurdle, so even if AMD or Intel stuffed eight times as many GPU cores into a chip, without a way to increase the memory bandwidth a lot of the potential performance is lost. Cost is another major factor, and with integrated GPUs being non-upgradeable, putting $100-$200 worth of graphics into a CPU package just means the user will ultimately upgrade to a dedicated GPU and the integrated GPU will likely sit dormant. A couple of HBM2 stacks might sound like a cool way to fix the bandwidth issue, but that doesn't help the price or other factors.
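A rough way to see the bandwidth problem is to divide peak memory bandwidth by peak compute. The sketch assumes the test system's DDR4-3200 runs in dual-channel mode (two 64-bit channels) and that a budget card like the GTX 1050 uses 7Gbps GDDR5 on a 128-bit bus; neither configuration detail comes from the text, and the names are hypothetical.

```python
def peak_bandwidth_gbs(transfer_rate_mts: int, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s: transfers per second x bytes per transfer."""
    return transfer_rate_mts * bus_width_bits / 8 / 1000.0


# Assumption: DDR4-3200 in dual-channel mode (2 x 64-bit), shared by CPU and GPU.
SYSTEM_RAM = peak_bandwidth_gbs(3200, 128)
# Assumption: a budget card with 7Gbps GDDR5 on a 128-bit bus, dedicated to the GPU.
BUDGET_CARD = peak_bandwidth_gbs(7000, 128)

HD_630_GFLOPS = 441.6

if __name__ == "__main__":
    print(f"system RAM: {SYSTEM_RAM:.1f} GB/s, budget card: {BUDGET_CARD:.1f} GB/s")
    for scale in (1, 8):
        # Bytes of memory bandwidth available per FLOP if the iGPU had `scale` times the EUs
        print(f"{scale}x EUs: {SYSTEM_RAM / (HD_630_GFLOPS * scale):.3f} bytes per FLOP")
```

An HD 630 scaled up eightfold would get about 0.014 bytes per FLOP, and that is before the CPU takes its share, compared with roughly 0.065 for a GTX 1050 with its own 112 GB/s.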

It might be tempting to look at the smartphone SOC market as an analog and try to figure out how that applies to PCs. Here's the thing: the best Adreno graphics solution right now, to take one specific example, sits right around the 500 GFLOPS mark for theoretical performance. PowerVR's best GPUs (six cluster Rogue) are around 730 GFLOPS. But SOCs are often sold for prices that make Intel, AMD, and Nvidia weep: $50 to $75 is usually about the highest price you would find on the fastest SOCs. In terms of raw performance, the SOCs are about an order of magnitude slower than desktop GPUs.

What I'm getting at is that I really don't expect integrated graphics on PCs to become a major force anytime soon. The market leaders for graphics have a vested interest in keeping discrete graphics cards alive, and one of the biggest advantages of PCs over other devices like consoles and smartphones is their upgradeability. Yes, it's possible to add a dedicated GPU to a system with faster integrated graphics, but then the integrated graphics go unused. Look at Intel's Broadwell desktop CPUs, which packed the company's most potent GPU at the time: even now, the i5-5675C still runs $300. More importantly, it's vastly outgunned for gaming purposes by a $130 CPU paired with a $100 graphics card.

If you can't upgrade to a discrete graphics card, for example if you're using a laptop, faster integrated GPU solutions might sound attractive. The problem is that they're underpowered and become (even more) outdated far too quickly. Graphics cards also get outdated, but upgrading a GPU doesn't normally require upgrading other parts of your system. A better option for laptop users might be streaming games from your desktop PC, or using one of the game streaming services like GeForce Now or LiquidSky. Ultimately, though, for PC gaming you're almost always going to want some form of dedicated graphics.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.